It's about time! The key principles of reinforcement learning (e.g., learning by interaction, TD learning) are fundamental to intelligence.

05.03.2025 14:54

@khurramjaved.com.bsky.social

Working on scalable and decentralized algorithms for real-time reinforcement learning. Research scientist @ Keen AGI. Previously: PhD with Richard S. Sutton.

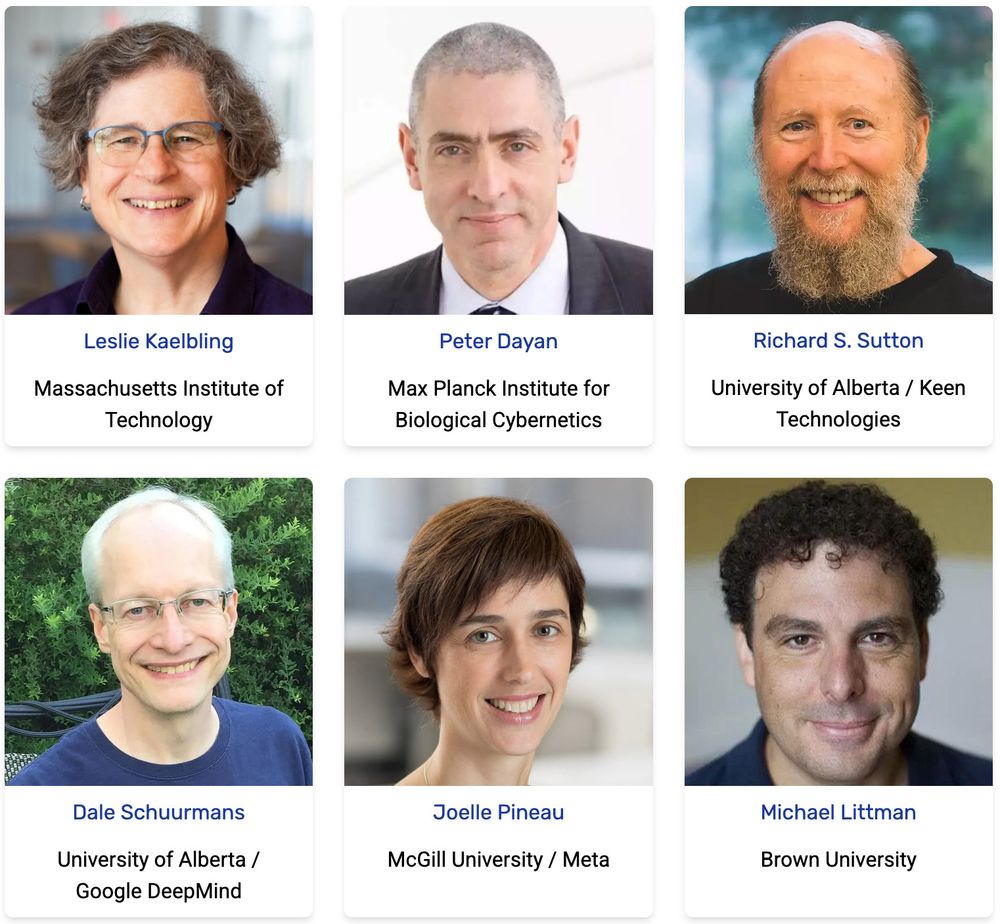

RLC Keynote speakers: Leslie Kaelbling, Peter Dayan, Rich Sutton, Dale Schuurmans, Joelle Pineau, Michael Littman

Some extra motivation for those of you in RLC deadline mode: our line-up of keynote speakers. Since all accepted papers get a talk, they may attend yours!

@rl-conference.bsky.social

Olympiad problems are designed to have elegant solutions, and new problems are often designed around old patterns. As language models get better, we should expect them to conquer IMO/IOI problems.

Simple problems that are not designed to have elegant solutions would prove harder for language models.

How to keep the AI hype cycle going:

1. Propose new knowledge-based benchmarks and show existing LLMs do poorly on them.

2. Train LLMs on the knowledge required to do well on the new benchmarks.

3. Use improvements on the benchmarks as signs of rapid progress.

Almost all robotics startups are betting on learning from large supervised learning datasets collected by teleoperation. The odds of success for this strategy are small.

Like the Sim2Real bubble, this bubble might not burst for years. At least it's keeping the roboticists employed.

I think it would help to have a more precise example. An example that not only specifies the environment dynamics and the reward, but also explains the economic value of solving it and how we know existing solutions are suboptimal.

23.12.2024 00:05

That is a fair position! New ideas could still improve the existing solutions by a lot.

What is a problem that can be simulated quickly, has no sim to real gap, and is economically valuable? Ideal example would also have an existence proof of a better solution (e.g., strong human performance).

That said, the distinction between real world and simulation is not the right one. The right abstraction is big worlds vs. small worlds [1]. We don't have algorithms that can learn in big worlds.

[1] The Big World Hypothesis and its Ramifications

openreview.net/pdf?id=Sv7Da...

The recipe that combines lots of experience and compute with existing algorithms works in the simulation regime (OpenAI Five, AlphaStar).

We can make the recipe more efficient, but there is no research bottleneck imo.

Real-world learning requires new ideas. Existing algorithms completely fail.

If one only cares about learning in simulators, then one can simplify the problem, e.g., by assuming access to a perfect model, the environment state, and the ability to jump to arbitrary states.

This simpler setting is solved from a research perspective imo which is why engineering is the bottleneck.

Blender is amazing for 3D rendering! The paid alternatives are obscenely expensive for anyone but the professionals.

05.12.2024 14:47

Despite significant growth of the AI community, some promising research directions remain untouched because we rely on a homogeneous set of tools (e.g., autograd).

Ideas that are easy to implement with existing tools win the software lottery and are more thoroughly tested.

This year's (first-ever) RL conference was a breath of fresh air! And now that it's established, the next edition is likely to be even better: Consider sending your best and most original RL work there, and then join us in Edmonton next summer!

02.12.2024 19:37

RLC will be held at the Univ. of Alberta, Edmonton, in 2025. I'm happy to say that we now have the conference's website out: rl-conference.cc/index.html

Looking forward to seeing you all there!

@rl-conference.bsky.social

#reinforcementlearning