Great being at #NeurIPS last week!

Thankful for the good times in the sun and the people I met.

If you’ve ever wondered whether model performance can be inferred directly from your training alone, check out our work!

1/2

@tum-aim-lab.bsky.social

Chair of AI in Healthcare and Medicine, led by @danielrueckert.bsky.social, at TU Munich. 🌐 www.kiinformatik.mri.tum.de/en/chair-artificial-intelligence-healthcare-and-medicine

Saturday 11:15am: “Are foundation models useful feature extractors for electroencephalography analysis?” - Özgün Turgut, Felix Bott, Markus Ploner, Daniel Rückert

neurips.cc/virtual/2025...

3/3

Friday 4:30pm: “Gradient-Weight Alignment as a Train-Time Proxy for Generalization in Classification Tasks” - Florian A. Hölzl, Daniel Rückert, Georgios Kaissis

neurips.cc/virtual/2025...

2/3

It’s this time of the year again! #NeurIPS2025

If you are in San Diego, make sure to check out 2 works from our lab this week 📝📝 #AIMresearch

1/3

Their paper, "Evaluation and mitigation of the limitations of large language models in clinical decision-making," is an important contribution to the integration of AI in healthcare.

Read it here: www.nature.com/articles/s41...

Huge congratulations to Paul Hager and Dr. med. Friederike Jungmann for winning the MDSI Best Paper Award in the Societal Impact category! 🏆🎊

See more details in the thread below!

Photo copyright: Andreas Heddergott/TUM

#AIMResearch #AIMNews #MDSI #BestPaperAward

We celebrated the 5th anniversary of our research chair at @tum.de! 💙🥂

It's been an incredible journey of research and collaboration. Thank you to everyone who has made this possible. We are very much looking forward to the next years to come!

#AIMAnniversary #AIMNews

Quick look back at an insightful day yesterday at the Bavarian Conference on AI in Medicine, where @paulhager.bsky.social , @luciehuang.bsky.social, Alina Dima, and Vasiliki Sideri-Lampretsa were representing us.

Team, thank you for being such good ambassadors! 👏

#AIMNews #AIinMedicine

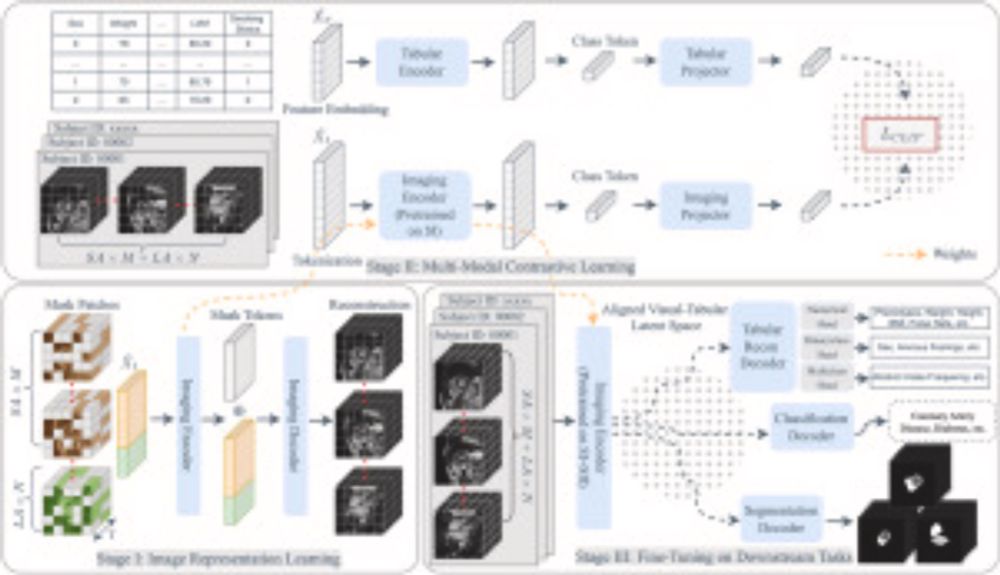

However, that’s not all! Yundi is currently extending the framework by integrating K-space signal data and genomic information to further enhance its multimodal capability.

29.10.2025 12:19 — 👍 0 🔁 0 💬 0 📌 0

By doing this, ViTa enables a broad spectrum of downstream applications, including cardiac phenotype and physiological feature prediction, segmentation, and classification of cardiac/metabolic diseases within a single unified framework.

29.10.2025 12:19 — 👍 1 🔁 0 💬 1 📌 0

ViTa is a multi-modal, multi-task, and multi-view foundation model that delivers a comprehensive representation of the heart and a precise interpretation of individual disease risk. It integrates anatomical information from 3D+time cine MRI stacks with detailed patient-level tabular data.

29.10.2025 12:19 — 👍 0 🔁 0 💬 1 📌 0

We are wrapping up our 5th-year anniversary paper series with ViTa by Yundi Zhang et al. (www.sciencedirect.com/science/arti...), a work that addresses the question: How can we realize personalized cardiac healthcare that moves beyond a single task?

#AIMResearch #AIMAnniversary #MultiModalLearning #CardiacMRI

But the work hasn't stopped there! Progress on the benchmark is tracked using an online leaderboard: huggingface.co/MIMIC-CDM, and we are currently developing the next generation of medical benchmarks in co-operation with Google for Health.

27.10.2025 08:18 — 👍 0 🔁 0 💬 0 📌 0

Models were found to perform significantly worse than doctors, to not follow guidelines, and to be extremely sensitive to simple changes in input. This means more work has to be done before we can safely deploy them for high-stakes clinical decision-making.

Paper: www.nature.com/articles/s41...

While LLMs aced standard medical licensing exams, the authors argued for evaluation in real-world clinical settings. So they developed a new dataset and benchmark that features and simulates real-world emergency room cases, and tests robustness and adherence to clinical guidelines.

27.10.2025 08:18 — 👍 0 🔁 0 💬 1 📌 0

Is it safe to use LLMs in the clinic today? This is the central question that @paulhager.bsky.social and Friederike Jungmann tackled in their 2024 study published in #NatureMedicine, which is our 5th-year anniversary's highlight paper this week.

#AIMAnniversary #AIMResearch #LLM #Benchmark

Paul presented dynamic, temporally resolved lung imaging using INR-based registration. Steven focused on multi-contrast fetal brain MRI reconstruction for improved motion correction and image quality.

Thank you both for sharing your work. You are always welcome!

Last week we had the pleasure of hosting Paul Kaftan (Institute of Medical System Biology, @uniulm.bsky.social) and Steven Jia (Institut de Neurosciences de la Timone, @univ-amu.fr) for talks on the incredible potential of Implicit Neural Representations (INRs) in medical imaging.

#AIMnews #INRs

We wish you success in all your future endeavors!

17.10.2025 11:38 — 👍 2 🔁 0 💬 0 📌 0

Huge and well-deserved congratulations to Dmitrii Usynin on successfully completing his doctoral journey in our lab! 🎊

Dima’s research has resulted in significant contributions to the field of trustworthy artificial intelligence, in particular for collaborative biomedical image analysis.

Since then, NIK's underlying concept has maintained significant traction, demonstrated by extensions like PISCO and the adaptation of the implicit k-space paradigm to other areas in the field like motion-resolved abdominal MRI (ICoNIK).

09.10.2025 11:35 — 👍 0 🔁 0 💬 0 📌 0

The result? Flexible temporal resolution and efficient single-heartbeat reconstructions, marking a significant step toward real-time cardiac MRI without large training datasets.

Paper: link.springer.com/chapter/10.1...

🎯 @wqhuang.bsky.social, et al.'s answer was Neural Implicit k-Space (NIK) – a binning-free framework for non-Cartesian cardiac MRI reconstruction. By learning a continuous neural implicit representation directly in k-space, NIK eliminated the need for complex non-uniform FFTs and data binning.

09.10.2025 11:35 — 👍 0 🔁 0 💬 1 📌 0

Throwback to 2022! 🕰️ Continuing with our 5th-year anniversary series, we're now revisiting a question we faced at that time: How fast can we reliably see the beating heart in MRI without sacrificing image quality?

#AIMAnniversary #AIMResearch #NeuralImplicitRepresentation #CardiacMRI

We are thrilled to announce that Dr. Sevgi Gokce Kafali has been awarded the prestigious Alexander von Humboldt Postdoctoral Fellowship! 🎉 During her fellowship, she will focus on the "Assessment of Cardiac Health through Opportunistic Screening using MRI."

Our warmest congratulations!

#AIMNews

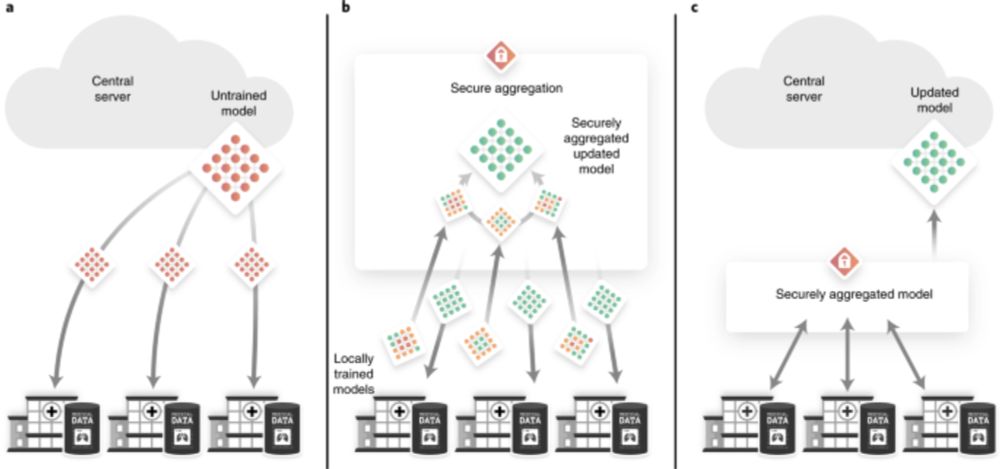

Building private and trustworthy AI in medicine is an ongoing journey, and we're incredibly proud of the foundational steps we've taken.

02.10.2025 11:49 — 👍 1 🔁 0 💬 0 📌 0

🧑💻 @g-k.ai went on to co-develop VaultGemma at Google DeepMind – a leading differentially private large language model: services.google.com/fh/files/blo..., research.google/blog/vaultge...

02.10.2025 11:49 — 👍 1 🔁 0 💬 1 📌 0

The principles behind PriMIA resonated deeply, sparking significant follow-up research:

🧑💻 @zilleralex.bsky.social further explored the nuanced trade-offs between privacy guarantees and model accuracy: www.nature.com/articles/s42....

🎯 @zilleralex.bsky.social, @g-k.ai, et al. addressed this by introducing PriMIA – a pioneering open-source framework for collaborative AI training. PriMIA combines federated learning, differential privacy, and secure multi-party computation.

Paper: www.nature.com/articles/s42...

First up, let's rewind to 2021 with research that tackled a critical challenge:

How do we train powerful AI models on sensitive medical data from multiple hospitals while maintaining patient privacy and data locality?