Thanks!!

14.11.2025 21:28 — 👍 1 🔁 0 💬 0 📌 0

Preprint: osf.io/eq2ra

This project was a huge undertaking with some fantastic co-authors: Kuan-Jung Huang, @byungdoh.bsky.social, Grusha Prasad, @sarehalli.bsky.social, @tallinzen.bsky.social and @linguistbrian.bsky.social

(13/13)

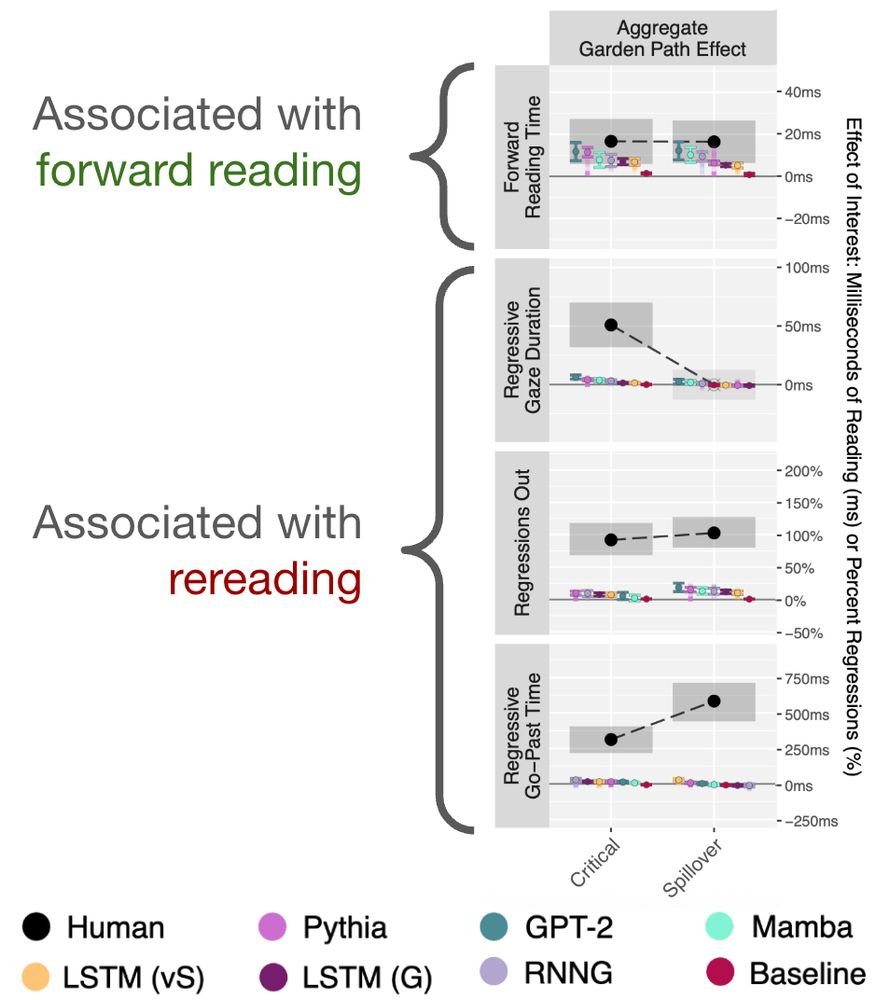

Summary: Prediction and structural processing have dissociable influences on reading behavior. LLMs are a good model of when our eyes move forward, but can’t explain when, where, or why humans reread. To model rereading, we need models that go beyond next-word prediction. (12/n)

14.11.2025 19:18 — 👍 0 🔁 0 💬 1 📌 0

What's going on in the cases predictability can't explain? When humans reread in syntactically challenging sentences, they focus on the parts of the sentence that are most helpful for revising the structure (in this case, the verb), consistent with structural processing accounts. (11/n)

14.11.2025 19:18 — 👍 0 🔁 0 💬 1 📌 0

But, LLMs drastically underpredicted the magnitude of difficulty whenever syntactically challenging parts of a sentence caused comprehenders to reread. More specifically, LLMs can’t predict the rate at which comprehenders reread, or the amount of time they spend rereading. (10/n)

14.11.2025 19:18 — 👍 0 🔁 0 💬 1 📌 0

In contrast to prior results, the LLMs were able to explain the cost of processing syntactically challenging sentences, but only when the challenging parts of a sentence didn’t trigger re-reading (forward reading). (9/n)

14.11.2025 19:18 — 👍 0 🔁 0 💬 1 📌 0

We recorded the eye movements of 368 participants as they read syntactically challenging sentences. From these eye movements we derive multiple measures of processing difficulty, and evaluate which measures the prediction account can and cannot explain using >400 different LLMs. (8/n)
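The thread does not name the measures that were derived, so the following is only a sketch under assumptions: it computes three measures that are standard in eye-tracking research (first-pass time, go-past time, and whether the reader regressed out of a region) from a hypothetical fixation record. The function name `reading_measures`, the `(region, duration_ms)` input format, and the example numbers are all made up for illustration and are not taken from the preprint.

```python
from typing import List, Optional, Tuple, Dict

def reading_measures(
    fixations: List[Tuple[int, int]], region: int
) -> Optional[Dict[str, object]]:
    """Compute simple reading measures for one region of a sentence.

    fixations: (region_index, duration_ms) pairs in temporal order
               (hypothetical format, assumed for this sketch).
    Returns None if the region was skipped or never fixated in first pass.
    """
    first_pass = 0        # time on the region before first leaving it
    go_past = 0           # time from first entry until moving past it rightward
    regressed_out = False
    entered = False
    in_first_pass = False

    for reg, dur in fixations:
        if not entered:
            if reg > region:
                return None          # region skipped during first pass
            if reg < region:
                continue             # reader has not reached the region yet
            entered = True
            in_first_pass = True
        if reg > region:
            break                    # reader moved past the region
        go_past += dur
        if in_first_pass:
            if reg == region:
                first_pass += dur
            else:
                in_first_pass = False
                regressed_out = True  # first exit went leftward: a reread

    if not entered:
        return None
    return {
        "first_pass_ms": first_pass,
        "go_past_ms": go_past,
        "regressed_out": regressed_out,
    }

# Made-up example: the reader fixates region 2, goes back to region 1,
# returns to region 2, and only then moves on to region 3.
example = [(0, 200), (1, 250), (2, 300), (1, 180), (2, 220), (3, 210)]
measures = reading_measures(example, region=2)
```

In this toy record, forward reading and rereading come apart: first-pass time counts only the initial visit, while go-past time also includes the time spent rereading earlier material.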

14.11.2025 19:18 — 👍 0 🔁 0 💬 1 📌 0

This is tricky to test because we need: 1) fine-grained behavioral measures sensitive to different types of difficulty 2) a large sample of human readers 3) a diverse set of syntactically challenging stimuli 4) a diverse set of LLMs for predictability estimation. (7/n)

14.11.2025 19:18 — 👍 0 🔁 0 💬 1 📌 0

No single theory can account for all of the data. Perhaps *both* prediction and structural processing drive reading behavior. Can we find any evidence that they dissociate, and if so, what are their behavioral signatures? (6/n)

14.11.2025 19:18 — 👍 0 🔁 0 💬 1 📌 0

One example is garden path sentences, where LLMs have been shown to only predict a small fraction of the full processing cost. (5/n)

14.11.2025 19:18 — 👍 0 🔁 0 💬 1 📌 0

The prediction view has been shown to explain reading behavior in structurally simple sentences, but drastically underpredicts the difficulty readers experience in syntactically complex/challenging sentences. The opposite is true of the structural processing view. (4/n)

14.11.2025 19:18 — 👍 0 🔁 0 💬 1 📌 0

(2) The prediction view: the cost of processing each word in a sentence can be fully reduced to the word’s contextual predictability (i.e. surprisal). Predicting the next word is exactly what LLMs are trained to do, so they’re a great tool for evaluating this view. (3/n)
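For readers unfamiliar with the term, surprisal is conventionally defined as the negative log probability of a word given its context. A minimal sketch, assuming the per-word probabilities have already been obtained from some language model (the numbers below are invented, and the function names are hypothetical rather than anything from the preprint):

```python
import math
from typing import List

def surprisal_bits(probability: float) -> float:
    """Surprisal in bits: -log2 P(word | context)."""
    if not 0.0 < probability <= 1.0:
        raise ValueError("probability must be in (0, 1]")
    return -math.log2(probability)

def sentence_surprisals(word_probabilities: List[float]) -> List[float]:
    """Map each word's contextual probability to its surprisal."""
    return [surprisal_bits(p) for p in word_probabilities]

# Invented probabilities for a four-word sentence; a real analysis would
# take these from an LLM's next-word distribution at each position.
toy_probabilities = [0.5, 0.25, 0.125, 0.25]
toy_surprisals = sentence_surprisals(toy_probabilities)
```

The less predictable a word is in context, the higher its surprisal, and under the prediction view, the longer it should take to read.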

14.11.2025 19:18 — 👍 0 🔁 1 💬 1 📌 0

We conducted a high-powered (n=368) eye-tracking-while-reading study to test two competing views:

(1) The structural processing view: eye movements reflect the cost of mentally assembling the words of a sentence into a larger meaning. (2/n)

New Preprint: osf.io/eq2ra

Reading feels effortless, but it's actually quite complex under the hood. Most words are easy to process, but some words make us reread or linger. It turns out that LLMs can tell us about why, but only in certain cases... (1/n)