Super cool eval and event!

04.08.2025 18:54

Seth Karten

@sethkarten.ai.bsky.social

Autonomous Agents | PhD @ Princeton | World Gen @ Waymo | Prev: CMU, Amazon | NSF GRFP Fellow

Lidar is great for cars, but we don't have a high-utility sensor equivalent for grasping. Yes, that is ultimately measuring friction, but I think that misses the point. We need Dense Verification to signal closed-loop autonomy.

31.07.2025 17:13

I'd say grasping is mainly a sensor issue.

29.07.2025 06:27

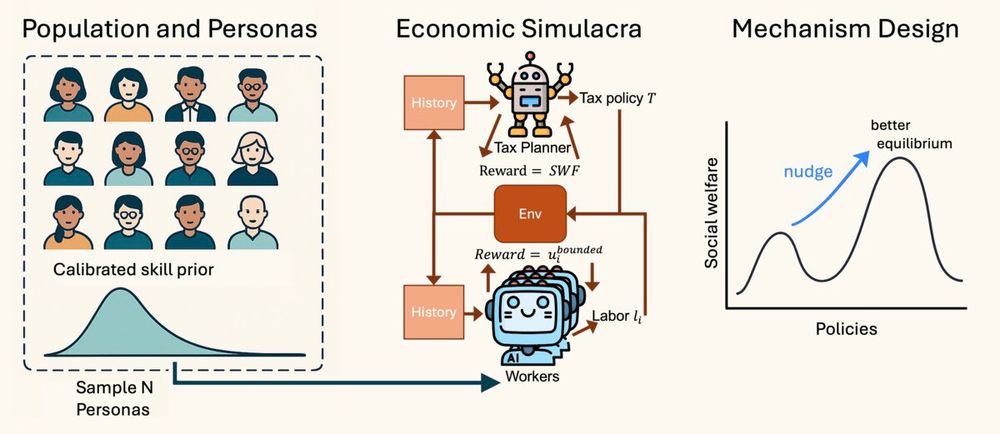

Diagram of LLM Economist: left—grid of persona‑conditioned worker agents; center—planner LLM sends tax schedule; right—social‑welfare ‘hill‑climb’.

🚀 New preprint!

🤔 Can one agent “nudge” a synthetic civilization of Census‑grounded agents toward higher social welfare—all by optimizing utilities in‑context? Meet the LLM Economist ↓

Special thanks to my collaborators Wenzhe Li, Zihan Ding, Samuel Kleiner, Yu Bai, and Chi Jin, and to the Cooperative AI Foundation for great feedback at the 2024 summer school.

23.07.2025 17:29

A sandbox for mechanism design: iterate on incentive schemes inside large-scale simulacra before touching the real world.

Thoughts? RT if you think generative agents can design policy. 🔄❤️

Timeline plot: planner changes each tax year; welfare remains elevated across turnovers.

Democratic alignment: in a special case, periodic citizen voting can fire the planner. Leader turnover keeps welfare high and prevents policy drift—central nudging plus decentralized oversight in one sandbox.

23.07.2025 17:29

Bar chart comparing social welfare for U.S. baseline, LLM planner, Saez schedule; LLM nearly matches Saez.

Centralized nudging: the planner’s marginal tax rates beat U.S. statutory rates and approach Saez on aggregate welfare (almost double the baseline).

23.07.2025 17:29

Synthetic behavioral policies → we sample workers from 2023 American Community Survey (ACS) skills & demographics, then let each agent verify its own bounded-rational utility from individualized preferences, enabling counterfactual reasoning.

23.07.2025 17:29

We turn economic policy into in‑context RL:

• 1,000+ scalable persona agents with bounded-rational utilities

• Planner agent designs marginal‑tax nudges

• Arbitrary economic simulacra in scenarios such as voting

📄 arxiv.org/abs/2507.15815

💻 github.com/sethkarten/L...
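The loop described in this thread (a planner proposes marginal tax rates, workers respond, welfare is measured, and the planner updates) can be sketched as a small numeric toy. This is a minimal sketch under stated assumptions, not the LLM Economist code: the bracket thresholds, wages, log-consumption utility, lump-sum rebate, and the random hill-climb that stands in for the LLM planner are all hypothetical.

```python
import math
import random

# Hypothetical bracket thresholds (annual pre-tax income, USD); not from the paper.
THRESHOLDS = [0.0, 20_000.0, 80_000.0, 200_000.0]
WEEKS_PER_YEAR = 50
HOURS_GRID = range(0, 81, 5)  # candidate weekly hours a worker may choose
DISUTILITY_SCALE = 1_600.0    # hypothetical labor-disutility parameter


def tax_owed(income, rates):
    """Piecewise-linear tax: rates[i] applies only to income inside bracket i."""
    owed = 0.0
    for i, rate in enumerate(rates):
        lo = THRESHOLDS[i]
        hi = THRESHOLDS[i + 1] if i + 1 < len(THRESHOLDS) else math.inf
        if income > lo:
            owed += rate * (min(income, hi) - lo)
    return owed


def utility(consumption, hours):
    # Stand-in utility: concave in consumption, convex disutility of labor.
    return math.log1p(consumption) - hours ** 2 / (2 * DISUTILITY_SCALE)


def best_hours(wage, rates):
    """Worker best-responds to the schedule, ignoring the rebate (simplification)."""
    def value(h):
        z = wage * h * WEEKS_PER_YEAR
        return utility(z - tax_owed(z, rates), h)
    return max(HOURS_GRID, key=value)


def social_welfare(wages, rates):
    """Utilitarian welfare after workers respond and revenue is rebated lump-sum."""
    hours = [best_hours(w, rates) for w in wages]
    incomes = [w * h * WEEKS_PER_YEAR for w, h in zip(wages, hours)]
    rebate = sum(tax_owed(z, rates) for z in incomes) / len(wages)
    return sum(utility(z - tax_owed(z, rates) + rebate, h)
               for z, h in zip(incomes, hours))


def plan(wages, rates, steps=200, step_size=0.05, seed=0):
    """Random hill-climb over rates: a numeric stand-in for an in-context LLM planner."""
    rng = random.Random(seed)
    best = list(rates)
    best_w = social_welfare(wages, best)
    for _ in range(steps):
        cand = list(best)
        i = rng.randrange(len(cand))
        cand[i] = min(0.9, max(0.0, cand[i] + rng.choice([-step_size, step_size])))
        w = social_welfare(wages, cand)
        if w > best_w:  # keep a proposal only if welfare improves
            best, best_w = cand, w
    return best, best_w


wages = [12.0, 20.0, 35.0, 60.0, 120.0]  # hypothetical hourly wages
baseline = [0.10, 0.22, 0.32, 0.37]      # hypothetical starting marginal rates
print(social_welfare(wages, baseline))
print(plan(wages, baseline))
```

Because the hill-climb only accepts improvements, welfare never falls below the starting schedule; an LLM planner would instead read the history of schedules and welfare in its prompt and propose the next schedule in context.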


😅 Probably still confusing, I guess. We are working on a getting-started tutorial video/livestream.

22.07.2025 19:36

Are you on the Discord? That is the best place to ask questions.

For visual learners, I recommend playing the games yourself to gain familiarity with why it is hard.

Hopefully the compression didn't ruin the text :(

18.07.2025 20:20

OpenReview doesn't seem to be public yet, but here are the titles.

18.07.2025 20:05

Full rules, practice ladder, and timeline 👇

pokeagent.github.io

Updated Discord link due to massive enrollment! discord.gg/E2DuX5FWF7

Huge shout-out to my collaborators: Jake Grigsby, @stephmilani.bsky.social, Kiran Vodrahalli, Amy Zhang, Fei Fang, Yuke Zhu, and Chi Jin

14.07.2025 16:33

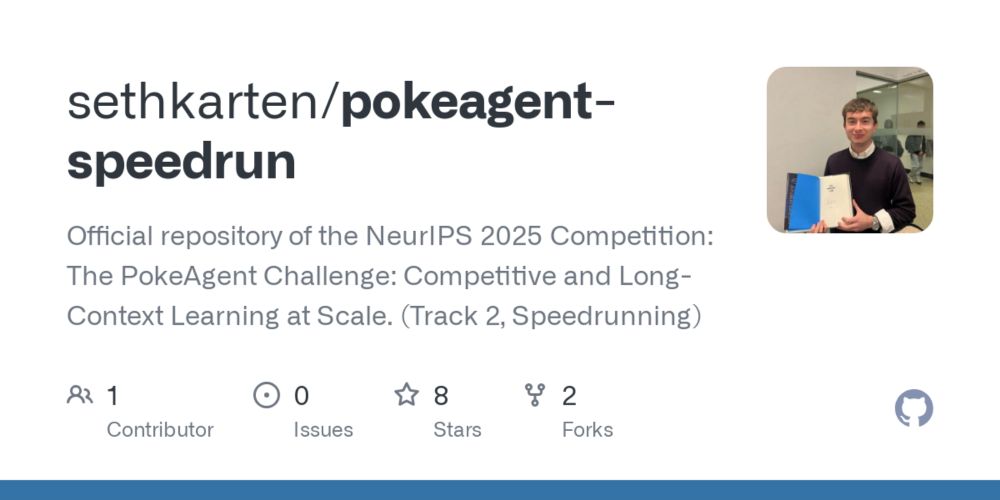

⏱️ Speedrun baseline (Track 2) — pokeagent-speedrun

mGBA wrapper + tool-use prompts for Emerald any% agents.

GitHub → github.com/sethkarten/p...

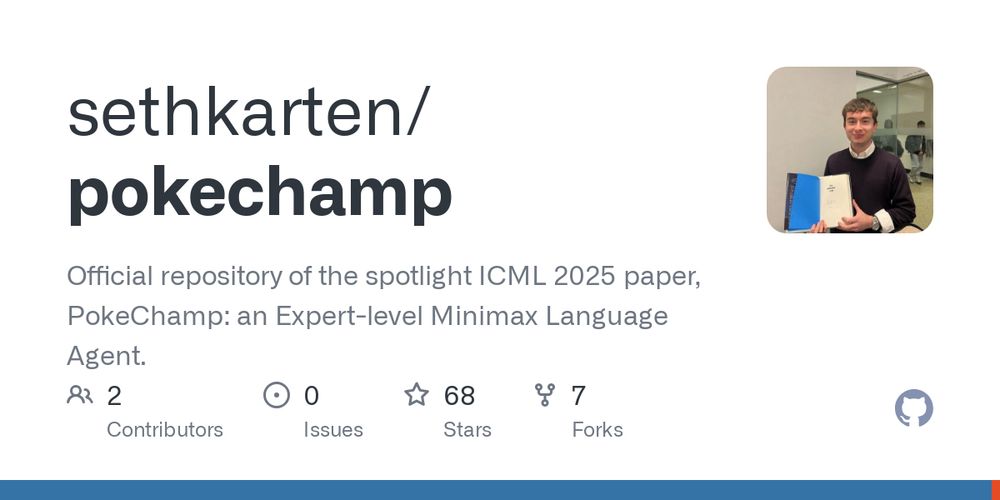

🧩 LLM baseline (Track 1) — PokeChamp

Prompt-only GPT + minimax search hits a 76% win rate vs. scripted bots.

GitHub → github.com/sethkarten/p...
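For readers unfamiliar with minimax search, here is a minimal depth-limited version. It is a generic sketch, not PokéChamp's implementation: the callback names are hypothetical, turns alternate here rather than being simultaneous as in Showdown, and a toy subtraction game (take 1 to 3 stones; whoever takes the last stone wins) replaces battle states so the example runs on its own. Where the search hits its depth limit, an LLM-based agent could be prompted to score the position; this toy simply returns 0.

```python
import math


def minimax(state, depth, maximizing, actions, step, evaluate, is_terminal):
    """Depth-limited minimax; returns (value, best_action) from the maximizer's view."""
    if depth == 0 or is_terminal(state):
        return evaluate(state, maximizing), None
    best_action = None
    best_value = -math.inf if maximizing else math.inf
    for action in actions(state):
        value, _ = minimax(step(state, action), depth - 1, not maximizing,
                           actions, step, evaluate, is_terminal)
        # Maximizer keeps the highest value, minimizer the lowest.
        if (maximizing and value > best_value) or (not maximizing and value < best_value):
            best_value, best_action = value, action
    return best_value, best_action


# Hypothetical toy game standing in for battle states: a pile of stones.
def toy_actions(pile):
    return [n for n in (1, 2, 3) if n <= pile]


def toy_step(pile, n):
    return pile - n


def toy_is_terminal(pile):
    return pile == 0


def toy_evaluate(pile, maximizing):
    if pile == 0:
        # The player to move faces an empty pile: the previous mover won.
        return -1.0 if maximizing else 1.0
    return 0.0  # depth cutoff: no heuristic here (an LLM could score this leaf)


print(minimax(5, 6, True, toy_actions, toy_step, toy_evaluate, toy_is_terminal))  # (1.0, 1)
```

From a pile of 5, taking 1 stone leaves the opponent on a multiple of 4, which is a forced loss for them; the search recovers that move.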

🤖 RL baseline (Track 1) — MetaMon

Population-based training with action masking for competitive play.

GitHub → github.com/UT-Austin-RP...

Banner reading “PokéAgent Challenge @ NeurIPS 2025” with two panels: Track 1 – Competitive Pokémon Battle Bots, Track 2 – Long-Horizon RPG Gameplay. Call-to-action: “Create video-game AI! Win prizes! Live now at pokeagent.github.io.”

🚀 Launch day! The NeurIPS 2025 PokéAgent Challenge is live. @neuripsconf.bsky.social

Two tracks:

① Showdown Battling – imperfect-info, turn-based strategy

② Pokémon Emerald Speedrunning – long-horizon RPG planning

5M labeled replays • starter kit • baselines.

Bring your LLM, RL, or hybrid agent!

Full paper on OpenReview → openreview.net/pdf?id=SnZ7SKykHh

Code & starter kit → github.com/sethkarten/pokechamp

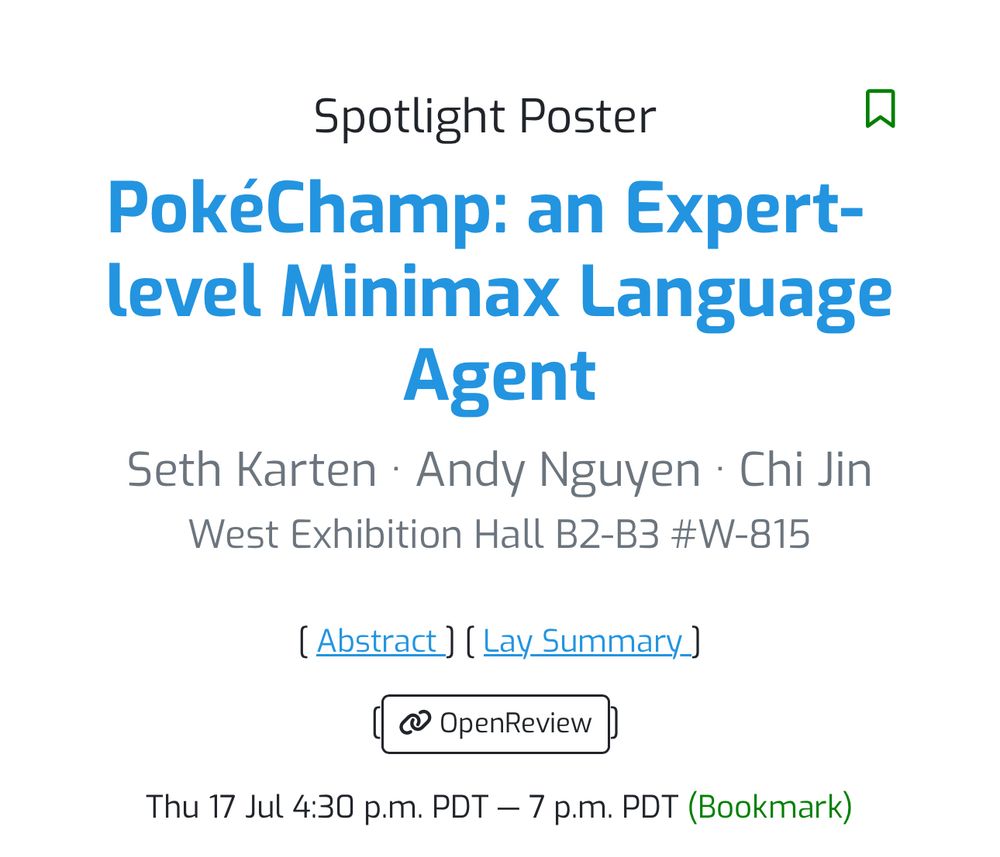

🚀 5 days until my ICML spotlight poster!

Key insights we’ll unpack:

• Base LLM + test-time planning

• Game-theoretic scaffolding

• Context-engineered opponent prediction

• Comparative LLM-as-judge (relative > absolute)

Catch me Thu Jul 17, 4:30-7 PM PT👇

Heading to #ICML2025 next week! If you’re into all things API (Artificial Pokémon Intelligence) from our PokéChamp spotlight to the upcoming NeurIPS PokeAgent Challenge, LLM-agent scaffolding & reasoning, or mechanism-design nudging, let’s connect. DMs open!

09.07.2025 17:06

Congrats, Simon!

09.07.2025 15:40

Also the Pokémon Agent Challenge by @sethkarten.ai @stephmilani.bsky.social and others!

pokeagent.github.io

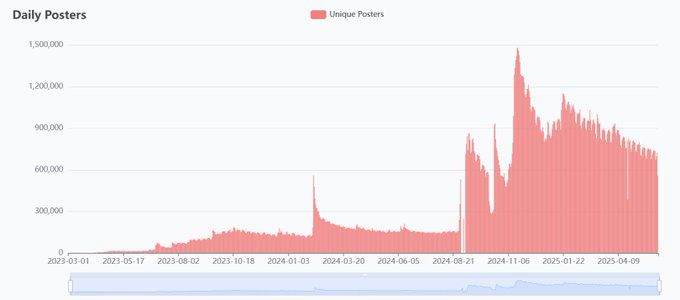

I do still think cross-posting is worth it, because the audience on this site isn't necessarily on the other one.

04.06.2025 18:19

New social networks are usually picked up first by young people, and academics are an older crowd, so adoption mainly just follows pre-existing social graphs. I originally joined academic Twitter for the discoverability, which seems largely lost here.

04.06.2025 18:11

Social media takeoff is hard. Bluesky still lacks the capabilities to compete with Twitter.

04.06.2025 17:43

Thank you!!

30.05.2025 17:23

Excited to announce that I will be spending the summer at @Waymo on the simulation realism team! I’ll be working on learning to generate simulated worlds.

🚙🚙🚙

Send me a message if you're in the Bay Area and want to chat!