Bootstrapped a Private GenAI Startup to $1M revenue, AMA

blog.helix.ml/p/bootstrapp...

Thanks Hannah! 💖

03.04.2025 07:26 — 👍 4 🔁 0 💬 0 📌 0

Good morning #KubeCon! Come find me if you want to talk running LLM systems like vision RAG in production on Kubernetes today. Email luke@helix.ml or reply here!

03.04.2025 07:25 — 👍 6 🔁 0 💬 0 📌 0

Phil setting up a vLLM vision model provider on helix on his nerd phone on the Elizabeth line #kubecon

02.04.2025 13:53 — 👍 5 🔁 0 💬 0 📌 0

I mean, yes please 😄

02.04.2025 08:31 — 👍 1 🔁 0 💬 0 📌 0

Catch my talk with Priya Samuel on running LLMs in production on k8s today at #KubeCon at S10A at 3:15pm ✨

02.04.2025 07:56 — 👍 8 🔁 2 💬 0 📌 0

Trying (but failing) to be as colourful as @hannahfoxwell.net today!

02.04.2025 07:54 — 👍 1 🔁 0 💬 1 📌 1

Oh hello there Bluesky! We've arrived here just in time to share all the highlights of #KubeCon #CloudNativeCon in sunny London!

Don't miss @hannahfoxwell.net and @lmarsden.bsky.social at 15:20 today talking about Platform Engineering and Developer Experience For Your On Prem LLM

I looked to define a term that’s being thrown around — #AI-native developer — with the help of @guypo.com @lmarsden.bsky.social and Thoughtworks’ Mike Mason so you can prepare your software teams for the near future.

thenewstack.io/what-is-an-a...

What’s your definition? Only on @thenewstack.io

Based on my experience of riding the crazy horse that is cursor agent mode, spec-driven development is much needed!

20.02.2025 18:43 — 👍 2 🔁 0 💬 0 📌 0

DeepSeek are clever fuckers. I wrote this about how DeepSeek is pushing decision makers in large financial institutions to seriously consider running their own models instead of calling out to Microsoft, Amazon & Google.

Link below 👇

Speaker @lmarsden.bsky.social of MLOps Consulting will be in our SOOCon25 AI Openness track! 🎤 Join to hear about Production-Ready LLMs on Kubernetes: Patterns, Pitfalls, and Performance. 💡 https://buff.ly/3HjeQxq

#opensource #opensourceai #ai #stateofopencon #soocon25 #opensourcelondon #openuk

The cover of Ted Chiang's Exhalation. It shows a pair of lungs made up by plants and gears/cogs.

I enjoyed Stories of Your Life and Others so much that I'm moving straight to Chiang's other collection: Exhalation.

12.01.2025 07:59 — 👍 10 🔁 2 💬 2 📌 0

MS Teams in the UK this morning is the most grim experience imaginable, tons of jitter and bandwidth seems fucked - like trying to do a video call over a highly contended LTE connection - my network connection is fine (per mtr) - is there an outage in some MS data center somewhere?

08.01.2025 11:34 — 👍 2 🔁 0 💬 0 📌 0

We shipped a lot over Christmas and I came here to release it:

github.com/helixml/heli...

- You can now drag'n'drop files directly into knowledge for Helix apps (rather than having to go via the filestore).

- Initial support for MCP (more on this coming soon!)

Cancelled my X subscription over all the stupid political interference

03.01.2025 20:50 — 👍 6 🔁 0 💬 1 📌 0

Thank you to @dciangot.com for doing the heavy lifting of getting HelixML GPU runners working on Slurm HPC infra, so the hundreds of thousands of GPUs running on Slurm can be turned into multi-tenant GenAI systems!

Read all about it here: blog.helix.ml/p/running-ge...

This is so awesome! Imagine being able to combine the world of cloud-native scalable web services for LLMOps with the raw power of Slurm-powered supercomputers, which have some of the biggest compute, networking and GPUs around! Check out the writeup here: blog.helix.ml/p/running-ge...

20.12.2024 10:58 — 👍 4 🔁 0 💬 0 📌 0

So @cybernetist.com got gptscript working with llama3.3:70b! Check out the detailed writeup here: blog.helix.ml/p/gptscript-...

19.12.2024 17:25 — 👍 5 🔁 3 💬 0 📌 0

Got helix.ml running GenAI on a supercomputer..

13.12.2024 19:04 — 👍 3 🔁 0 💬 0 📌 0

You're a dope llama hacker (ha ha try finding a gif for that)

11.12.2024 19:34 — 👍 0 🔁 0 💬 0 📌 0

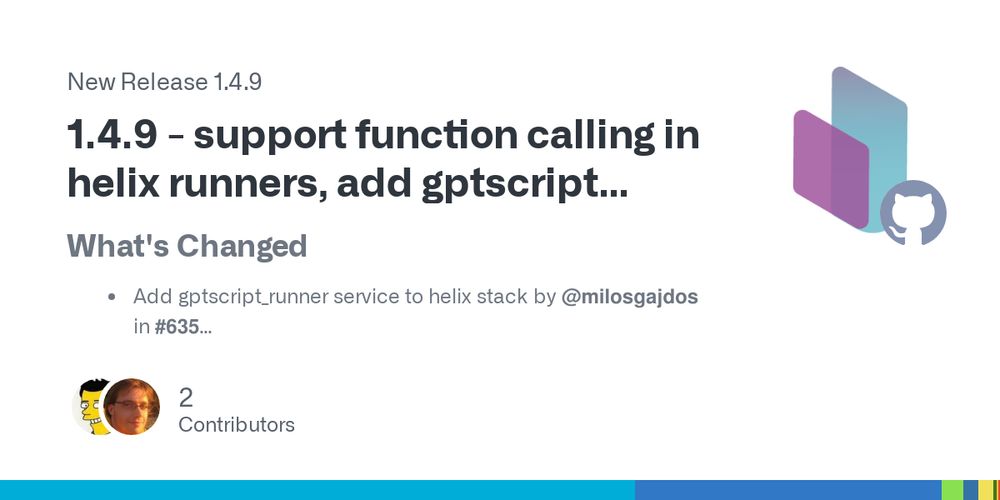

Fucking dope helix.ml release from @cybernetist.com if you like function calling in open source LLMs and @GPTScript_ai github.com/helixml/heli...

(Excuse my French)

Here's the post with the youtube video walkthrough of the workshop in it: blog.helix.ml/p/building-r...

04.12.2024 14:43 — 👍 1 🔁 0 💬 0 📌 0

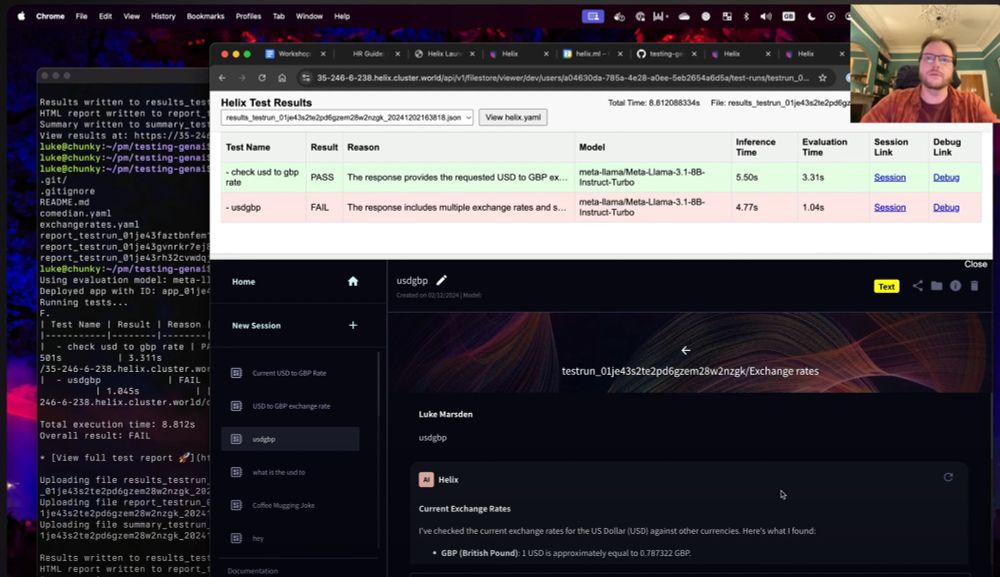

Test Driven Development (TDD) for your LLMs? Yes please, more of that please!

Back to basics - write a test, see the test fail, improve the prompt, see it pass, check it in - just like you would with any other code 😄
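A minimal sketch of that loop, not the Helix testing setup itself. `fake_llm`, `returns_json` and both prompts are hypothetical stand-ins so the example runs offline; in a real test you would call your actual model.

```python
import json


def fake_llm(system_prompt: str, user_message: str) -> str:
    """Hypothetical stand-in for a real model call (assumption, not a real API)."""
    # Pretend the model only replies in JSON when the prompt asks for it.
    if "json" in system_prompt.lower():
        return '{"answer": "42"}'
    return "The answer is 42."


def returns_json(system_prompt: str) -> bool:
    """The test: does the model's reply parse as JSON under this prompt?"""
    reply = fake_llm(system_prompt, "What is the answer?")
    try:
        json.loads(reply)
        return True
    except json.JSONDecodeError:
        return False


# Step 1: write the test and see it fail with the first prompt.
PROMPT_V1 = "You are a helpful assistant."
# Step 2: improve the prompt and see the same test pass.
PROMPT_V2 = "You are a helpful assistant. Reply only with JSON."

print("v1 passes:", returns_json(PROMPT_V1))
print("v2 passes:", returns_json(PROMPT_V2))
```

Once the improved prompt passes, it gets checked in next to the test, like any other code change.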

Luke, this post, including the video, was very helpful. I loved the conference talk. I took my graduate students through a somewhat similar exercise last week for their last lab of the semester. Well done. Thank you.

28.11.2024 10:58 — 👍 2 🔁 1 💬 0 📌 0

This is a wonderful post by @lmarsden.bsky.social. Great presentation and video as well.

28.11.2024 11:00 — 👍 2 🔁 1 💬 0 📌 0

ICYMI, here's my 2-part blog series on how we can all be AI engineers with open source models – and how to apply testing best practices to LLM apps

1. blog.helix.ml/p/we-can-all...

2. blog.helix.ml/p/from-click...

The reference architecture above uses option (a) for development and the whole stack runs on kind on your laptop

And you're right, without GPUs you lose fine-tuning and also image models. But you can still do knowledge, integrate the LLM with APIs via OpenAPI specs, and write tests

Yes, if you don't want to run your own GPUs, you can a) use an external LLM provider like togetherai (but then you lose the benefits of total data privacy within the cluster) or b) use ollama with CPU inference, but it's fairly slow unless you have lots of cores and use a smaller model

24.11.2024 08:17 — 👍 1 🔁 0 💬 1 📌 0