In 1-2 weeks we land live query profiling in Polars Cloud.

See exactly how many rows are consumed and produced per operation and which operation takes the most runtime, and watch the data flow through live, like water. 😍

@ritchie46.bsky.social

Author and Founder of Polars

ClickBench now runs the Polars streaming engine.

Polars is the fastest solution on that benchmark on Parquet file(s) 😎

The speed is there. This year, we will tackle out-of-core (spill to disk) and distributed execution to truly handle scale.

benchmark.clickhouse.com#system=-ahi|...

I am sorry about that. We do welcome contributions; however, design-wise we have to be strict. I would always recommend picking accepted issues and asking whether now is the time to implement them before putting in the effort.

10.01.2026 18:05

The pre-release of Polars 1.36 is out. Please give it a try so that we can ensure a stable final release with minimal regressions.

It lands a lot of goodies:

- Extension types

- Lazy pivots

- Streaming group_by_dynamic

- Float16 support

- Nested .over() expressions

github.com/pola-rs/pola...

Polars 1.34.0 is out!

Any Polars query can be turned into a generator!

Aside from that, Polars now properly supports decimal types, `scan_iceberg` is completely native, cross joins can maintain order, and much more.

Changelogs here:

- github.com/pola-rs/pola...

- github.com/pola-rs/pola...

Struct:

Think of a tuple with named fields, or of multiple columns in a single column.

4/4

Many new expressions are lowered to the streaming engine. This means you can run more queries faster!

See the full changelog here:

github.com/pola-rs/pola...

3/4

More joins will be lowered to stricter join variants based on predicates. This can save a lot of intermediate rows!

2/4

The Categorical type is now streaming! No more `StringCache`, and Categoricals now work in distributed Polars.

github.com/pola-rs/pola...

Polars 1.32 is out and it lands a lot!

Let's go through a few:

1/4

Selectors are now implemented in Rust and we can finally select arbitrary nested types:

Join me on the 24th in SF for a @pola.rs meetup!

I will be giving a talk about Polars, Polars Cloud and the upcoming distributed engine.

NVIDIA will also give a talk about their GPU acceleration with Polars-cuDF.

Hope to see you there!

lu.ma/60b6wfs8

No more `with pl.StringCache()`

Soon... 🌈

This Thursday I will join Lawrence Mitchell from @nvidia on the podium during the NVIDIA GTC in Paris.

We'll discuss how we made Polars work on the GPU and how it will scale to multi-GPU in the future.

See you there!

vivatechnology.com/sessions/ses...

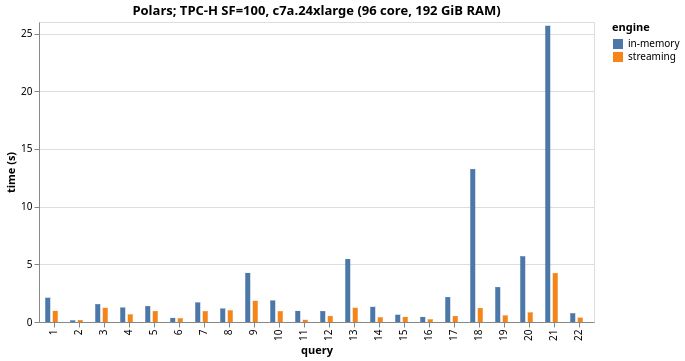

Polars has gotten 4x faster than Polars! 🚀

In the last few months, the team has worked incredibly hard on the new streaming engine, and the results are paying off. It is extremely fast and beats the Polars in-memory engine by a factor of 4 on a 96-vCPU machine.

**Sponsor announcement:** Polars is a Supporter of RustWeek!

Find out more about them here: pola.rs

Thank you @pola.rs for your support! 🙏

More info about RustWeek and tickets: rustweek.org

#rustweek #rustlang

Yeah, or even for a single machine, remotely. For example, say you run a very small node as an Airflow orchestrator but need a big VM for the ETL job. That orchestrator can initiate the remote query without having to worry about hardware setup and teardown.

17.02.2025 13:44

Already got all TPC-H queries running distributed!

12.02.2025 14:16

He is a notorious Polars hater and has tweeted that he wants the project to fail.

He fears that Polars' deviation from the pandas API will splinter the landscape, and he doesn't appreciate new API development. I haven't read a technical reason from his side.

That was all pre 1.0.

We released 1.0 in July last year. The API is stable now.

I would call that a weakness of the AI. 😅

28.01.2025 17:09

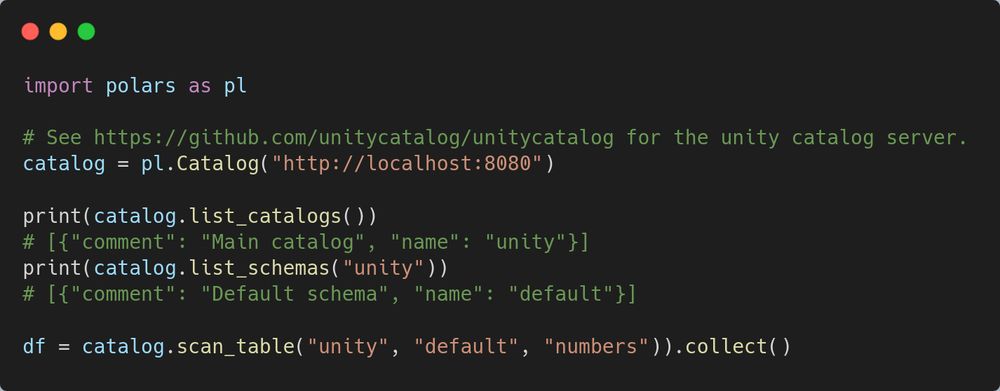

In this week's Polars release we shipped initial Unity Catalog support. This makes integration with Databricks much smoother.

Write support is under development and will follow soon. Full release notes: github.com/pola-rs/pola...

Learning polars has been ... actually a joy? It just makes sense to my #rstats #dplyr trained data muscles #databs #python

kevinheavey.github.io/modern-polars/

This week's Polars release brings a huge improvement for window functions: they can be an order of magnitude faster.

We can also now run 20 of the 22 TPC-H queries on the new streaming engine, and all of them on Polars Cloud. More will follow soon! ;)

See the full release docs here:

github.com/pola-rs/pola...

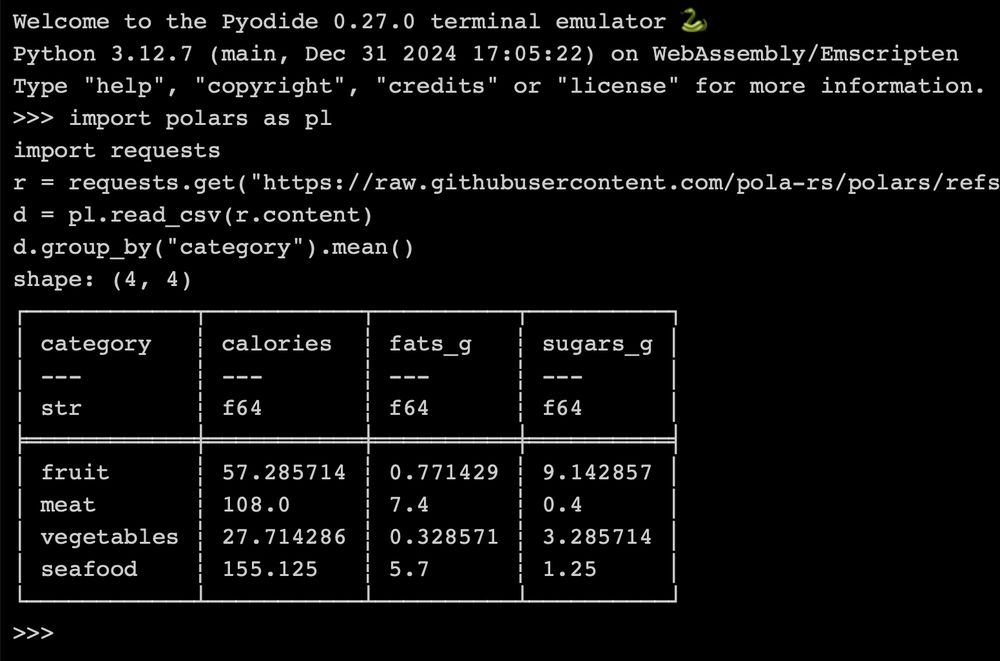

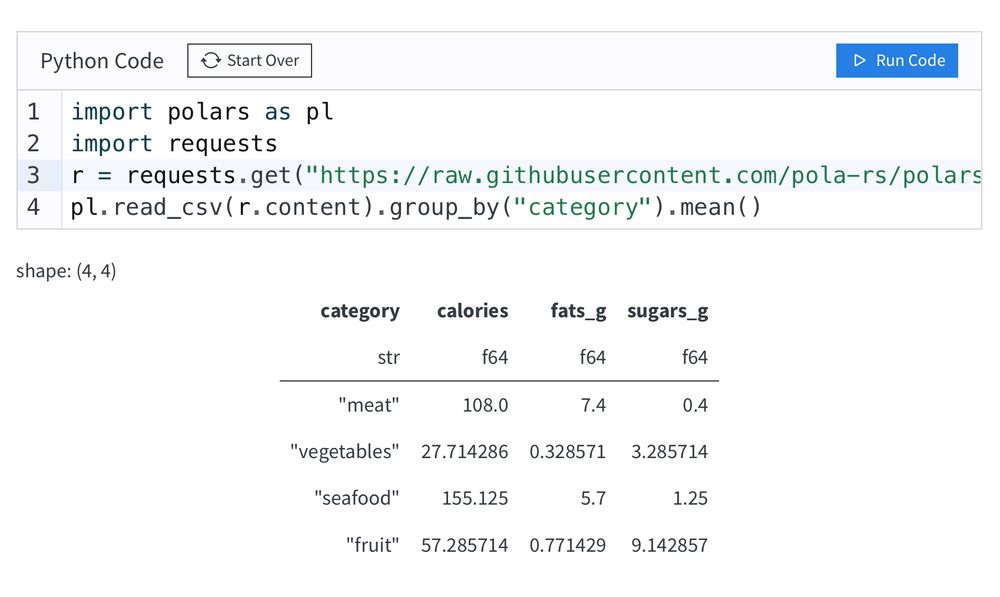

A screenshot of a Pyodide REPL executing Polars code: import polars as pl import requests r = requests.get("https://raw.githubusercontent.com/pola-rs/polars/refs/heads/main/examples/datasets/foods2.csv") pl.read_csv(r.content).group_by("category").mean()

A screenshot of a Quarto Live code cell executing Polars code: import polars as pl import requests r = requests.get("https://raw.githubusercontent.com/pola-rs/polars/refs/heads/main/examples/datasets/foods2.csv") pl.read_csv(r.content).group_by("category").mean()

![A screenshot of a Shinylive app using Polars code](https://cdn.bsky.app/img/feed_thumbnail/plain/did:plc:hnkswprmt42fkt2huzbkcpcx/bafkreifcr4vfugeei25pfex52gvvvxnukyy4sms4ytpk6isepn5c3j473i@jpeg)

A screenshot of a Shinylive app using Polars code: from shiny import App, render, ui import polars as pl from pathlib import Path app_ui = ui.page_fluid( ui.input_select("cyl", "Select Cylinders", choices=["4", "6", "8"]), ui.output_data_frame("filtered_data") ) def server(input, output, session): df = pl.read_csv(Path(__file__).parent / "mtcars.csv") @output @render.data_frame def filtered_data(): return (df .filter(pl.col("cyl") == int(input.cyl())) .select(["mpg", "cyl", "hp"])) app = App(app_ui, server)

Recently I've been working on getting #polars running in #pyodide. This was a fun one, even requiring patches to LLVM's #wasm writer! Everything has now been upstreamed, and earlier this week Pyodide v0.27.0 was released, including a Wasm build of Polars usable in Pyodide, Shinylive and Quarto Live 🎉

04.01.2025 11:59

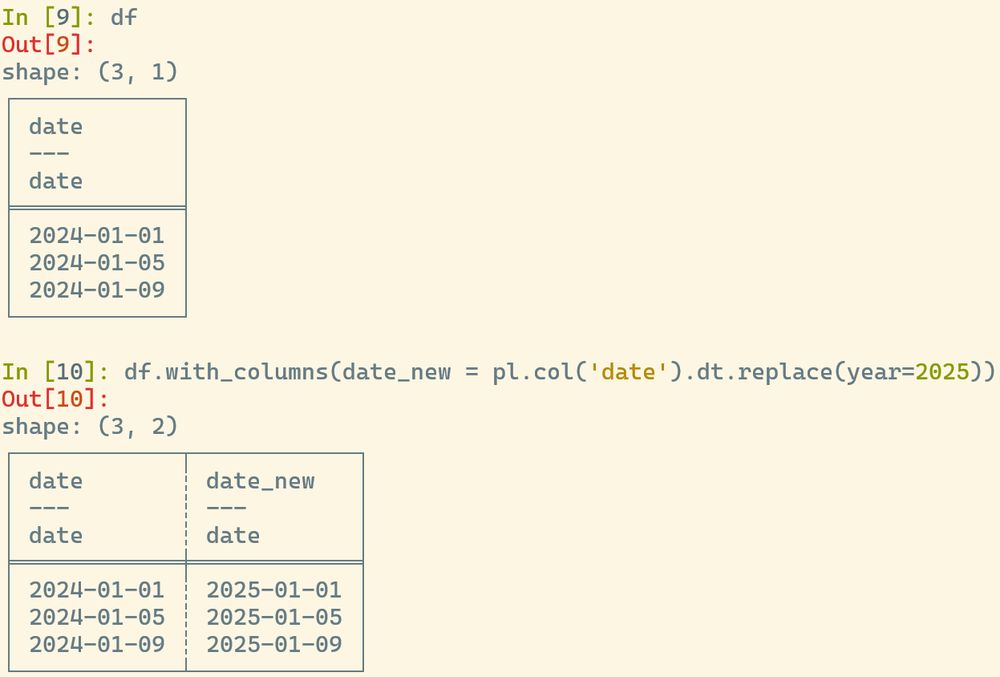

demo of dt.replace

✨ New temporal feature in the next Polars release!

⏲️ dt.replace lets you replace components of Date / Datetime columns

⚡🦀 It's an expressified vectorised rustified version of the Python standard library datetime.replace

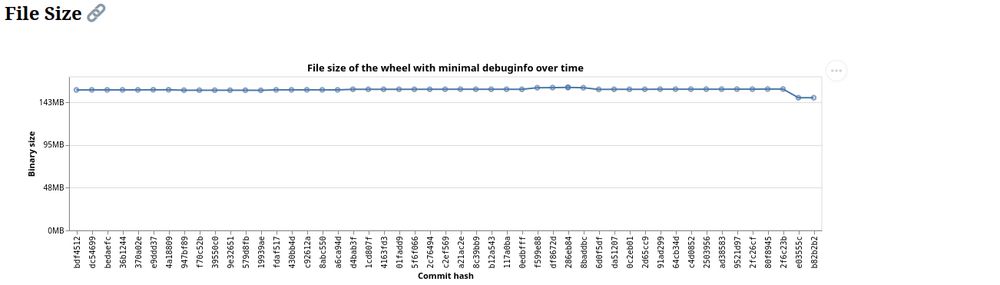

We removed serde from our Series struct and saw a significant drop in Polars' binary size (with all features activated). The amount of codegen is huge. 😮

18.12.2024 13:32

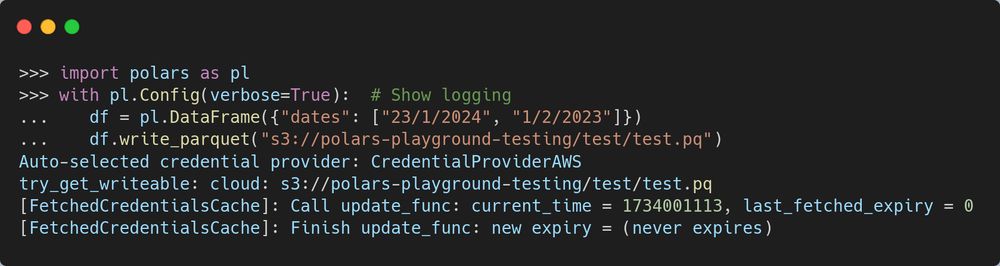

We finally support writing to cloud storage natively and seamlessly!

12.12.2024 11:04

Join us this Friday if you're eager to see what it can be like to design a recommender while limiting ourselves to just a DataFrame API. It is somewhat unconventional, but a great excuse to show off a Polars trick or two.

www.youtube.com/watch?v=U3Fi...

Interesting. Is it an authentication error with Azure Storage?

08.12.2024 06:11

Nice post.

For the benchmarks, I think it would be fairer to use `scan_csv`, as `read_csv` forces full materialization.

P.S. Why use pyarrow to read instead of our native reader?