Sensory reformatting for a working visual memory www.sciencedirect.com/science/arti...

10.10.2025 03:18 — 👍 2 🔁 2 💬 0 📌 0

@danwang7.bsky.social

PhD candidate, Utrecht University | AttentionLab UU | CAP-Lab | Visual working memory | Attention

Many thanks to my co-authors!! @suryagayet.bsky.social @jthee.bsky.social @arora-borealis.bsky.social @Stefan Van der Stigchel @Samson Chota

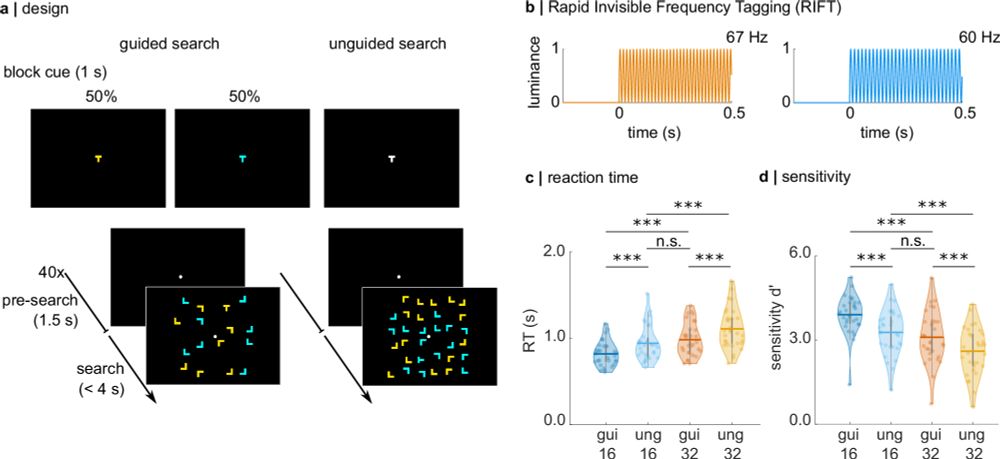

27.08.2025 21:35 — 👍 1 🔁 0 💬 0 📌 0

In conclusion, we show that the dynamic interplay between top-down control and bottom-up saliency directly impacts early visual responses, thereby illuminating a complete timeline of attentional competition in visual cortex.

27.08.2025 21:33 — 👍 1 🔁 0 💬 0 📌 0

Lastly, the stronger the RIFT response to the target relative to the distractor, the faster participants responded to the target, demonstrating that RIFT responses capture behaviorally relevant processes.

27.08.2025 21:32 — 👍 1 🔁 0 💬 1 📌 0

2) The presence of a distractor attenuated the initial RIFT response to the target, reflecting competition during the initial stages of visual processing.

27.08.2025 21:31 — 👍 1 🔁 0 💬 1 📌 0

Comparing RIFT responses across conditions, we found that 1) both the target and the distractor evoked stronger initial RIFT responses than nontargets, reflecting top-down and bottom-up attentional effects on early visual processing, and RIFT responses to the distractor were eventually suppressed.

27.08.2025 21:29 — 👍 1 🔁 0 💬 1 📌 0

For the tagging manipulation, we tagged the target and the distractor in the distractor-present condition, and the target and one of the nontargets in the distractor-absent condition. This frequency-tagging manipulation successfully elicited the corresponding frequency-specific neural responses.

27.08.2025 21:25 — 👍 1 🔁 0 💬 1 📌 0

We found that the salient distractor captured attention at the behavioral level.

27.08.2025 21:23 — 👍 1 🔁 0 💬 1 📌 0

In this study, to determine how top-down and bottom-up processes unfold over time in early visual cortex, we employed Rapid Invisible Frequency Tagging (RIFT) while participants performed the additional singleton task.

27.08.2025 21:18 — 👍 1 🔁 0 💬 1 📌 0

🧠 Excited to share that our new preprint is out!🧠

In this work, we investigate the dynamic competition between bottom-up saliency and top-down goals in the early visual cortex using rapid invisible frequency tagging (RIFT).

📄 Check it out on bioRxiv: www.biorxiv.org/cgi/content/...

Overview of CAP-Lab presentations at ECVP 2025:

Lasse Dietz: Anticipated relevance modulates early visual processing. Monday 14:45, talk @ Linke Aula. Learning & Memory

Surya Gayet: Perceptual precedence for expected and dreaded visual events. Tuesday 8:30, talk @ RW 1. Using interocular suppression during consciousness research

Kabir Arora: Dissociating external and internal attentional selection. Tuesday 9:15, talk @ Atrium Maximum. Object Recognition & Visual Attention

Dan Wang: Unraveling the time course of attentional capture: an EEG-RIFT study. Tuesday 15:30-17:00, poster @ Foyer Philosophicum

Yichen Yuan: Decoding auditory working memory load from alpha oscillations. Wednesday 10:00-11:30, poster @ Foyer Philosophicum

Looking forward to joining #ECVP2025 tomorrow. CAP-Lab is well represented, with 3 talks (@lassedietz.bsky.social on Monday, and @arora-borealis.bsky.social and I on Tuesday), and 2 posters (by @danwang7.bsky.social on Tuesday, and @yichen-yuan.bsky.social on Wednesday). Please come by for a chat! 💜

24.08.2025 20:33 — 👍 7 🔁 1 💬 1 📌 0

🚨 New preprint: Invisible neural frequency tagging (RIFT) for the underfunded researcher:

👉 www.biorxiv.org/cgi/content/...

RIFT uses high-frequency flicker to probe attention in M/EEG with minimal stimulus visibility and little distraction. Until now, it required a costly high-speed projector.

Excited to present at #ECVP2025 - Monday afternoon, Learning & Memory - about how anticipating relevant visual events prepares visual processing for efficient memory-guided visual selection! 🧠🥳

@attentionlab.bsky.social @ecvp.bsky.social

Preprint for more details: www.biorxiv.org/content/10.1...

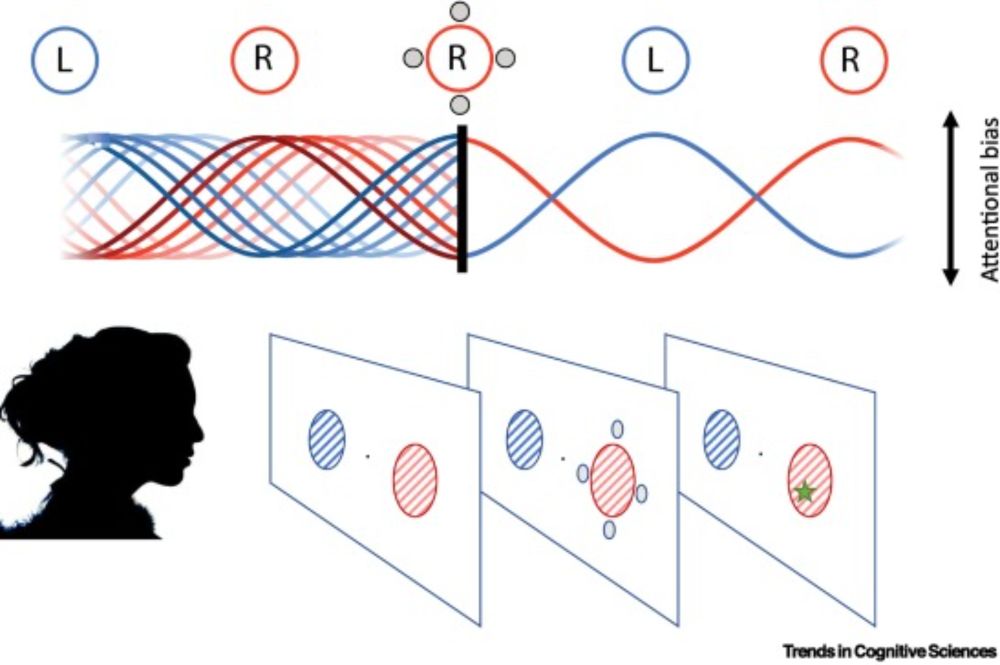

Excited to give a talk at #ECVP2025 (Tuesday morning, Attention II) on how spatially biased attention during VWM does not boost excitability the same way it does when attending the external world, using Rapid Invisible Frequency Tagging (RIFT). @attentionlab.bsky.social @ecvp.bsky.social

24.08.2025 13:13 — 👍 14 🔁 3 💬 0 📌 1

Excited to share that I’ll be presenting my poster at #ECVP2025 on August 26th (afternoon session)!

🧠✨ Our work focuses on the dynamic competition between bottom-up saliency and top-down goals in early visual cortex, using Rapid Invisible Frequency Tagging (RIFT).

@attentionlab.bsky.social @ecvp.bsky.social

IRL/CAP-Lab meeting

I had loads of fun today, sharing thoughts and projects during a joint lab-meeting with @nadinedijkstra.bsky.social's Imagine Reality Lab. Two hours were way too short to discuss all the cool projects!

Thanks everyone for your contributions 💜

Best hunter trainer ever🫡

15.07.2025 12:39 — 👍 1 🔁 0 💬 0 📌 0

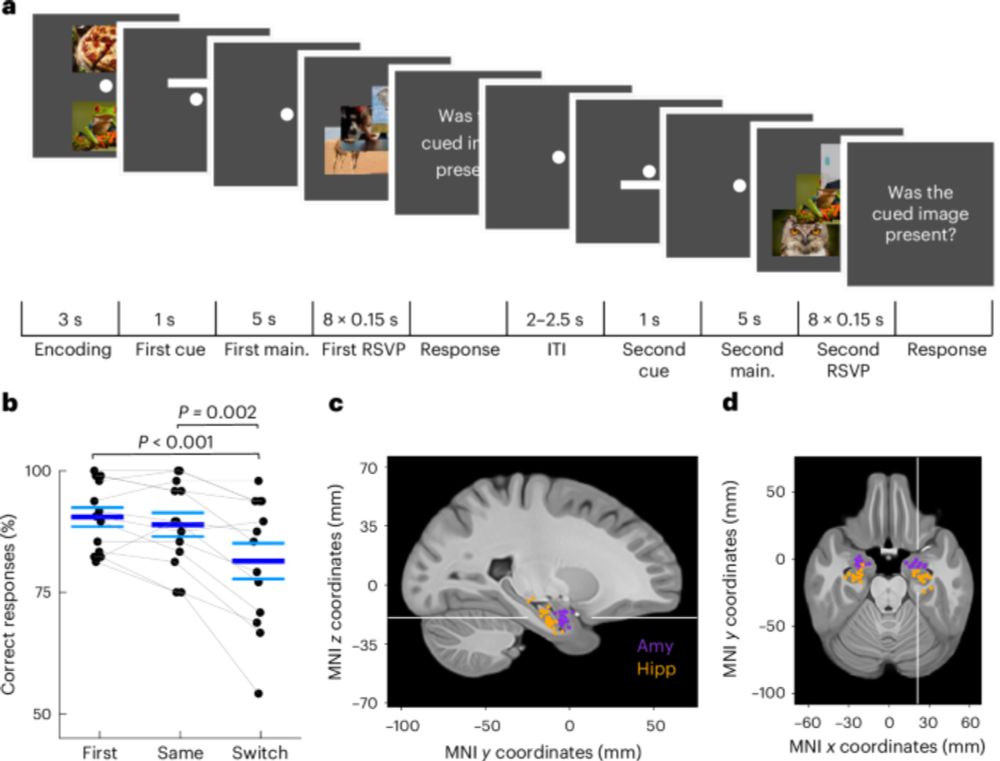

In this Article, Paluch et al. show that unattended working memory items, as well as attended ones, are encoded in persistent activity in the medial temporal lobe. @jankaminski.bsky.social

www.nature.com/articles/s41...

Thrilled to share our new opinion piece—hot off the press—on attentional sampling, co-authored with the magnificent Flor Kusnir and Daniele Re. It captures where our thinking has landed on this topic after years of work.

www.cell.com/trends/cogni...

Last week's symposium titled "Advances in the Encephalographic Study of Attention" was a great success! It was held in the KNAW building in Amsterdam and sponsored by the NWO, and many of (Europe's) leading attention researchers assembled to discuss the latest advances in attention research using M/EEG.

30.06.2025 07:12 — 👍 25 🔁 7 💬 4 📌 3

Now published in Attention, Perception & Psychophysics @psychonomicsociety.bsky.social

Open Access link: doi.org/10.3758/s134...

In our new MEG/RIFT study from @thechbh.bsky.social by @katduecker.bsky.social, we show that feature-guidance in visual search alters neuronal excitability in early visual cortex, supporting a priority-map-based attentional mechanism.

rdcu.be/eqFX7

Thanks to the support of the Dutch Research Council (NWO) and @knaw-nl.bsky.social , we're thrilled to announce the international symposium "Advances in the Encephalographic study of Attention"! 🧠🔍

📅 Date: June 25th & 26th

📍 Location: Trippenhuis, Amsterdam

Through experience, humans can learn to suppress locations that frequently contain distracting stimuli. Using SSVEPs and ERPs, this study shows that such learned suppression modulates early neural responses, indicating it occurs during initial visual processing.

www.jneurosci.org/content/jneu...

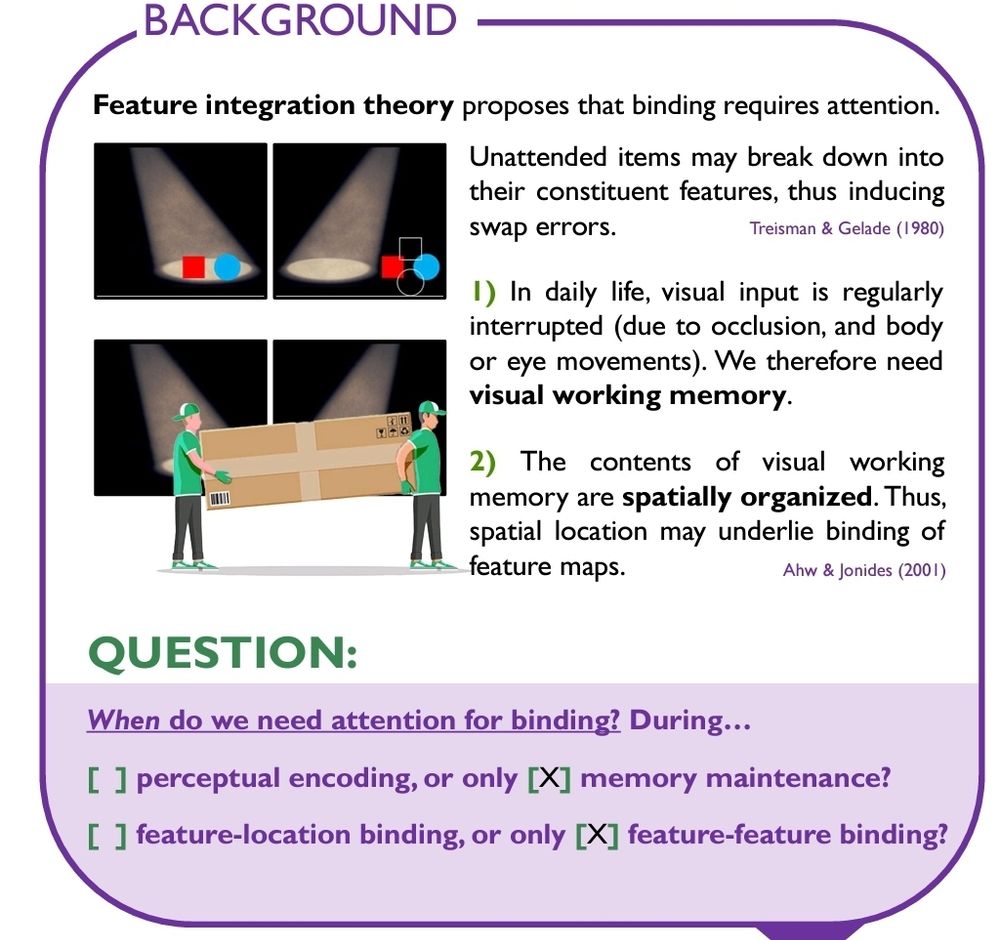

Good morning #VSS2025, if you care for a chat about the role of attention in binding object features (during perceptual encoding and memory maintenance), drop by my poster now (8:30-12:30) in the pavilion (422). Hope to see you there!

19.05.2025 12:36 — 👍 14 🔁 3 💬 3 📌 0

Thanks for the recommendation! Really nice paper!

21.12.2024 00:21 — 👍 1 🔁 0 💬 0 📌 0

Many thanks to my co-authors! ❤️❤️

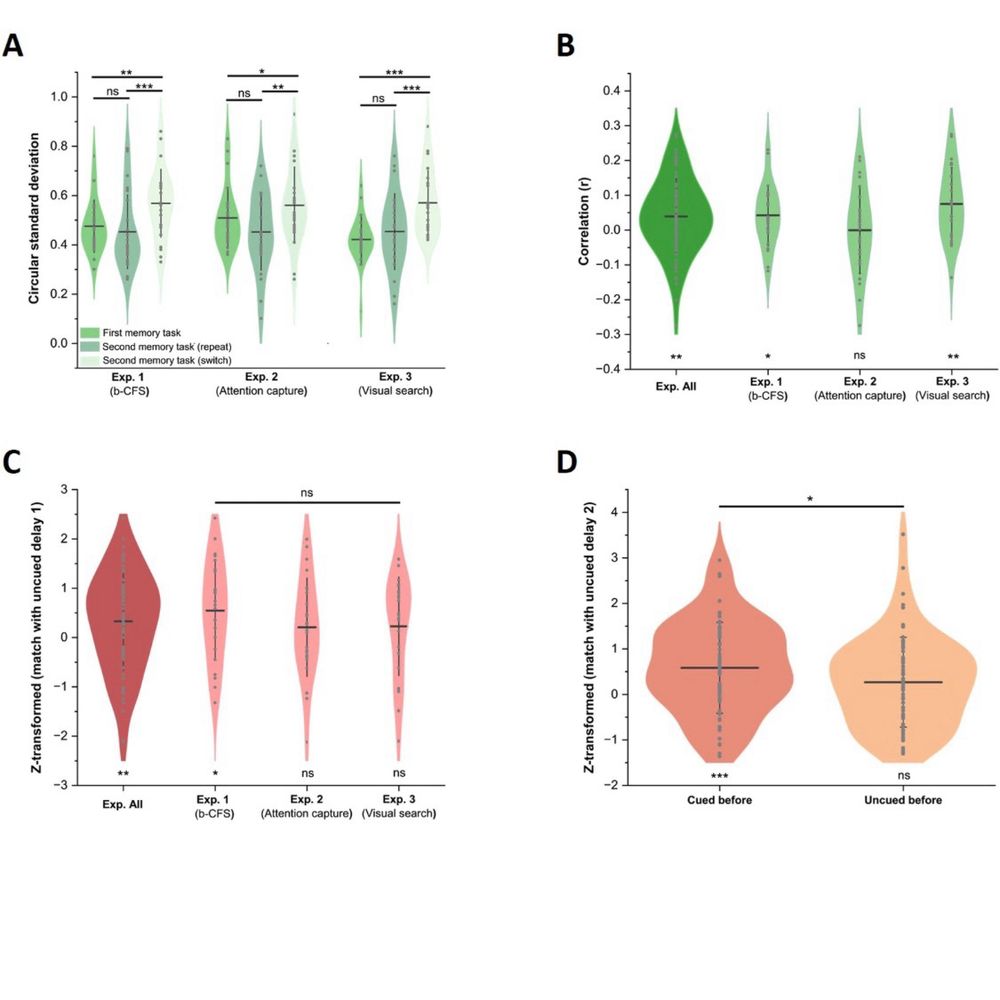

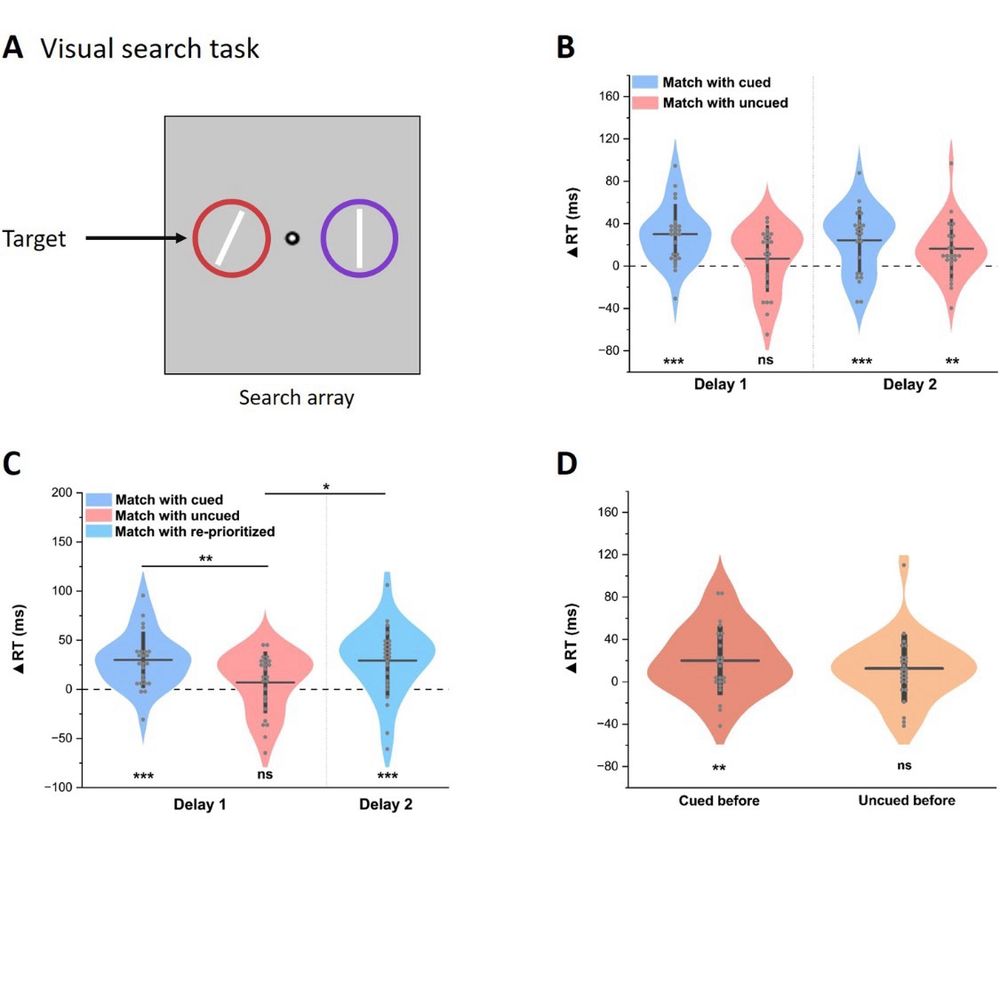

20.12.2024 00:28 — 👍 2 🔁 0 💬 0 📌 0

In conclusion, observers can flexibly de-prioritize and re-prioritize VWM contents based on current task demands, allowing observers to exert control over the extent to which VWM contents influence concurrent visual processing.

20.12.2024 00:26 — 👍 3 🔁 1 💬 1 📌 0

In the end, we found no evidence that the influence of non-prioritized memory items on early visual processing differed between the three experimental paradigms. When we combined the data from the three experiments, we found that non-prioritized memory items did influence early visual processing.

20.12.2024 00:23 — 👍 1 🔁 0 💬 1 📌 0

In Experiment 3, we also found that only prioritized memory items influenced early visual processing in terms of the allocation of spatial attention.

20.12.2024 00:15 — 👍 1 🔁 0 💬 1 📌 0