Best part: You can start free, upgrade to Pro ($40/mo) when you need advanced features.

No DevOps. No infrastructure headaches.

Try it: squad.ai

#AIAgents #NoCode #Squad #Automation

@chutesai.bsky.social

Squad just made building AI agents ridiculously easy 🔥

Here's what you get:

✅ Drag-and-drop canvas (no code required)

✅ Pre-built agents for common tasks

✅ Custom tool creation with code editor (Pro)

✅ Runs on decentralized Chutes infrastructure

✅ Deploy in minutes, not weeks

The question:

How fast will enterprises realize they have a better option?

Open source won infrastructure. It's winning AI too.

It's about:

• Cost efficiency (85% cheaper than AWS)

• No single point of failure

• Vendor optionality

• Access to a large, constantly evolving selection of models vs. being locked into one

Decentralized inference is already faster + cheaper.

Hot take: In 5 years, running all your AI on one centralized provider will feel as risky as hosting everything on a single server.

The shift to decentralized AI isn't about ideology.

Chutes infrastructure update 🚀

Currently running:

• Thousands of H200 & A6000 GPUs

• Processing billions of tokens daily

• Leading open source inference provider on OpenRouter

• 100% decentralized on Bittensor Subnet 64

All with ~85% cost savings vs AWS.

Open source AI is scaling on Chutes 💪

If you've been sleeping on open source AI infrastructure, maybe it's time to wake up.

Turns out the future of AI might not be owned by 3 companies.

It might be decentralized.

And it might already be happening.

chutes.ai

This is one of those moments where you realize you've been building something bigger than you thought.

We're not just "a decentralized AI platform."

We might be building the Linux of AI inference.

And we're just getting started.

Here's what really gets us:

The AI inference market is projected to hit $255 BILLION by 2030.

We're leading in open source inference.

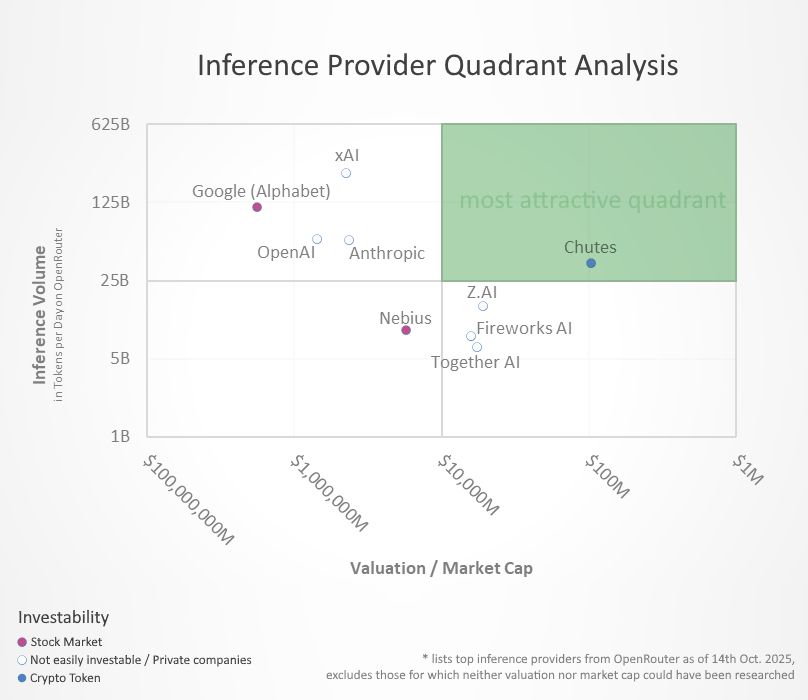

And according to some quadrant analysis floating around, we're massively undervalued compared to competitors.

The companies we're apparently ahead of have:

- 100x our funding

- 10x our team size

- Every tech blog writing about them daily

We have:

- 60+ open source models

- Decentralized infrastructure

- Apparently no chill

Here's the kicker:

We're #1 even though OpenRouter RED-FLAGS us by default because we don't have TEE implemented yet (coming very soon, btw)

Imagine what happens when we remove that red flag 💀

Chutes is apparently the #1 Open Source inference provider on OpenRouter*

Not top 5. Not top 3.

NUMBER ONE.

40+ BILLION tokens per day.

And we literally just found out. Yesterday.

*P.S. Based only on publicly available information on OpenRouter

so... we just found out something absolutely insane 🧵

we weren't even looking for this data.

one of our engineers was going through OpenRouter's publicly available charts at 3am (as you do) and discovered something that made us all stop and stare at our screens.

Hunyuan-3 Image Generation Live Now on Chutes 🗻🌻

chutes.ai/app/chute/0c...

A stunning new-gen image model - available as part of your Pro, Plus, or Base subscription, or PAYG through our flex tier.

Send us your image magic below ⬇️ (Attached image taken directly from the API)

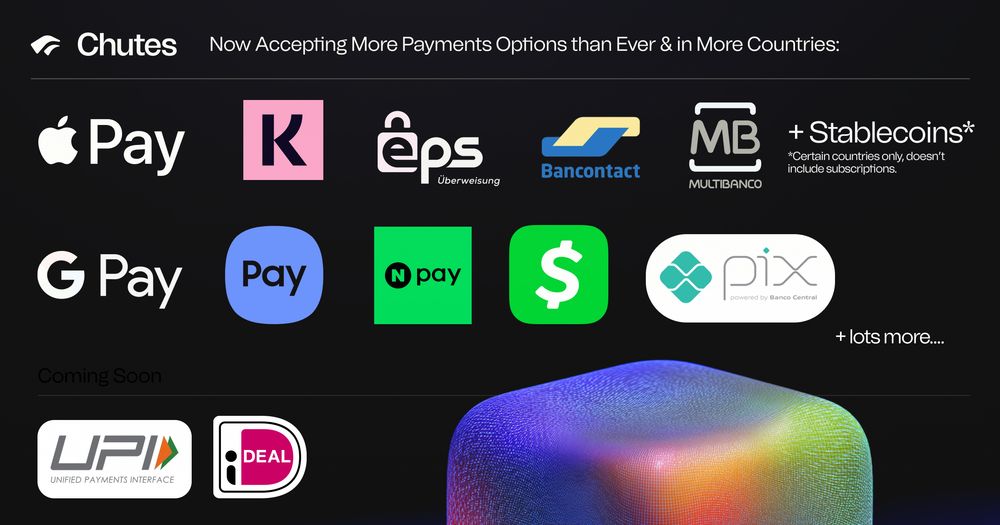

We've just enabled more payment options for our customers 🎉

- Apple Pay & Google Pay in more countries

- Buy Now, Pay Later providers such as Klarna

- More European, Asian & Latin American providers such as Pix, Naver Pay & more

- Stablecoin payments

+ lots more coming soon.

We’ve also launched a few new places to stay connected with updates, support, and community discussions:

• Reddit: r/ChutesAI - reddit.com/r/chutesAI/

• X: x.com/chutes_ai

The team has been working hard behind the scenes to scale up and push Chutes forward, and this marks a big step in that direction.

Say hello to

@0xVeight

@0xsirouk

@0xAlgowary

+ more in our Discord, here, and in some new channels listed below 👇

Chutes Team Expansion Update

We’re excited to share that

@0xTuDudes

has officially joined chutes.ai to bring extra firepower across development, sales, marketing, and support.

20 TRILLION TOKENS PROCESSED ON CHUTES 🪂

After 10 months, we've reached a massive milestone: thousands of production applications and millions of users, all powered by decentralized inference/compute on Bittensor SN64.