What might happen to society and politics after widespread automation? What are the best ideas for good post-AGI futures, if any?

David Duvenaud joins the podcast —

pnc.st/s/forecast/...

@forethought-org.bsky.social

Research nonprofit exploring how to navigate explosive AI progress. forethought.org

How could humans lose control over the future, even if AIs don't coordinate to seek power? What can we do about that?

Raymond Douglas joins the podcast to discuss “Gradual Disempowerment”

Listen: pnc.st/s/forecast/...

Should AI agents obey human laws?

Cullen O’Keefe (Institute for Law & AI) joins the podcast to discuss “law-following AI”.

Listen: pnc.st/s/forecast/...

Read it here:

www.forethought.org/research/su...

The ‘Better Futures’ series compares the value of working on ‘survival’ and ‘flourishing’.

In ‘The Basic Case for Better Futures’, Will MacAskill and Philip Trammell describe a more formal way to model the future in those terms.

You can find this and future article narrations wherever you listen to podcasts: www.forethought.org/subscribe#p...

26.08.2025 10:58

We're starting to post narrations of Forethought articles on our podcast feed, for people who’d prefer to listen to them.

First up is ‘AI-Enabled Coups: How a Small Group Could Use AI to Seize Power’.

In the fifth essay in the ‘Better Futures’ series, Will MacAskill asks what, concretely, we could do to improve the value of the future (conditional on survival).

Read it here: www.forethought.org/research/ho...

Full episode:

www.youtube.com/watch?v=UMF...

One reason to think the coming century could be pivotal is that humanity might soon race through a big fraction of what's still unexplored of the eventual tech tree.

From the podcast on ‘Better Futures’ —

The fourth entry in the ‘Better Futures’ series asks whether the effects of our actions today inevitably ‘wash out’ over long time horizons, aside from extinction. Will MacAskill argues against that view.

Read it here: www.forethought.org/research/pe...

Full episode:

www.youtube.com/watch?v=UMF...

What is the difference between “survival” and “flourishing”?

Will MacAskill on the better futures model, from our first video podcast:

New podcast episode with Peter Salib and Simon Goldstein on their article ‘AI Rights for Human Safety’.

pnc.st/s/forecast/...

New podcast episode with @tobyord.bsky.social — on inference scaling, time horizons for AI agents, lessons from scientific moratoria, and more.

pnc.st/s/forecast/...

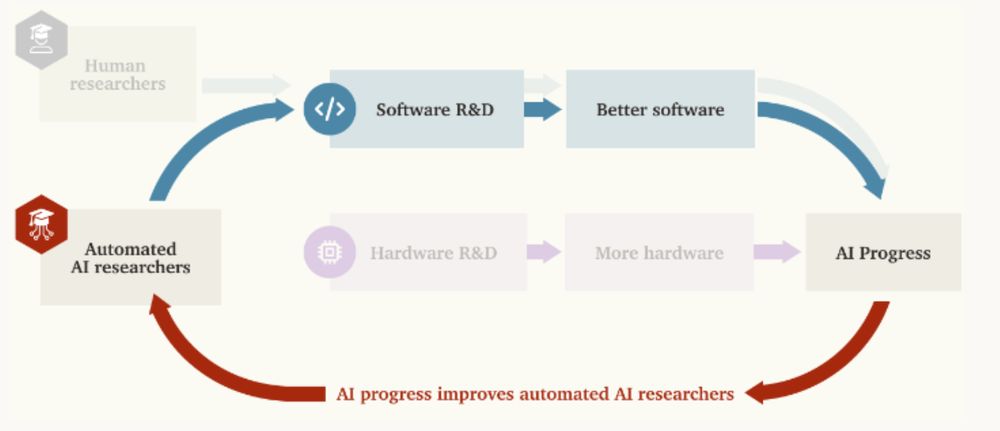

New report: “Will AI R&D Automation Cause a Software Intelligence Explosion?”

As AI R&D is automated, AI progress may dramatically accelerate. Skeptics counter that hardware stock can only grow so fast. But what if software advances alone can sustain acceleration?

x.com/daniel_2718...

Today we’re putting out our first paper, which gives an overview of these challenges. Read it here: www.forethought.org/research/pr...

11.03.2025 15:36

At Forethought, we’re doing research to help us understand the opportunities and challenges that AI-driven technological change will bring, and to help us figure out what we can do, now, to prepare.

11.03.2025 15:36

And this might happen blisteringly fast – our analysis suggests we’re likely to see a century’s worth of technological progress in less than a decade. Our current institutions were not designed for such rapid change. We need to prepare in advance.

11.03.2025 15:35

AI might help us to create many new technologies, and with them new opportunities – from economic abundance to enhanced collective decision-making – and new challenges – from extreme concentration of power to new weapons of mass destruction.

11.03.2025 15:35

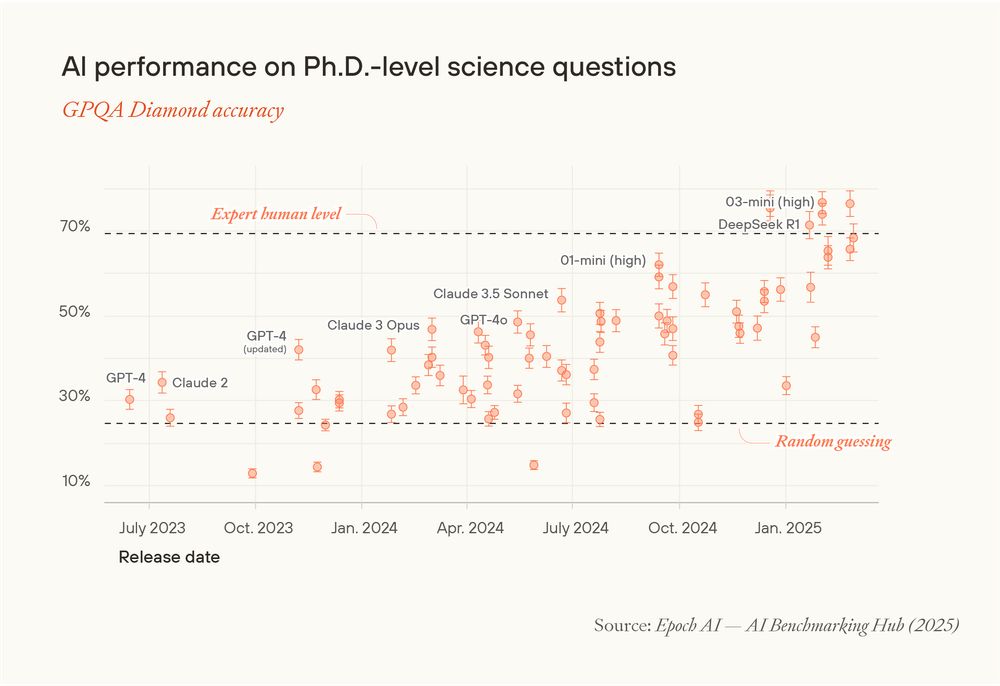

Two years ago, AI systems were close to random guessing at PhD-level science questions. Now they beat human experts. As they continue to become smarter and more agentic, they may begin to significantly accelerate technological development. What happens next?

11.03.2025 15:35