🔥 WANTED: Student Researcher to join me, @vdebortoli.bsky.social, Jiaxin Shi, Kevin Li and @arthurgretton.bsky.social in DeepMind London.

You'll be working on Multimodal Diffusions for science. Apply here google.com/about/career...

New #J2C Certification:

BM$^2$: Coupled Schrödinger Bridge Matching

Stefano Peluchetti

https://openreview.net/forum?id=fqkq1MgONB

#schrödinger #bridges #bridge

Very excited to share our preprint: Self-Speculative Masked Diffusions

We speed up sampling of masked diffusion models by ~2x by using speculative sampling and a hybrid non-causal / causal transformer

arxiv.org/abs/2510.03929

w/ @vdebortoli.bsky.social, Jiaxin Shi, @arnauddoucet.bsky.social

We are disappointed that some community members have been sending threatening messages to organizers after decisions. While we always welcome feedback, our organizers are volunteers from the community, and such messages will not be tolerated and may be investigated as code of conduct violations.

01.10.2025 15:06 — 👍 19 🔁 3 💬 0 📌 1

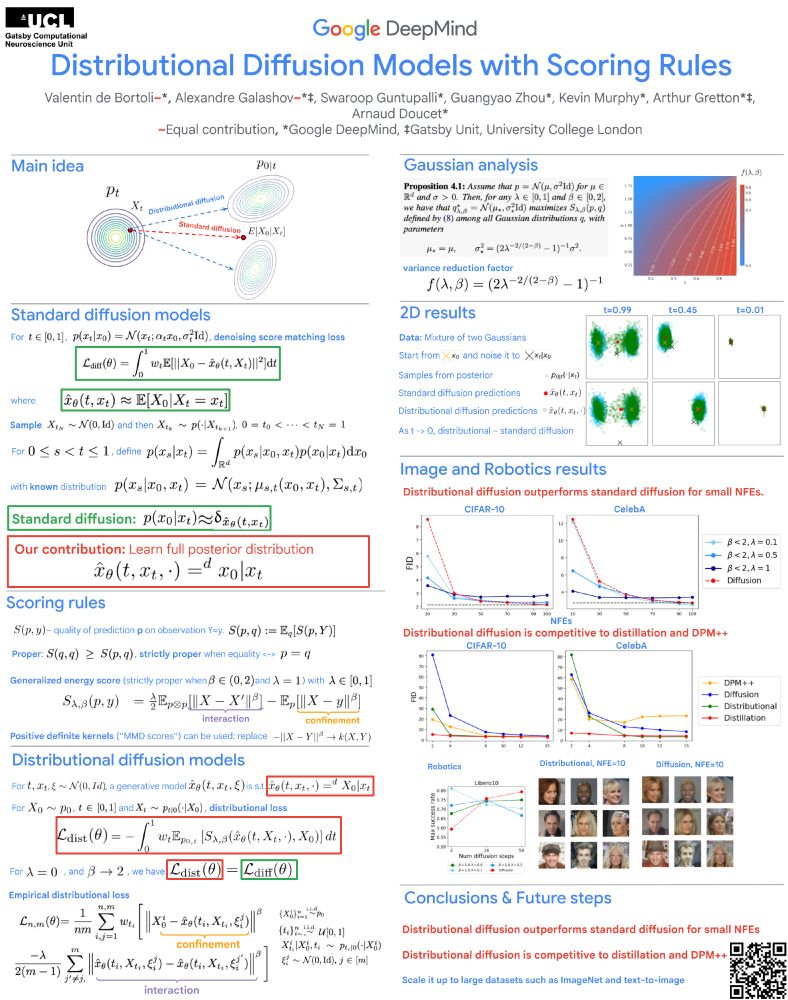

Distributional diffusion models with scoring rules at #icml25

Fewer, larger denoising steps using distributional losses!

Wednesday 11am poster E-1910

arxiv.org/pdf/2502.02483

@vdebortoli.bsky.social

Galashov Guntupalli Zhou

@sirbayes.bsky.social

@arnauddoucet.bsky.social

I know it’s only CIFAR-10, but SOTA FID without any minibatch OT was already < 2 with 35 NFE back in 2022.

Are you sure this makes any difference in the competitive setting? It seems like choosing hyperparameters makes more of a difference.

arxiv.org/abs/2206.00364

It’s used within Sinkhorn

see e.g. lse mode here ott-jax.readthedocs.io/en/stable/_m...
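For readers unfamiliar with the term: assuming "lse" above refers to the log-sum-exp (log-domain) form of the Sinkhorn updates, here is a minimal NumPy sketch of that idea. It is not the ott-jax implementation; the function names `logsumexp` and `sinkhorn_log`, the toy 1-D point clouds, and the values of `epsilon` are all illustrative assumptions.

```python
import numpy as np


def logsumexp(x, axis):
    """Numerically stable log(sum(exp(x))) along `axis`."""
    m = np.max(x, axis=axis, keepdims=True)
    return np.squeeze(m, axis=axis) + np.log(np.sum(np.exp(x - m), axis=axis))


def sinkhorn_log(a, b, cost, epsilon, num_iters=200):
    """Log-domain Sinkhorn iterations (hypothetical helper, not a library API).

    The plan is parameterised as P_ij = exp((f_i + g_j - C_ij) / epsilon),
    and f, g are updated alternately so that the rows sum to `a`
    and the columns sum to `b`.
    """
    log_a, log_b = np.log(a), np.log(b)
    f = np.zeros_like(a)
    g = np.zeros_like(b)
    for _ in range(num_iters):
        # Row update: enforce sum_j P_ij = a_i.
        f = epsilon * (log_a - logsumexp((g[None, :] - cost) / epsilon, axis=1))
        # Column update: enforce sum_i P_ij = b_j.
        g = epsilon * (log_b - logsumexp((f[:, None] - cost) / epsilon, axis=0))
    plan = np.exp((f[:, None] + g[None, :] - cost) / epsilon)
    return f, g, plan


# Toy problem (assumed for illustration): uniform weights on 1-D grids.
x = np.linspace(0.0, 1.0, 3)
y = np.linspace(0.0, 1.0, 4)
cost = (x[:, None] - y[None, :]) ** 2
a = np.full(3, 1.0 / 3.0)
b = np.full(4, 0.25)

f, g, plan = sinkhorn_log(a, b, cost, epsilon=0.5)
# A small epsilon, where exp(-cost / epsilon) would underflow in the kernel form.
_, _, sharp_plan = sinkhorn_log(a, b, cost, epsilon=1e-3)
```

The point of working with potentials and log-sum-exp rather than with the kernel `exp(-cost / epsilon)` directly is that the updates stay finite even when `epsilon` is small.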

Here's the third and final part of Slater Stich's "History of diffusion" interview series!

The other two interviewees' research played a pivotal role in the rise of diffusion models, whereas I just like to yap about them 😬 this was a wonderful opportunity to do exactly that!

New blog post: let's talk about latents!

sander.ai/2025/04/15/l...

🔥 I'm at ICLR'25 in Singapore this week - happy to chat!

📜 With wonderful co-authors, I'm co-presenting 4 main conference papers and 3

@gembioworkshop.bsky.social papers (gembio.ai), and I contribute to a panel (synthetic-data-iclr.github.io).

🧵 Overview in thread.

(1/n)

I will be attending #ICLR2025 in Singapore and #AISTATS2025 in Mai Khao over the next two weeks.

Looking forward to meeting new people and learning about new things. Feel free to reach out if you want to talk about Google DeepMind.

Check out our Apple research work on scaling laws for native multimodal models! Combined with mixtures of experts, native models develop both specialized and multimodal representations! Lots of rich findings and opportunities for follow-up research!

11.04.2025 22:37 — 👍 6 🔁 5 💬 0 📌 1

Thanks!

06.04.2025 18:37 — 👍 1 🔁 0 💬 0 📌 0

I guess my point more broadly is that it is hard in general to draw the line or understand future impact of some work.

And reviewing can suck sometimes

“serious [research] as opposed to .. only reason is to produce another paper”

I have dismissed some ideas that were later published and turned out to be impactful

ICML reviewing and 2/4 papers are a rehash of existing work, the only purpose is CV padding .. perhaps a necessary evil but frustrating

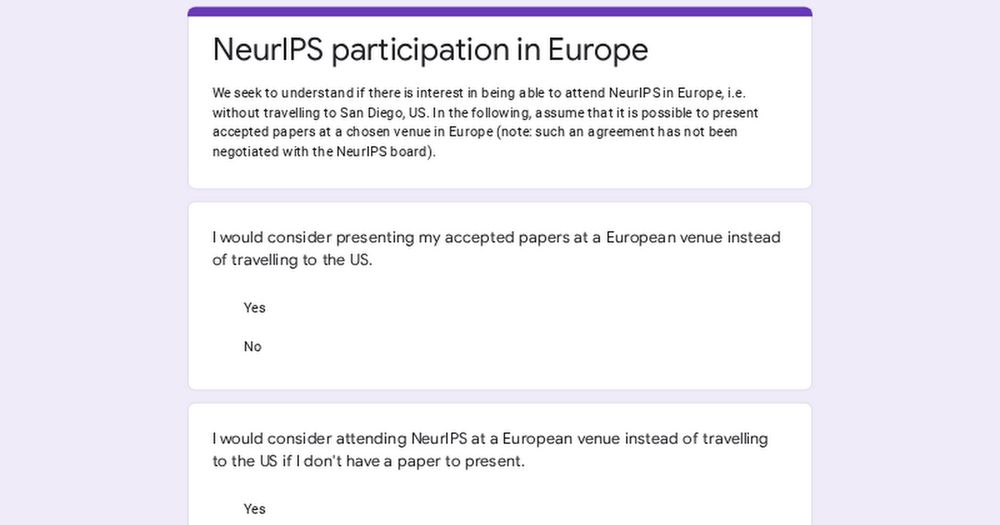

Would you present your next NeurIPS paper in Europe instead of traveling to San Diego (US) if this was an option? Søren Hauberg (DTU) and I would love to hear the answer through this poll: (1/6)

30.03.2025 18:04 — 👍 280 🔁 160 💬 6 📌 12

Hiring two student researchers for Gemma post-training team at @GoogleDeepMind Paris! First topic is about diversity in RL for LLMs (merging, generalization, exploration & creativity), second is about distillation. Ideal if you're finishing PhD. DMs open!

26.03.2025 16:24 — 👍 4 🔁 1 💬 0 📌 0

We are hiring on the Generative Media team in London: boards.greenhouse.io/deepmind/job...

We work on Imagen, Veo, Lyria and all that good stuff. Come work with us! If you're interested, apply before Feb 28.

A co-author (and friend!) is hiring his first postdoc at Linköping University, Sweden. It seems the application deadline is not settled yet, so you have *plenty of time* to c̶o̶n̶s̶i̶d̶e̶r̶ applyi̶n̶g̶ ! The department is strong and so is he.

zz.zabemon.com/blogs/2025/0...

Paper🧵 (cross-posted at X): When does composition of diffusion models "work"? Intuitively, the reason dog+hat works and dog+horse doesn’t has something to do with independence between the concepts being composed. The tricky part is to formalize exactly what this means. 1/

11.02.2025 05:59 — 👍 39 🔁 15 💬 2 📌 2

Great interview with @jascha.sohldickstein.com about diffusion models! This is the first in a series: similar interviews with Yang Song and yours truly will follow soon.

(One of these is not like the others -- both of them basically invented the field, and I occasionally write a blog post 🥲)

The ODE is from the appendix of arxiv.org/abs/2106.01357; there was an error in the first version, but it is hopefully fixed now.

I did not try with the alpha version.

finally managed to sneak my dog into a paper: arxiv.org/abs/2502.04549

10.02.2025 05:03 — 👍 62 🔁 4 💬 1 📌 1

Registration is now open for the 2nd RSS/Turing Workshop on Gradient Flows for Sampling, Inference, and Learning at rss.org.uk/training-eve....

Date: Monday 24 March 2025, 10.00AM - 5.00PM

Location: The Alan Turing Institute

I have tried it and it works well in practice. It’s a bit similar to initialising reflow from a bridge or diffusion, and is similar to the annealed RF of arxiv.org/abs/2407.12718

You can also use the flow of the SB; we wrote the details here but didn’t investigate much (this was 2020/2021)

Screenshot from arxiv.org/pdf/2410.07815

07.02.2025 20:54 — 👍 3 🔁 1 💬 0 📌 0

With a lot of effort they seem to perform well and be a promising direction. A proper comparison to distillation methods is needed.

Reflow / IMF does seem to be the best method for OT-type trajectories and is similar to / compatible with other distillation methods.

Using the same straightness metric as the original RF paper, one can show that reflow / IMF helps.

FM-“OT” and minibatch “OT” do not result in straight paths and hence are not OT
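To make the straightness comparison above concrete, here is a rough sketch, assuming the metric meant is the one I recall from the rectified flow paper, S(Z) = ∫₀¹ E‖(Z₁ − Z₀) − dZ_t/dt‖² dt, which is zero exactly when every path is a straight line traversed at constant speed. The function name `straightness`, the finite-difference discretisation, and the toy trajectories are illustrative assumptions, not code from any of the papers mentioned.

```python
import numpy as np


def straightness(traj, times):
    """Finite-difference estimate of the straightness metric (hypothetical helper).

    traj:  array of shape (K + 1, N, D), N sample paths observed at K + 1 times.
    times: array of shape (K + 1,), assumed to run from 0 to 1.
    """
    traj = np.asarray(traj, dtype=float)
    times = np.asarray(times, dtype=float)
    dt = np.diff(times)                                    # (K,)
    velocity = np.diff(traj, axis=0) / dt[:, None, None]   # (K, N, D)
    displacement = traj[-1] - traj[0]                      # (N, D), i.e. Z_1 - Z_0
    sq_err = np.sum((velocity - displacement[None]) ** 2, axis=-1)  # (K, N)
    # Average over samples, then integrate over time with a Riemann sum.
    return float(np.sum(dt * np.mean(sq_err, axis=1)))


# Toy check (assumed data): straight paths versus curved paths, same endpoints.
rng = np.random.default_rng(0)
z0 = rng.normal(size=(5, 2))
z1 = rng.normal(size=(5, 2))
times = np.linspace(0.0, 1.0, 11)
t = times[:, None, None]

straight_paths = z0[None] + t * (z1 - z0)[None]
curved_paths = z0[None] + t**2 * (z1 - z0)[None]

s_straight = straightness(straight_paths, times)
s_curved = straightness(curved_paths, times)
```

In practice `traj` would hold the states saved while integrating the learned ODE, so the same function could be applied to paths from a model before and after reflow.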

I'm increasingly uncomfortable with the argument (read more and more often) that rectified flow (without reflow steps) offers straighter trajectories than diffusion (in the Gaussian case), despite being a diffusion model itself with a special noise schedule... It seems it comes from the confusion 1/2

06.02.2025 09:18 — 👍 14 🔁 2 💬 2 📌 1

🚨 One question that has always intrigued me is the role of different ways to increase a model's capacity: parameters, parallelizable compute, or sequential compute?

We explored this through the lens of MoEs: