Join the lab

Brain, Language, & Acoustic Behavior Laboratory

The Speech Motor Neuroscience Group at the University of Wisconsin–Madison is inviting applications for an NIH-funded postdoctoral research position in the field of speech motor control and speech motor neuroscience. Details can be found under the “postdoctoral researchers” tab: blab.wisc.edu/join-the-lab/

15.10.2025 00:00 — 👍 1 🔁 0 💬 0 📌 0

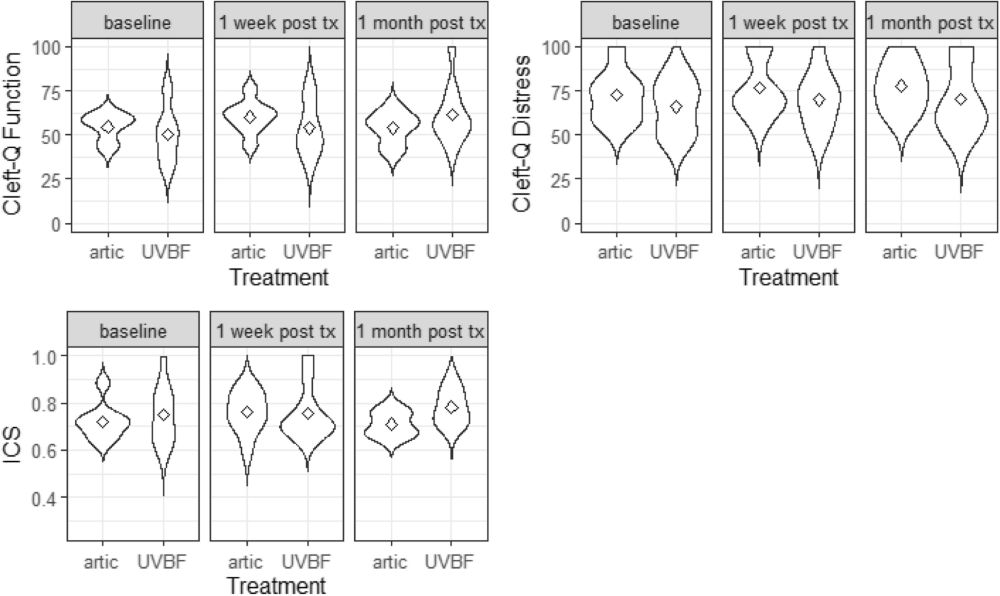

Attending many great talks at #ESSD2025 in Athens, and a great opportunity to present our work on using the compartmental tongue theory to reshape how we quantify tongue movement in swallowing.

10.10.2025 19:20 — 👍 3 🔁 2 💬 0 📌 0

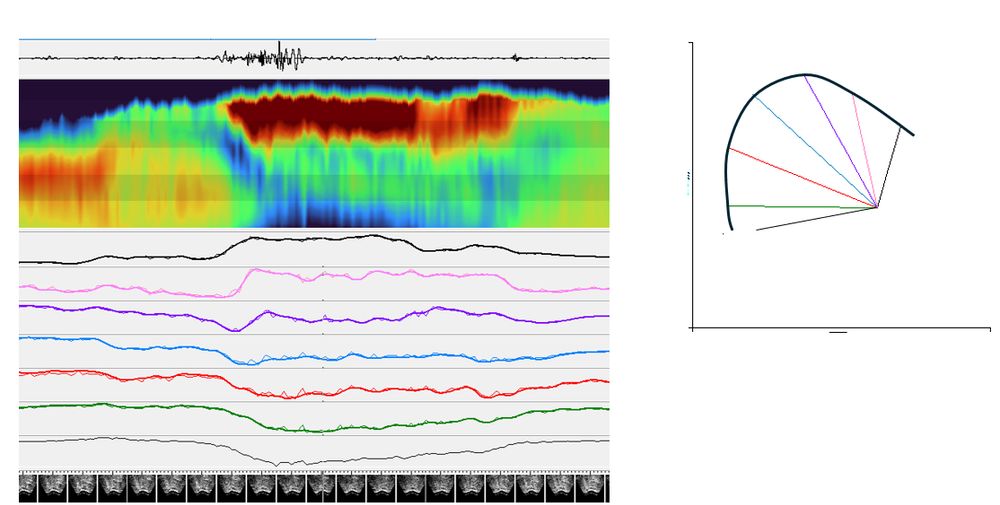

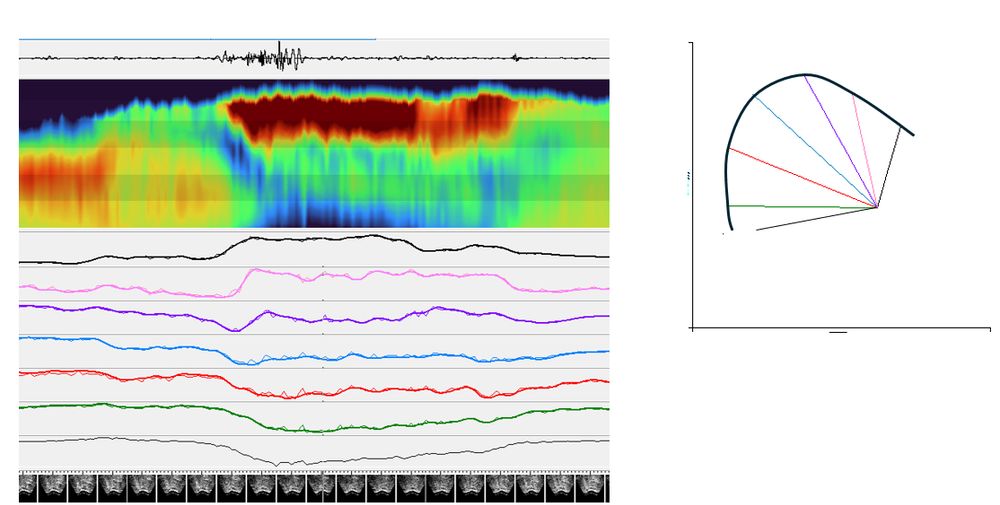

Note all the articulation happening in the posterior tongue and hyoid, which is not captured by EMA.

17.09.2025 15:54 — 👍 0 🔁 0 💬 0 📌 0

Co-registered EMA and ultrasound. From top left: Ultrasound with tongue contour, Ultrasound keypoints, 3D head with EMA sensors, Spectrogram, Glossogram showing vocal tract constrictions in red and cavities in blue, Waveform. Movie created by AAA app. Use settings to hear audio.

17.09.2025 15:54 — 👍 5 🔁 1 💬 1 📌 1

Yup.

13.09.2025 12:44 — 👍 1 🔁 0 💬 0 📌 0

🚨 Open PhD Position – Grenoble, France 🚨

Join us at GIPSA-lab to explore how Speech Language Models can learn like children: through physical and social interaction. Think AI, robots, development 🧠🤖🎙️

Fully funded (3 yrs) • @cnrs.fr / @ugrenoblealpes.bsky.social

Details 👉 tinyurl.com/bde988b3

01.09.2025 11:48 — 👍 8 🔁 7 💬 0 📌 0

I understand that you need to minimize kit to take to remote parts. A mirror would be convenient. I think we need to do two things. Change the 50mm camera for a wide angle one so that a peripheral mirror is in view. Then design a 45° mirror mount to fit on the side camera mount. I can try this out.

01.09.2025 15:05 — 👍 3 🔁 0 💬 0 📌 0

Hi Matt, AAA can only record one video channel for reasons to do with having to associate splines with input data streams and also the large amount of disk space that would accumulate. In the past we used a CCTV camera mixer. However, I purchased one recently and couldn't get it to work.

01.09.2025 15:05 — 👍 1 🔁 0 💬 1 📌 0

x.com/i/status/195...

Video of Prof Takayuki Arai with his vocal tract models at #Interspeech2025

Love this analogue demonstration.

22.08.2025 09:56 — 👍 2 🔁 0 💬 0 📌 0

We're thrilled to introduce ATHENA: Automatically Tracking Hands Expertly with No Annotations – our open-source, Python-based toolbox for 3D markerless hand tracking!

Paper: www.biorxiv.org/content/10....

16.08.2025 00:49 — 👍 52 🔁 7 💬 2 📌 3

YouTube video by Zongwei Li

DeepCode

Really? github.com/HKUDS/DeepCode

www.youtube.com/watch?v=PRgm...

Paper2Code: Convert research papers into working implementations

Text2Web: Generate frontend applications from descriptions

Text2Backend: Create scalable backend systems automatically

Auto Code Validation: Guaranteed working code

19.08.2025 10:23 — 👍 0 🔁 0 💬 0 📌 0

The work coming out of the Person lab is a must-read for me. www.biorxiv.org/content/10.1...

This new paper shows that cerebellar output neurons encode both predictive and corrective movements, mechanistically linking feedforward and feedback control.

14.08.2025 13:14 — 👍 0 🔁 0 💬 0 📌 0

Although I no longer subscribe to the Equilibrium Point Hypothesis as currently formulated, this proposal is intriguing.

Almanzor et al. (2025). Self-organising bio-inspired reflex circuits for robust motor coordination in artificial musculoskeletal systems.

iopscience.iop.org/article/10.1...

19.07.2025 13:47 — 👍 1 🔁 0 💬 0 📌 0

Not inconsistent with the proposal that an initial feedforward motor cortical pulse determines direction and velocity of movement and a step change near peak velocity modulates deceleration to bring the movement on target. This paper finds that the cerebellum generates the deceleration step change.

13.07.2025 15:11 — 👍 0 🔁 0 💬 0 📌 0

I believe the cerebellum and possibly motor nuclei learn and map the expected sensory input for a given set of feedforward muscle activations. If there is a mismatch, the cerebellum generates corrective output. If the mismatch persists the cerebellum slowly adapts to the new sensory expectation.

12.07.2025 14:19 — 👍 0 🔁 0 💬 0 📌 0
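The mismatch-and-adapt idea in the post above can be illustrated with a toy simulation. This is only a sketch under strong assumptions: a single scalar sensory signal, a simple error-driven (delta rule) update, and the hypothetical parameters `correction_gain` and `learning_rate`, none of which come from the post or from any specific cerebellar model.

```python
def simulate_adaptation(actual, expected=0.0, correction_gain=0.8,
                        learning_rate=0.1, n_trials=30):
    """Toy model: corrective output on mismatch, slow update of the expectation.

    All parameter names and values are illustrative assumptions.
    Returns a list of (mismatch, correction, expected) tuples, one per trial.
    """
    history = []
    for _ in range(n_trials):
        # Mismatch between the actual and the expected sensory input.
        mismatch = actual - expected
        # Fast corrective output that opposes the mismatch on this trial.
        correction = -correction_gain * mismatch
        # Slow adaptation: the expectation drifts toward the new sensory input.
        expected += learning_rate * mismatch
        history.append((mismatch, correction, expected))
    return history


# A persistent perturbation of size 1.0, starting from an expectation of 0.0.
history = simulate_adaptation(actual=1.0)
first_mismatch = history[0][0]
last_mismatch = history[-1][0]
```

In this sketch the corrective output is largest on the first trial and shrinks as the expectation converges on the new sensory input, which mirrors the slow adaptation described in the post.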

Nice paper but overlooks the groundbreaking and elegant work describing movement sequence learning. Sosnik, R., Hauptmann, B., Karni, A., & Flash, T. (2004). When practice leads to co-articulation: the evolution of geometrically defined movement primitives. Experimental Brain Research, 156, 422-438.

09.07.2025 10:20 — 👍 2 🔁 0 💬 1 📌 0

Video corresponding to above glossogram. Red line is midsagittal tongue contour automatically estimated using #DeepLabCut. Blue line indicates base of mandible to hyoid and purple line indicates base of mandible to short tendon. In collaboration with @drjoanma.bsky.social

27.05.2025 11:51 — 👍 2 🔁 0 💬 0 📌 1

Glossogram with dark red indicating constriction and blue diagonal (tongue compartment contracted) demonstrating peristaltic transfer of water bolus from oral-pharyngeal. This is easiest to explain as sequential extension of neuromuscular compartments of the tongue.

27.05.2025 11:51 — 👍 7 🔁 4 💬 1 📌 0

Great to see this out. Will read it carefully.

01.05.2025 10:28 — 👍 0 🔁 0 💬 1 📌 0

If your ultrasound displays the scanning frame rate, you can adjust the parameters until you get a good compromise between image quality and frame rate. However, you may still get occasional duplicated frames as the video buffering and scanning rates are not synchronized.

16.04.2025 10:40 — 👍 1 🔁 0 💬 3 📌 0

I suspect that your recordings have a scan frame rate of around 20Hz. Inside the ultrasound, the scanned frame data is converted into an image and passed to the video buffer. The 60Hz video outputs the most recent image in the buffer, but since the images are only updated at 20Hz, you get duplicates.

16.04.2025 10:40 — 👍 0 🔁 0 💬 1 📌 0
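The buffering effect described in the post above can be sketched in a few lines. This assumes idealized clocks that start in phase and a buffer that always holds the most recent completed scan. The function names are hypothetical and are not part of any ultrasound or AAA API.

```python
def displayed_scan_indices(scan_hz, video_hz, n_video_frames):
    """Index of the most recent scan in the buffer at each video frame.

    Assumes ideal, in-phase clocks (an illustrative simplification).
    """
    return [(k * scan_hz) // video_hz for k in range(n_video_frames)]


def count_duplicates(indices):
    """Number of video frames that repeat the scan shown in the previous frame."""
    return sum(1 for prev, cur in zip(indices, indices[1:]) if prev == cur)


# 20 Hz scanning captured through a 60 Hz video output: each scan appears 3 times.
indices = displayed_scan_indices(scan_hz=20, video_hz=60, n_video_frames=12)
duplicates = count_duplicates(indices)
print(indices)     # [0, 0, 0, 1, 1, 1, 2, 2, 2, 3, 3, 3]
print(duplicates)  # 8
```

When the scan rate does not divide the video rate evenly (for example 25 Hz into 60 Hz), the same calculation gives an uneven pattern of repeats, and real hardware with unsynchronized clocks will drift further from this ideal.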

Auld yin, fanatical patch birder, East Lothian, bird ringing trainer, nest recorder, moth counter, fly fisherman, CCU nurse

i’m a linguist. he/him https://jofrhwld.github.io/

SLHlab's main focus is to inform and influence evidence based clinical practice

Post doc w/ Carol Espy-Wilson @UMD Electrical and Computer Engineering; PhD CCC-SLP conducting clinical trials at Syracuse University. Developer of efficacious, theory-driven clinical AI speech tech.

All the Recent Rare & Scarce Bird News & Images from Lothian. Regular updates. Locals please add @birdinglothian.bsky.social to your messages

Official Bluesky feed of the Journal of Biomechanics

Editors-in-Chief: Frank Gijsen, Stephen Piazza, and Saeed Shirazi-Adl.

Posts by Associate Editor: Felipe Carpes.

The Society for the Neural Control of Movement: Advancing our understanding of how the brain controls movement.

www.ncm-society.org

academic at @phoneticslab.bsky.social :: speech production, vocal tract imaging, dynamical systems, computational modelling :: https://samkirkham.github.io

Senior Lecturer in Speech and Language Therapy, Queen Margaret University. Interested in the application of ultrasound in swallowing, motor speech disorders and speech prosody.

www.pinkflag.com

https://pinkflag.greedbag.com

@swimhq.bsky.social

The official(ish) account of the Auditory-Visual Speech Association (AVISA) AV 👄 👓 speech references, but mostly what interests me avisa.loria.fr

@immersionhq.bsky.social / @swimminginsound.bsky.social / @wirehq.bsky.social / @GitheadHQ.bsky.social etc. / @oraclebxl.bsky.social

www.swimminginsound.com / www.immersionhq.uk / www.slackcity.org.uk /

From Brighton!

UK and Ireland Child Speech Disorder Research Network. SLT researchers in children's Speech Sound Disorders #SSD #CAS https://t.co/j3o2bfa4nP

Motor control neuroscientist, Jefferson Moss Rehab Research Institute. Motor planning, learning, and cognitive-motor interactions. https://www.jefferson.edu/academics/colleges-schools-institutes/skmc/departments/rehabilitation/faculty/wong.html (he/him) 🏳️‍🌈

Professor of Phonetics at Lancaster University. Gàidhlig, Phonetics, Sociophonetics, Bilingualism, Articulation, Sociolinguistics. Otherwise in the mountains.

She/her

https://clairenance.github.io/

Family or local history oriented Scotland stories at https://noisybrain.blog/

“Fix the money, fix the world”

#Bitcoin

Jesus Is Lord

Once was speech technologist - Water of Leith, Edinburgh - Born 320.23 ppm

How do we move? I study brains and machines at York University (Assistant Professor). Full-time human.

Neural Control & Computation Lab

www.ncclab.ca

Thinking about the brain, spinal cord and how we move (and related neurotechnology). Into books, music, coffee, food, photography+art, animals & some humans. Now group leader at Champalimaud Research

#neuroskyence #Sensorimotor #compneurosky #Science