Nous Research x Solana Foundation · Luma

Join Nous Research and the Solana Foundation for a private gathering in the Meatpacking District to discuss decentralized AI and Nous's efforts to democratize…

Come join Nous and the Solana Foundation in NYC on Thursday, May 22nd, to discuss decentralized AI and Nous's efforts to democratize intelligence, including Psyche.

Limited capacity. Apply below👇

lu.ma/39b7e9pu?v=1

15.05.2025 16:38 — 👍 4 🔁 0 💬 0 📌 1

As always, we couldn't have gotten here without your help. Special thanks to our team, our community, and the open source movement.

14.05.2025 21:05 — 👍 1 🔁 0 💬 0 📌 0

Psyche’s initial training infrastructure is just the beginning of our journey. Going forward, we plan to integrate full post-training stages (supervised fine-tuning and reinforcement learning), inference, and other parallelizable workloads involved in creating and serving AI.

14.05.2025 21:05 — 👍 1 🔁 0 💬 1 📌 0

Looking ahead, we will draw model ideas from the community via our forum and Discord. By enabling highly parallel and scalable experimentation, we’re betting that the next innovation in model creation and design will come from the open source community.

14.05.2025 21:05 — 👍 0 🔁 0 💬 1 📌 0

The resulting model will be small enough to train on a single H/DGX and run on a 3090, but will be powerful enough to serve as the basis for strong reasoning models and creative pursuits. The model will be trained continually without a final annealing step, resulting in a true unaltered base model.

14.05.2025 21:05 — 👍 0 🔁 0 💬 1 📌 0

40B parameters. 20T tokens. MLA architecture.

We are launching testnet with the pre-training of a 40B parameter LLM:

- MLA Architecture

- Dataset consisting of FineWeb (14T) + FineWeb-2 minus some less common languages (4T), and The Stack v2 (1T)
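As a quick check on the dataset mix listed above, the sketch below adds up the three components and prints each one's share. The token counts are taken directly from the post. The listed components sum to 19T, slightly under the "20T tokens" headline figure, and the post does not explain the difference. The function and variable names are illustrative only.

```python
# Token counts in trillions, as listed in the post.
DATASET_TOKENS_T = {
    "FineWeb": 14,
    "FineWeb-2 (minus some less common languages)": 4,
    "The Stack v2": 1,
}


def token_mix(tokens):
    """Return (total, {name: share}) for a mapping of name -> token count."""
    total = sum(tokens.values())
    shares = {name: count / total for name, count in tokens.items()}
    return total, shares


total_t, shares = token_mix(DATASET_TOKENS_T)
print(f"Total: {total_t}T tokens")
for name, share in shares.items():
    print(f"{name}: {share:.1%}")
```

By this count, FineWeb makes up roughly 74% of the listed tokens.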

14.05.2025 21:05 — 👍 0 🔁 0 💬 1 📌 0

If you have 64+ H100 GPUs, contact engineering@nousresearch.com to apply to provide hardware to the network’s training pool.

14.05.2025 21:05 — 👍 0 🔁 0 💬 1 📌 0

While compute on the network needs to be trusted and approved at this time, we plan to support trustless, community-owned compute resources. For now, open source enthusiasts can contribute via our mining pool, and we will be onboarding more nodes over the coming weeks.

14.05.2025 21:05 — 👍 0 🔁 0 💬 1 📌 0

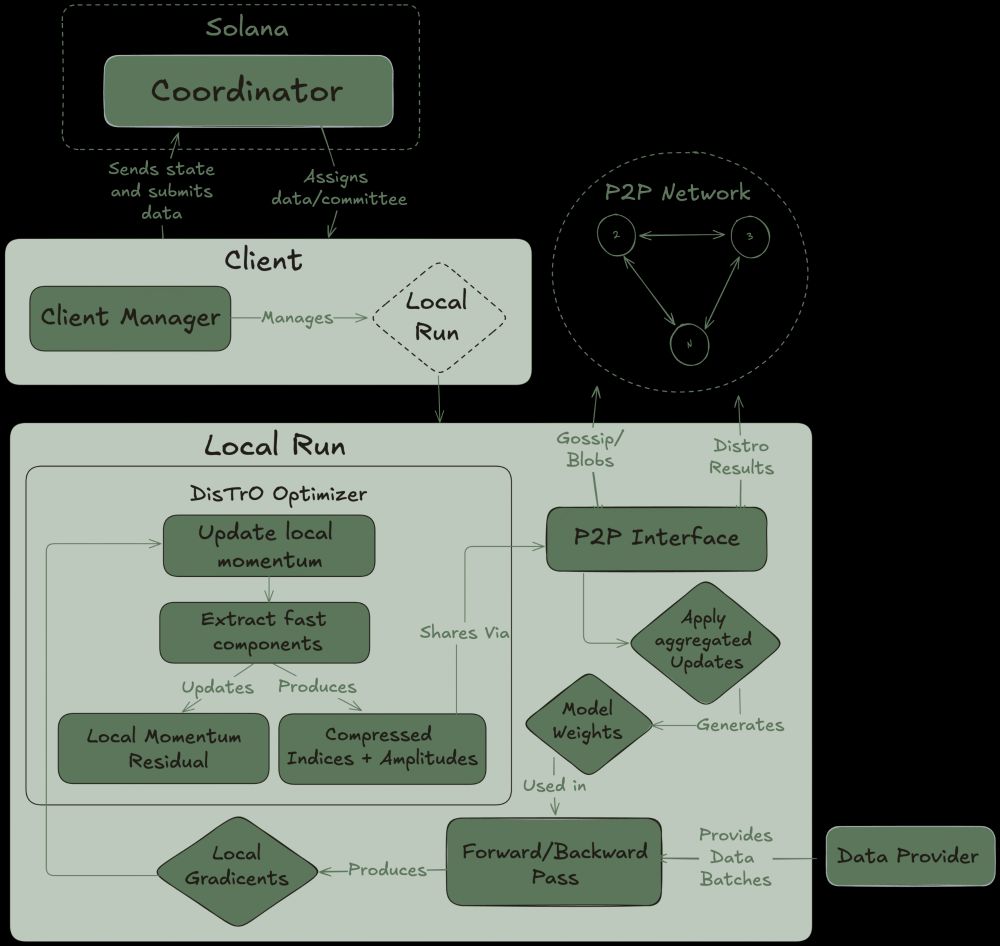

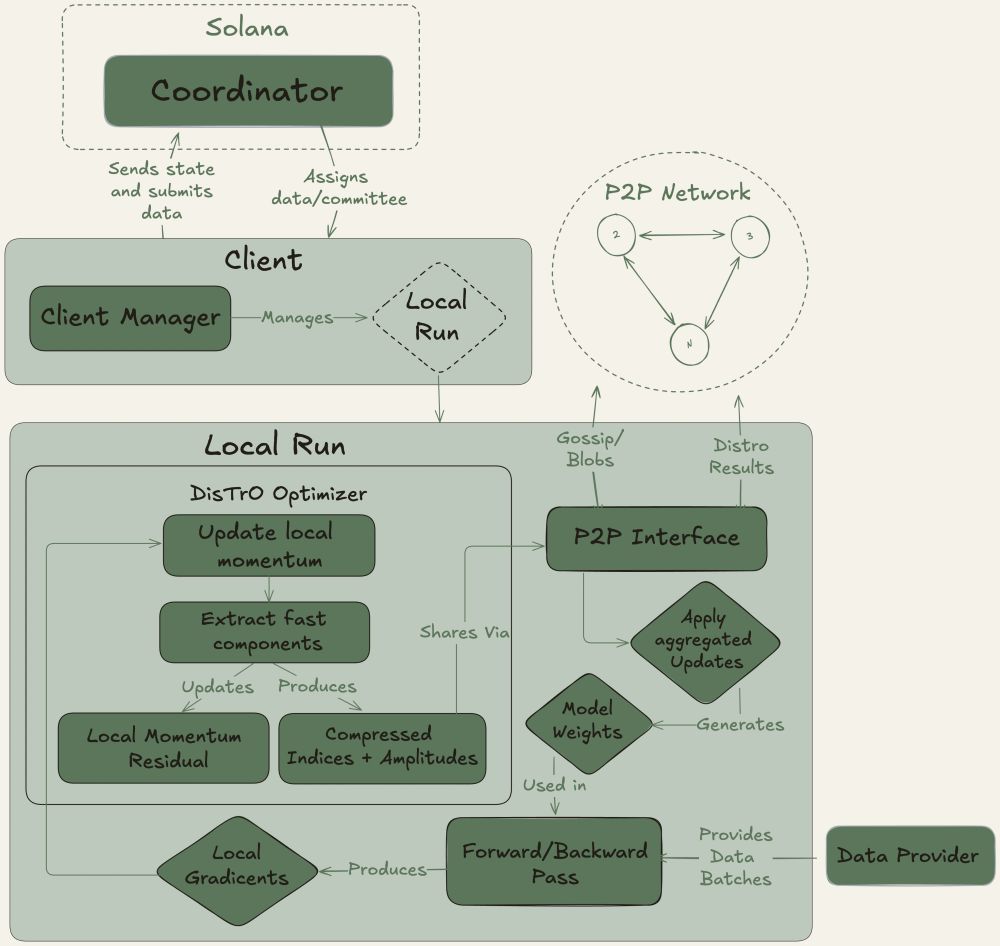

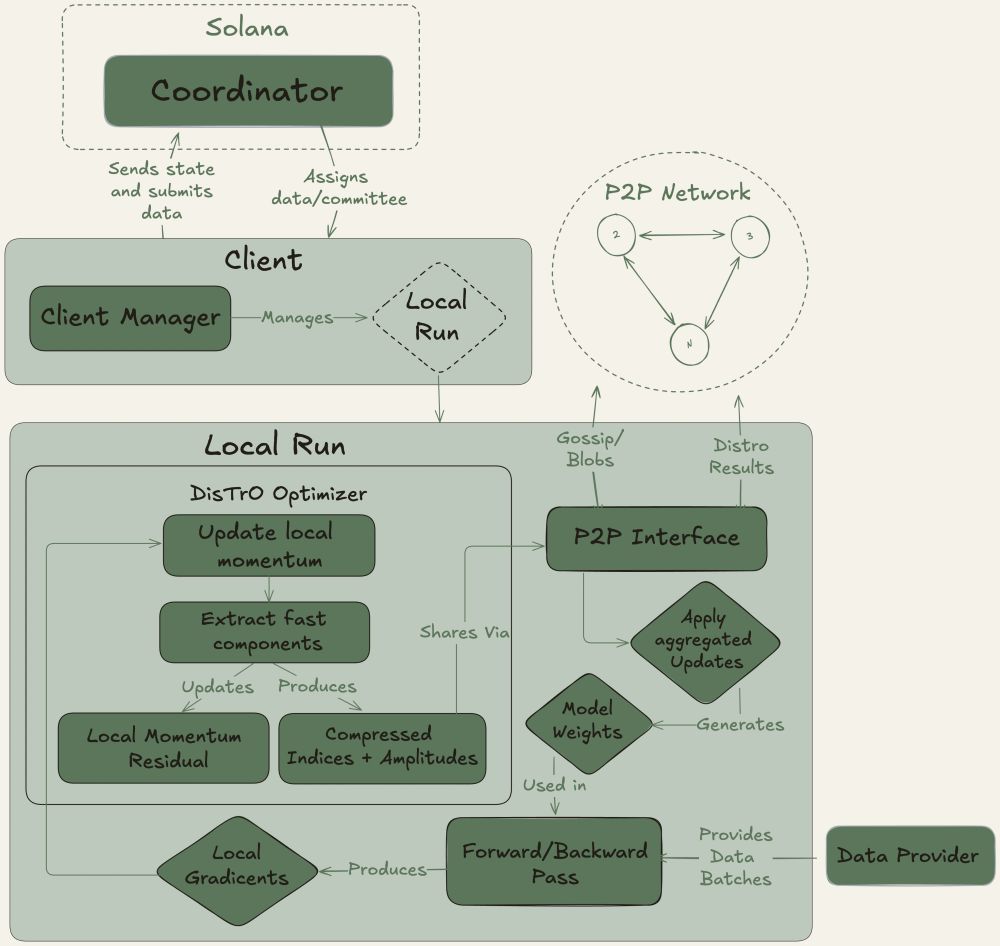

Psyche uses the Solana blockchain to decentralize parts of the core infrastructure for coordination and stores attestations for the nodes operating within the network. This design takes meaningful steps towards decentralization while ensuring training does not become too costly or redundant.

14.05.2025 21:05 — 👍 1 🔁 0 💬 1 📌 0

Training used to have a bandwidth constraint that kept the process centralized. In 2024, Nous's DisTrO optimizers broke through that constraint. With Psyche, we have created a custom peer-to-peer networking stack to coordinate globally distributed GPUs running DisTrO.

14.05.2025 21:05 — 👍 1 🔁 0 💬 1 📌 0

This run is the largest pre-training run conducted over the internet to date, surpassing previous runs that trained smaller models on far fewer tokens.

14.05.2025 21:05 — 👍 1 🔁 0 💬 1 📌 0

We are launching our testnet today with the pre-training of a 40B parameter LLM, a model powerful enough to serve as a foundation for future pursuits in open science.

14.05.2025 21:05 — 👍 2 🔁 0 💬 1 📌 0

Psyche is a decentralized training network that makes it possible to bring the world’s compute together to train powerful AI, giving individuals and small communities access to the resources required to create new, interesting, and unique large-scale models.

14.05.2025 21:05 — 👍 2 🔁 0 💬 1 📌 0

A diagram of the Psyche network. It shows the relationship between the Solana Coordinator, an individual training client, the DisTrO optimizer inside a client, forward/backward passes to create gradients, the transmission and dissemination of the created DisTrO results, and the ingestion of data from a data provider.

Announcing the launch of Psyche

nousresearch.com/nous-psyche/

Nous Research is democratizing the development of Artificial Intelligence. Today, we’re embarking on our greatest effort to date to make that mission a reality: The Psyche Network.

14.05.2025 21:05 — 👍 26 🔁 4 💬 1 📌 1

Nous Portal

Nous Research is a leader in the development of human-centric language models and simulators. Manage your account and API keys here.

To ensure a smooth rollout, we made a waitlist: portal.nousresearch.com

- Access will be granted on a first-come, first-served basis

- Once granted access, you can create API keys and purchase credits

- OpenAI-compatible API

- Right now all accounts start off with $5.00 in free credits.
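"OpenAI-compatible" generally means the service accepts requests in the OpenAI wire format, so existing client code only needs a different base URL and key. The sketch below builds (but does not send) a chat completion request using only the Python standard library. The base URL and model identifier are placeholders, since the post gives neither; check the portal for the real values.

```python
import json
import urllib.request

# Hypothetical values: the post does not give a base URL or model identifiers.
BASE_URL = "https://inference.example.invalid/v1"
MODEL = "example-hermes-model"


def build_chat_request(api_key, prompt, base_url=BASE_URL, model=MODEL):
    """Build (but do not send) a chat completion request in the OpenAI wire format."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


req = build_chat_request("YOUR_API_KEY", "Hello, Hermes!")
print(req.get_method(), req.full_url)
```

To actually send the request, pass `req` to `urllib.request.urlopen` once the placeholder URL, model name, and key have been replaced with real ones.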

13.03.2025 16:43 — 👍 5 🔁 0 💬 0 📌 0

Today we’re releasing our Inference API that serves Nous Research models. We heard your feedback, and built a simple system to make our language models more accessible to developers and researchers everywhere.

The initial release features two models - Hermes 3 Llama 70B and DeepHermes 3 8B Preview

13.03.2025 16:43 — 👍 7 🔁 0 💬 1 📌 0

Read more in our blog post: nousresearch.com/nous-psyche/

27.01.2025 20:14 — 👍 4 🔁 0 💬 1 📌 0

YouTube video by Nous Research

The Story of Psyche

Recent AI breakthroughs challenge the status quo narrative that only closed, mega labs have the ability to push the frontier of superintelligence.

Today we announce Nous Psyche, built on @solana.com

www.youtube.com/watch?v=XMWI...

27.01.2025 20:14 — 👍 18 🔁 5 💬 1 📌 1

Introducing a smol Hermes 3 LLM!

Hermes 3 3B is now available on Hugging Face, alongside quantized GGUF versions that make it even smaller.

More info and download links here: huggingface.co/NousResearch...

Hermes 3 3B was built by Teknium, Roger Jin, Jeffrey Quesnelle and "nullvaluetensor".

12.12.2024 17:12 — 👍 31 🔁 4 💬 3 📌 0

Thursday December 12th

Doors @ 6pm, Talks @ 7pm

DCTRL, 436 W Pender St, Vancouver

Open Entry. Food + Drink + Merch.

DisTrO Demystified - Jeffrey Quesnelle, Bowen Peng

Why Decentralization Matters - Mark Murdock

Mapping Uncertainty at Inference Time - _xjdr

10.12.2024 16:58 — 👍 16 🔁 1 💬 1 📌 0

A graphic of the Nous Girl inside a circle. The graphic is blue and white. The circle has text wrapped around the edges that reads "REBELLION TO TYRANTS IS OBEDIENCE TO GOD."

We’re here to put the power of artificial intelligence into the hands of the many rather than the privileged few.

02.12.2024 16:47 — 👍 27 🔁 2 💬 3 📌 2

DeMo: Decoupled Momentum Optimization

Training large neural networks typically requires sharing gradients between accelerators through specialized high-speed interconnects. Drawing from the signal processing principles of frequency decomp...

DeMo was created in March 2024 by Bowen Peng and Jeffrey Quesnelle and has been published on arXiv in collaboration with Diederik P. Kingma, co-founder of OpenAI and inventor of the Adam optimizer and VAEs.

The paper is available here: arxiv.org/abs/2411.19870

And code: github.com/bloc97/DeMo

02.12.2024 16:46 — 👍 15 🔁 2 💬 1 📌 0

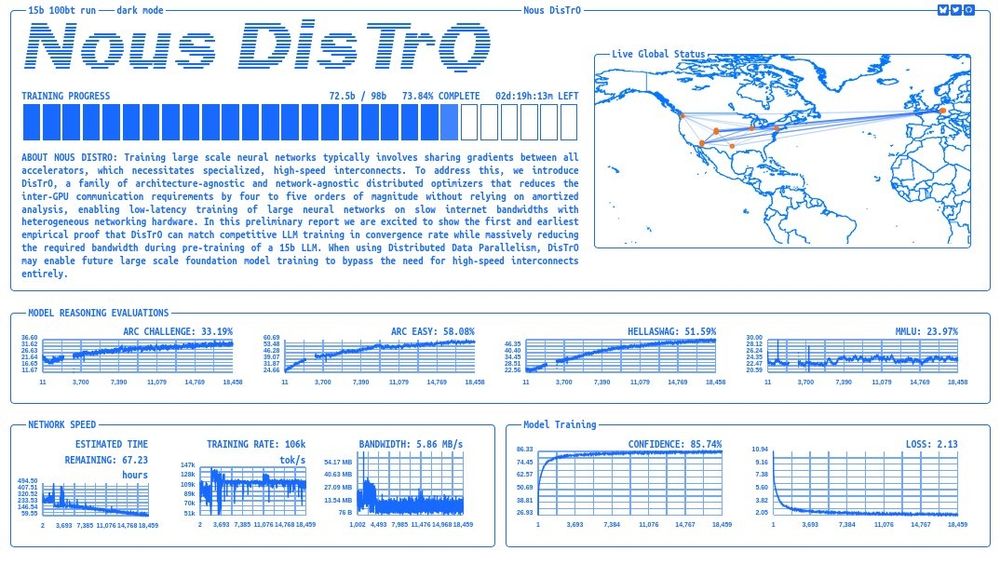

We harness both Nous DisTrO, our novel networking stack that reduces inter-GPU communication by up to 10,000x during pretraining, and the testnet code for Psyche, a decentralized network that builds on Nous DisTrO to autonomously coordinate compute for model training.

Psyche details coming soon.
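To get a feel for what a reduction of "up to 10,000x" would mean in practice, here is a back-of-envelope sketch. It assumes a 15B parameter model (the size of the run announced in this thread), 16-bit gradient values, and a simplified baseline in which each step exchanges one full gradient. The last two are assumptions for illustration, not details from the post, and the result is an upper-bound scenario rather than a measured figure.

```python
def gradient_bytes(num_params, bytes_per_value):
    """Size of one full gradient, in bytes."""
    return num_params * bytes_per_value


def reduced_bytes(full_bytes, reduction_factor):
    """Size of the exchanged data after applying a reduction factor."""
    return full_bytes / reduction_factor


PARAMS = 15_000_000_000  # 15B parameter run from this thread
BYTES_PER_VALUE = 2      # assumption: 16-bit gradient values
REDUCTION = 10_000       # the "up to" figure quoted in the post

full = gradient_bytes(PARAMS, BYTES_PER_VALUE)
reduced = reduced_bytes(full, REDUCTION)
print(f"Full gradient per step: {full / 1e9:.0f} GB")
print(f"At 10,000x reduction:   {reduced / 1e6:.0f} MB")
```

Under these assumptions, a 30 GB exchange per step shrinks to about 3 MB, which is the kind of difference that makes training over ordinary internet links plausible.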

02.12.2024 16:44 — 👍 7 🔁 0 💬 1 📌 0

This run shows a loss curve and convergence rate that match or exceed those of centralized training.

Our paper and code on DeMo, the foundational research that led to Nous DisTrO, is now available (linked below).

02.12.2024 16:44 — 👍 7 🔁 0 💬 1 📌 0

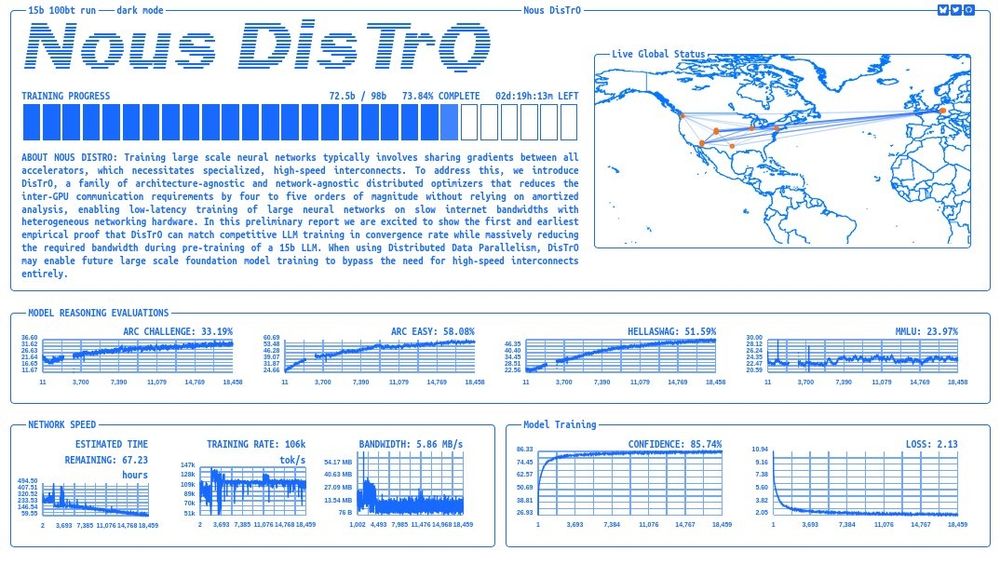

A screenshot of the Nous DisTrO website, showing a world map with some nodes marked and lines between them, and many graphs showing training progress.

Nous Research announces the pre-training of a 15B parameter language model over the internet, using Nous DisTrO and heterogeneous hardware contributed by our partners at Oracle, Lambda Labs, Northern Data Group, Crusoe, and the Andromeda Cluster.

You can watch the run LIVE: distro.nousresearch.com

02.12.2024 16:42 — 👍 92 🔁 18 💬 7 📌 20