nice....

15.03.2025 12:47 —

I was lucky enough to be invited to give a talk at Cornell last week on our new paper about the value of RL in fine-tuning! Because of my poor time management skills, the talk isn't as polished as I'd like, but I think the "vibes" are accurate enough to share: youtu.be/E4b3cSirpsg.

06.03.2025 18:19 —

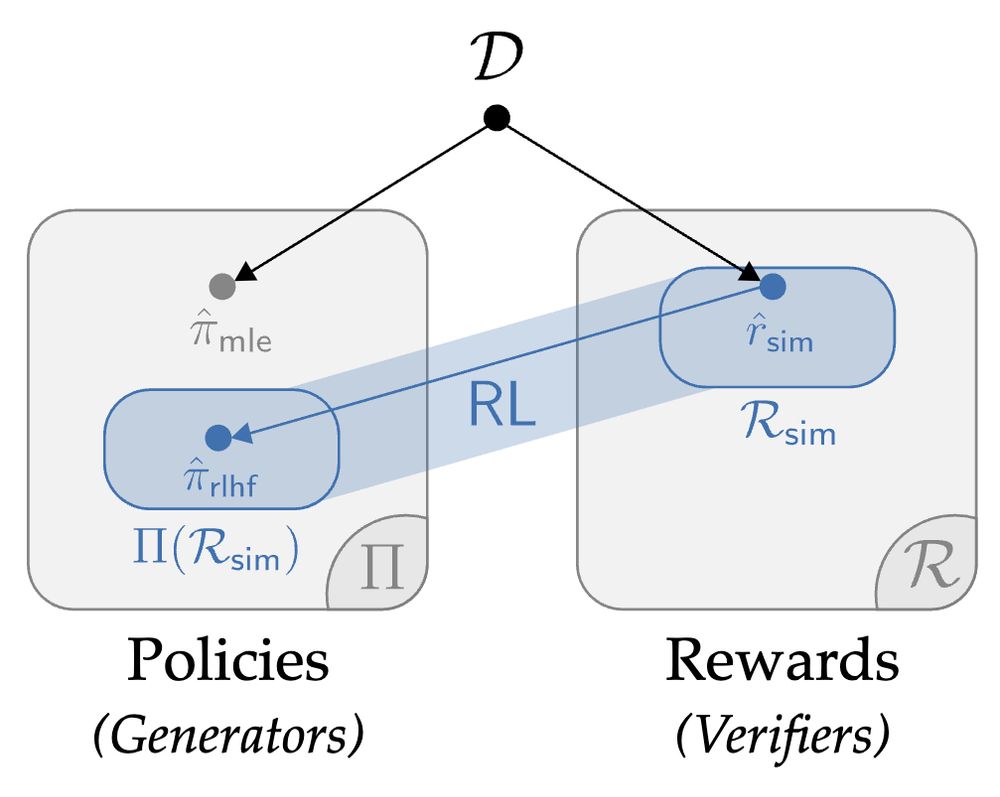

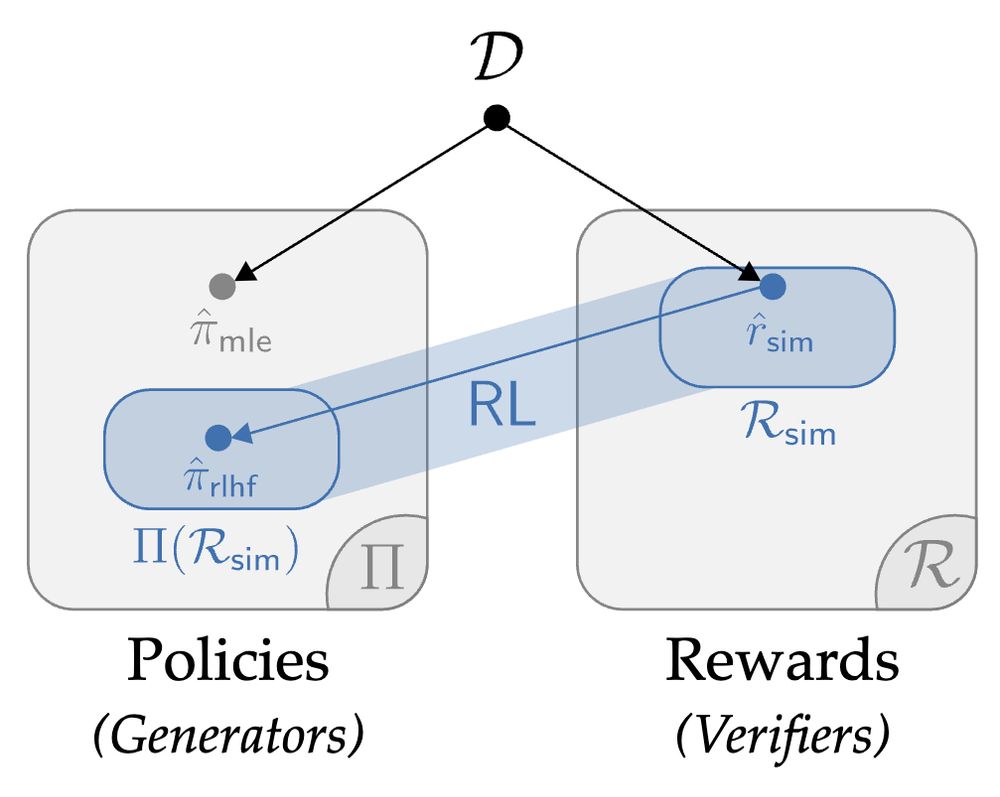

1.5 yrs ago, we set out to answer a seemingly simple question: what are we *actually* getting out of RL in fine-tuning? I'm thrilled to share a pearl we found on the deepest dive of my PhD: the value of RL in RLHF seems to come from *generation-verification gaps*. Get ready to 🤿:

04.03.2025 20:59 —

can you present other people's results :-)

04.03.2025 14:18 —

that makes sense to me.... i should go to bed....

06.02.2025 00:51 —

A Minimaximalist Approach to Reinforcement Learning from Human Feedback

We present Self-Play Preference Optimization (SPO), an algorithm for reinforcement learning from human feedback. Our approach is minimalist in that it does not require training a reward model nor unst...

@gswamy.bsky.social et al. propose SPO, which builds a game from preferences and solves for its minimax winner. It handles non-Markovian, intransitive, and stochastic preferences. Nice empirical evaluation, ranging from small demonstrative domains to large RL domains (MuJoCo).

arxiv.org/abs/2401.04056

2/3.
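To make "solving for the minimax winner" a bit more concrete, here is a toy, tabular sketch (my own illustration, not the paper's implementation, which trains a neural policy with an RL optimizer). It assumes a small, hypothetical preference matrix `P` over a finite set of options, where `P[i][j]` is the probability that option i is preferred to option j. Each option is rewarded with its win rate against the current policy (self-play), and the policy is updated with multiplicative weights (Hedge); because the game is symmetric, the averaged policy approximates the minimax winner. The function name and step size are hypothetical choices.

```python
import math


def minimax_winner_self_play(P, iters=5000, eta=0.05):
    """Approximate the minimax (von Neumann) winner of a preference matrix.

    Toy sketch: P[i][j] is the (assumed) probability that option i beats option j,
    with P[i][j] + P[j][i] == 1. Returns the average policy over all iterations.
    """
    n = len(P)
    policy = [1.0 / n] * n  # start from the uniform policy
    avg = [0.0] * n
    for _ in range(iters):
        # Accumulate the running average of the policy iterates.
        for i in range(n):
            avg[i] += policy[i] / iters
        # Self-play reward: win rate of option i against a sample from the current policy.
        rewards = [sum(P[i][j] * policy[j] for j in range(n)) for i in range(n)]
        # Hedge / multiplicative-weights update, renormalized to avoid overflow.
        weights = [p * math.exp(eta * r) for p, r in zip(policy, rewards)]
        total = sum(weights)
        policy = [w / total for w in weights]
    return avg


# Intransitive preferences (rock-paper-scissors): no single option wins outright.
rps = [[0.5, 0.0, 1.0],
       [1.0, 0.5, 0.0],
       [0.0, 1.0, 0.5]]
print(minimax_winner_self_play(rps))
```

On the rock-paper-scissors matrix the minimax winner is the uniform mixture, which a reward model that assumes transitive preferences can't represent; when one option beats every other (a Condorcet winner), the averaged policy instead concentrates on that option.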

21.11.2024 12:30 —

I have become a fan of the game-theoretic approaches to RLHF, so here are two more papers in that category! (with one more tomorrow 😅)

1. Self-Play Preference Optimization (SPO).

2. Direct Nash Optimization (DNO).

🧵 1/3.

21.11.2024 12:30 —

1....

21.11.2024 00:40 —