Hey Chat, boss man said he doesn't like my artwork.

07.08.2025 19:04

@depot.dev.bsky.social

40x faster Docker builds on 3x faster GitHub Actions runners. Automatic intelligent caching, blazing-fast compute and zero configuration ⚡

The Rust crab before Depot: ☠️

After plugging into Depot Cache + faster runners: 🦀💨

Full guide in 🧵

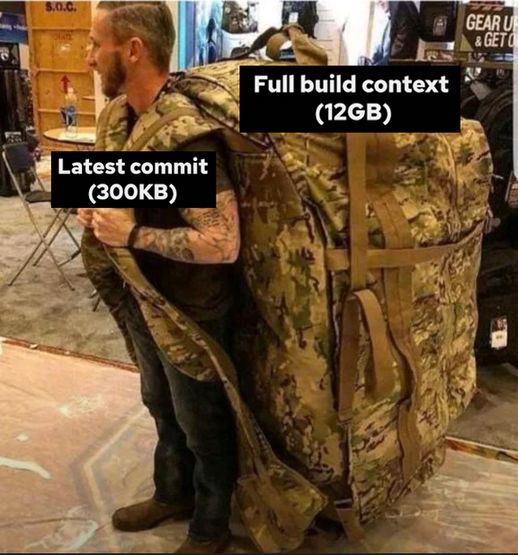

One semicolon = 12GB of pain

04.08.2025 15:19

Your CI/CD pipeline shouldn't be a mystery box. With the right monitoring, you can predict and prevent failures before they happen.

Full deep dive with examples and code in the blog post: depot.dev/blog/guide-t...

Next time your GitHub Actions jobs fail "randomly": check resource usage, not just error messages; look for patterns across multiple runs; and separate environment issues from code issues.
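One way to act on "look for patterns across multiple runs" is to flag jobs whose outcome differs on the same commit: same code, different result points at the environment. A minimal Python sketch, assuming you have already exported run history (job name, commit SHA, pass/fail) from somewhere such as the GitHub API; the `Run` record and its field names are hypothetical, not a GitHub schema.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Run:
    """One CI run. Hypothetical record; fill it from your own run history."""
    job: str
    commit_sha: str
    passed: bool


def find_flaky_jobs(runs: list[Run]) -> dict[str, set[str]]:
    """Map each job to the commits on which it both passed and failed.

    Identical code with different outcomes suggests an environment cause
    (resources, network, test ordering) rather than a code bug.
    """
    outcomes: dict[tuple[str, str], set[bool]] = defaultdict(set)
    for run in runs:
        outcomes[(run.job, run.commit_sha)].add(run.passed)

    flaky: dict[str, set[str]] = defaultdict(set)
    for (job, sha), seen in outcomes.items():
        if seen == {True, False}:
            flaky[job].add(sha)
    return dict(flaky)


history = [
    Run("build", "a1b2c3", True),
    Run("build", "a1b2c3", False),  # rerun of the same commit failed
    Run("test", "a1b2c3", False),
    Run("test", "a1b2c3", False),   # fails consistently: more likely a real bug
]
print(find_flaky_jobs(history))  # {'build': {'a1b2c3'}}
```

A job that fails consistently on one commit is left out on purpose; that pattern usually means the code, not the runner.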

Fix the infrastructure, not just the symptoms.

Stop treating symptoms and fix root causes. Most "random" failures follow patterns when you have the right data.

Memory spikes, CPU saturation, and disk issues all have signatures. Learn to read them.
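To make "signatures" concrete, here is a small Python classifier that maps a failed step's exit code and log text to a likely resource cause. The regex patterns are illustrative assumptions to tune against your own logs. The one hard fact baked in is that exit code 137 means the process received SIGKILL (128 + 9), which is what the Linux OOM killer sends. CPU saturation rarely prints a distinctive message, so it is left to timing and load metrics instead.

```python
import re
from typing import Optional

# Illustrative patterns (assumptions), checked in order; tune them to your logs.
SIGNATURES = [
    ("disk_full", re.compile(r"No space left on device", re.IGNORECASE)),
    ("memory_killed", re.compile(r"\bKilled\b|out of memory|OOMKilled", re.IGNORECASE)),
    ("network_timeout", re.compile(r"ETIMEDOUT|timed out|connection reset", re.IGNORECASE)),
]


def classify_failure(log_text: str, exit_code: Optional[int] = None) -> str:
    """Return a coarse label for why a CI step probably failed."""
    # 137 = 128 + SIGKILL (9): the process was killed, very often by the OOM killer.
    if exit_code == 137:
        return "memory_killed"
    for label, pattern in SIGNATURES:
        if pattern.search(log_text):
            return label
    return "unknown"


print(classify_failure("write /tmp/layer: No space left on device"))  # disk_full
print(classify_failure("", exit_code=137))                            # memory_killed
print(classify_failure("npm ERR! network ETIMEDOUT"))                 # network_timeout
```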

The debugging pattern that actually works: start simple with basic logging for one-off issues. As your CI/CD scales, invest in proper observability.

Don't waste hours re-running flaky jobs. Understand WHY they're failing.

"No space left on device" speedrun: run "docker system prune -f" to clean up stopped containers, unused networks, dangling images, and build cache.

Check your .dockerignore file and remove build artifacts. If you're still tight on space, it's time for a bigger runner.
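The speedrun above can be scripted as a guard step that only prunes when space is actually low. A minimal Python sketch using the standard library; the 10 GiB default threshold is an assumption, so size it to your largest build.

```python
import shutil
import subprocess


def free_gib(path: str = "/") -> float:
    """Free space on the filesystem holding `path`, in GiB (roughly df's Avail column)."""
    return shutil.disk_usage(path).free / 2**30


def ensure_disk_space(min_free_gib: float = 10.0, path: str = "/", run=subprocess.run) -> bool:
    """Prune Docker data only if free space is below the threshold.

    Returns True if at least `min_free_gib` is free afterwards. `run` is
    injectable so the logic can be tested without Docker installed.
    """
    if free_gib(path) >= min_free_gib:
        return True
    try:
        # -f skips the interactive confirmation prompt.
        run(["docker", "system", "prune", "-f"], check=False)
    except FileNotFoundError:  # docker CLI not installed on this runner
        return False
    return free_gib(path) >= min_free_gib
```

If `ensure_disk_space()` still returns False, that's the signal to look at .dockerignore or move to a bigger runner rather than pruning again.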

CPU bottleneck immediate fixes: add more CPU cores (again, this costs more), limit parallel operations, and check how many cores you actually have with "nproc".
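Limiting parallelism works best when the limit comes from the machine rather than a hard-coded number. A Python sketch that caps concurrency by both core count and memory; the per-job memory figure is an assumption you'd measure for your own build, and the result would feed whatever runs work in parallel (for example `make -j`).

```python
import os
from typing import Optional


def available_cpus() -> int:
    """CPUs this process may use, like `nproc` (honours CPU affinity on Linux)."""
    if hasattr(os, "sched_getaffinity"):
        return len(os.sched_getaffinity(0))
    return os.cpu_count() or 1


def pick_parallelism(per_job_mem_mib: int, total_mem_mib: int,
                     cpus: Optional[int] = None) -> int:
    """Run no more parallel jobs than there are cores, or than fit in memory."""
    cpus = cpus if cpus is not None else available_cpus()
    fits_in_memory = total_mem_mib // per_job_mem_mib
    return max(1, min(cpus, fits_in_memory))


# e.g. 4 cores, 7 GiB RAM, ~2 GiB per compile job -> 3 jobs, not 4
print(pick_parallelism(per_job_mem_mib=2048, total_mem_mib=7168, cpus=4))  # 3
```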

Smart approach: use matrix strategies to distribute work across multiple runners instead of overwhelming one.
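With a matrix strategy, each runner needs a deterministic slice of the work. A minimal round-robin sharding sketch in Python; how the shard index and count reach the script (matrix values passed as arguments or environment variables) is up to your workflow, and the test file names are hypothetical.

```python
def shard(items: list[str], shard_index: int, shard_count: int) -> list[str]:
    """Return the slice of `items` that one matrix runner should handle.

    Sorting first makes the split deterministic regardless of discovery order;
    round-robin keeps shard sizes within one item of each other.
    """
    if shard_count < 1 or not 0 <= shard_index < shard_count:
        raise ValueError(f"invalid shard {shard_index} of {shard_count}")
    return sorted(items)[shard_index::shard_count]


# Hypothetical test files split across two matrix runners.
test_files = [f"tests/test_{name}.py" for name in ("api", "auth", "billing", "cli", "db")]
for i in range(2):
    print(i, shard(test_files, i, 2))
# 0 ['tests/test_api.py', 'tests/test_billing.py', 'tests/test_db.py']
# 1 ['tests/test_auth.py', 'tests/test_cli.py']
```

This balances by file count, not by duration; if a few files dominate runtime, splitting by recorded timings would balance better.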

High memory usage immediate fixes: upgrade the runner size (costs more, but works), enable build caching, and set memory limits on processes.
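One way to "set memory limits on processes" from a Python-driven build is `RLIMIT_AS`, applied to a child process just before it starts. A Linux-oriented sketch: an allocation past the cap fails inside that child instead of pushing the whole runner into the OOM killer. Caveat: `RLIMIT_AS` caps virtual address space, not resident memory, so runtimes that reserve large address ranges up front (JVMs, Node/V8) may refuse to start under it; for those, their own flags (`-Xmx`, `--max-old-space-size`) or `docker run --memory` are better fits.

```python
import resource
import subprocess
import sys


def run_with_memory_limit(cmd: list[str], limit_mib: int) -> subprocess.CompletedProcess:
    """Run `cmd` with its virtual address space capped at `limit_mib` MiB (POSIX)."""
    limit_bytes = limit_mib * 2**20

    def apply_limit() -> None:
        # Runs in the child between fork and exec, so only the child is limited.
        resource.setrlimit(resource.RLIMIT_AS, (limit_bytes, limit_bytes))

    return subprocess.run(cmd, preexec_fn=apply_limit, capture_output=True, text=True)


# A child that tries to grab 2 GiB under a 512 MiB cap fails with MemoryError.
result = run_with_memory_limit([sys.executable, "-c", "bytearray(2 * 1024**3)"], limit_mib=512)
print(result.returncode != 0)
```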

Long-term fixes: use multi-stage Docker builds, break monolithic jobs into smaller ones, and hunt down memory leaks in your test suites.
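If your test suite happens to be Python, the standard library's `tracemalloc` gives a cheap first pass at leak hunting: call a test body repeatedly and see whether traced memory keeps growing. This is a sketch rather than a profiler; other languages have their own equivalents, and the 5-repeat default is arbitrary.

```python
import gc
import tracemalloc
from typing import Callable


def memory_growth_bytes(fn: Callable[[], object], repeats: int = 5) -> int:
    """Bytes of traced memory still held after `repeats` extra calls to fn().

    Steady growth across repeats suggests objects are being retained.
    """
    tracemalloc.start()
    try:
        fn()  # warm-up: let caches and lazy initialisation settle first
        gc.collect()
        baseline, _ = tracemalloc.get_traced_memory()
        for _ in range(repeats):
            fn()
        gc.collect()
        current, _ = tracemalloc.get_traced_memory()
        return current - baseline
    finally:
        tracemalloc.stop()


_cache: list[bytearray] = []


def leaky_case() -> None:
    _cache.append(bytearray(100_000))  # retained forever: a leak


def clean_case() -> None:
    bytearray(100_000)  # freed as soon as the call returns


print(memory_growth_bytes(leaky_case) > 400_000)  # True
print(memory_growth_bytes(clean_case) < 100_000)  # True
```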

Self-hosted runners give you the superpower of continuous monitoring.

Install agents like Datadog directly on your runners to collect baseline metrics every 30-60 seconds, not just when jobs fail. It's a game changer for debugging.
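Before an agent is in place, you can still get a crude baseline on a self-hosted runner. A Python sketch that samples load average, available memory, and free disk on a fixed interval. It assumes a Linux host (`/proc/meminfo`, `os.getloadavg`), the 30-second default mirrors the range above, and `emit` is a placeholder for wherever you ship the data. It is not a replacement for a real agent.

```python
import os
import shutil
import time
from typing import Callable, Optional


def parse_mem_available_mib(meminfo_text: str) -> Optional[float]:
    """Extract MemAvailable (reported in kB) from /proc/meminfo contents."""
    for line in meminfo_text.splitlines():
        if line.startswith("MemAvailable:"):
            return int(line.split()[1]) / 1024
    return None


def snapshot(disk_path: str = "/") -> dict:
    try:
        with open("/proc/meminfo") as f:
            mem = parse_mem_available_mib(f.read())
    except OSError:  # not Linux: no /proc/meminfo
        mem = None
    return {
        "ts": time.time(),
        "load1": os.getloadavg()[0],
        "mem_available_mib": mem,
        "disk_free_gib": shutil.disk_usage(disk_path).free / 2**30,
    }


def sample(interval_s: float = 30.0, emit: Callable[[dict], None] = print,
           count: Optional[int] = None,
           sleep: Callable[[float], None] = time.sleep) -> None:
    """Emit a snapshot every `interval_s` seconds; `count=None` runs forever."""
    taken = 0
    while count is None or taken < count:
        emit(snapshot())
        taken += 1
        if count is None or taken < count:
            sleep(interval_s)


sample(count=1)  # one snapshot now; sample() alone loops every 30 s
```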

If you're debugging multiple jobs regularly, searching through GitHub logs gets old fast.

Consider sending metrics to Datadog or a similar observability tool. Point-in-time memory and CPU data beats guessing every time.
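Getting point-in-time numbers into Datadog can be as simple as a UDP datagram to a local Datadog Agent, which listens for DogStatsD on port 8125 by default. The Python sketch below formats gauges by hand to show the wire format; in practice Datadog's official client libraries handle this for you. The metric and tag names are hypothetical.

```python
import socket
from typing import Optional


def dogstatsd_gauge(name: str, value: float, tags: Optional[dict[str, str]] = None) -> str:
    """Format one gauge in DogStatsD's text format: <name>:<value>|g|#k:v,k2:v2"""
    line = f"{name}:{value}|g"
    if tags:
        line += "|#" + ",".join(f"{k}:{v}" for k, v in sorted(tags.items()))
    return line


def send_gauge(name: str, value: float, tags: Optional[dict[str, str]] = None,
               host: str = "127.0.0.1", port: int = 8125) -> None:
    """Fire-and-forget over UDP, so a missing agent usually won't fail the step."""
    payload = dogstatsd_gauge(name, value, tags).encode()
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(payload, (host, port))


# Hypothetical metric and tag names.
print(dogstatsd_gauge("ci.runner.mem_available_mib", 1830.5, {"job": "build", "runner": "linux-x64"}))
# ci.runner.mem_available_mib:1830.5|g|#job:build,runner:linux-x64
```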

Pro tip: Wrap expensive operations with the "time" command to see exactly where delays occur.

You'll be surprised how often the "fast" step is eating all your resources and causing mysterious slowdowns.
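If your build steps are driven from a Python script, the same idea as "time" fits in a few lines of standard library. A sketch with purely illustrative step names; `time.sleep` stands in for the real work.

```python
import time
from contextlib import contextmanager
from typing import Iterator


@contextmanager
def timed(step: str, results: dict[str, float]) -> Iterator[None]:
    """Record wall-clock seconds spent inside the `with` block under `step`."""
    start = time.perf_counter()
    try:
        yield
    finally:  # record the time even if the step raises
        results[step] = time.perf_counter() - start


def slowest_first(results: dict[str, float]) -> list[tuple[str, float]]:
    return sorted(results.items(), key=lambda kv: kv[1], reverse=True)


timings: dict[str, float] = {}
with timed("install deps", timings):
    time.sleep(0.2)   # stand-in for the real work
with timed("lint", timings):
    time.sleep(0.05)
for step, seconds in slowest_first(timings):
    print(f"{step:>12}: {seconds:.2f}s")
```

Sorting slowest-first is what surfaces the "fast" step that quietly isn't.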

Quick win: add memory and disk checks before and after your build steps using the "free -h" and "df -h" commands in your workflow.

No dependencies needed, just visibility into what's consuming your resources. You'll be shocked at what you discover.
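A scripted take on the before/after check: run one step, then report how much disk it consumed and the peak resident memory of its processes. A Python sketch using only the standard library (POSIX). Note that `ru_maxrss` is reported in KiB on Linux but in bytes on macOS, and for `RUSAGE_CHILDREN` it is the peak of the largest child waited on so far, not a per-step sum.

```python
import resource
import shutil
import subprocess
import sys


def run_step_with_report(cmd: list[str], disk_path: str = "/") -> dict:
    """Run one build step and report its exit code, disk usage, and peak memory."""
    free_before = shutil.disk_usage(disk_path).free
    proc = subprocess.run(cmd)
    free_after = shutil.disk_usage(disk_path).free

    # Peak RSS of the largest waited-for child so far (not a sum, not per-step).
    peak = resource.getrusage(resource.RUSAGE_CHILDREN).ru_maxrss
    peak_mib = peak / 2**20 if sys.platform == "darwin" else peak / 1024

    return {
        "returncode": proc.returncode,
        # Other processes writing to the same disk can skew this number.
        "disk_consumed_mib": (free_before - free_after) / 2**20,
        "peak_child_rss_mib": peak_mib,
    }


# Stand-in step that touches ~50 MiB of memory.
report = run_step_with_report([sys.executable, "-c", "data = b'x' * (50 * 1024**2)"])
print(report)
```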

Most "mysterious" failures aren't code issues at all. They're resource problems: memory exhaustion, CPU bottlenecks, disk space, network timeouts.

But GitHub's logs don't make this obvious. You need to get proactive about monitoring what's actually happening.

The real cost isn't just frustration... it's team productivity.

When jobs fail randomly, devs lose trust in CI/CD. They start ignoring legitimate failures. And when you need that emergency hotfix during an incident? Your flaky pipeline becomes a business problem.

🧵 GitHub Actions failing for no apparent reason? Your code hasn't changed but workflows keep breaking?

You're not alone. Here's how to actually debug what's going wrong (and it's probably not your code) 👇

depot.dev/blog/now-available-claude-code-sessions-in-depot

30.07.2025 20:34

we automated our GitHub Actions runner updates with Claude.

CI pulls upstream → tries arm64.patch → fails? Claude fixes it → commits → done.

sauce: depot claude --resume means CI never loses context.

no more “wait what was i doing”

Context persistence FTW.
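The control flow of that pipeline is simple enough to sketch. A minimal Python version under stated assumptions: the remote and branch (`upstream`, `main`) and the commit message are placeholders, and `fix_patch` is a hypothetical hook where you would hand the failure to an agent session. The post uses `depot claude --resume` for that step, but its exact arguments aren't shown, so the sketch leaves the call to you.

```python
import subprocess
from typing import Callable


def update_fork(pull: Callable[[], object],
                patch_applies: Callable[[], bool],
                fix_patch: Callable[[], object],
                apply_and_commit: Callable[[], object],
                max_fix_attempts: int = 1) -> str:
    """pull -> try patch -> on failure let the fixer retry -> commit, or escalate."""
    pull()
    attempts = 0
    while not patch_applies():
        if attempts >= max_fix_attempts:
            return "needs-human"  # stop looping; a person takes over
        fix_patch()
        attempts += 1
    apply_and_commit()
    return "committed"


def git(*args: str, check: bool = True) -> subprocess.CompletedProcess:
    return subprocess.run(["git", *args], capture_output=True, text=True, check=check)


# Wiring inside a checkout of the fork (remote/branch names are placeholders):
# update_fork(
#     pull=lambda: git("pull", "upstream", "main"),
#     patch_applies=lambda: git("apply", "--check", "arm64.patch", check=False).returncode == 0,
#     fix_patch=lambda: ...,  # hypothetical: hand the conflict to your agent session
#     apply_and_commit=lambda: (git("apply", "--index", "arm64.patch"),
#                               git("commit", "-m", "Apply arm64.patch")),
# )
```

Capping fix attempts keeps an agent from looping forever on a patch that genuinely needs a human.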

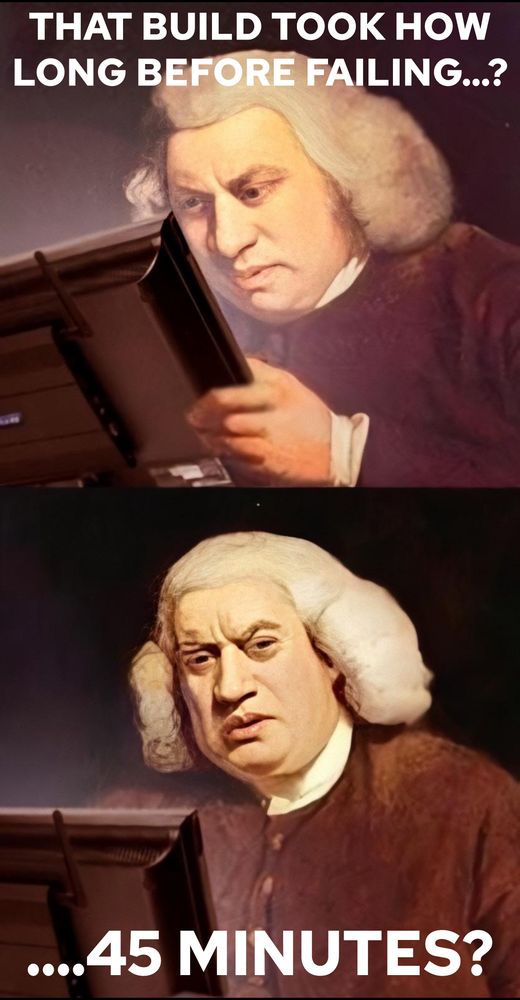

Waiting for a build to finish be like...

29.07.2025 21:13

Your code is fine. Your GitHub runner isn’t.

Most “mystery” CI failures = resource constraints:

👉 Memory killed

👉 CPU throttled

👉 Disk full

Full guide to debugging GitHub Actions in 🧵

I mean, a fact is a fact...

22.07.2025 02:05

Software engineers living in the dark ages be like…

18.07.2025 15:42

Let's play two truths and a lie...

Which of these is NOT true of our Co-Founder and CTO, Jacob:

1. He used to write programs with pen and paper

2. He prefers light mode

3. He used to be a beekeeper

If your team maintains forks or spends too much time on CI maintenance, Claude Code Sessions might be your salvation.

Read the full breakdown: depot.dev/blog/How-we-...

What used to consume hours of developer time every week now runs automatically in the background.

Zero manual patch conflicts

Breaking changes flagged automatically

Developers focus on features, not fork maintenance

This is what AI automation should look like.

Our daily CI workflow now:

Check for upstream updates → Auto-apply/fix ARM64 patches with Claude → Analyze commits for breaking changes → Create draft PRs with full context → Human review takes minutes instead of hours

For change analysis, Claude reviews all upstream commits since last update, compares against our historical issue database, flags potential breaking changes, and outputs structured JSON for easy parsing.

Smart automation that actually understands context.
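The post doesn't publish its JSON schema, so the one below is invented for illustration: a list of commits, each with a SHA, a summary, a `breaking` flag, and related issue references. A Python sketch that parses such output and turns it into a draft-PR body a human can review quickly.

```python
import json
from dataclasses import dataclass, field


@dataclass
class Finding:
    """One analyzed upstream commit (hypothetical schema)."""
    sha: str
    summary: str
    breaking: bool = False
    related_issues: list[str] = field(default_factory=list)


def parse_findings(raw: str) -> list[Finding]:
    """Parse the (hypothetical) analysis JSON into typed records."""
    data = json.loads(raw)
    return [
        Finding(
            sha=c["sha"],
            summary=c["summary"],
            breaking=bool(c.get("breaking", False)),
            related_issues=list(c.get("related_issues", [])),
        )
        for c in data["commits"]
    ]


def draft_pr_body(findings: list[Finding]) -> str:
    """Summarise for the human reviewer, listing only potential breaking changes."""
    breaking = [f for f in findings if f.breaking]
    lines = [
        f"Upstream commits analyzed: {len(findings)}",
        f"Potential breaking changes: {len(breaking)}",
    ]
    for f in breaking:
        issues = ", ".join(f.related_issues) or "none"
        lines.append(f"- {f.sha[:7]} {f.summary} (related issues: {issues})")
    return "\n".join(lines)


raw = """{"commits": [
  {"sha": "3f9c2ab71d", "summary": "Remove deprecated runner flag", "breaking": true, "related_issues": ["#42"]},
  {"sha": "b81e04c9aa", "summary": "Fix typo in docs"}
]}"""
print(draft_pr_body(parse_findings(raw)))
# Upstream commits analyzed: 2
# Potential breaking changes: 1
# - 3f9c2ab Remove deprecated runner flag (related issues: #42)
```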