Errata-2: Increased penalty on cache misses and branch misses increases the CPI (cycles per instruction) and lowers IPC.

The article has all the details correctly explained.

13.07.2025 14:22 — 👍 0 🔁 0 💬 0 📌 0

Errata-1: I meant to write the law as performance = 1 / (instruction count x cycles per instruction x cycle time).

13.07.2025 14:22 — 👍 0 🔁 0 💬 1 📌 0

In my latest article, I show this connection with the help of several common optimizations:

- loop unrolling

- function inlining

- SIMD vectorization

- branch elimination

- cache optimization

If you are someone who thinks about performance, I think you will find it worth your time.

13.07.2025 13:50 — 👍 0 🔁 0 💬 1 📌 0

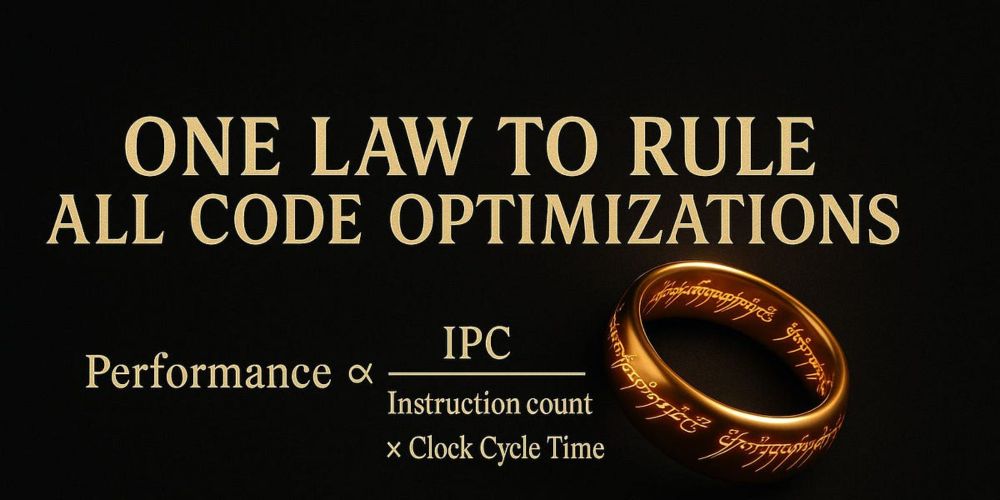

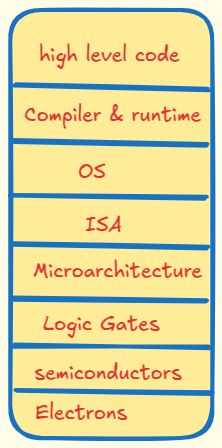

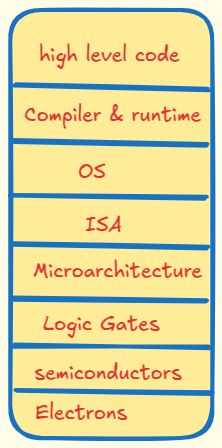

Reasoning about microarchitectural code optimizations also works very similarly. Each optimization affects one or more of these factors:

- number of instructions executed (dynamic instruction count)

- instructions executed per cycle (IPC)

- CPU clock cycle

13.07.2025 13:50 — 👍 0 🔁 0 💬 1 📌 0

As an example: when increasing the depth of the instruction pipeline, CPU architects can use this law to decide how much can they increase the depth. Increasing the depth reduces the cycle time, but it indirectly increases the IPC as cache miss and branch miss penalties become more severe.

13.07.2025 13:50 — 👍 0 🔁 0 💬 1 📌 0

The Iron Law is used by CPU architects to analyse how a change in the CPU microarchitecture will drive the performance of the CPU.

The law defines CPU performance in terms of three factors:

Performance = 1 / (instruction count x instructions per cycle x cycle time)

13.07.2025 13:50 — 👍 0 🔁 0 💬 1 📌 0

This is where you wish for a theory to systematically reason about the optimizations so that you know why they work and why they don't work. A framework that turns the guesses into surgical precision.

Does something like this exist? Maybe there is: the Iron Law of performance.

13.07.2025 13:50 — 👍 0 🔁 0 💬 1 📌 0

People talk about profiling to find bottlenecks, but no one explains how to fix them. Many devs shoot in the dark and when the optimization backfires, they aren't sure how to proceed.

13.07.2025 13:50 — 👍 3 🔁 1 💬 1 📌 0

Skipping computer architecture was my biggest student mistake. Hardware’s execution model dictates memory layout, binaries, compiler output, and runtimes. If you want to build systems, learn how the CPU works. I wrote an article on this:

blog.codingconfessions.com/p/seeing-the...

21.05.2025 04:41 — 👍 1 🔁 1 💬 0 📌 0

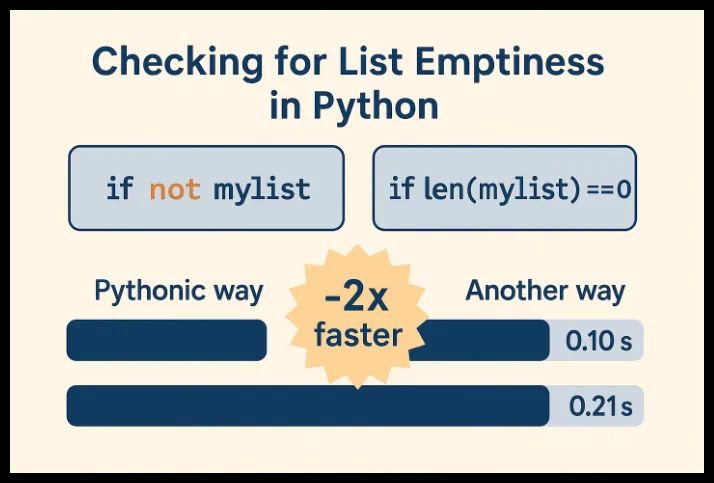

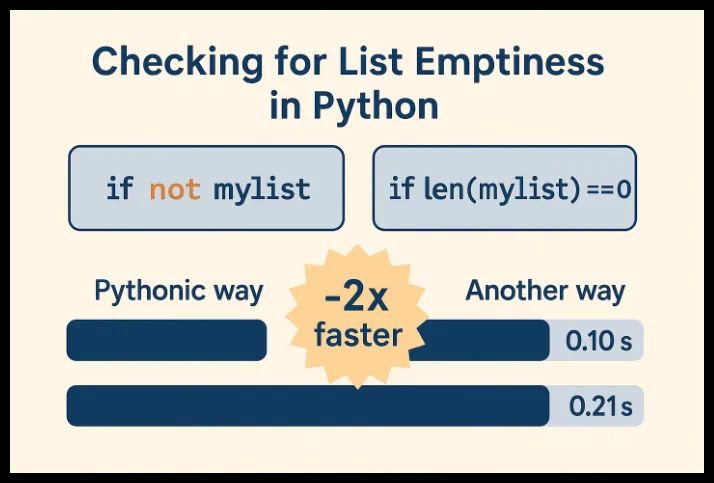

If you want a more detailed explanation of what is happening here, and why the `not` operator doesn't need to do the same thing: see my article.

blog.codingconfessions.com/p/python-per...

12.04.2025 08:43 — 👍 1 🔁 0 💬 0 📌 0

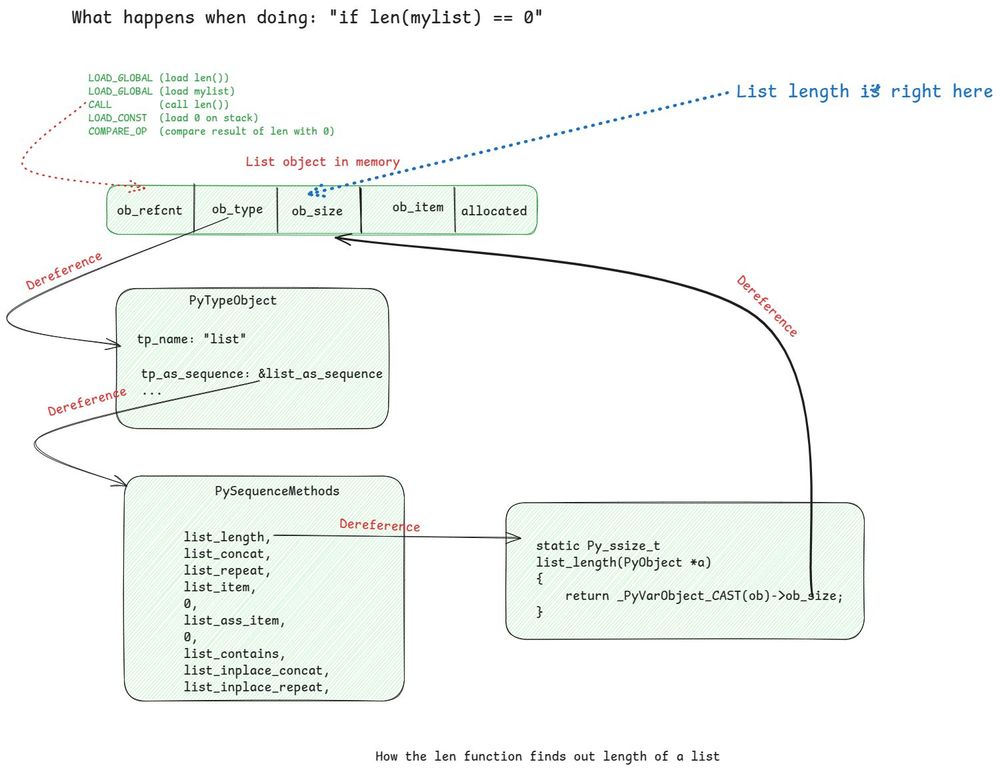

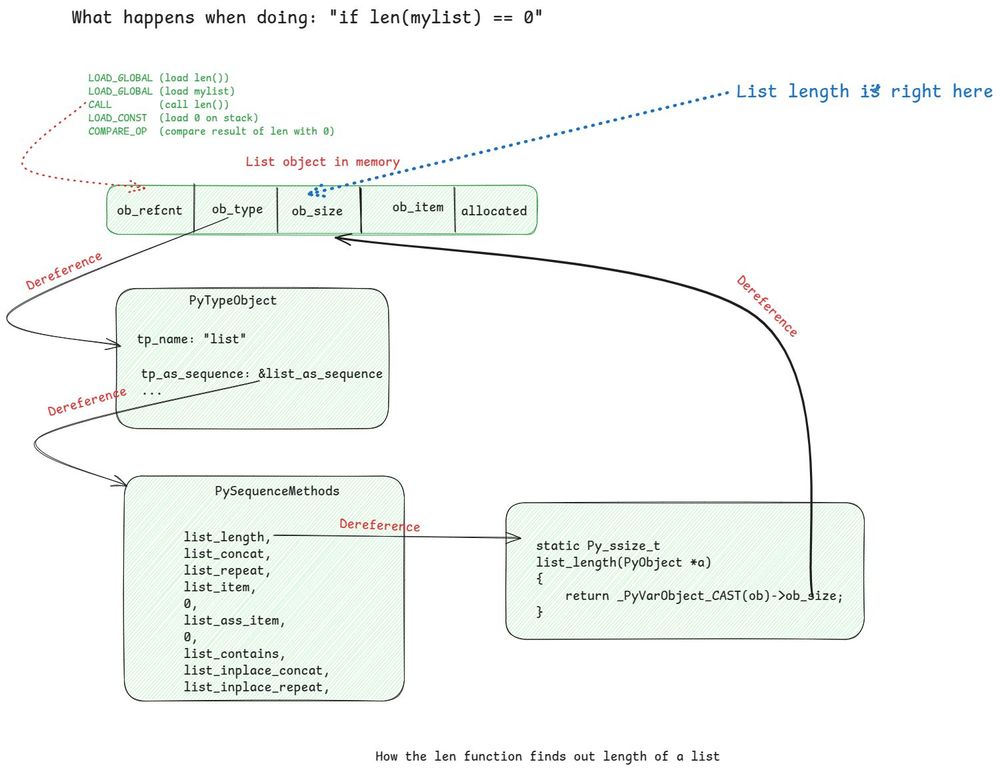

Here’s a fun visual showing how len() finds the length of a list in Python.

The size is stored right inside the object, but len() takes a five-pointer detour, only to land back where it started.

This is why if not mylist is ~2x faster for emptiness checks!

12.04.2025 08:43 — 👍 6 🔁 2 💬 1 📌 0

Hardware-Aware Coding: CPU Architecture Concepts Every Developer Should Know

Write faster code by understanding how it flows through your CPU

My article is written with zero assumption of any prior background in computer architecture. It uses real-world analogies to explain how the CPU works and why these features exist. And gives code examples to put all of that knowledge into action.

blog.codingconfessions.com/p/hardware-a...

28.03.2025 08:48 — 👍 5 🔁 0 💬 0 📌 0

A mispredicted branch usually wastes 20-30 cycles. So you don't really want to be missing branches in a critical path. For that you need to know how the branch predictors work and techniques to help it predict the branches better.

In the article you will find a couple of example on how to do that

28.03.2025 08:48 — 👍 1 🔁 0 💬 1 📌 0

If the prediction is right, your program finishes execution faster.

If the prediction is wrong, then the speculative work goes wasted and the CPU has to start from scratch to fetch the right set of instructions and execute them.

28.03.2025 08:48 — 👍 0 🔁 0 💬 1 📌 0

Waiting until that happens means the instruction pipeline is not doing anything, which wastes resources.

To avoid this wastage of time and resource the CPU employs branch predictors to predict the branch direction and speculatively execute those instructions.

28.03.2025 08:48 — 👍 0 🔁 0 💬 1 📌 0

Finally, branch prediction.

All real-world code is non-linear in nature, it consists of branches, such as if/else, switch case, function calls, loops, etc. The execution flow depends on the branch result and that is not known until the processor executes it.

28.03.2025 08:48 — 👍 0 🔁 0 💬 1 📌 0

To build a performant system, you need to write code which takes advantage of how the caches work.

In the article I explain how caches work and how can you write code to take advantage of caches to avoid the penalty of slow main memory access.

28.03.2025 08:48 — 👍 0 🔁 0 💬 1 📌 0

E.g. an instruction could be finish in 1 cycle but often its operands are in main memory and retrieving them from there takes hundreds of cycles. To hide this high latency, caches are used. Fetching from L1 cache takes only 3-4 cycles.

28.03.2025 08:48 — 👍 0 🔁 0 💬 1 📌 0

While ILP enables the hardware to execute several instructions per cycle, those instructions often are bottlenecked on memory.

28.03.2025 08:48 — 👍 0 🔁 0 💬 1 📌 0

But it is only possible when your code provides enough independent instructions to the hardware. Usually compilers try to optimize for it, but sometimes you need to do it yourself as well.

In the article I explain how ILP works with code examples to demonstrate how you can take advantage of it.

28.03.2025 08:48 — 👍 0 🔁 0 💬 1 📌 0

Instruction level parallelism (ILP) enables the processors to execute multiple instructions of your program in parallel, which results in better utilization of execution resources and faster execution of your code.

28.03.2025 08:48 — 👍 0 🔁 0 💬 1 📌 0

In my latest article I have described the mindset that is required to achieve this. I explain the three key architectural features of modern CPUs which is responsible for the performance of most of our code: instruction level parallelism, caches, and branch prediction.

28.03.2025 08:48 — 👍 0 🔁 0 💬 1 📌 0

When you see an expert optimize a piece of code, it may look like dark magic. In reality, these experts have a deep understanding of the entire computing stack, and when they look at an application profile, they quickly understand the bottlenecks.

28.03.2025 08:48 — 👍 2 🔁 0 💬 1 📌 0

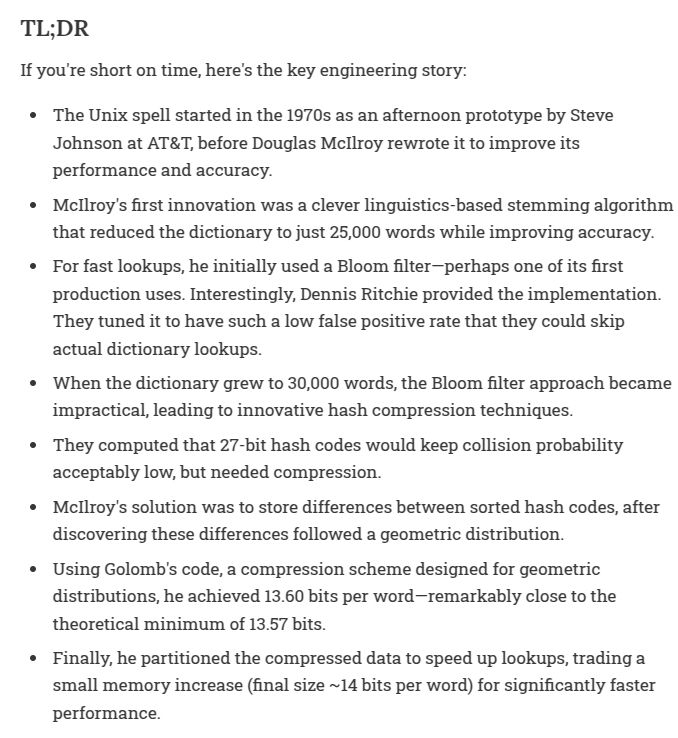

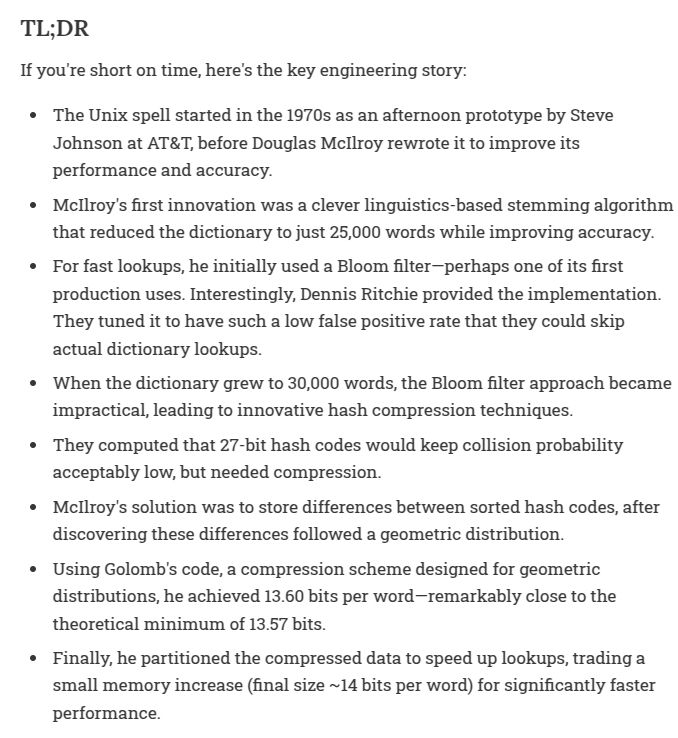

How do you fit a 250kB dictionary in 64kB of RAM and still perform fast lookups? For reference, even with gzip -9, you can't compress this file below 85kB.

In the 1970s, Douglas McIlroy faced this exact challenge while implementing the spell checker for Unix at AT&T.

19.01.2025 03:52 — 👍 14 🔁 7 💬 1 📌 0

Thank you Suvash, really appreciate that you shared it.

24.03.2025 13:46 — 👍 1 🔁 0 💬 1 📌 0

Hardware-Aware Coding: CPU Architecture Concepts Every Developer Should Know

Write faster code by understanding how it flows through your CPU

The second post is by @abhi9u.bsky.social on some common CPU architecture concepts (instruction pipelining, memory caching &speculative execution). Abhi has done a great job with the analogies in this one.

blog.codingconfessions.com/p/hardware-a...

24.03.2025 13:28 — 👍 2 🔁 1 💬 2 📌 0

Thank you, Teiva :)

21.03.2025 16:53 — 👍 0 🔁 0 💬 0 📌 0

Nostarch has C++ crash course, but it is 700+ pages. The language has become a monster.

18.02.2025 04:59 — 👍 1 🔁 0 💬 0 📌 0

Computer History and Architecture.

Writes The Chip Letter.

thechipletter.substack.com

Programmer & researcher, co-creator of https://ohmjs.org. 🇨🇦 🇩🇪 🇪🇺

Co-author of https://wasmgroundup.com — learn Wasm by building a simple compiler in JavaScript.

Prev: CDG/HARC, Google, BumpTop

💻 Software engineer at Google

☕ The Coder Cafe newsletter https://thecoder.cafe

📖 100 Go Mistakes author https://100go.co/book

🏠 https://teivah.dev

改善

https://rdivyanshu.github.io/

Google Chief Scientist, Gemini Lead. Opinions stated here are my own, not those of Google. Gemini, TensorFlow, MapReduce, Bigtable, Spanner, ML things, ...

Founder at ReliScore.com, Visiting Professor of Practice at IITBombay (CSE dept/TrustLab), Instructor at GenWise.in, and a wannabe YouTuber https://www.youtube.com/@TheFutureIQ/). Erdős–Bacon number 7.

Python Triage Member | Focusing on CPython #LKD #Python #ArchLinux #Django #eBPF

https://github.com/furkanonder/

Passionate about compilers & programming languages. GraalVM founder & project lead. VP at Oracle. Expressed opinions are my own. For DM: contact@thomaswue.dev

Sr Sw Eng @Datadoghq, optimize software running on JVM. Love performance topics and mechanical sympathy supporter. Java Champion & @javamissionctrl committer

Assistant Professor at Virginia Tech || Compilers, Programming Languages, and HPC || https://kirshanthans.github.io

breaking databases @tur.so W1 '21 @recursecenter.bsky.social

excited about databases, storage engines and message queues

In a transitional period involving graphs / networks, large language modes, big data and network motifs.

Prefer common sense over hype. Employed at @marimo.io, building calmcode.io and dearme.email. Also blogs over at https://koaning.io.

incoming MIT prof. & director of FLAME lab (https://flame.csail.mit.edu/).

building new languages and compilers to make hardware design fast, fun, and correct

Shopify / Royal Academy of Engineering Research Chair in Language Engineering. https://tratt.net/laurie/

Portland-based mathematician and software engineer. Building a homomorphic encryption compiler at Google.

https://jeremykun.com

https://pimbook.org

https://pmfpbook.org

https://buttondown.email/j2kun

https://heir.dev

CS prof at UC Riverside in Programming Languages and Software Engineering. Original author of NullAway. https://manu.sridharan.net