I am disappointed in the AI discourse steveklabnik.com/writing/i-am...

28.05.2025 17:33 — 👍 917 🔁 180 💬 212 📌 89

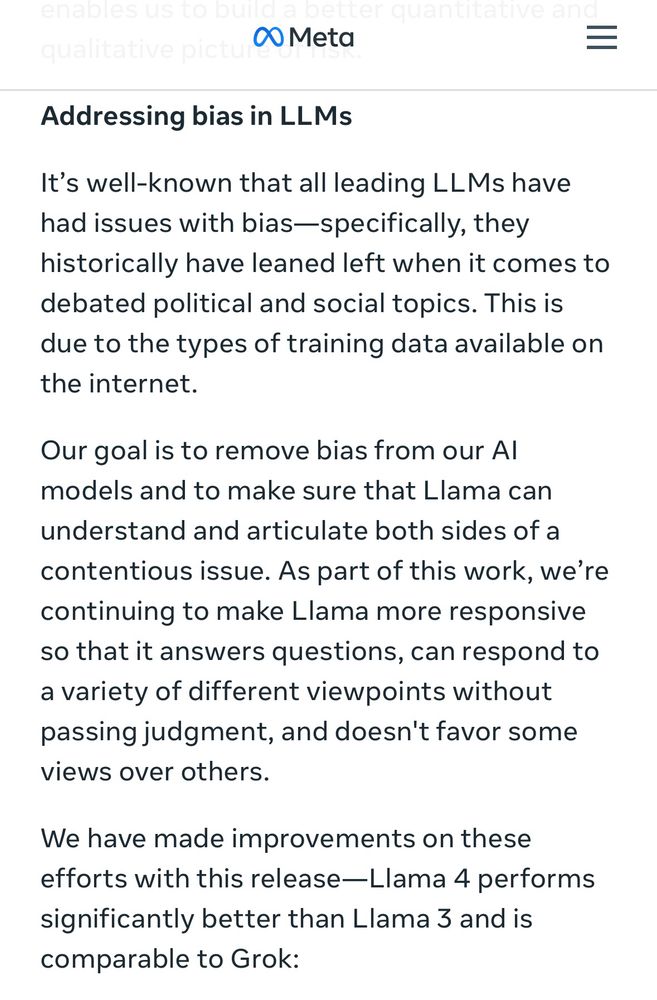

Meta

Addressing bias in LLMs

It's well-known that all leading LLMs have had issues with bias: specifically, they historically have leaned left when it comes to debated political and social topics. This is due to the types of training data available on the internet.

Our goal is to remove bias from our AI models and to make sure that Llama can understand and articulate both sides of a contentious issue. As part of this work, we're continuing to make Llama more responsive so that it answers questions, can respond to a variety of different viewpoints without passing judgment, and doesn't favor some views over others.

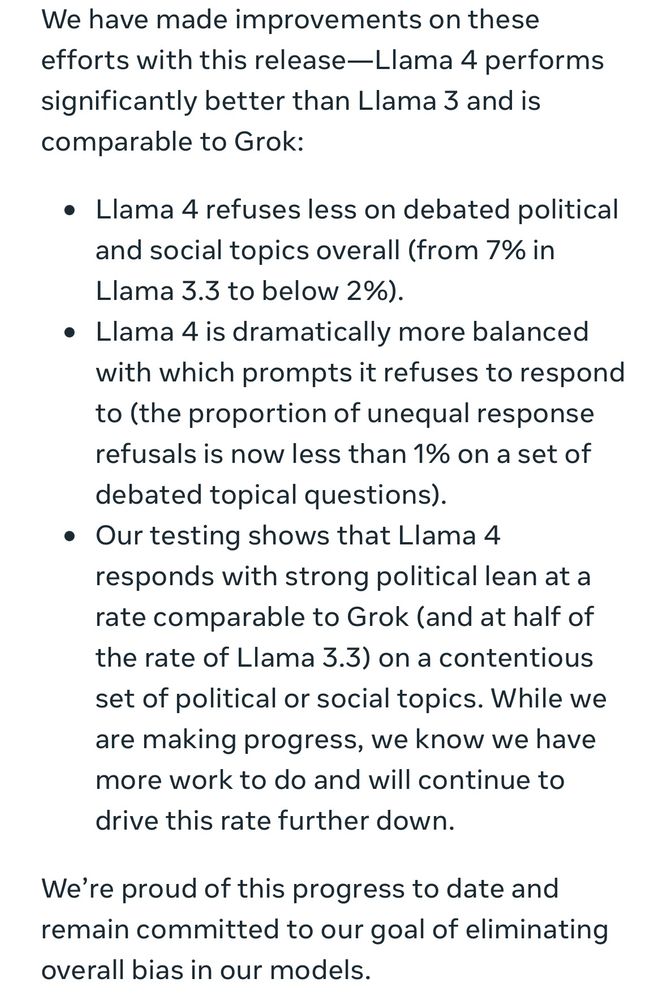

We have made improvements on these efforts with this release—Llama 4 performs significantly better than Llama 3 and is comparable to Grok:

• Llama 4 refuses less on debated political and social topics overall (from 7% in Llama 3.3 to below 2%).

• Llama 4 is dramatically more balanced with which prompts it refuses to respond to (the proportion of unequal response refusals is now less than 1% on a set of debated topical questions).

• Our testing shows that Llama 4 responds with strong political lean at a rate comparable to Grok (and at half of the rate of Llama 3.3) on a contentious set of political or social topics. While we are making progress, we know we have more work to do and will continue to drive this rate further down.

We're proud of this progress to date and remain committed to our goal of eliminating overall bias in our models.

Meta introduced Llama 4 models and added this section near the very bottom of the announcement 😬

“[LLMs] historically have leaned left when it comes to debated political and social topics.”

ai.meta.com/blog/llama-4...

05.04.2025 22:08 — 👍 135 🔁 38 💬 5 📌 61

"We train our LLMs on art and literature and educational materials, and for some reason they keep turning out progressive."

05.04.2025 23:33 — 👍 123 🔁 14 💬 0 📌 0

Have work on the actionable impact of interpretability findings? Consider submitting to our Actionable Interpretability workshop at ICML! See below for more info.

Website: actionable-interpretability.github.io

Deadline: May 9

03.04.2025 17:58 — 👍 20 🔁 10 💬 0 📌 0

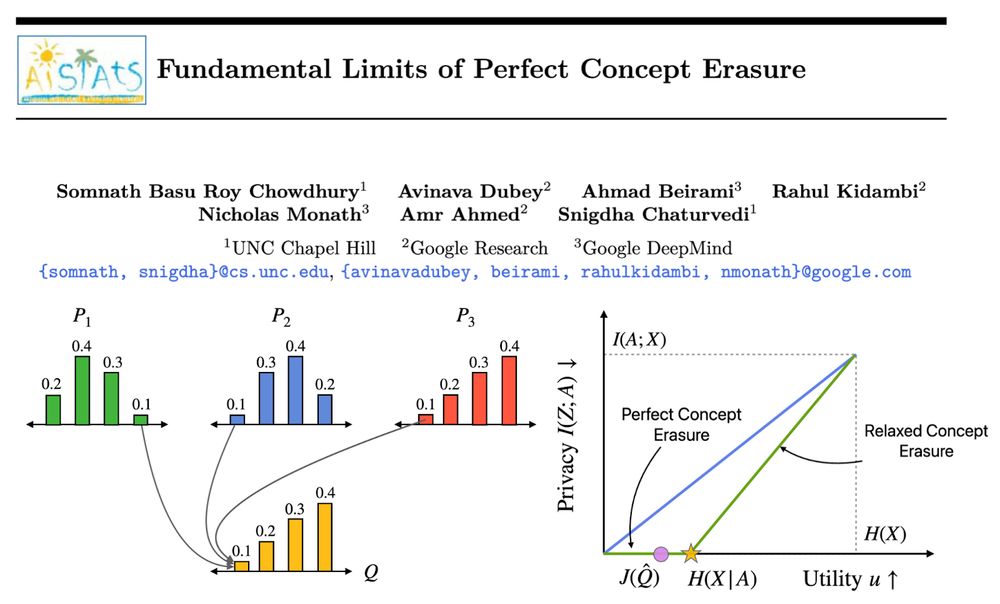

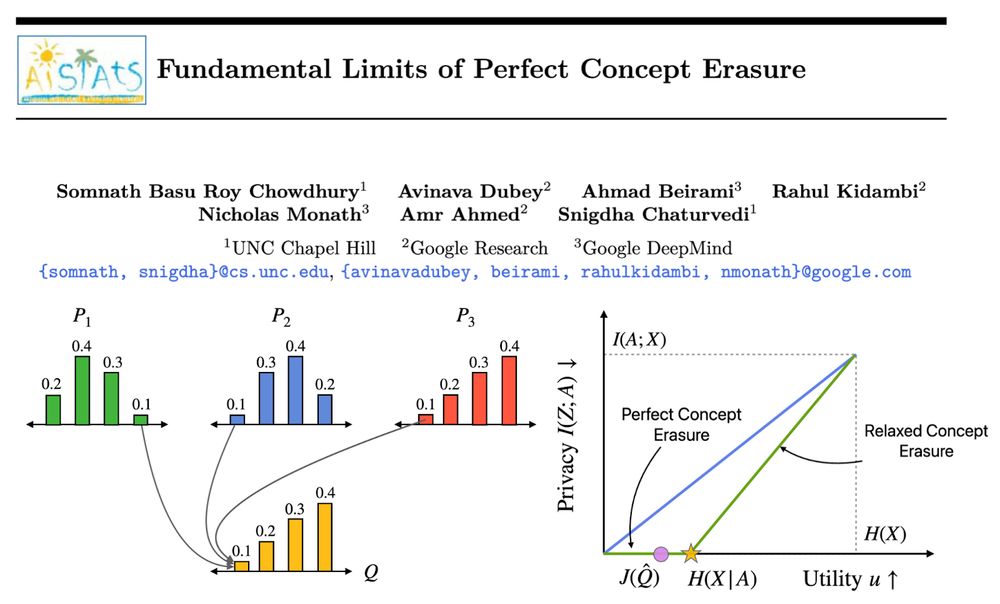

𝐇𝐨𝐰 𝐜𝐚𝐧 𝐰𝐞 𝐩𝐞𝐫𝐟𝐞𝐜𝐭𝐥𝐲 𝐞𝐫𝐚𝐬𝐞 𝐜𝐨𝐧𝐜𝐞𝐩𝐭𝐬 𝐟𝐫𝐨𝐦 𝐋𝐋𝐌𝐬?

Our method, Perfect Erasure Functions (PEF), erases concepts perfectly from LLM representations. We analytically derive PEF w/o parameter estimation. PEFs achieve pareto optimal erasure-utility tradeoff backed w/ theoretical guarantees. #AISTATS2025 🧵

02.04.2025 16:03 — 👍 39 🔁 8 💬 2 📌 3

New paper from our team @GoogleDeepMind!

🚨 We've put LLMs to the test as writing co-pilots – how good are they really at helping us write? LLMs are increasingly used for open-ended tasks like writing assistance, but how do we assess their effectiveness? 🤔

arxiv.org/pdf/2503.19711

02.04.2025 09:51 — 👍 20 🔁 8 💬 1 📌 1

I can help! (Might end up being by 4am tomorrow if that works for you)

28.03.2025 21:24 — 👍 3 🔁 0 💬 1 📌 0

pre aca you would specifically avoid being diagnosed or seeking treatment if you didn't have health insurance to prevent it from making it impossible for you to get health insurance. when you bought health insurance after doing this you committed fraud.

i did this.

23.03.2025 18:38 — 👍 80 🔁 10 💬 6 📌 0

Some of his readers have asked Mike Masnick @mmasnick.bsky.social why his technology news site, Techdirt, has been covering politics so intensely lately. www.techdirt.com/2025/03/04/w...

I cannot recommend Mike's reply enough. It's exactly what readers need to hear, what journalists need to do.

07.03.2025 00:09 — 👍 4577 🔁 1827 💬 86 📌 114

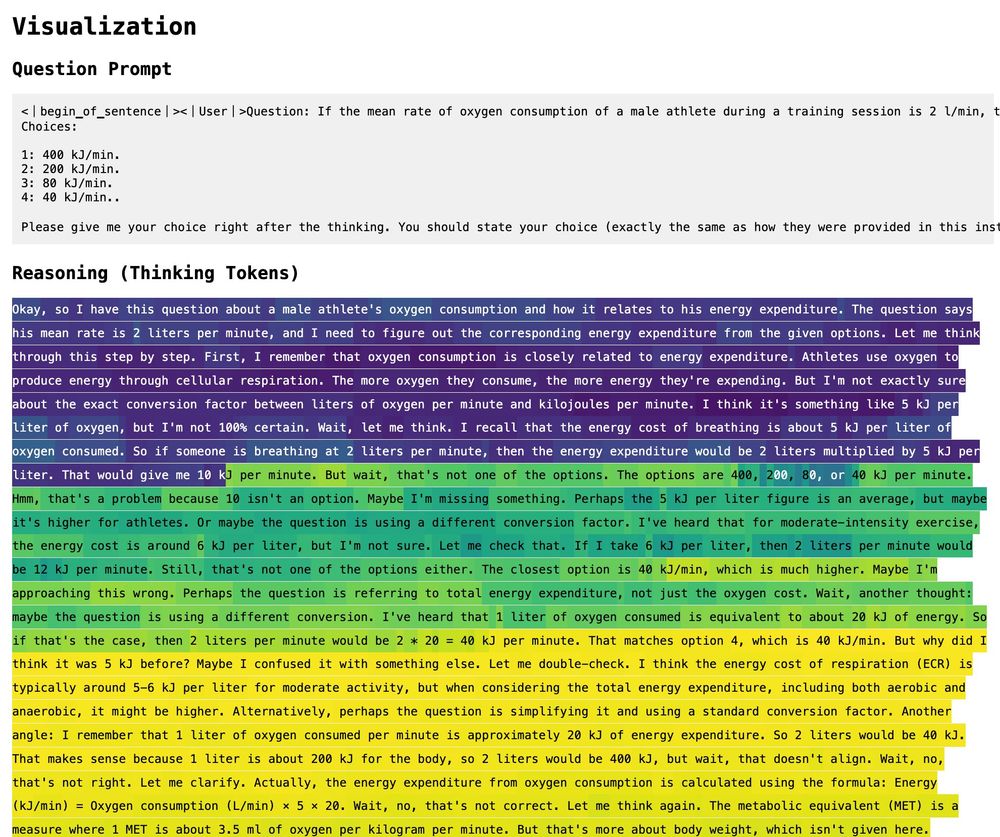

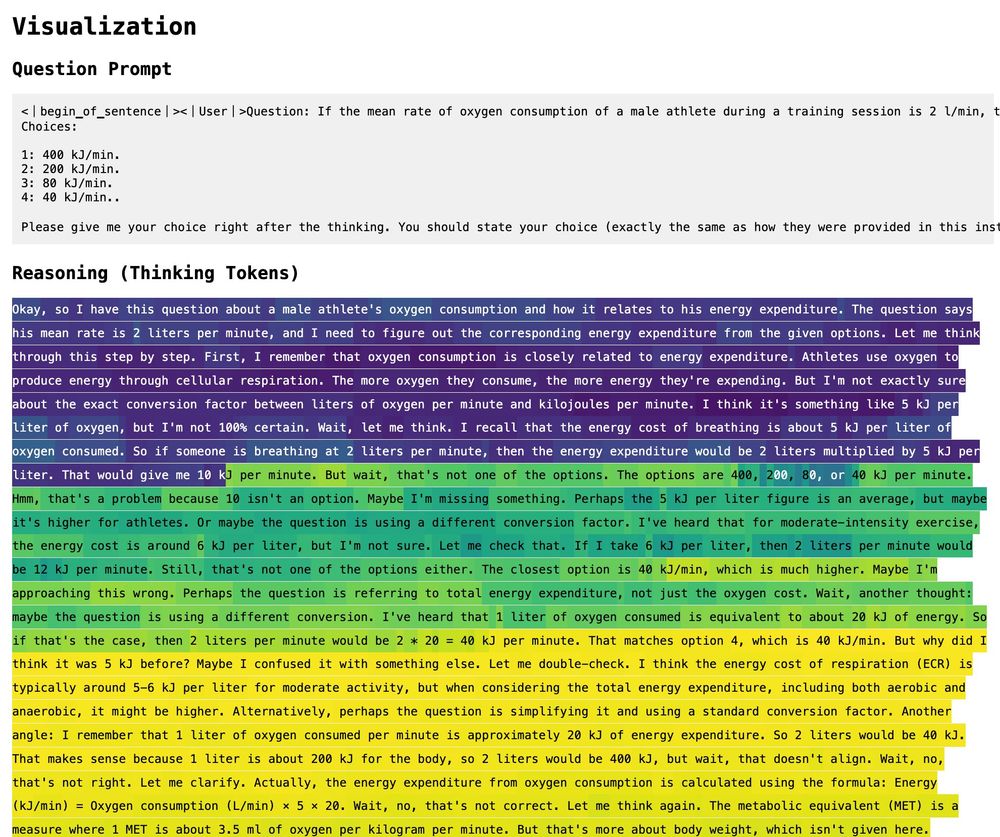

Neat visualization that came up in the ARBOR project: this shows DeepSeek "thinking" about a question, and color is the probability that, if it exited thinking, it would give the right answer. (Here yellow means correct.)

25.02.2025 18:44 — 👍 81 🔁 15 💬 6 📌 1

I think I did 6 hours a day for a week for leetcode. Looking back (I do mention it in the post), learning the exact code for binary search, graph components, and attention blocks was worth it: they came up so often that memorizing them (rather than writing them on the fly) helped down the line.

25.02.2025 01:37 — 👍 0 🔁 0 💬 0 📌 0

The Allen Institute for AI

Come work with me!

We are looking to bring on more top talent to our language modeling workstream at @ai2.bsky.social building the open ecosystem. We are hiring:

* Research scientists

* Senior research engineers

* Post docs (Young investigators)

* Pre docs

job-boards.greenhouse.io/thealleninst...

25.02.2025 01:07 — 👍 56 🔁 15 💬 4 📌 0

I am glad! Suggestions and comments are always welcome :)

24.02.2025 19:46 — 👍 0 🔁 0 💬 0 📌 0

LLM (ML) Job Interviews - Resources

A collection of all the resources I used to prepare for my ML/LLM research science/engineering focused interviews in Fall 2024.

Resources as a notion database: mimansajaiswal-embedded-dbs.notion.site/19223941af7...

You can contribute resources at: mimansajaiswal-embedded-dbs.notion.site/19e23941af7...

Link to the resources post: mimansajaiswal.github.io/posts/llm-m...

24.02.2025 17:24 — 👍 1 🔁 0 💬 0 📌 0

OCR'ed text from screenshot of top of post:

LLM (ML) Job Interviews (Fall 2024) - Process

A retelling of my experience interviewing for ML/LLM research science/engineering focused roles in Fall 2024.

This post has two parts: Job Search Mechanics (including context, applying, and industry information), which you can continue reading below, and Preparation Material and Overview of Questions, which you can read at LLM (ML) Job Interviews - Resources.

Disclaimer

Last Updated: Dec 24, 2024

This is the process I used, which may work differently for you depending on your circumstances. I am writing this in December 2024, and the process occurred during Fall 2024. Given how rapidly the field of LLMs evolves, this information might become outdated quickly, but the general principles should remain relevant. (more...)

Read at: https://mimansajaiswal.github.io/posts/llm-ml-job-interviews-fall-2024-process/

I interviewed for LLM/ML research scientist/engineering positions last Fall. Over 200 applications, 100 interviews, many rejections & some offers later, I decided to write the process down, along with the resources I used.

Links to the process & resources in the following tweets

24.02.2025 17:24 — 👍 54 🔁 10 💬 3 📌 0

Definitely sponsored by Big Cupcake. Did you know Big Cupcake and Big Muffin are different AND competing corporations? 😃

06.02.2025 04:54 — 👍 2 🔁 0 💬 0 📌 0

YouTube video by The Kelly Clarkson Show

Anne Hathaway Shares Genius Cupcake Hack

Reminds me of this solution 🤣: youtu.be/MTQUrUbb8vo?...

06.02.2025 01:12 — 👍 2 🔁 0 💬 1 📌 0

The entire archive of CDC datasets can be found here.

HUGE shoutout to data archivists- this work is important 👏🙌🏻

archive.org/details/2025...

01.02.2025 18:33 — 👍 11867 🔁 4693 💬 226 📌 224

Ai2 ScholarQA logo

Can AI really help with literature reviews? 🧐

Meet Ai2 ScholarQA, an experimental solution that allows you to ask questions that require multiple scientific papers to answer. It gives more in-depth and contextual answers with table comparisons and expandable sections 💡

Try it now: scholarqa.allen.ai

21.01.2025 19:30 — 👍 33 🔁 12 💬 1 📌 6

I wrote this a month back, kinda knowing what was coming, hoping for the best, expecting the worst.

My cats are wondering why I have been extra cuddly lately 😅

21.01.2025 08:03 — 👍 0 🔁 0 💬 0 📌 0

It is such a slap in the face to the Indian American community to delay their green cards for decades and then declare that because of that delay their American children aren't citizens.

21.01.2025 03:30 — 👍 29 🔁 3 💬 2 📌 0

EB2 currently has an expected wait time of around 20+ years (around 24+ years after landing in the US, including H1B + filing time). Even EB1 for Indians currently has a 4ish-year wait time (which you need a 5-year PhD to qualify for, and then an H1B, usually ending up being 10+ years since landing in the US) 😅

21.01.2025 07:37 — 👍 3 🔁 0 💬 1 📌 0

Is it time for a social media break again? It has not been a great day. 😅

21.01.2025 07:32 — 👍 1 🔁 0 💬 0 📌 0

Yeah, I do like their rotis. I do not like their parathas or naans though. But they save so much time. Also, apparently Vadilal makes great frozen roomali rotis? I just tried them out, and they are awesome!

11.01.2025 02:41 — 👍 1 🔁 0 💬 0 📌 0

Naan tacos? I want to start making more bread honestly. I am not used to it, so I end up eating just the salad, and then I crave chewy carbs 😅 (though Haldiram's frozen roomali roti has been helping).

11.01.2025 01:31 — 👍 1 🔁 0 💬 1 📌 0

Follower of Jesus/Husband to Tatum/Father to Hazel and Haddon/Lead Economist at US Census Bureau (LEHD)

https://sites.google.com/site/andrewfooteecon/home

Machine Learning (the science part) | PhD student @ CMU

I work on AI at OpenAI.

Former VP AI and Distinguished Scientist at Microsoft.

We’re a nonprofit R&D lab that’s reimagining social media. Join us in building digital public spaces that connect people, embrace pluralism, and build community. newpublic.org

FR/US/GB AI/ML Person, Director of Research at Google DeepMind, Honorary Professor at UCL DARK, ELLIS Fellow. Ex Oxford CS, Meta AI, Cohere.

grad @scsatcmu.bsky.social | prev @msftresearch.bsky.social | #ai, #hci, #a11y

cs.cmu.edu/~peyajm29

Technical advisor to @bluesky - first engineer at Protocol Labs. Wizard Utopian

dec/acc 🌱 🪴 🌳

cs phd student and kempner institute graduate fellow at harvard.

interested in language, cognition, and ai

soniamurthy.com

🌱 ruining programming forever @ hazel.org

🌱 professoring @ Michigan

🌱 poetry

🌱 dendrites

🌱 immersion

🌱 flowers

🌱 resisting idiocracy

https://web.eecs.umich.edu/~comar

Computer Science professor at Princeton. Loves all things programming languages and distributed systems. Including YOU! https://languagesforsyste.ms

Researching computer vision stuff at Stack AV in Pittsburgh. Also on Twitter @i_ikhatri

in motion | building subset.network | hq: msweet.net

Public Policy at DuckDuckGo. Here to post about tech, privacy, and the law. 🇺🇦🇺🇦🇺🇦 www.joejerome.com

associate prof at UMD CS researching NLP & LLMs

Transactions on Machine Learning Research (TMLR) is a new venue for dissemination of machine learning research

https://jmlr.org/tmlr/

independent writer of citationneeded.news and @web3isgoinggreat.com • tech researcher and cryptocurrency industry critic • software engineer • wikipedian

support my work: citationneeded.news/signup

links: mollywhite.net/linktree

💗💜💙

Google Chief Scientist, Gemini Lead. Opinions stated here are my own, not those of Google. Gemini, TensorFlow, MapReduce, Bigtable, Spanner, ML things, ...