Please check out our work!

📄 Preprint: arxiv.org/abs/2506.08641

💻 Code: github.com/ExplainableM...

#TimeSeries #VisionTransformer #FoundationModel

@simonroschmann.bsky.social

PhD Student @eml-munich.bsky.social @tum.de @www.helmholtz-munich.de. Passionate about ML research.

This project was a collaboration between @eml-munich.bsky.social and Huawei Paris Noah’s Ark Lab. Thank you to my collaborators @qbouniot.bsky.social, Vasilii Feofanov, Ievgen Redko, and particularly to my advisor @zeynepakata.bsky.social for guiding me through my first PhD project!

03.07.2025 07:59 — 👍 3 🔁 2 💬 1 📌 0

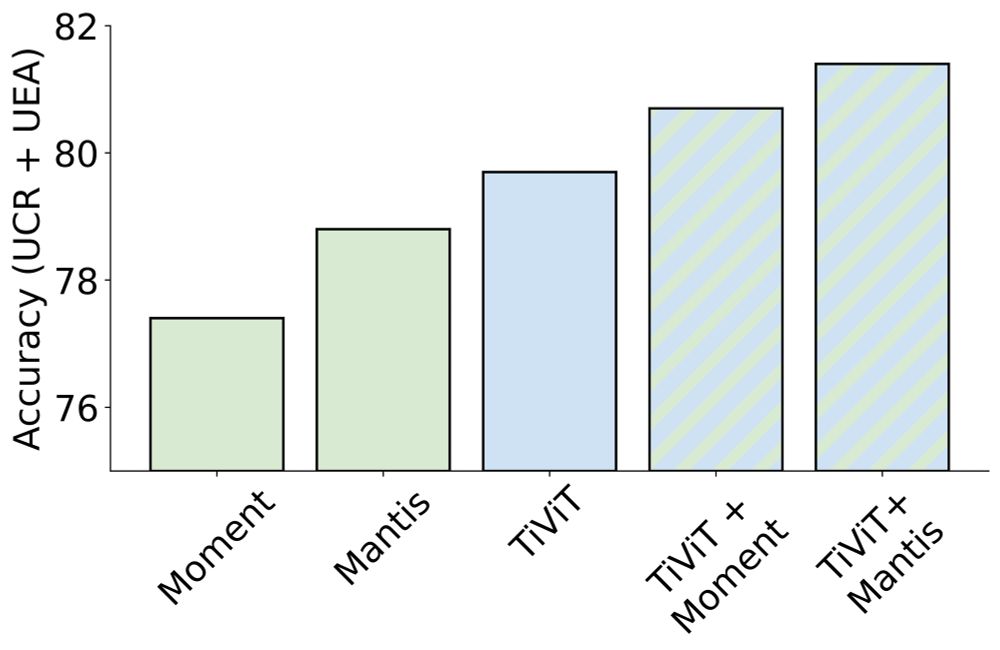

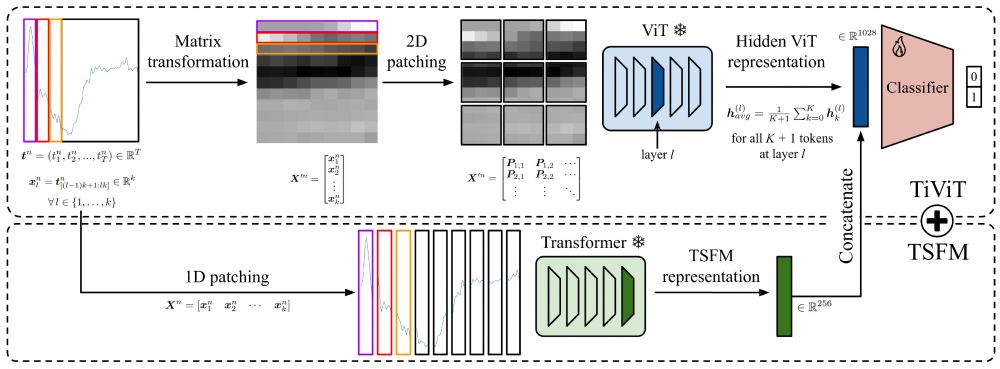

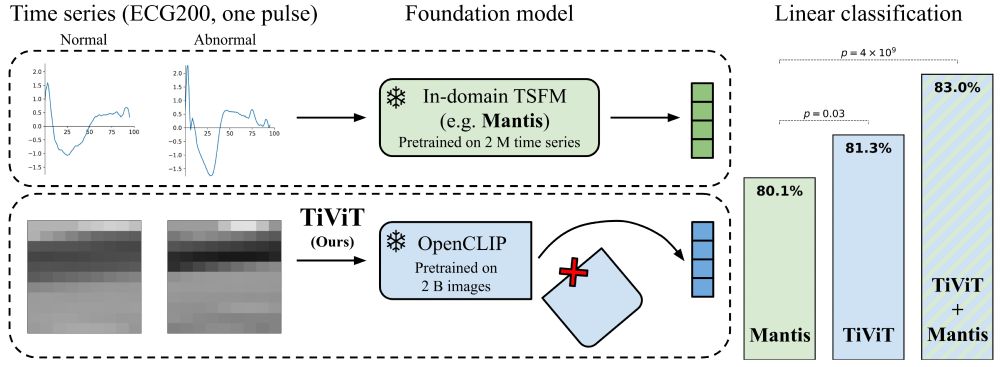

TiViT is on par with TSFMs (Mantis, Moment) on the UEA benchmark and significantly outperforms them on the UCR benchmark. The representations of TiViT and TSFMs are complementary; their combination yields SOTA classification results among foundation models.

03.07.2025 07:59 — 👍 1 🔁 0 💬 1 📌 0

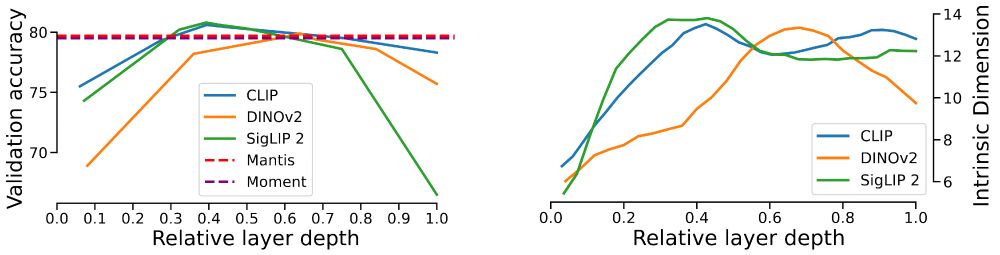

We further explore the structure of TiViT representations and find that intermediate layers with high intrinsic dimension are the most effective for time series classification.

03.07.2025 07:59 — 👍 1 🔁 0 💬 1 📌 0

Time Series Transformers typically rely on 1D patching. We show theoretically that the 2D patching applied in TiViT can increase the number of label-relevant tokens and reduce the sample complexity.

03.07.2025 07:59 — 👍 1 🔁 0 💬 1 📌 0
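To build some intuition for the 1D versus 2D patching claim, here is a toy count, not the theoretical argument from the preprint. It assumes the series is laid out row by row in a square image and that a single contiguous segment carries the label information; the series length, row length, patch size, and segment position are all hypothetical values chosen for illustration. Both tokenizations produce the same number of tokens, so only the number of tokens touching the segment differs.

```python
import numpy as np

def tokens_hit_1d(patch_len, start, stop):
    """Indices of 1D patches of length patch_len that overlap samples [start, stop)."""
    idx = np.arange(start, stop)
    return np.unique(idx // patch_len)

def tokens_hit_2d(row_len, patch, start, stop):
    """Indices of patch x patch tiles that overlap samples [start, stop)
    when the series is written row by row into an image with row_len columns."""
    idx = np.arange(start, stop)
    rows, cols = idx // row_len, idx % row_len
    patches_per_row = row_len // patch
    return np.unique((rows // patch) * patches_per_row + cols // patch)

# Hypothetical setup: 256 samples, 16 x 16 image, 4 x 4 tiles (16 tokens),
# compared with 1D patches of 16 samples (also 16 tokens).
row_len, patch = 16, 4
start, stop = 32, 48  # assumed label-relevant segment of 16 samples

hit_1d = tokens_hit_1d(patch * patch, start, stop)
hit_2d = tokens_hit_2d(row_len, patch, start, stop)
print(len(hit_1d), len(hit_2d))  # 1 4
```

In this particular layout the segment falls into one 1D token but is spread over four 2D tokens. The exact counts depend on where the segment sits and on the chosen sizes.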

Our Time Vision Transformer (TiViT) converts a time series into a grayscale image, applies 2D patching, and uses a frozen, pretrained ViT for feature extraction. We average the representations from a specific hidden layer and train only a linear classifier.

03.07.2025 07:59 — 👍 1 🔁 0 💬 1 📌 0
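The pipeline described above can be sketched roughly as follows. This is a minimal NumPy illustration under stated assumptions, not the released TiViT code: stacking fixed-length segments as image rows, min-max scaling to grayscale, and the function names `series_to_image`, `patchify_2d`, and `mean_pool` are all hypothetical choices. The frozen ViT is replaced by a fixed random projection purely as a stand-in so that the example runs without model weights.

```python
import numpy as np

def series_to_image(series, segment_len):
    """Stack fixed-length segments of a 1D series as rows of a grayscale image in [0, 1]."""
    series = np.asarray(series, dtype=np.float64)
    n_rows = len(series) // segment_len  # an incomplete tail segment is dropped
    image = series[: n_rows * segment_len].reshape(n_rows, segment_len)
    lo, hi = image.min(), image.max()
    if hi == lo:  # constant series: avoid division by zero
        return np.zeros_like(image)
    return (image - lo) / (hi - lo)

def patchify_2d(image, patch):
    """Split an (H, W) image into non-overlapping patch x patch tiles, one flattened tile per row."""
    h, w = image.shape
    h, w = h - h % patch, w - w % patch  # crop so both sides divide evenly
    tiles = image[:h, :w].reshape(h // patch, patch, w // patch, patch)
    return tiles.transpose(0, 2, 1, 3).reshape(-1, patch * patch)

def mean_pool(hidden_states):
    """Average token representations into a single feature vector."""
    return hidden_states.mean(axis=0)

# Toy input: a sine wave with 256 samples.
series = np.sin(np.linspace(0.0, 8.0 * np.pi, 256))
image = series_to_image(series, segment_len=16)  # shape (16, 16)
tokens = patchify_2d(image, patch=4)             # shape (16, 16): 16 tokens of 16 pixels

# Stand-in for a frozen encoder layer (NOT a ViT): a fixed random projection.
rng = np.random.default_rng(0)
frozen_weights = rng.standard_normal((tokens.shape[1], 8))
hidden_states = tokens @ frozen_weights          # shape (16, 8)

feature = mean_pool(hidden_states)               # shape (8,)
print(image.shape, tokens.shape, feature.shape)
```

In a real setup, `hidden_states` would come from a chosen hidden layer of a frozen pretrained ViT, and `feature` would be the input to the linear classifier, which is the only trained component.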

How can we circumvent data scarcity in the time series domain?

We propose to leverage pretrained ViTs (e.g., CLIP, DINOv2) for time series classification and outperform time series foundation models (TSFMs).

📄 Preprint: arxiv.org/abs/2506.08641

💻 Code: github.com/ExplainableM...