Title of the paper in Behavior Research Methods

Our paper with @zoepurcell.bsky.social, @luciecharlesneuro.bsky.social and @wimdeneys.bsky.social on using LLMs to estimate belief strength in reasoning is out in Behavior Research Methods.

If you're interested in reasoning biases & LLMs as measurement tools, check it out: rdcu.be/eZXGK (free PDF)

21.01.2026 16:40

Many people worry that AI-assisted writing feels “less authentic” and may erode trust.

So we tested it in two preregistered, incentivized trust-game experiments (N = 1,637), which have just been published in iScience (Cell Press).

More details 👇

19.01.2026 09:57

Our paper with @zoepurcell.bsky.social, @luciecharlesneuro.bsky.social, and @wimdeneys.bsky.social has been accepted at Behavior Research Methods! 🥳

Here is the updated preprint: osf.io/preprints/ps...

Also, the baserater package is now on CRAN: cran.r-project.org/package=base...

#psynomBRM

27.11.2025 16:14

Abstract

Accurately quantifying belief strength in heuristics-and-biases tasks is crucial yet methodologically challenging. In this paper, we introduce an automated method that leverages large language models (LLMs) to systematically measure and manipulate belief strength. We specifically tested this method in the widely used “lawyer-engineer” base-rate neglect task, in which stereotypical descriptions (e.g., someone who enjoys mathematical puzzles) conflict with normative base-rate information (e.g., engineers represent a very small percentage of the sample). Using this approach, we created an open-access database containing over 100,000 unique items that systematically vary in stereotype-driven belief strength. Validation studies demonstrate that our LLM-derived belief strength measure correlates strongly with human typicality ratings and robustly predicts human choices in a base-rate neglect task. Additionally, our method revealed substantial and previously unnoticed variability in stereotype-driven belief strength in popular base-rate items from existing research, underlining the need to control for this in future studies. We further highlight methodological improvements achievable by refining the LLM prompt, as well as ways to enhance cross-cultural validity. The database presented here serves as a resource for researchers, facilitating rigorous, replicable, and theoretically precise experimental designs, and enabling advances in cognitive and computational modeling of reasoning. To support its use, we provide the R package baserater, which allows researchers to access the database and to apply or adapt the method to their own research.

1/10

🚨 New preprint: Using Large Language Models to Estimate Belief Strength in Reasoning 🚨

When asked: "There are 995 politicians and 5 nurses. Person 'L' is kind. Is Person 'L' more likely to be a politician or a nurse?", most people will answer "nurse", neglecting the base-rate info.
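To make the base-rate logic explicit, here is a short worked Bayes calculation in odds form. It is only an illustration of why "politician" is the normative answer, not the LLM-based method from the paper. The prior odds favour politicians 995:5, i.e. 199:1, and the posterior odds are the prior odds multiplied by how diagnostic "kind" is of each group:

$$
\frac{P(\text{politician} \mid \text{kind})}{P(\text{nurse} \mid \text{kind})}
= \frac{P(\text{politician})}{P(\text{nurse})} \times \frac{P(\text{kind} \mid \text{politician})}{P(\text{kind} \mid \text{nurse})}
= \frac{995}{5} \times \frac{P(\text{kind} \mid \text{politician})}{P(\text{kind} \mid \text{nurse})}
$$

So "nurse" is only the better answer if being kind is more than 199 times as likely among nurses as among politicians. With purely hypothetical values of P(kind | nurse) = 0.9 and P(kind | politician) = 0.1, the posterior odds are 199 × (0.1 / 0.9) ≈ 22 to 1, still in favour of "politician".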

A 🧵👇

16.10.2025 16:17

Hahaha yeh I even remembered the damn thing to thank them in my talk and then ofc forgot to do it in the panic!

12.07.2025 14:11

Great week at the CSBBCS/EPS conference! Speaking alongside two of my academic heroes and mentors was such an honour and a really special moment for me! Huge thanks to CSBBCS and Aimee Surprenant for the invitation and opportunity, and all those who attended our symposium 🙏 @exppsychsoc.bsky.social

12.07.2025 09:03

w/ @lauracharbit.bsky.social, Grégoire Borst, @lapsyde.bsky.social and Anne-Marie Nussberger @mpib-berlin.bsky.social. Now in @cognitionjournal.bsky.social 🎉

11.06.2025 10:57

Do AI builders hold different values from AI users?

We show that AI builders and men are more utilitarian and less supportive of pro-diversity outputs, highlighting ongoing concerns about workforce diversity and whose values are shaping AI.

tinyurl.com/AIcognit

11.06.2025 10:57

New preprint: “Folk Thinking, Fast and Slow: Intuitive Preference for Deliberation in Humans and Machines”

Pop culture often praises intuition (“Blink”, Steve Jobs). But do we really trust it? Across 13 studies, we find a strong intuitive preference for deliberation.

tinyurl.com/8r54dmyn (1/6)

18.02.2025 16:24

Come hear about moral psychology and AI at 11am today at #SPSP2025!

@awad.bsky.social @zoepurcell.bsky.social Anne-Marie Nussberger

22.02.2025 15:49

New preprint with @jfbonnefon.bsky.social!

Using Generative AI to Increase Skeptics’ Engagement with Climate Science

Available at: doi.org/10.31234/osf...

19.11.2024 11:19

Postdoctoral Researcher (2011) - Birkbeck, University of London


📣🧠📣 I’m hiring!! 📣🧠📣

We’re looking for a postdoc to join The Uncertainty Lab at @birkbeckpsychology.bsky.social. You’ll lead fMRI work on a new project studying how communication with others alters private metacognition of our own minds.

cis7.bbk.ac.uk/vacancy/post...

21.10.2024 10:22

Let's give this a try ;-) go.bsky.app/TBS14hQ

18.10.2024 14:07

📢 Our paper on AI-mediated communication (out now!) shows that:

1) People expect others to use AI tools more than themselves

2) People are more accepting of open than secret AI use (at least, explicitly)

bit.ly/zpurc

👥 w/ Mengchen Dong, Anne-Marie Nussberger, Nils Kobis, & Maurice Jakesch.

06.09.2024 18:14

Thanks for coming along!

02.08.2024 12:50

We're currently running some follow-up studies, but should have something available this year :) I'll post it here once we do.

02.08.2024 12:49

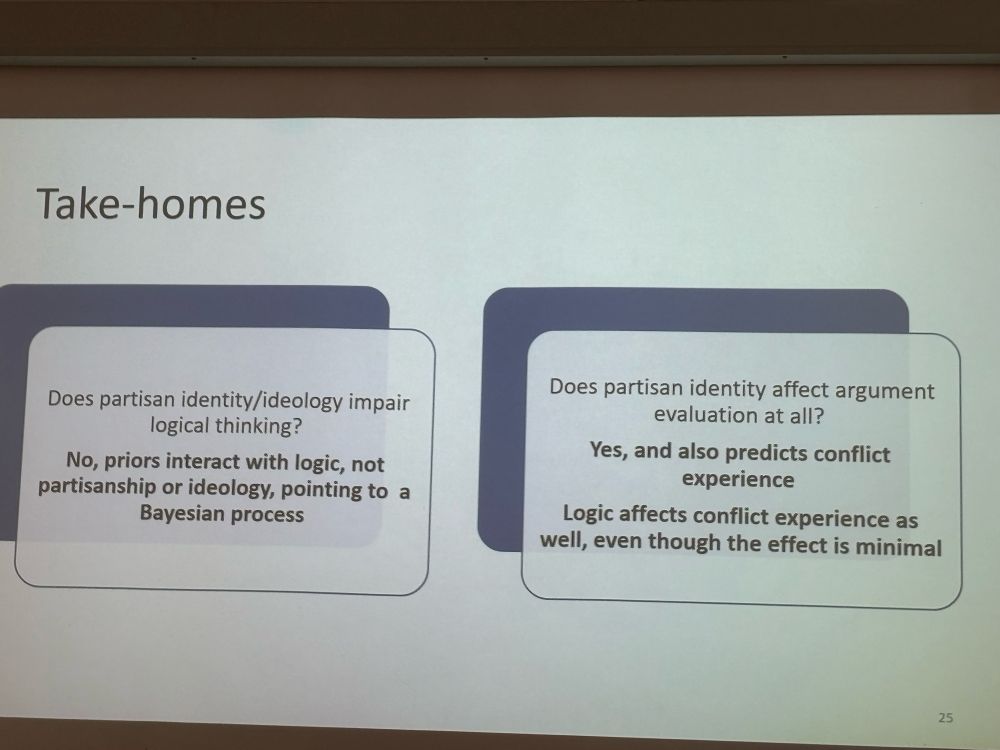

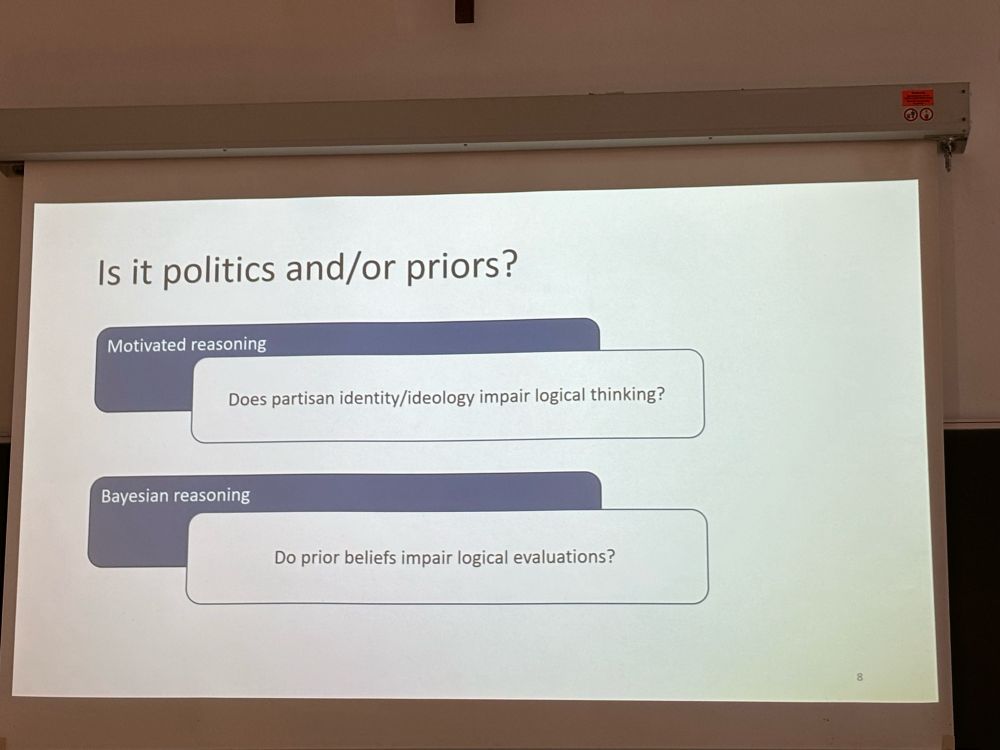

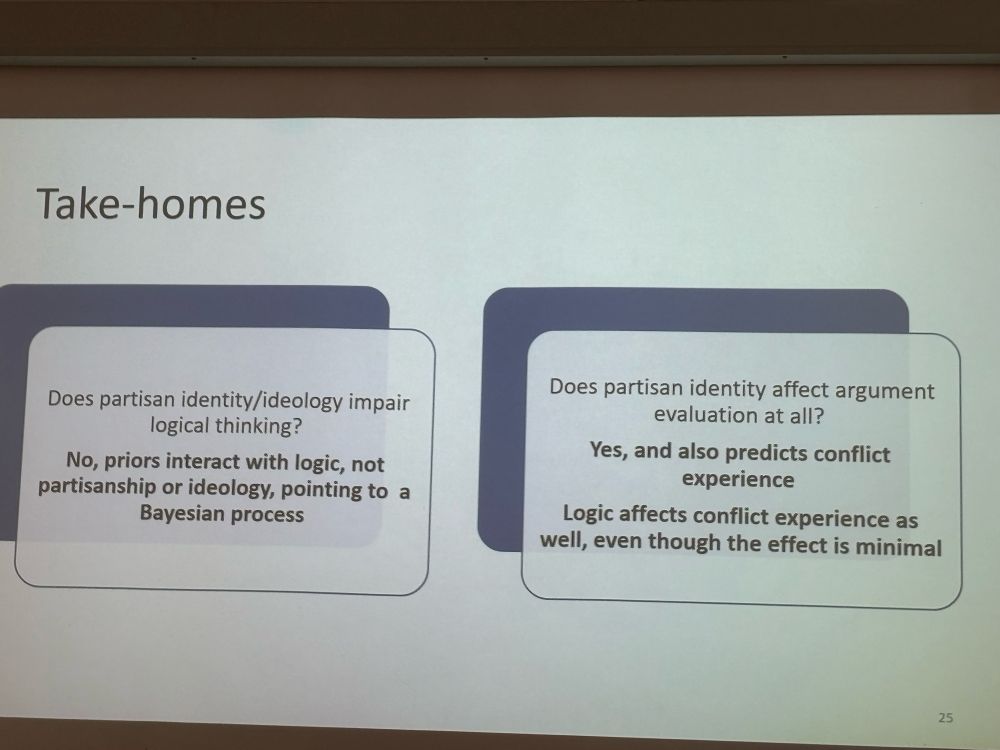

Today at IMBES my favourite talk was by @zoepurcell.bsky.social unpicking the relationships between partisan identity, prior beliefs, and reasoning. Partisan identity doesn’t make reasoning less accurate, but it does make the experience of reasoning subjectively more difficult. #academicsky

10.07.2024 19:55

Was thrilled to present our work at #ICT2024! I was overwhelmed by the support for this new angle and am now riding a new wave of motivation for this project. Thank you all ✨ @wimdeneys.bsky.social @kobedesender.bsky.social

13.06.2024 07:55

Thanks Nick! Was a pleasure to meet you in person :)

13.06.2024 07:23