This work wouldn’t have been possible without the amazing collaborators and advisors on this project: Yuxuan Liu, Martez Mott, and @anhongguo.bsky.social. Thank you so much! (8/8)

01.08.2025 03:33

We envision that HandProxy will expand the design of existing speech interfaces in XR, and we hope it can be implemented at the system level to provide a uniform, cross-application input solution for accessible control and input automation of hand interactions. (7/n)

01.08.2025 03:32

A user study on various hand interaction tasks showed 91.8% command execution accuracy, with an average of 1.09 attempts per speech command. HandProxy was able to interpret the diverse commands users issued during tasks, and users found it flexible, effective, and easy to use. (6/n)
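The thread does not define either metric. A minimal sketch, assuming accuracy is the fraction of intended commands the proxy hand ultimately executed correctly and attempts are averaged over intended commands; the `CommandTrial` log format is hypothetical, and the toy data below is illustrative only, not the study's data.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class CommandTrial:
    """One intended command from a session (hypothetical log format)."""
    attempts: int      # utterances the participant issued for this command
    executed_ok: bool  # whether the proxy hand ultimately executed it as intended


def summarize(trials: List[CommandTrial]) -> Tuple[float, float]:
    """Return (execution accuracy, mean attempts per command)."""
    if not trials:
        raise ValueError("need at least one trial")
    n = len(trials)
    accuracy = sum(t.executed_ok for t in trials) / n    # True counts as 1
    mean_attempts = sum(t.attempts for t in trials) / n
    return accuracy, mean_attempts


# Toy data for illustration only; these are not the study's logs.
toy = [CommandTrial(1, True), CommandTrial(1, True), CommandTrial(2, True), CommandTrial(1, False)]
print(summarize(toy))  # (0.75, 1.25)
```

Under this reading, a mean of 1.09 attempts means most commands succeeded on the first utterance, with a retry needed for only a small share.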

01.08.2025 03:32

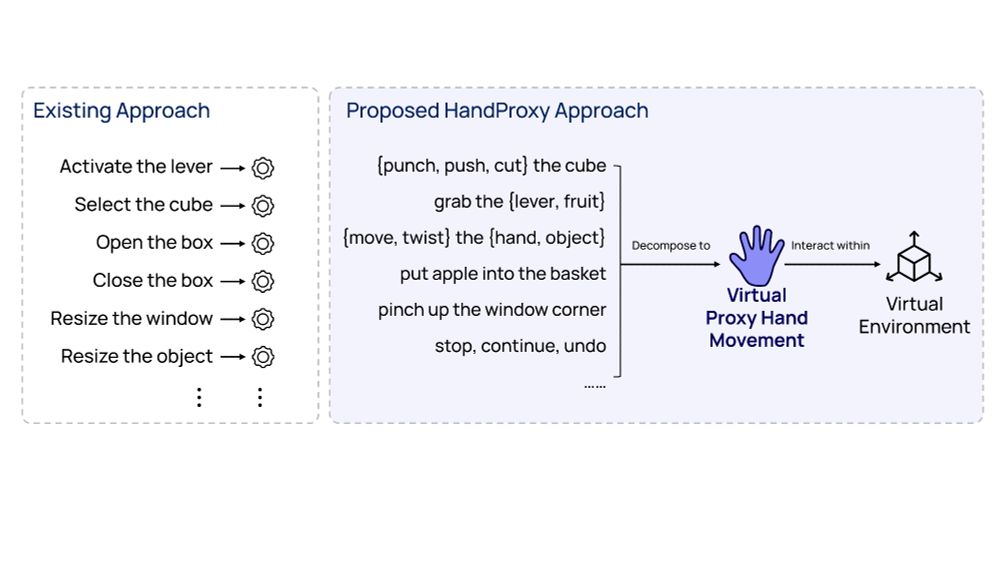

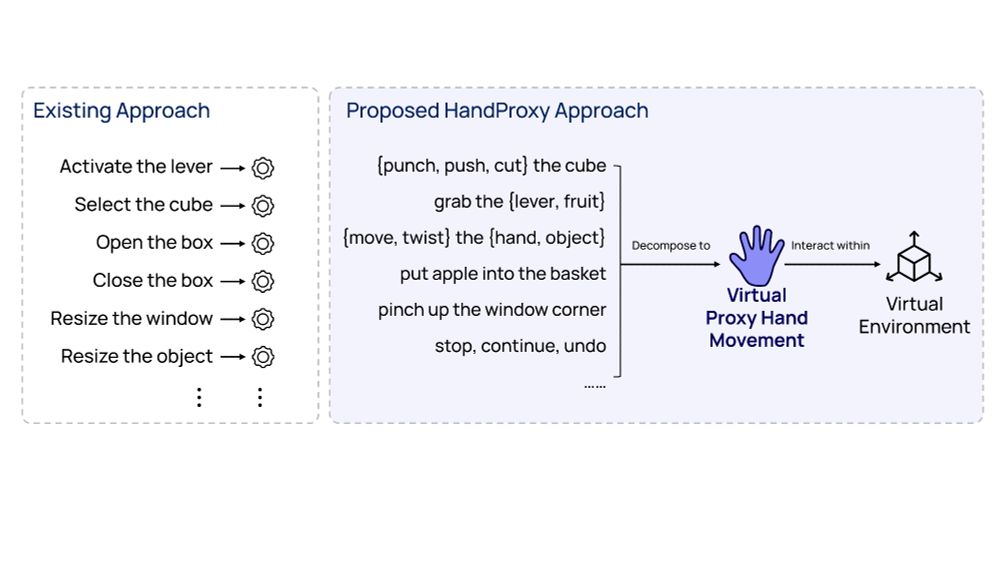

To do so, HandProxy continuously captures speech input and uses an LLM to decompose it into a list of hand control primitives. These primitives are used to compute the 3D hand joint positions for each movement, and the joint data is streamed to the virtual environment to control the proxy hand. (5/n)
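The speech → primitives → joints → stream pipeline can be sketched as follows. This is not HandProxy's implementation: the `Primitive` schema, the phrase table standing in for the LLM, the three-joint toy hand, and the `send` callback standing in for the stream to the virtual environment are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Iterator, List, Optional, Tuple

Vec3 = Tuple[float, float, float]
Joints = Dict[str, Vec3]


@dataclass(frozen=True)
class Primitive:
    """Hypothetical hand control primitive (not HandProxy's actual schema)."""
    move: Vec3 = (0.0, 0.0, 0.0)   # wrist displacement in meters
    pinch: Optional[float] = None  # target pinch in [0, 1]; None keeps the current value
    frames: int = 10               # frames used to animate this primitive


# Stand-in for the LLM decomposition step: a fixed phrase table (assumption).
PHRASES: Dict[str, Primitive] = {
    "move up": Primitive(move=(0.0, 0.1, 0.0)),
    "move left": Primitive(move=(-0.1, 0.0, 0.0)),
    "pinch": Primitive(pinch=1.0, frames=5),
    "release": Primitive(pinch=0.0, frames=5),
}


def decompose(utterance: str) -> List[Primitive]:
    """Split on ' then ' and look up each clause; unknown clauses are dropped."""
    clauses = [c.strip() for c in utterance.lower().split(" then ")]
    return [PHRASES[c] for c in clauses if c in PHRASES]


def hand_joints(wrist: Vec3, pinch: float) -> Joints:
    """Toy 3-joint hand: thumb and index tips meet as pinch goes from 0 to 1."""
    x, y, z = wrist
    gap = 0.04 * (1.0 - pinch)  # tips 4 cm apart when fully open (assumed geometry)
    return {
        "wrist": wrist,
        "thumb_tip": (x - gap / 2, y + 0.08, z),
        "index_tip": (x + gap / 2, y + 0.08, z),
    }


def animate(primitives: List[Primitive], wrist: Vec3 = (0.0, 0.0, 0.0),
            pinch: float = 0.0) -> Iterator[Joints]:
    """Linearly interpolate each primitive and yield one joint frame per step."""
    for p in primitives:
        target = pinch if p.pinch is None else p.pinch
        for i in range(1, p.frames + 1):
            t = i / p.frames
            w = (wrist[0] + p.move[0] * t, wrist[1] + p.move[1] * t, wrist[2] + p.move[2] * t)
            yield hand_joints(w, pinch + (target - pinch) * t)
        # Commit the end state of this primitive before starting the next one.
        wrist = (wrist[0] + p.move[0], wrist[1] + p.move[1], wrist[2] + p.move[2])
        pinch = target


def run(utterance: str, send: Callable[[Joints], None]) -> None:
    """Decompose speech, compute joints, and hand each frame to a transport callback."""
    for frame in animate(decompose(utterance)):
        send(frame)
```

For example, `frames = []; run("Move up then pinch", frames.append)` collects 15 frames: 10 raising the wrist by 10 cm, then 5 closing the thumb and index tips. Keeping the transport as a plain callback leaves open whether frames go to a game engine, a socket, or a test list.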

01.08.2025 03:32

Users can issue various kinds of commands: gestures (pinch, grab the cube), timing (stop, undo), movements (move up, twist left), or high-level interaction goals (reduce the volume, increase brightness), which HandProxy interprets and decomposes into detailed hand controls. (4/n)
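Timing commands differ from the other three kinds because they act on the execution of other commands rather than on the hand directly. A minimal sketch, assuming a queue of pending movement steps and a history of prior states; `category`, `ProxyHandController`, the keyword sets, and the single-height hand state are hypothetical simplifications, and gesture commands are only classified here, not executed.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Deque, List

TIMING = {"stop", "continue", "undo"}
MOVEMENTS = {"move up": 0.1, "move down": -0.1}  # wrist height change in meters (assumed)
GESTURES = {"pinch", "grab the cube"}


def category(text: str) -> str:
    """Coarse lookup into the four command kinds; anything unmatched is treated as a goal."""
    t = text.strip().lower()
    if t in TIMING:
        return "timing"
    if t in MOVEMENTS:
        return "movement"
    if t in GESTURES:
        return "gesture"
    return "goal"  # e.g. "reduce the volume": would need LLM decomposition


@dataclass
class ProxyHandController:
    """Hypothetical controller; the hand state is reduced to a single wrist height."""
    height: float = 0.0
    paused: bool = False
    pending: Deque[float] = field(default_factory=deque)  # queued movement steps
    history: List[float] = field(default_factory=list)    # height before each executed step

    def command(self, text: str) -> None:
        t = text.strip().lower()
        if t == "stop":
            self.paused = True
        elif t == "continue":
            self.paused = False
        elif t == "undo" and self.history:
            self.height = self.history.pop()  # restore the state before the last step
        elif t in MOVEMENTS:
            self.pending.append(MOVEMENTS[t])

    def tick(self) -> None:
        """Execute one queued step per frame unless paused."""
        if self.paused or not self.pending:
            return
        self.history.append(self.height)
        self.height += self.pending.popleft()
```

Usage: after queuing "move up" twice and calling `tick()` once, "stop" makes further ticks no-ops until "continue"; a later "undo" restores the height recorded before the most recent step.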

01.08.2025 03:32

A figure showing a comparison between the existing approach and the proposed HandProxy approach. The existing approach usually relies on a list of discrete, predefined commands such as "Activate the lever," "Select the cube," "Open the box," "Close the box," "Resize the window," and "Resize the object". The proposed HandProxy approach supports more natural, expressive interactions using varied, context-rich phrases such as "{punch, push, cut} the cube," "grab the {lever, fruit}," "{move, twist} the {hand, object}," "put apple into the basket," "pinch up the window corner," and general control commands like "stop, continue, undo." These inputs are decomposed into virtual proxy hand movements, which then interact directly with the virtual environment.
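The figure uses brace notation such as "{punch, push, cut} the cube" to stand for several phrasings at once. As a small reading aid, here is a brace expander applied to the figure's own example templates. It only enumerates the phrases the figure lists; it is an illustration of the notation and says nothing about how HandProxy actually parses speech.

```python
import itertools
import re
from typing import List


def expand(template: str) -> List[str]:
    """Expand each {a, b, ...} group into every combination of its options."""
    # With a capturing group, re.split alternates literal text (even indices)
    # and the contents of each {...} group (odd indices).
    parts = re.split(r"\{([^}]*)\}", template)
    choices = [
        [part] if i % 2 == 0 else [opt.strip() for opt in part.split(",")]
        for i, part in enumerate(parts)
    ]
    return ["".join(combo) for combo in itertools.product(*choices)]


templates = [
    "{punch, push, cut} the cube",
    "grab the {lever, fruit}",
    "{move, twist} the {hand, object}",
    "put apple into the basket",
    "pinch up the window corner",
]
phrases = [p for t in templates for p in expand(t)]
print(len(phrases))  # 11
print(expand("{move, twist} the {hand, object}"))
# ['move the hand', 'move the object', 'twist the hand', 'twist the object']
```

Five templates already cover 11 distinct interaction phrases; an interface built on a discrete command list would need a separate predefined entry for each.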

HandProxy introduces a virtual hand as an interaction proxy. It expands the affordances of speech interfaces to support expressive hand interactions. Users can initiate hand interactions through natural speech, and the system interprets their speech and decomposes it into hand movements. (3/n)

01.08.2025 03:31

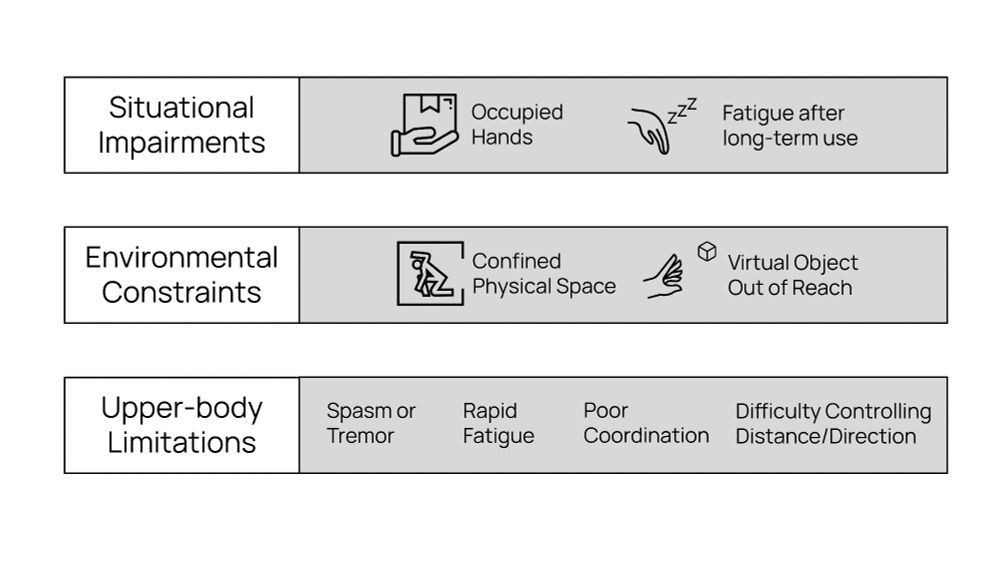

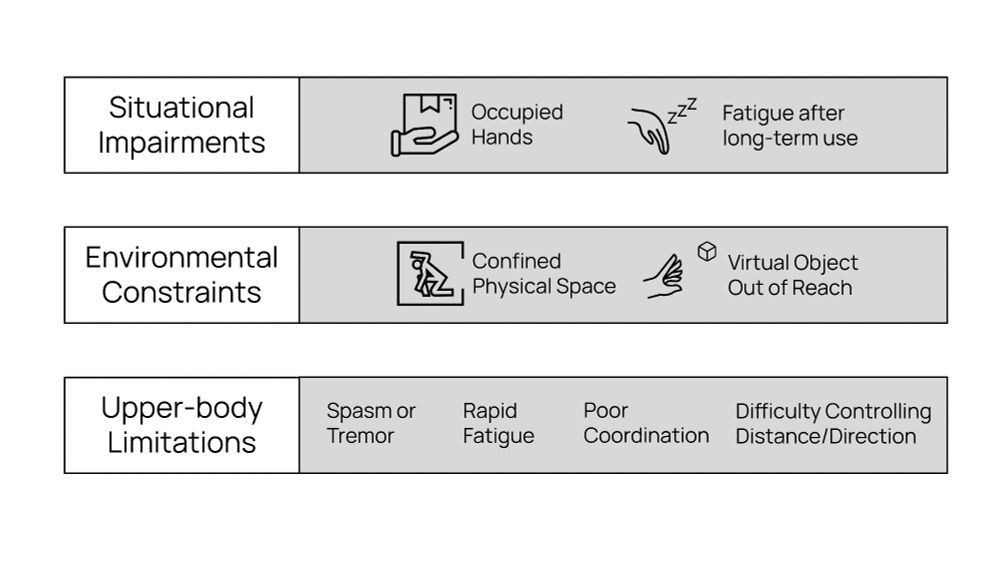

A figure showing possible factors that make hand interactions unavailable, including situational impairments (e.g., occupied hands, fatigue after long-term use), environmental constraints (e.g., confined physical space, virtual object out of reach), and upper-body limitations (e.g., spasm or tremor, rapid fatigue, poor coordination, difficulty controlling distance or direction).

Hand interactions are widely used in XR but are not always feasible, e.g., due to (situational) impairments or environmental constraints. Speech can serve as an alternative, but it is often limited to initiating basic gestures and system controls. (2/n)

01.08.2025 03:28

Introducing HandProxy, a speech-controlled virtual proxy hand that interprets the user’s natural speech input and performs hand interactions in XR on the user’s behalf. Accepted at #IMWUT 2025. (1/n)

Paper: bit.ly/4fjdEJN

Demo Video: bit.ly/40KXtPy

01.08.2025 03:25
