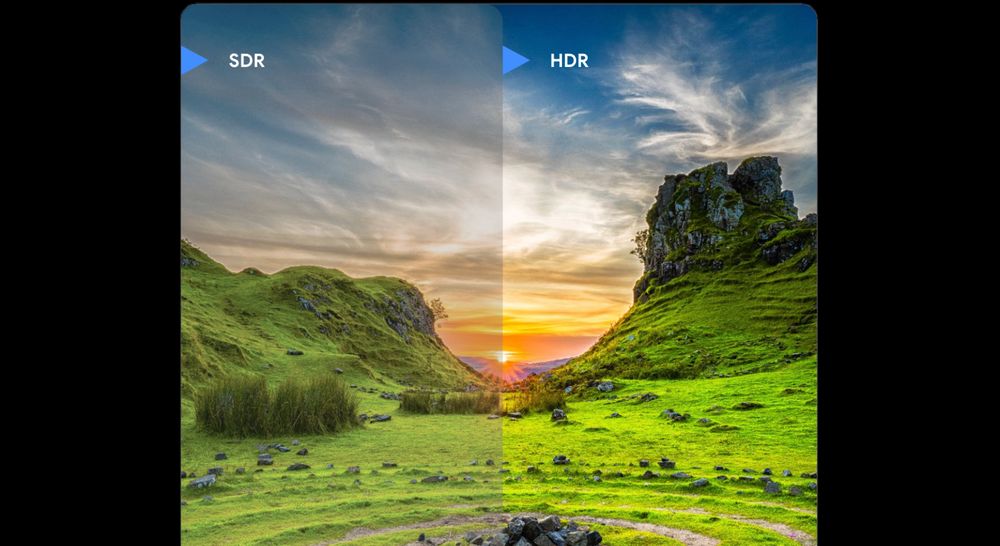

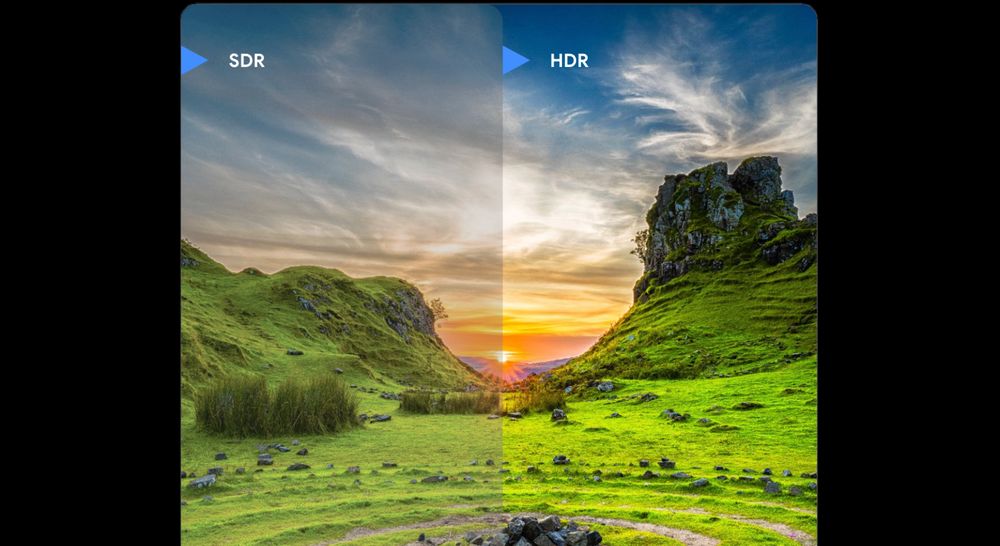

# What is HDR?

_Posted by John Reck – Software Engineer_

For Android developers, delivering exceptional visual experiences is a continuous goal. High Dynamic Range (HDR) unlocks new possibilities, offering the potential for more vibrant and immersive content. Technologies like Ultra HDR on Android are particularly compelling, providing the benefits of HDR displays while maintaining crucial backwards compatibility with SDR displays. On Android, you can use HDR for both video and images.

Over the years, the term HDR has been used to signify a number of related, but ultimately distinct, visual fidelity features. Users encounter it in camera features (exposure fusion) and as a marketing term for TVs and monitors (“HDR capable”). This conflates distinct features such as wider color gamuts, increased bit depth, or enhanced contrast with HDR itself.

From an Android Graphics perspective, HDR primarily signifies **higher peak brightness capability that extends beyond the conventional Standard Dynamic Range**. Other perceived benefits often derive from standards such as HDR10 or Dolby Vision, which also specify wider color spaces, higher bit depths, and particular transfer functions.

In this article, we’ll establish the foundational color principles, then address common myths, clarify HDR’s role in the rendering pipeline, and examine how Android’s display technologies and APIs enable HDR experiences.

## The components of color

Understanding HDR begins with defining the three primary components that form the displayed volume of color: bit depth, transfer function, and color gamut. These describe the precision, scaling, and range of the color volume, respectively.

While a color model defines the format for encoding pixel values (e.g., RGB, YUV, HSL, CMYK, XYZ), RGB is typically assumed in a graphics context. The combination of a color model, a color gamut, and a transfer function constitutes a color space. Examples include sRGB, Display P3, Adobe RGB, BT.2020, or BT.2020 HLG. Numerous combinations of color gamut and transfer function are possible, leading to a variety of color spaces.
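As a rough sketch of this decomposition, the snippet below models an RGB color space as a gamut plus a transfer function. The `ColorSpace` type, its string labels, and `differs_by` are hypothetical and exist only for illustration; Android's own `android.graphics.ColorSpace.Rgb` class is built from the same ingredients (primaries, white point, and transfer function).

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class ColorSpace:
    """An RGB color space as described above: a gamut plus a transfer function."""
    name: str
    gamut: str     # primaries and white point: bound the range of colors
    transfer: str  # transfer function: how stored values map to luminance

SRGB = ColorSpace("sRGB", gamut="BT.709 primaries, D65", transfer="sRGB")
DISPLAY_P3 = ColorSpace("Display P3", gamut="P3 primaries, D65", transfer="sRGB")
BT2020_HLG = ColorSpace("BT.2020 HLG", gamut="BT.2020 primaries, D65", transfer="HLG")
BT2020_PQ = ColorSpace("BT.2020 PQ", gamut="BT.2020 primaries, D65", transfer="PQ")

def differs_by(a, b):
    """List which components two color spaces differ in."""
    return [part for part in ("gamut", "transfer") if getattr(a, part) != getattr(b, part)]

print(differs_by(SRGB, DISPLAY_P3))       # ['gamut']
print(differs_by(BT2020_HLG, BT2020_PQ))  # ['transfer']
```

sRGB and Display P3 share a transfer function and differ only in gamut, while the two BT.2020 variants share a gamut and differ only in transfer function, which is why the same gamut name can appear in several distinct color spaces.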

_Components of color_

#### **Bit Depth**

Bit depth defines the precision of color representation. A higher bit depth allows for finer gradation between color values. In modern graphics, bit depth typically refers to bits per channel (e.g., an 8-bit image uses 8 bits for each red, green, blue, and optionally alpha channel).

Crucially, bit depth does not determine the overall range of colors (minimum and maximum values) an image can represent; this is set by the color gamut and, in HDR, the transfer function. Instead, increasing bit depth provides more discrete steps within that defined range, resulting in smoother transitions and reduced visual artifacts such as banding in gradients.

_Gradient comparison: 5-bit and 8-bit_

Although 8-bit is among the most widely used formats, it’s not the only option. RAW images can be captured at 10, 12, 14, or 16 bits. PNG supports 16 bits. Games frequently use 16-bit floating point (FP16) rather than integer formats for intermediate render buffers. Modern GPU APIs like Vulkan even support RGBA formats with 64 bits per channel, in both integer and floating-point varieties, for up to 256 bits per pixel.
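A minimal sketch of the earlier point that bit depth changes the number of steps, not the range: it quantizes a smooth 0 to 1 ramp (standing in for a gradient) at several bit depths. The helper names are hypothetical, and the ramp is normalized so that different bit depths can be compared directly.

```python
def quantize(value, bits):
    """Snap a normalized value in [0, 1] to the nearest code of an unsigned integer format."""
    max_code = (1 << bits) - 1
    code = round(min(max(value, 0.0), 1.0) * max_code)
    return code / max_code  # normalize back to [0, 1] so bit depths are comparable

def describe_gradient(bits, samples=10_000):
    """Quantize a smooth 0..1 ramp; report its range and how many distinct steps survive."""
    ramp = [i / (samples - 1) for i in range(samples)]
    levels = sorted({quantize(v, bits) for v in ramp})
    return {"min": levels[0], "max": levels[-1], "steps": len(levels),
            "step_size": levels[1] - levels[0]}

# Same range at every bit depth; only the number (and size) of the steps changes.
for bits in (5, 8, 10):
    print(bits, describe_gradient(bits))
```

Every bit depth spans exactly 0.0 to 1.0; the 5-bit ramp just gets there in 32 coarse steps, which is what shows up as banding.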

#### **Transfer Function**

A transfer function defines the mathematical relationship between a pixel’s stored numerical value and its final displayed luminance or color. In other words, the transfer function describes how to interpret the steps between the minimum and maximum values. This function is essential because the human visual system's response to light intensity is non-linear: we are more sensitive to changes in luminance at low light levels than at high light levels. A linear mapping from stored values to display luminance would therefore use the available bits inefficiently, spending more precision than we can perceive in the brighter region and too little in the darker region. The transfer function compensates for this non-linearity by distributing code values more evenly with respect to human perception.

While some transfer functions are linear, most employ complex curves or piecewise functions to optimize image quality for specific displays or viewing conditions. sRGB, Gamma 2.2, HLG, and PQ are common examples, each prioritizing bit allocation differently across the luminance range.
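To see how a transfer function redistributes precision, this sketch counts how many of the 256 codes in an 8-bit encoding decode to less than 1% of white under a linear mapping, a pure 2.2 power curve, and the piecewise sRGB curve. The 1% threshold is an arbitrary choice for illustration, and the function names are hypothetical.

```python
def linear_eotf(v):
    return v

def gamma22_eotf(v):
    return v ** 2.2

def srgb_eotf(v):
    # sRGB decoding: a short linear toe near black, then a 2.4 power segment.
    if v <= 0.04045:
        return v / 12.92
    return ((v + 0.055) / 1.055) ** 2.4

def codes_below(eotf, threshold, bits=8):
    """Count integer codes whose decoded luminance (relative to white) is below threshold."""
    max_code = (1 << bits) - 1
    return sum(1 for code in range(max_code + 1) if eotf(code / max_code) < threshold)

for name, eotf in [("linear", linear_eotf), ("gamma 2.2", gamma22_eotf), ("sRGB", srgb_eotf)]:
    print(f"{name:9s}: {codes_below(eotf, 0.01)} of 256 codes below 1% of white")
```

The linear encoding leaves only a handful of codes for that dark region, while gamma 2.2 and sRGB devote roughly eight to ten times as many, which is the perceptual redistribution described above.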

#### **Color Gamut**

Color gamut refers to the entire range of colors that a particular color space or device can accurately reproduce. It is typically a subset of the visible color spectrum, which encompasses all the colors that the human eye can perceive. Each color space (e.g., sRGB, Display P3, BT.2020) defines its own unique gamut, establishing the boundaries for color representation.

A wider gamut signifies that the color space can display a greater variety of colors, leading to richer and more vibrant images. However, simply having a larger gamut doesn't guarantee better color accuracy or a more vibrant result. The device or medium used to display the colors must also be capable of reproducing the full range of the gamut. When a display encounters colors outside its reproducible gamut, the typical handling method is clipping. Clipping preserves in-gamut colors accurately, whereas attempting to scale the whole gamut to fit may produce unpleasant results, especially in regions where human vision is most sensitive, such as skin tones.
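A minimal sketch of gamut clipping, assuming the input is linear-light Display P3 (transfer function already removed) and using the commonly published, approximate P3-to-sRGB conversion matrix. The function name is hypothetical. The most saturated P3 red lands outside [0, 1] in sRGB and is clipped per channel, while in-gamut colors such as neutral grays pass through unchanged.

```python
# Approximate, commonly published matrix from linear Display P3 to linear sRGB.
# Both spaces use a D65 white point, so each row sums to ~1 and grays are unchanged.
P3_TO_SRGB = [
    [ 1.2249401, -0.2249404,  0.0000000],
    [-0.0420569,  1.0420571,  0.0000000],
    [-0.0196376, -0.0786361,  1.0982735],
]

def p3_to_srgb_clipped(rgb_linear):
    """Convert linear P3 to linear sRGB, then clip each channel to the sRGB range."""
    converted = [sum(m * c for m, c in zip(row, rgb_linear)) for row in P3_TO_SRGB]
    clipped = [min(max(c, 0.0), 1.0) for c in converted]
    return converted, clipped

raw, shown = p3_to_srgb_clipped([1.0, 0.0, 0.0])  # the most saturated P3 red
print(raw)    # components outside [0, 1]: not representable in sRGB
print(shown)  # [1.0, 0.0, 0.0] after clipping
```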

## HDR myths and realities

With these basic color principles established, it’s now time to evaluate some of the common claims about HDR and how they apply in a general graphics context.

### Claim: HDR offers more vibrant colors

This claim comes from HDR video typically using the BT.2020 color space, which does indeed have a wide color gamut. However, there are several problems with this claim as a blanket statement.

The first is that images and graphics have been able to use wider color gamuts, such as Display P3 or Adobe RGB, for quite a long time now. This is not a unique advancement that was coupled to HDR. In JPEGs, for example, the gamut is defined by the ICC profile, which dates back to the early 1990s, although widespread adoption of ICC profile handling is somewhat more recent. Similarly, on the graphics rendering side, the use of wider color spaces is fully decoupled from whether or not HDR is being used.

The second is that not all HDR videos use such a wide gamut at all. Although HDR10 specifies the use of BT.2020, other HDR formats have since been created that do not use such a wide gamut.

The biggest issue, though, is one of capture and display. Just because the format allows for the BT.2020 color gamut does not mean that the entire gamut is actually usable in practice. For example, current Dolby Vision mastering guidelines only require 99% coverage of the P3 gamut. This means that even for high-end professional content, authoring beyond Display P3 is not expected to be possible. Similarly, the vast majority of consumer displays today can only display the sRGB or Display P3 color gamut. Given that the typical recommendation for out-of-gamut colors is to clip them, this means that even though HDR10 allows for up to the BT.2020 gamut, the widest gamut in practice is still going to be P3.

Thus, this claim should really be considered something offered by HDR video profiles when compared to SDR video profiles specifically, and SDR videos could use wider gamuts if desired without using an HDR profile.

### Claim: HDR offers more contrast / better black detail

One benefit sometimes claimed for HDR is darker blacks (e.g., Dolby Vision Demo #3 - Core Universe - 4K HDR or “Dark scenes come alive with darker darks”) or more detail in dark regions. This is even reflected in BT.2390: “HDR also allows for lower black levels than traditional SDR, which was typically in the range between 0.1 and 1.0 cd/m² for cathode ray tubes (CRTs) and is now in the range of 0.1 cd/m² for most standard SDR liquid crystal displays (LCDs).” However, in reality every display already shows SDR black as the darkest black it is physically capable of producing. Thus there is no difference between HDR and SDR in how dark the image can get: both bottom out at the same black level on the same display.

As for contrast ratio, since it is the ratio between the brightest white and the darkest black, it is overwhelmingly influenced by how dark a display can get. With the prevalence of OLED displays, particularly in the mobile space, SDR and HDR end up with the same contrast ratio, as both have essentially perfect black levels, giving them effectively infinite contrast ratios.
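The arithmetic behind this is simple enough to sketch. The luminance figures below are hypothetical examples, not measurements of any particular display, and `contrast_ratio` is an illustrative helper.

```python
import math

def contrast_ratio(white_nits, black_nits):
    """Ratio of the brightest white to the darkest black a display can produce."""
    if black_nits <= 0:
        return math.inf  # pixels that switch fully off (as on OLED) give an unbounded ratio
    return white_nits / black_nits

# Hypothetical LCD with a 0.5-nit black level: raising white only scales a finite ratio.
print(contrast_ratio(500, 0.5))   # 1000.0
print(contrast_ratio(2000, 0.5))  # 4000.0
# Hypothetical OLED: SDR white and HDR peak both sit on the same true-black floor.
print(contrast_ratio(500, 0.0), contrast_ratio(2000, 0.0))  # inf inf
```

A brighter white raises a finite ratio, but only the black level can drive it toward infinity, which is why SDR and HDR on the same OLED panel end up identical.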

The PQ transfer function does allocate more bits to the dark region, so in theory it can convey better black detail. However, this is a unique aspect of PQ rather than a feature of HDR. HLG is increasingly the more common HDR format, as it is preferred by mobile cameras as well as several high-end cameras. And while PQ content may contain this detail, that doesn’t mean the HDR display can necessarily show it anyway, as discussed in Display Realities.

### Claim: HDR offers higher bit depth

This claim comes from HDR10 and some, but not all, Dolby Vision profiles using 10 or 12 bits for the video stream. As with more vibrant colors, this is really just an aspect of particular video profiles rather than something HDR itself inherently provides or is coupled to. The use of 10 bits or more is otherwise not uncommon in imaging, particularly in high-end photography, with RAW and TIFF image formats capable of 10, 12, 14, or 16 bits. Similarly, PNG supports 16 bits, although that is rarely used.

### Claim: HDR offers higher peak brightness

This, then, is all that HDR really is. But what does “higher peak brightness” really mean? After all, SDR displays were pushing ever-increasing brightness levels before HDR was significant, particularly for sunlight viewing. And even without that, what is the difference between “HDR” and just “SDR with the brightness slider cranked up”? The answer is that we define “HDR” as having a brightness range bigger than SDR, and we think of SDR as the range that autobrightness drives to be comfortably readable in the current ambient conditions. Thus we define HDR in terms of things like “HDR headroom” or “HDR/SDR ratio” to indicate that it’s a floating region relative to SDR. This makes brightness policies easier to reason about. However, it does complicate the interaction with traditional HDR such as that used in video, specifically HLG and PQ content.
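A minimal sketch of the HDR/SDR ratio idea, using hypothetical numbers: a panel with a 2000-nit peak and an autobrightness-driven SDR white that rises with ambient light. As SDR white climbs, the remaining headroom shrinks toward 1.0, meaning no HDR at all. On Android 14 and higher the platform reports the current value through `Display.getHdrSdrRatio()`; the Python helper here is hypothetical and only illustrates the arithmetic.

```python
import math

def hdr_headroom(display_peak_nits, sdr_white_nits):
    """Return the HDR/SDR ratio and the same headroom expressed in stops (log2)."""
    ratio = max(display_peak_nits / sdr_white_nits, 1.0)  # never below SDR itself
    return ratio, math.log2(ratio)

# Hypothetical 2000-nit panel as ambient light (and therefore SDR white) increases.
for sdr_white in (100, 400, 2000):
    ratio, stops = hdr_headroom(2000, sdr_white)
    print(f"SDR white {sdr_white:4d} nits -> headroom {ratio:4.1f}x ({stops:.2f} stops)")
```

The same panel offers a 20x headroom in a dim room and none in direct sunlight, which is why the headroom is treated as a floating region rather than a fixed property of the display.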

#### **PQ/HLG transfer functions**

PQ and HLG are the two most common approaches to HDR video content, and their transfer functions embody different concepts of what “HDR” is. PQ, published as SMPTE ST 2084:2014, is defined in terms of absolute luminance (nits) on the display. It encodes from 0 to 10,000 nits and expects content to be mastered for a particular reference viewing environment. HLG takes a different approach, using a gamma-like curve for the lower part of the range before switching to a logarithmic curve for the brighter portion. It has a claimed nominal peak brightness of 1000 nits in the reference environment, although, unlike PQ, it is not defined in absolute luminance terms.
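For reference, here is a sketch of the two encodings, transcribed from the published constants: the PQ inverse EOTF from SMPTE ST 2084 (absolute nits in, signal out) and the HLG OETF from ITU-R BT.2100 (relative scene light in, signal out). The function names are our own, and out-of-range inputs are simply clamped rather than validated.

```python
import math

# SMPTE ST 2084 (PQ) constants.
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32

def pq_encode(nits):
    """Absolute luminance (0..10,000 nits) -> PQ signal in [0, 1]."""
    y = min(max(nits / 10000.0, 0.0), 1.0)
    ym = y ** M1
    return ((C1 + C2 * ym) / (1 + C3 * ym)) ** M2

# ITU-R BT.2100 HLG OETF constants.
A = 0.17883277
B = 1 - 4 * A
C = 0.5 - A * math.log(4 * A)

def hlg_encode(scene_linear):
    """Relative scene light in [0, 1] -> HLG signal in [0, 1]; no absolute nits involved."""
    e = min(max(scene_linear, 0.0), 1.0)
    if e <= 1 / 12:
        return math.sqrt(3 * e)          # square-root (gamma-like) lower segment
    return A * math.log(12 * e - B) + C  # logarithmic upper segment

print(round(pq_encode(100), 3))      # 0.508: 100 nits already uses about half the signal
print(round(hlg_encode(1 / 12), 3))  # 0.5: where the two HLG segments meet
```

Note the asymmetry in the signatures: `pq_encode` takes nits, while `hlg_encode` has no notion of absolute luminance at all. The PQ result also shows its dark-heavy bit allocation, with roughly half the signal range spent below 100 nits.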

Industry-wide specifications have recently formalized the brightness range of both PQ- and HLG-encoded content in relation to SDR. ITU-R BT.2408-8 defines the reference white level for graphics to be 203 nits. ISO/TS 22028-5 and ISO/PRF 21496-1 have followed suit; 21496-1 in particular defines HDR headroom in terms of nominal peak luminance, relative to a diffuse white luminance of 203 nits.
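A small sketch of what anchoring to 203 nits means in practice: once reference white is fixed, any nominal peak converts into a headroom figure. The helper names are hypothetical, and the sketch reports both a linear ratio and log2 stops without claiming which form any particular specification uses.

```python
import math

# ITU-R BT.2408 reference white for graphics, in nits (cd/m²).
REFERENCE_WHITE_NITS = 203.0

def relative_to_reference_white(nits):
    """Express an absolute luminance as a multiple of the 203-nit reference white."""
    return nits / REFERENCE_WHITE_NITS

def content_headroom(nominal_peak_nits):
    """Headroom of content with a given nominal peak, as a ratio and in stops (log2)."""
    ratio = relative_to_reference_white(nominal_peak_nits)
    return ratio, math.log2(ratio)

print(content_headroom(1000))   # HLG's nominal 1000-nit peak: about 4.93x, 2.3 stops
print(content_headroom(10000))  # PQ's 10,000-nit ceiling: about 49.3x, 5.6 stops
```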

The realities of modern displays, discussed below, as well as typical viewing environments, mean that traditional HDR video is almost never displayed as intended. A display’s HDR headroom may evaporate under bright viewing conditions, requiring on-the-fly tone mapping into SDR. Traditional HDR video encodes a fixed headroom, while modern displays have a dynamic headroom, resulting in vast differences in video quality even on the same display.
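To illustrate the fixed-versus-dynamic mismatch, the sketch below fits content with a fixed headroom into whatever headroom the display has at the moment. The curve is a hypothetical, deliberately simple rational curve in the spirit of Reinhard, not Android's tone mapper or the BT.2390 reference curve: values up to SDR white (1.0) pass through unchanged, and the HDR range is squeezed into the available headroom, or clipped to SDR when none is left.

```python
def tonemap(x, content_headroom, display_headroom):
    """Fit linear luminance x (1.0 = SDR white) from content_headroom into display_headroom.

    Hypothetical illustrative curve: values up to SDR white pass through unchanged, and
    [1, content_headroom] is compressed into [1, display_headroom] by a rational curve
    whose slope is 1 at SDR white, so there is no kink there.
    """
    x = max(x, 0.0)
    if content_headroom <= display_headroom:
        return min(x, display_headroom)  # the content already fits
    if display_headroom <= 1.0:
        return min(x, 1.0)               # no headroom left: clip to SDR white
    if x <= 1.0:
        return x                         # the SDR portion is preserved
    # Position within the content's HDR range: 0 at SDR white, 1 at its nominal peak.
    t = min((x - 1.0) / (content_headroom - 1.0), 1.0)
    # Curvature chosen so the curve starts with slope 1 and ends exactly at display_headroom.
    a = (content_headroom - 1.0) / (display_headroom - 1.0) - 1.0
    return 1.0 + (display_headroom - 1.0) * t * (1.0 + a) / (1.0 + a * t)

# Hypothetical: 1000-nit HLG content (about 4.93x over 203 nits) on displays with
# plenty of headroom, some headroom, and none.
for display_headroom in (8.0, 2.0, 1.0):
    print(display_headroom,
          [round(tonemap(x, 4.93, display_headroom), 2) for x in (0.5, 1.0, 2.0, 4.93)])
```

The same pixel values come out very differently as the display's headroom changes, which is the quality variation described above.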

### Display Realities

So far, most of the discussion around HDR has been from the perspective of the content. However, users consume content on a display, which has its own capabilities and, more importantly, limits. A high-end mobile display is likely to have characteristics such as gamma 2.2, a P3 gamut, and a peak brightness of around 2000 nits. If we then consider something like HDR10, there are mismatches in bit usage prioritization:

* PQ’s increased bit allocation at the lower ranges ends up being wasted

* The use of BT.2020 spends bits on parts of a gamut that will never be displayed

* Encoding up to 10,000 nits of brightness similarly reserves headroom that is never used
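To put a rough number on the last point, this sketch decodes every 10-bit PQ code value with the SMPTE ST 2084 EOTF and counts how many land above the 2000-nit peak assumed above. It assumes full-range codes (0 to 1023); video usually uses a narrower legal range, which shifts the exact count somewhat. The helper names are hypothetical.

```python
# SMPTE ST 2084 (PQ) constants.
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32

def pq_decode(signal):
    """PQ signal in [0, 1] -> absolute luminance in nits (the ST 2084 EOTF)."""
    p = signal ** (1 / M2)
    return 10000.0 * (max(p - C1, 0.0) / (C2 - C3 * p)) ** (1 / M1)

def share_of_codes_above(peak_nits, bits=10):
    """Count full-range codes that decode above peak_nits, and their share of all codes."""
    max_code = (1 << bits) - 1
    above = sum(1 for code in range(max_code + 1) if pq_decode(code / max_code) > peak_nits)
    return above, above / (max_code + 1)

print(share_of_codes_above(2000))  # about 17% of all 10-bit codes exceed a 2000-nit peak
```

On such a display, roughly one code value in six describes a brightness the panel can never produce.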

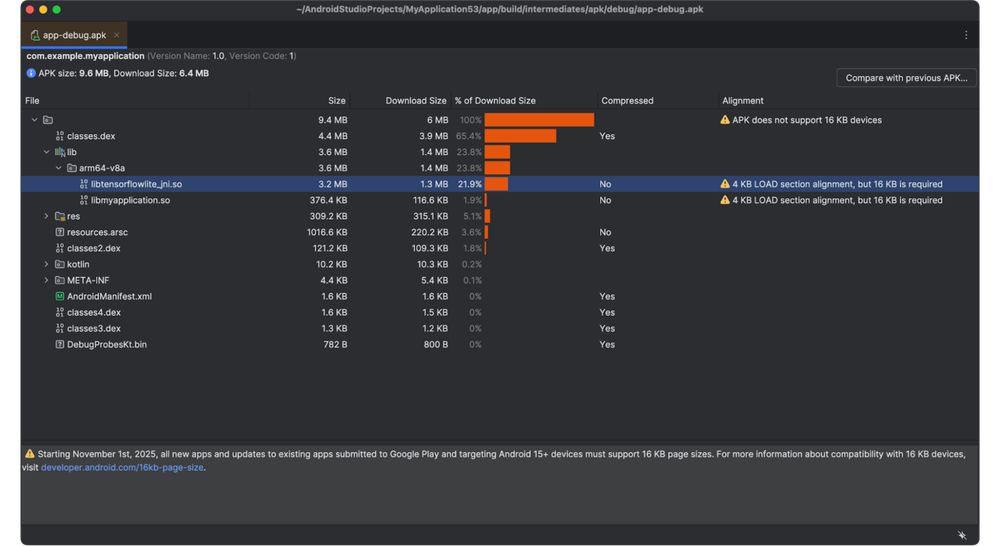

These mismatches are not inherently a problem, but they mean that as 10-bit displays become more common, the existing 10-bit HDR video profiles are unable to take advantage of the display’s full capabilities. HDR video profiles are thus simultaneously forward-looking and already unable to maximize a current 10-bit display’s capabilities. This is where technologies such as Ultra HDR, or gain maps in general, provide a compelling alternative. Despite sometimes using an 8-bit base image, the gain layer that transforms it to HDR is specialized to the content and its particular range needs, so it uses its bits more efficiently and the results still look stunning. And as the base image is upgraded to 10-bit with newer image formats such as AVIF, the effective bit usage becomes even better than that of typical HDR video codecs. These approaches therefore do not represent evolutionary stepping stones to “true HDR”; they are an improvement on HDR that also offers better backwards compatibility.

Similarly, Android’s UI toolkit still primarily uses the extendedRangeBrightness API in 8-bit space. Because the rendering is tailored to the specific display and current conditions, it is still possible to deliver a good HDR experience despite the use of RGBA_8888.
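The gain-map approach described above can be sketched per pixel. This is a simplified, single-channel sketch loosely modeled on the gain-map math in the Ultra HDR image format specification; the function and parameter names are our own, and the exact metadata definitions should be taken from the specification itself. The key property is that the same SDR base and gain map render differently depending on the display's current headroom, which is what lets gain-map images adapt where fixed-headroom video cannot.

```python
import math

def gain_map_weight(display_headroom, capacity_min, capacity_max):
    """Fraction of the gain map to apply for the display's current HDR/SDR ratio.

    Interpolates in log2 space between capacity_min (apply none) and capacity_max
    (apply all); assumes capacity_max > capacity_min.
    """
    w = (math.log2(display_headroom) - math.log2(capacity_min)) / (
        math.log2(capacity_max) - math.log2(capacity_min))
    return min(max(w, 0.0), 1.0)

def apply_gain_map(sdr_linear, gain, min_boost, max_boost, weight,
                   gamma=1.0, offset_sdr=0.0, offset_hdr=0.0):
    """Reconstruct one linear HDR channel from a linear SDR value and a gain sample in [0, 1]."""
    g = gain ** (1.0 / gamma)
    # The gain sample selects a boost between min_boost and max_boost, in log2 space.
    log_boost = math.log2(min_boost) * (1.0 - g) + math.log2(max_boost) * g
    return (sdr_linear + offset_sdr) * 2.0 ** (log_boost * weight) - offset_hdr

# Hypothetical metadata: boosts from 1x to 4x, fully applied once the display has 4x headroom.
for headroom in (1.0, 2.0, 4.0):
    w = gain_map_weight(headroom, capacity_min=1.0, capacity_max=4.0)
    print(headroom, apply_gain_map(0.5, gain=1.0, min_boost=1.0, max_boost=4.0, weight=w))
```

With no headroom the SDR base value is shown unchanged, and the boost scales smoothly up to the full 4x as headroom grows.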

## Unlocking HDR on Android: Next steps

High Dynamic Range (HDR) offers an advancement in visual fidelity for Android developers, moving beyond the traditional constraints of Standard Dynamic Range (SDR) by enabling higher peak brightness.

By understanding the core components of color – bit depth, transfer function, and color gamut – and debunking common myths, developers can leverage technologies like Ultra HDR to deliver truly immersive experiences that are both visually stunning and backward compatible.

In our next article, we'll delve into the nuances of HDR and user intent, exploring how to optimize your content for diverse display capabilities and viewing environments.