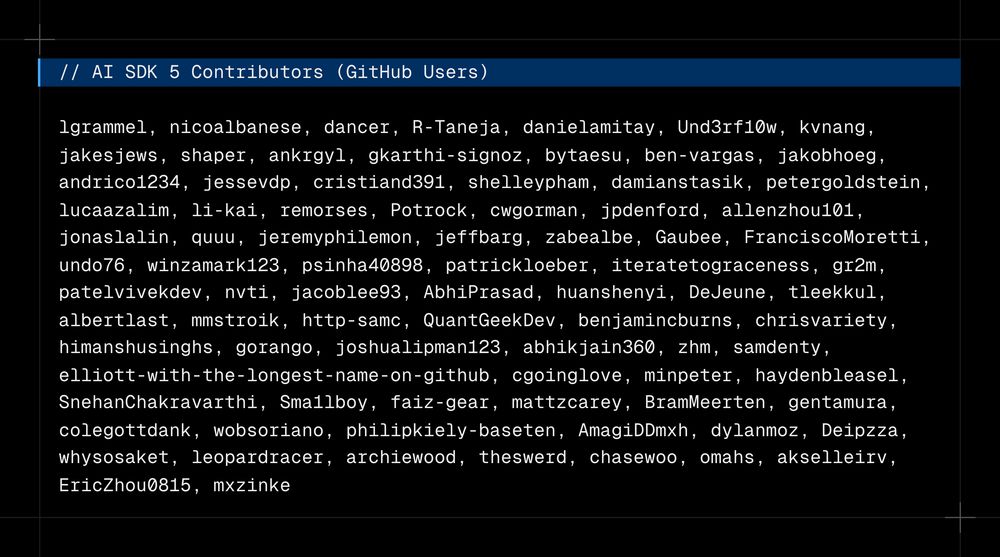

Thank you to everyone who contributed to AI SDK 5.

01.08.2025 11:00 — 👍 3 🔁 0 💬 0 📌 0

@sdk.vercel.ai

The AI Toolkit for TypeScript.

Read the full AI SDK 5 announcement:

vercel.com/blog/ai-sdk-5

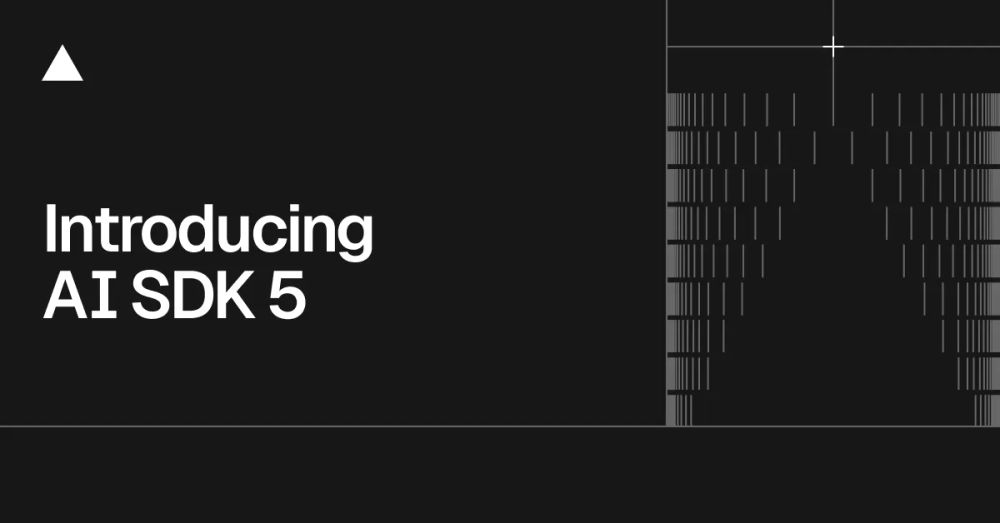

```ts
import { generateObject } from "ai";
import { z } from "zod/v4";

const result = await generateObject({
  model: "openai/gpt-4o",
  schema: z.object({
    recipe: z.object({
      name: z.string(),
      ingredients: z.array(z.string()),
      steps: z.array(z.string()),
    }),
  }),
  prompt: "Generate a lasagna recipe.",
});
```

AI SDK 5 works with Zod 4 and Zod Mini, giving you faster validation, better TypeScript performance, and a smaller bundle size.

31.07.2025 16:11 — 👍 1 🔁 0 💬 1 📌 0

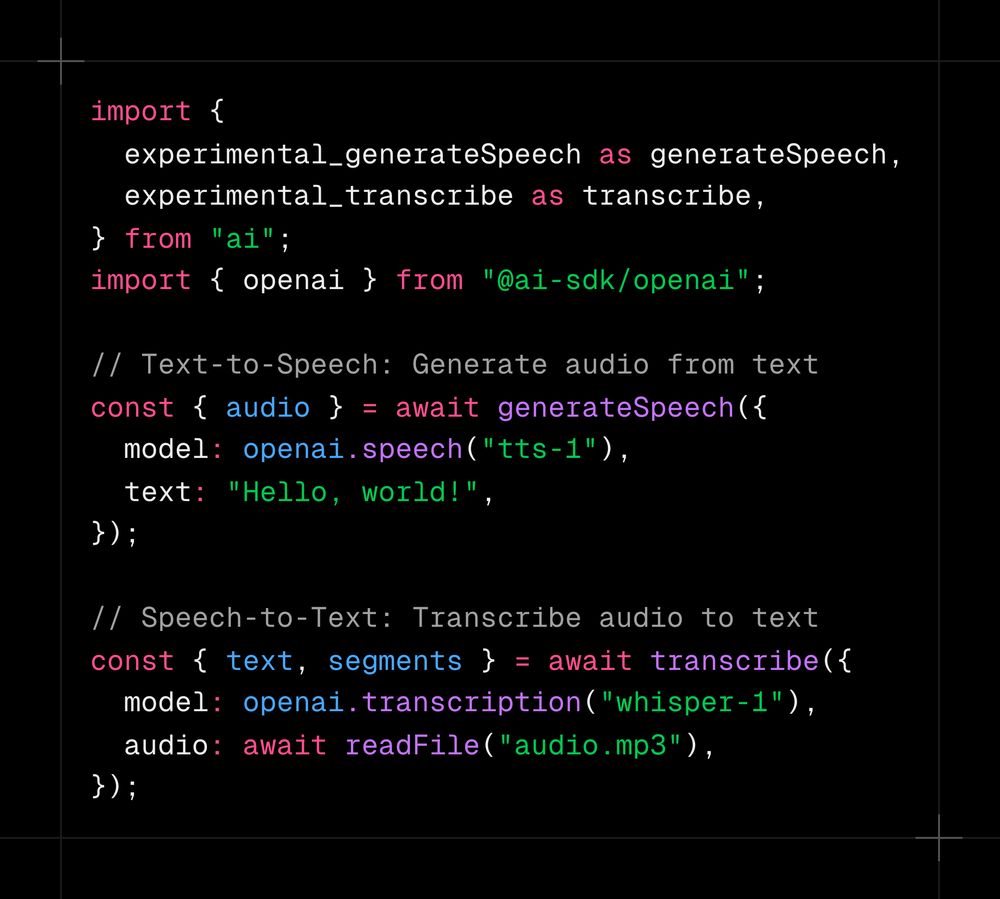

```ts
import {
  experimental_generateSpeech as generateSpeech,
  experimental_transcribe as transcribe,
} from "ai";
import { openai } from "@ai-sdk/openai";
import { readFile } from "fs/promises";

// Text-to-Speech: Generate audio from text
const { audio } = await generateSpeech({
  model: openai.speech("tts-1"),
  text: "Hello, world!",
});

// Speech-to-Text: Transcribe audio to text
const { text, segments } = await transcribe({
  model: openai.transcription("whisper-1"),
  audio: await readFile("audio.mp3"),
});
```

AI SDK 5 extends our unified provider abstraction to speech. Just as we've done for text and image generation, we're bringing the same consistent, type-safe interface to both speech generation and transcription.

31.07.2025 16:11 — 👍 0 🔁 0 💬 1 📌 0

![image](https://cdn.bsky.app/img/feed_thumbnail/plain/did:plc:zojotgllpw7iczompptlesx6/bafkreic6aq4qiajnv7iwlkqm2muvt7qpwc25wh5yfpg75naqk2lofrug3i@jpeg)

```ts
import { streamText, hasToolCall, stepCountIs } from "ai";

// `messages`, the four tools, and `hasToolCallInLastStep` are not defined in
// this snippet; they are assumed to be declared elsewhere in the app.
const result = streamText({
  model: "openai/gpt-4o",
  messages,
  tools: { getArtists, getAlbums, getSongs, playSong },
  prepareStep({ steps }) {
    // After a getArtists call, force the next step to call getAlbums
    if (hasToolCallInLastStep(steps, "getArtists")) {
      return {
        toolChoice: { type: "tool", toolName: "getAlbums" },
      };
    }
  },
  // Stop after 5 steps or once playSong has been called
  stopWhen: [stepCountIs(5), hasToolCall("playSong")],
});
```

AI SDK 5 brings all-new agentic controls.

stopWhen transforms single-step calls into multi-step agents by running requests in a tool-calling loop until conditions are met.

prepareStep gives you deterministic control over each step: configure model, context, tools, and more.
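To make the loop semantics concrete, here is a minimal, self-contained sketch of a tool-calling loop with stop conditions and a per-step hook. It is not the AI SDK's implementation and does not import the SDK: `runLoop`, `fakeModel`, `Step`, and `StepSettings` are hypothetical, and the local `stepCountIs` and `hasToolCall` functions only mirror the names used above.

```typescript
// Conceptual sketch only: hypothetical types and helpers, not the AI SDK's code.
type Step = { toolCalls: string[] };
type StopCondition = (steps: Step[]) => boolean;
type StepSettings = { forcedTool?: string };

// Local stand-ins that mirror the names of the SDK helpers.
const stepCountIs = (n: number): StopCondition => (steps) => steps.length >= n;
const hasToolCall = (name: string): StopCondition => (steps) =>
  steps[steps.length - 1]?.toolCalls.includes(name) ?? false;

// Hypothetical "model": calls the forced tool if one is set, else a default tool.
function fakeModel(settings: StepSettings): Step {
  return { toolCalls: [settings.forcedTool ?? "getArtists"] };
}

function runLoop(options: {
  stopWhen: StopCondition[];
  prepareStep: (steps: Step[]) => StepSettings | undefined;
}): Step[] {
  const steps: Step[] = [];
  do {
    // The hook sees all previous steps and may adjust the next one.
    const settings = options.prepareStep(steps) ?? {};
    steps.push(fakeModel(settings));
    // Keep looping until any stop condition matches.
  } while (!options.stopWhen.some((stop) => stop(steps)));
  return steps;
}

const steps = runLoop({
  stopWhen: [stepCountIs(5), hasToolCall("playSong")],
  prepareStep(previous) {
    const last = previous[previous.length - 1];
    if (last?.toolCalls.includes("getArtists")) return { forcedTool: "getAlbums" };
    if (last?.toolCalls.includes("getAlbums")) return { forcedTool: "playSong" };
    return undefined;
  },
});

console.log(steps.map((s) => s.toolCalls[0]).join(" -> "));
```

In this toy run the loop ends on the third step because the `playSong` condition matches before the step limit is reached.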

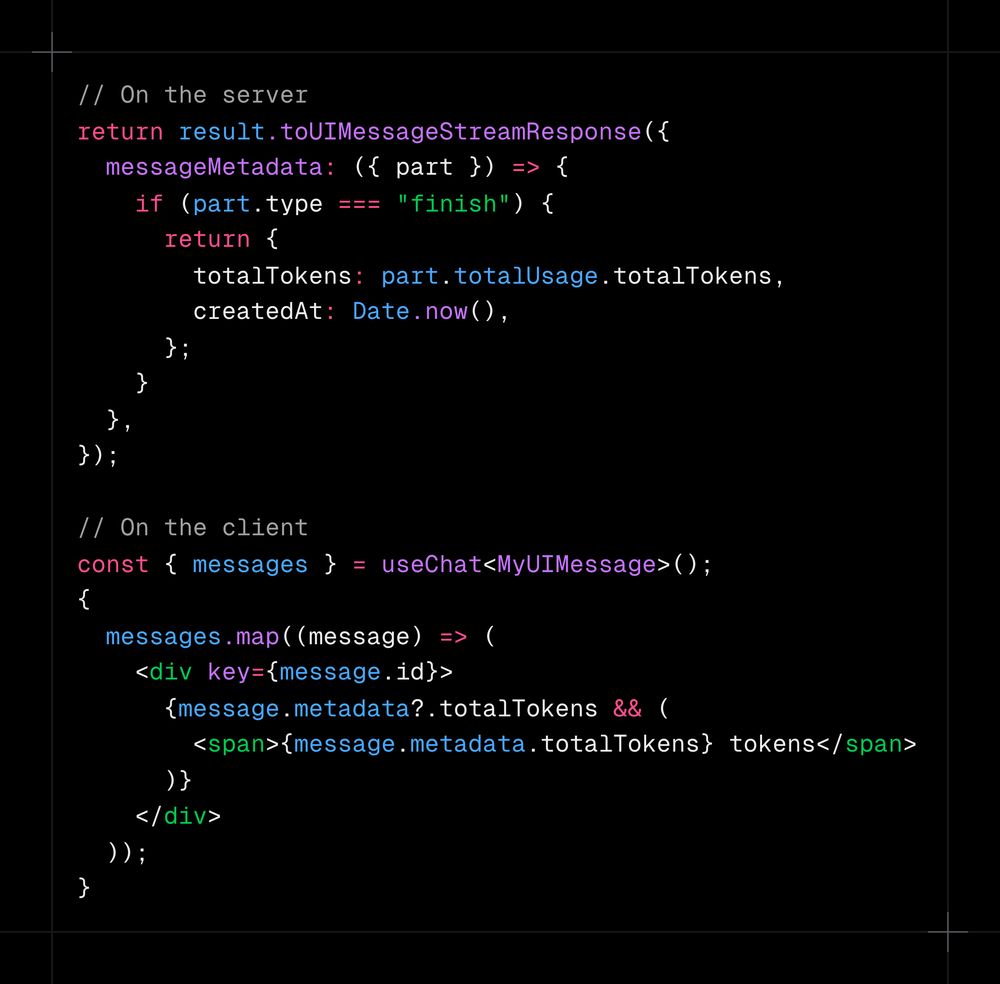

```tsx
// On the server
return result.toUIMessageStreamResponse({
  messageMetadata: ({ part }) => {
    if (part.type === "finish") {
      return {
        totalTokens: part.totalUsage.totalTokens,
        createdAt: Date.now(),
      };
    }
  },
});

// On the client
const { messages } = useChat<MyUIMessage>();

{
  messages.map((message) => (
    <div key={message.id}>
      {message.metadata?.totalTokens && (
        <span>{message.metadata.totalTokens} tokens</span>
      )}
    </div>
  ));
}
```

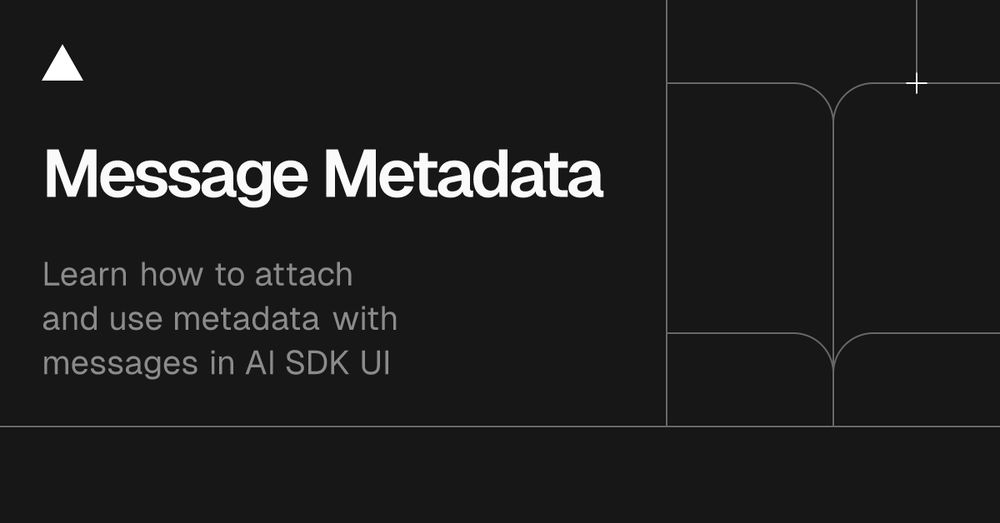

For information about a message, such as a timestamp, model ID, or token count, you can attach type-safe metadata.

31.07.2025 16:11 — 👍 0 🔁 0 💬 1 📌 0

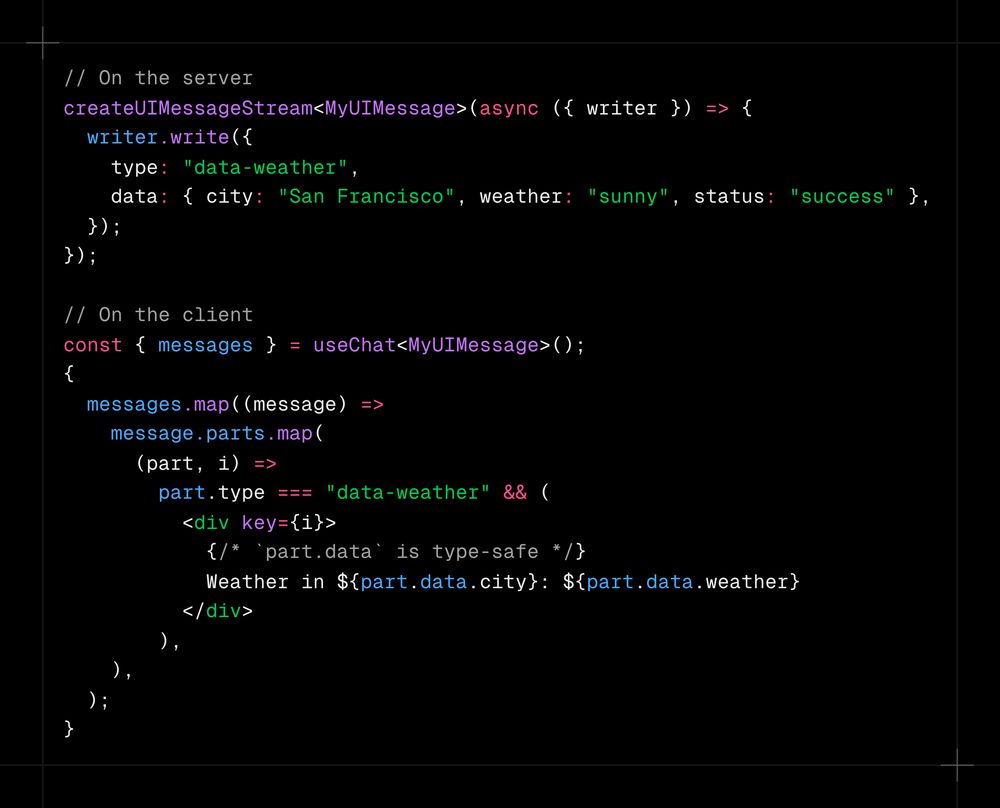

```tsx
// On the server
createUIMessageStream<MyUIMessage>({
  execute: async ({ writer }) => {
    writer.write({
      type: "data-weather",
      data: { city: "San Francisco", weather: "sunny", status: "success" },
    });
  },
});

// On the client
const { messages } = useChat<MyUIMessage>();

{
  messages.map((message) =>
    message.parts.map(
      (part, i) =>
        part.type === "data-weather" && (
          <div key={i}>
            {/* `part.data` is type-safe */}
            Weather in {part.data.city}: {part.data.weather}
          </div>
        ),
    ),
  );
}
```

Data parts provide a first-class way to stream custom, type-safe data from the server to the client, ensuring your code remains maintainable as your application grows.
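The type safety described here rests on ordinary TypeScript discriminated unions. The sketch below shows the narrowing idea in isolation; `TextPart`, `WeatherPart`, and `renderPart` are hypothetical names made up for illustration and are not the AI SDK's own type definitions.

```typescript
// Conceptual sketch: hypothetical part types, not the AI SDK's definitions.
type TextPart = { type: "text"; text: string };
type WeatherPart = {
  type: "data-weather";
  data: { city: string; weather: string; status: "loading" | "success" };
};
type Part = TextPart | WeatherPart;

function renderPart(part: Part): string {
  switch (part.type) {
    case "text":
      return part.text;
    case "data-weather":
      // Checking `type` narrows `part.data` to the weather payload.
      return `Weather in ${part.data.city}: ${part.data.weather}`;
  }
}

const parts: Part[] = [
  { type: "text", text: "Here is the forecast." },
  {
    type: "data-weather",
    data: { city: "San Francisco", weather: "sunny", status: "success" },
  },
];

const rendered = parts.map(renderPart);
console.log(rendered);
```

Because the `type` field is a string literal, a misspelled field such as `part.data.town` would be rejected by the compiler rather than failing at runtime.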

31.07.2025 16:11 — 👍 0 🔁 0 💬 1 📌 0

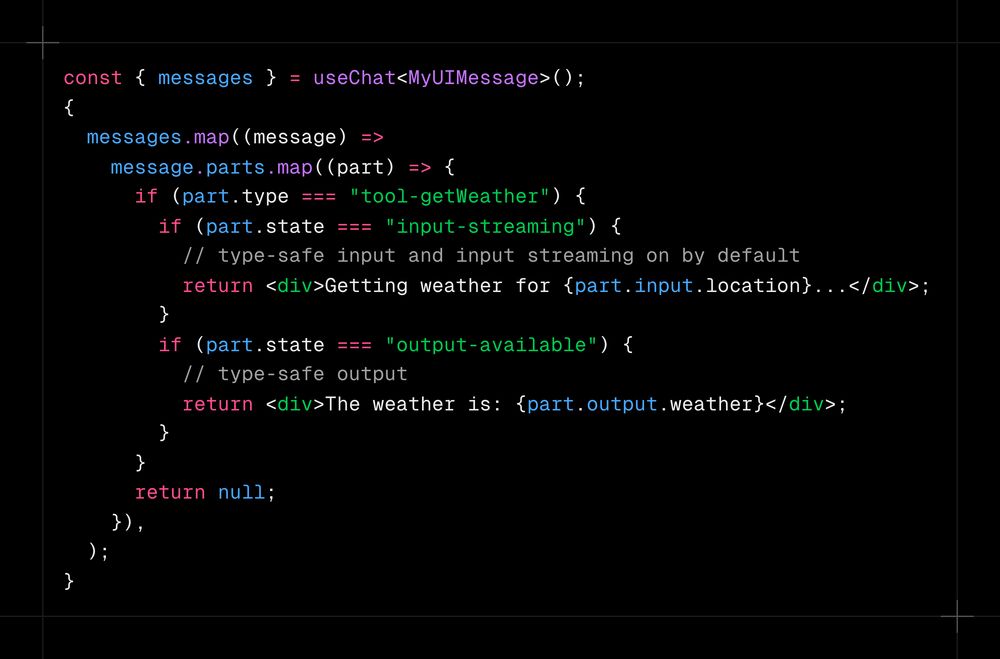

```tsx
const { messages } = useChat<MyUIMessage>();

{
  messages.map((message) =>
    message.parts.map((part) => {
      if (part.type === "tool-getWeather") {
        if (part.state === "input-streaming") {
          // type-safe input and input streaming on by default;
          // the input may still be incomplete while it streams
          return <div>Getting weather for {part.input?.location}...</div>;
        }
        if (part.state === "output-available") {
          // type-safe output
          return <div>The weather is: {part.output.weather}</div>;
        }
      }
      return null;
    }),
  );
}
```

The chat abstraction has been redesigned to support type-safety, customizable transports, flexible state management, and more.

31.07.2025 16:11 — 👍 1 🔁 0 💬 1 📌 0

AI SDK 5

Introducing type-safe chat, agentic loop controls, data parts, speech generation and transcription, Zod 4 support, global provider, and raw request access.

Documentation:

v5.ai-sdk.dev/docs/ai-sdk...

```tsx
// Server: Send metadata about the message
const result = streamText({
  /* ... */
});

return result.toUIMessageStreamResponse({
  messageMetadata: ({ part }) => {
    if (part.type === "finish") {
      return {
        totalTokens: part.totalUsage.totalTokens,
      };
    }
  },
});

// Client: Access metadata via message.metadata
{
  messages.map((message) => (
    <div key={message.id}>
      {message.metadata?.totalTokens && (
        <span>{message.metadata.totalTokens} tokens</span>
      )}
    </div>
  ));
}
```

Learn how to use message metadata with useChat to track timestamps, token usage, and more.

24.07.2025 14:10 — 👍 2 🔁 0 💬 1 📌 0

Documentation:

v5.ai-sdk.dev/docs/advanc...

![image](https://cdn.bsky.app/img/feed_thumbnail/plain/did:plc:zojotgllpw7iczompptlesx6/bafkreibi5mphndyuqw7fkhjzivsphwktsajpf3ng4sbqu5dc2c74f7pjyi@jpeg)

```ts
// ai@5.0.0-beta.24 - UIMessageStream abort handling
import { openai } from "@ai-sdk/openai";
import {
  consumeStream,
  convertToModelMessages,
  streamText,
  type UIMessage,
} from "ai";

export async function POST(req: Request) {
  const { messages }: { messages: UIMessage[] } = await req.json();

  const result = streamText({
    model: openai("gpt-4o"),
    messages: convertToModelMessages(messages),
    abortSignal: req.signal, // forward abort signal
  });

  return result.toUIMessageStreamResponse({
    onFinish: async ({ isAborted }) => {
      if (isAborted) {
        // Handle abort-specific cleanup
      } else {
        // Handle normal completion
      }
    },
    // ensure stream is consumed after client connection is lost
    consumeSseStream: consumeStream,
  });
}
```

Save partial results and handle cleanup properly when a UIMessageStream is aborted.
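As a rough illustration of the underlying pattern, the sketch below uses only the standard `AbortController` / `AbortSignal` API to stop a producer early while keeping whatever was already produced. The `generate` function and `Result` type are hypothetical and exist only for this example; they say nothing about how the AI SDK implements abort handling internally.

```typescript
// Conceptual sketch: hypothetical `generate` function, standard AbortSignal API.
type Result = { chunks: string[]; isAborted: boolean };

function generate(
  source: string[],
  signal: AbortSignal,
  onChunk?: (index: number) => void,
): Result {
  const chunks: string[] = [];
  for (let i = 0; i < source.length; i++) {
    // Check the signal before producing each chunk.
    if (signal.aborted) return { chunks, isAborted: true };
    chunks.push(source[i]);
    onChunk?.(i);
  }
  return { chunks, isAborted: false };
}

const controller = new AbortController();

const result = generate(["Hello", ", ", "world"], controller.signal, (index) => {
  // Simulate the client disconnecting after the second chunk.
  if (index === 1) controller.abort();
});

if (result.isAborted) {
  // Abort-specific cleanup: the partial output is still available to save.
  console.log("aborted, partial text:", result.chunks.join(""));
} else {
  console.log("completed:", result.chunks.join(""));
}
```

The point of the design is that the finish handler runs in both cases, so partial output can be persisted on abort instead of being lost.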

18.07.2025 16:55 — 👍 1 🔁 0 💬 1 📌 0

Documentation:

v5.ai-sdk.dev/docs/migrat...

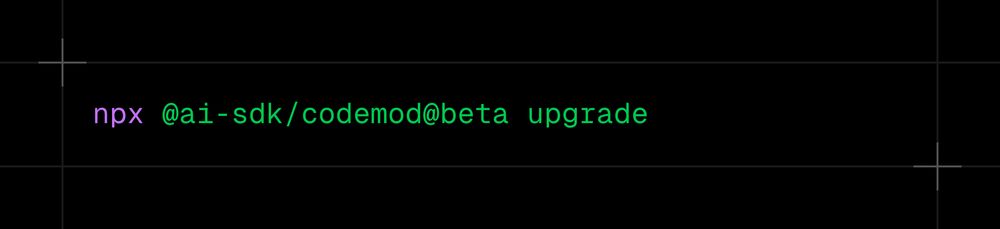

npx @ai-sdk/codemod@beta upgrade

Automatically migrate to AI SDK 5 with our initial set of codemods.

15.07.2025 08:45 — 👍 1 🔁 0 💬 1 📌 0

Documentation:

v5.ai-sdk.dev/providers/a...

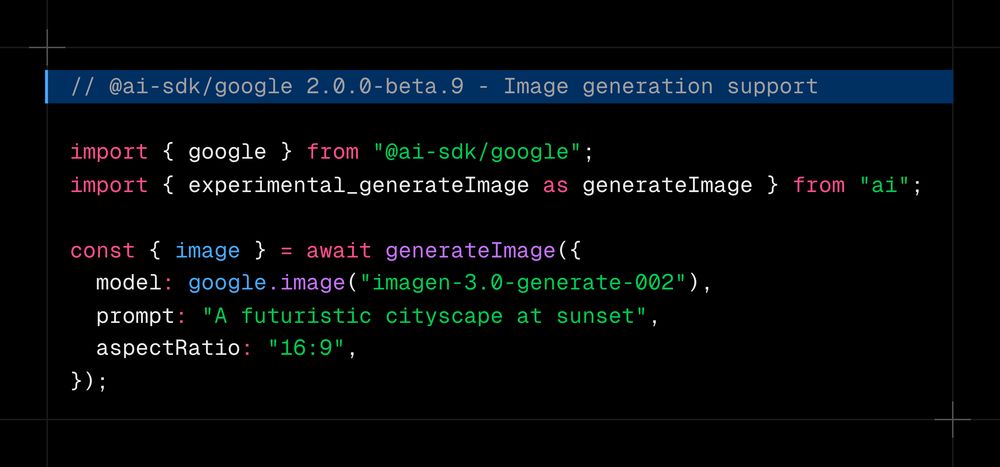

```ts
// @ai-sdk/google 2.0.0-beta.9 - Image generation support
import { google } from "@ai-sdk/google";
import { experimental_generateImage as generateImage } from "ai";

const { image } = await generateImage({
  model: google.image("imagen-3.0-generate-002"),
  prompt: "A futuristic cityscape at sunset",
  aspectRatio: "16:9",
});
```

Generate images with Google's Imagen 3.

14.07.2025 17:51 — 👍 2 🔁 0 💬 1 📌 0

Documentation:

v5.ai-sdk.dev/docs/ai-sdk...

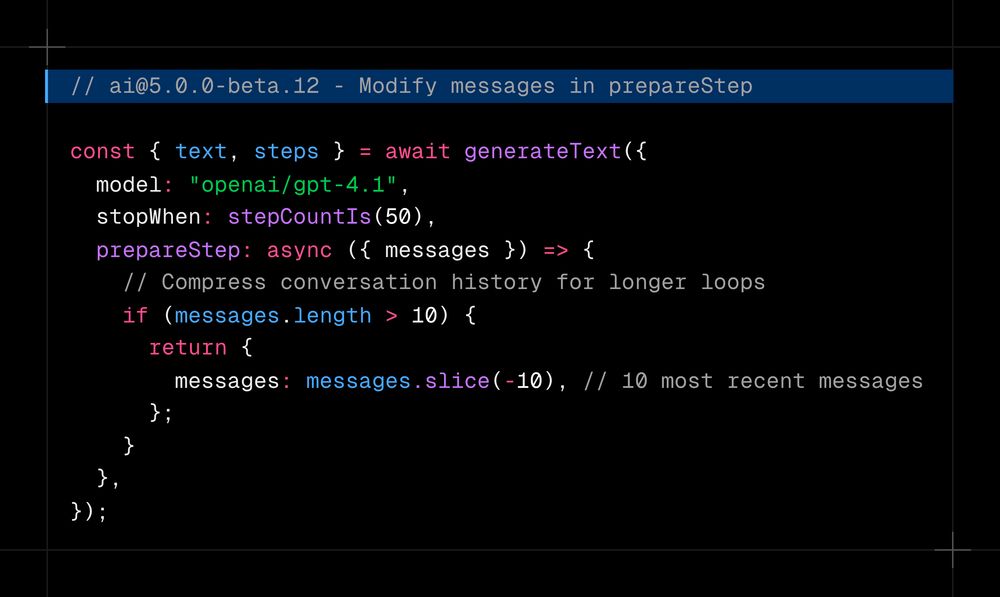

```ts
// ai@5.0.0-beta.12 - Modify messages in prepareStep
import { generateText, stepCountIs } from "ai";

// The prompt and tools are omitted in this snippet.
const { text, steps } = await generateText({
  model: "openai/gpt-4.1",
  stopWhen: stepCountIs(50),
  prepareStep: async ({ messages }) => {
    // Compress conversation history for longer loops
    if (messages.length > 10) {
      return {
        messages: messages.slice(-10), // 10 most recent messages
      };
    }
  },
});
```

You can now modify messages for each step.

For multi-step generations, this provides full control over your context.
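A plain `slice(-10)` drops everything older than the window, including any system message that happens to sit in the array. One possible variation, sketched below as a standalone helper, keeps system messages and trims only the rest. The `Message` type and `trimHistory` function are hypothetical and are not part of the AI SDK; whether a system message appears in the `messages` array at all depends on how the call is set up.

```typescript
// Conceptual sketch: hypothetical message type and helper, not an SDK API.
type Message = {
  role: "system" | "user" | "assistant" | "tool";
  content: string;
};

// Keep every system message plus the `keep` most recent other messages.
function trimHistory(messages: Message[], keep: number): Message[] {
  if (messages.length <= keep) return messages;
  const system = messages.filter((m) => m.role === "system");
  const rest = messages.filter((m) => m.role !== "system");
  // Math.max guards against a negative start index when `rest` is short.
  return [...system, ...rest.slice(Math.max(rest.length - keep, 0))];
}

const history: Message[] = [
  { role: "system", content: "You are a helpful assistant." },
  ...Array.from({ length: 12 }, (_, i): Message => ({
    role: i % 2 === 0 ? "user" : "assistant",
    content: `m${i}`,
  })),
];

const trimmed = trimHistory(history, 3);
console.log(trimmed.map((m) => m.content));
```

A helper like this could be called from inside a `prepareStep` callback in place of the bare `slice`, under the assumption that the message objects there carry a comparable `role` field.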

Documentation:

v5.ai-sdk.dev/docs/ai-sdk...

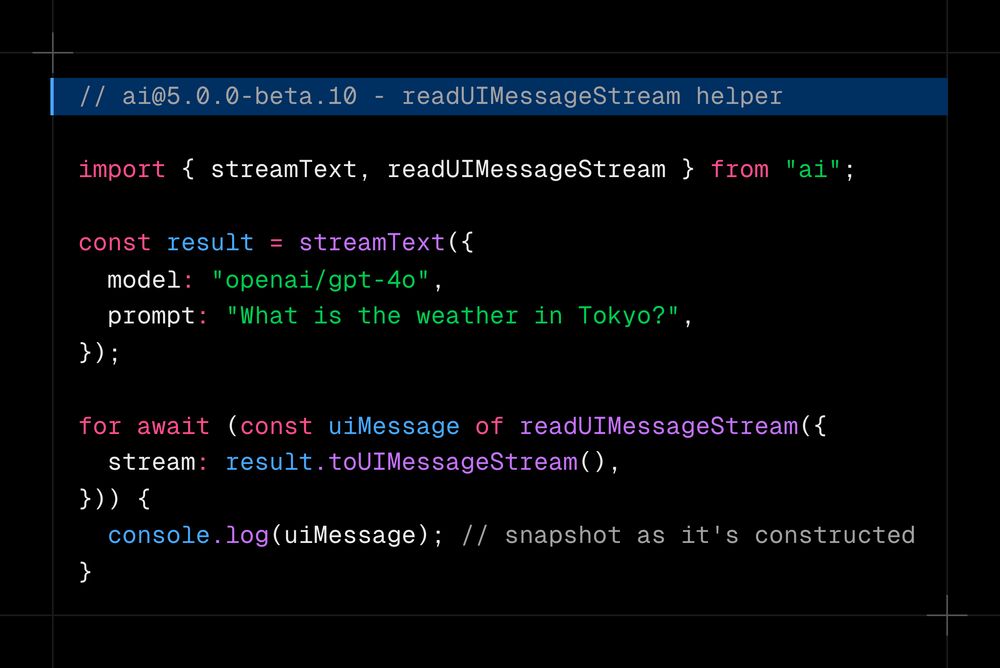

```ts
import { streamText, readUIMessageStream } from 'ai';

const result = streamText({
  model: 'openai/gpt-4o',
  prompt: 'What is the weather in Tokyo?',
});

const partialUIMessage = readUIMessageStream({
  stream: result.toUIMessageStream(),
});

for await (const uiMessageSnapshot of partialUIMessage) {
  console.log(uiMessageSnapshot);
}
```

Use the readUIMessageStream helper for custom stream processing.

08.07.2025 16:36 — 👍 0 🔁 0 💬 1 📌 0

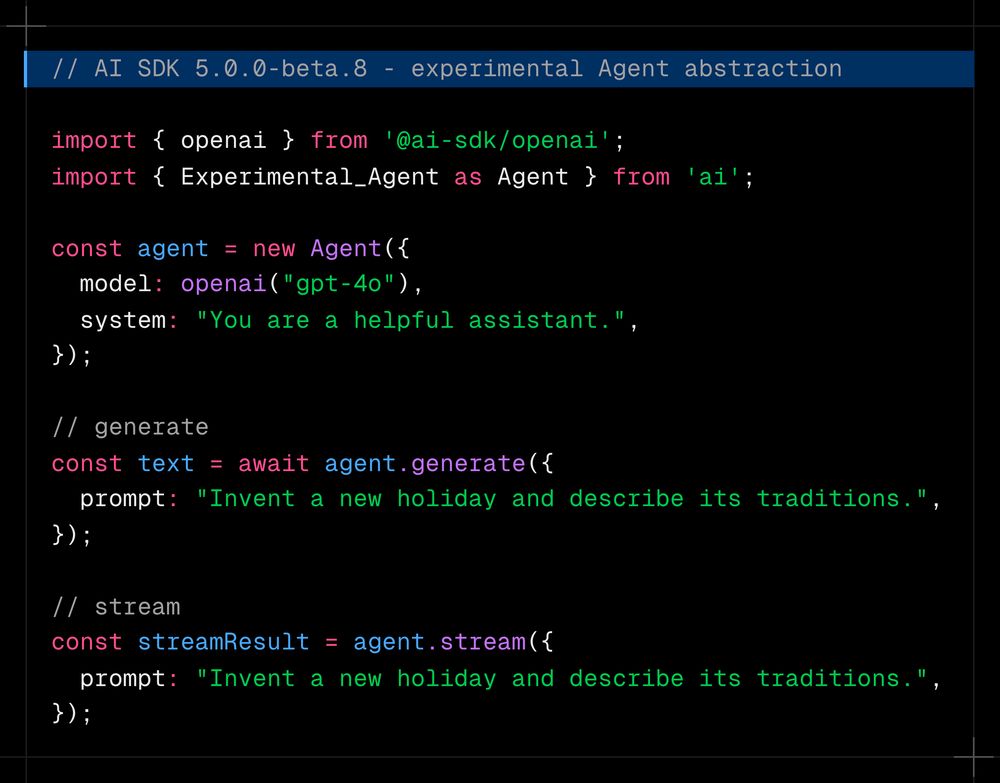

Build agents with the AI SDK.

07.07.2025 10:33 — 👍 2 🔁 0 💬 0 📌 0

Changelog:

chat-sdk.dev/docs/resour...

Chat SDK is now using AI SDK 5 Beta.

03.07.2025 20:30 — 👍 0 🔁 0 💬 1 📌 0

Guides:

v5.ai-sdk.dev/docs/gettin...

Our Next.js, Nuxt, and Svelte quickstart guides have been updated to AI SDK 5 Beta.

Get started today.

Beta announcement post:

v5.ai-sdk.dev/docs/announ...

The first set of bug fixes & improvements is now live for AI SDK 5 Beta.

We'd love to hear your feedback.

Get started now and please reach out if you have feedback.

v5.ai-sdk.dev/docs/announ...

![image](https://cdn.bsky.app/img/feed_thumbnail/plain/did:plc:zojotgllpw7iczompptlesx6/bafkreico4ppahiarqpf5cjlssznwutc3qra2feyv4mij7fzzm5bsvxd7f4@jpeg)

```ts
// AI SDK 5 beta.1 - agentic controls and typed chat messages
import { openai } from "@ai-sdk/openai";
import {
  convertToModelMessages,
  hasToolCall,
  stepCountIs,
  streamText,
} from "ai";

// `AudioPlayerMessage`, the four tools, and `hasToolCallInLastStep` are not
// defined in this snippet; they are assumed to be declared elsewhere.
export async function POST(req: Request) {
  const { messages }: { messages: AudioPlayerMessage[] } = await req.json();

  const result = streamText({
    model: openai("gpt-4o"),
    messages: convertToModelMessages(messages),
    tools: { getArtists, getAlbums, getSongs, playSong },
    prepareStep({ steps }) {
      if (hasToolCallInLastStep(steps, "getArtists")) {
        return {
          toolChoice: { type: "tool", toolName: "getAlbums" },
        };
      }
    },
    stopWhen: [stepCountIs(5), hasToolCall("playSong")],
  });

  return result.toUIMessageStreamResponse();
}
```

AI SDK 5 is now in Beta!

We redesigned the AI SDK to create a next-generation AI framework:

• Create type-safe, flexible chats with React, Svelte, Vue

• Build agents with prepareStep, stopWhen, and provider-executed tools

• Rely on an updated, robust language model spec