Feel free to reach out with questions, and thanks to my co-authors for their great work and the Brody Lab for their helpful conversations!

arxiv.org/abs/2309.06402 /end

Chris Versteeg

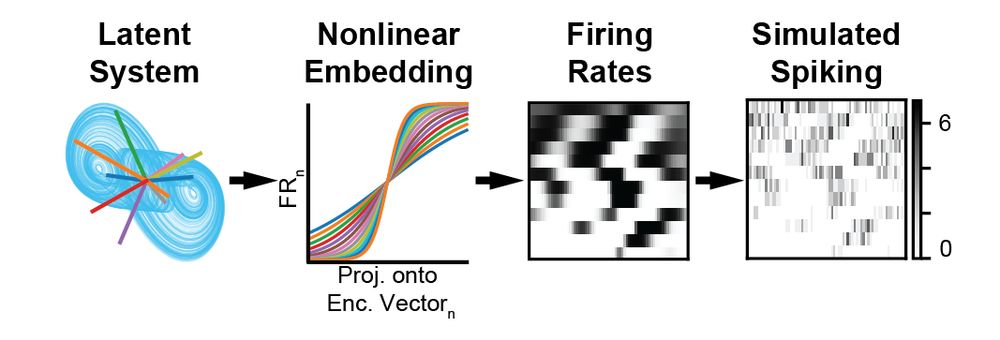

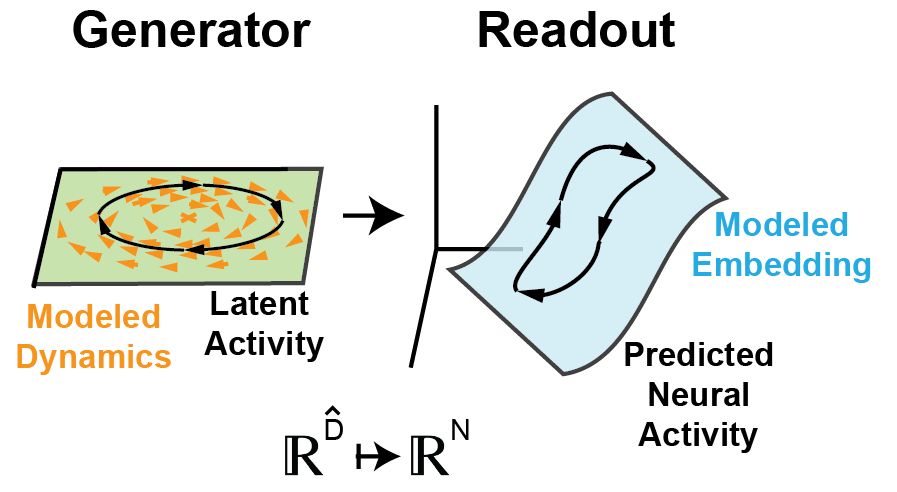

@cversteeg.bsky.social

In future work, we hope to decompose ODIN models fit to biological neural activity to uncover the computations performed by circuits in the brain! 14/

15.09.2023 17:59

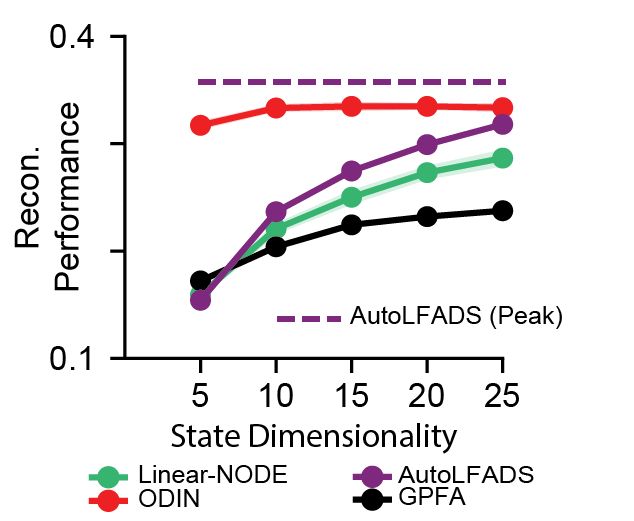

Applying ODIN to neural activity from the monkey motor cortex, we find that ODIN reconstructs held-out firing rates with high accuracy using only ~10 state dimensions, outperforming state-of-the-art models with more than twice ODIN’s dimensionality. 13/

15.09.2023 17:59

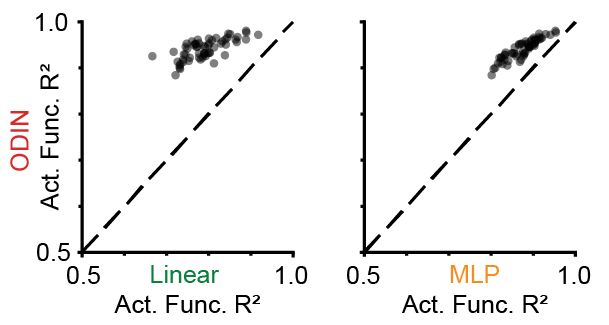

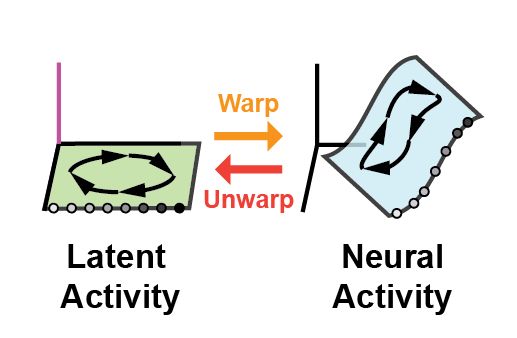

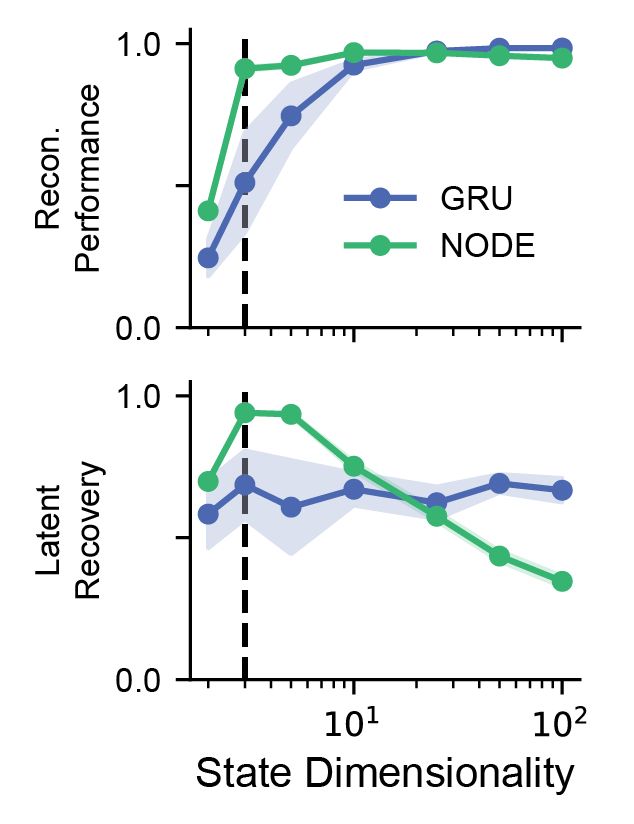

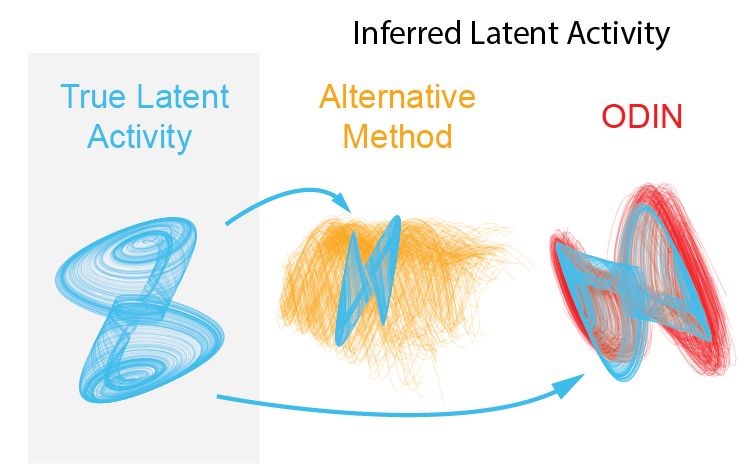

Additionally, ODIN recovers the nature of the simulated nonlinear embedding more accurately than the alternative readouts, suggesting that ODIN is well suited to model neural manifolds. 12/

15.09.2023 17:59

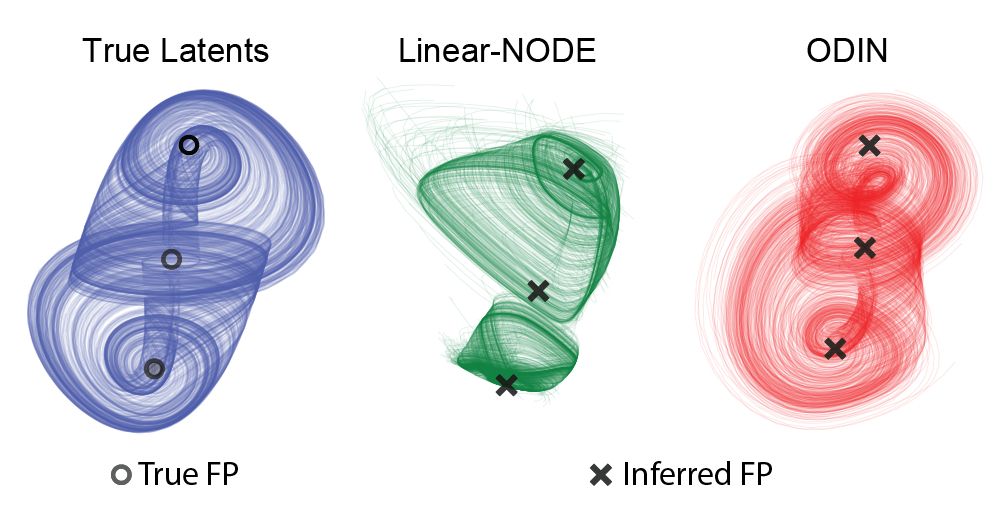

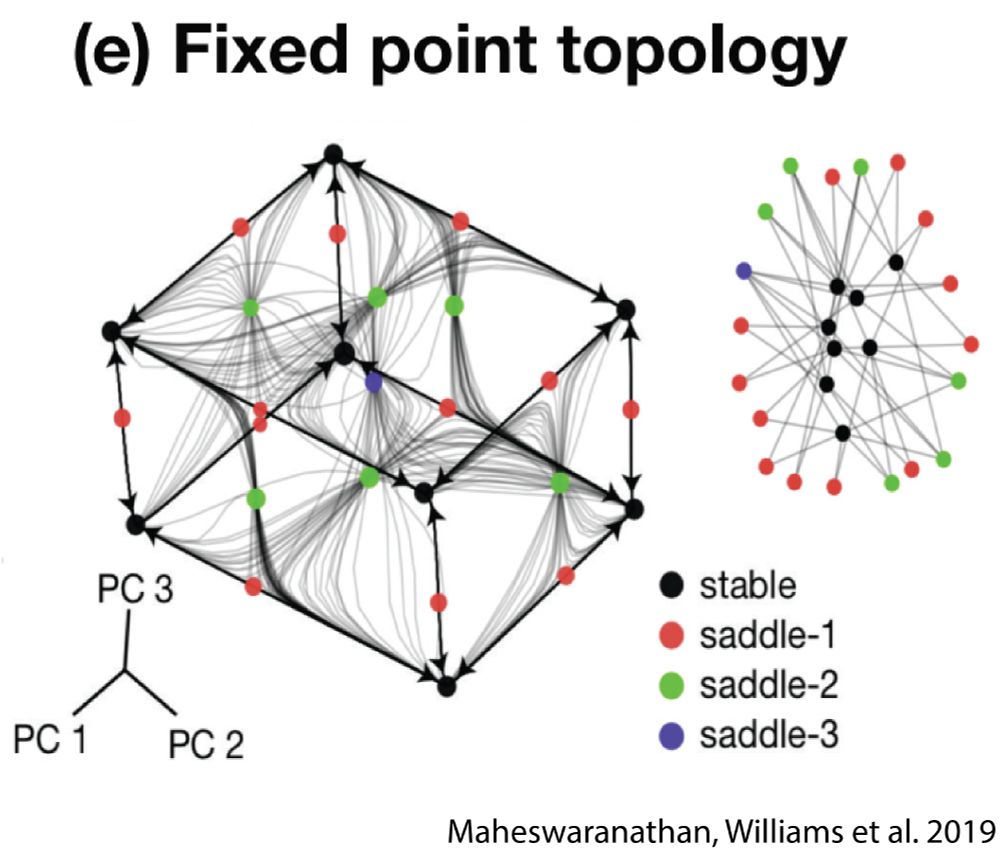

We also find that ODIN recovers fixed points more accurately than models that don’t account for embedding nonlinearities. 11/

15.09.2023 17:58

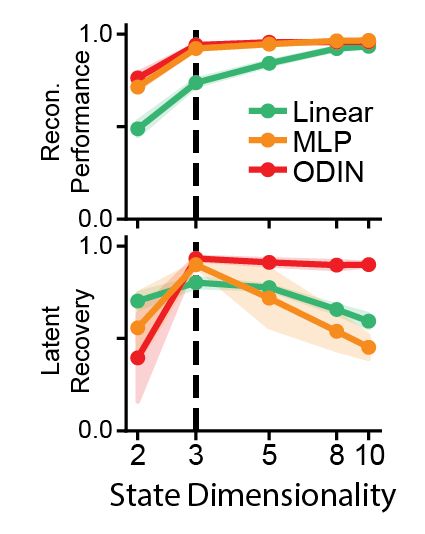

We find that models with Linear or MLP readouts fail to reconstruct neural activity or have poor latent recovery when state dimensionality is incorrectly chosen. In contrast, ODIN performs well at all relevant state dimensionalities. 10/

15.09.2023 17:57

To test how accurately ODIN recovers latent dynamics and their embedding, we simulated neural activity by nonlinearly embedding a low-dimensional dynamical system into a higher-dimensional neural space. 9/

15.09.2023 17:57
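A toy version of this kind of benchmark can be sketched in a few lines. Everything below is an illustrative assumption, not the simulation used in the paper: a Van der Pol oscillator stands in for the low-dimensional system, a random projection followed by a softplus stands in for the nonlinear embedding, and spike counts are Poisson samples of the resulting rates (expected counts per time bin).

```python
import math
import random

def van_der_pol_step(state, dt=0.05, mu=1.0):
    """One forward-Euler step of the Van der Pol oscillator, a 2-D limit cycle."""
    x, v = state
    return (x + dt * v, v + dt * (mu * (1.0 - x * x) * v - x))

def softplus(u):
    # Numerically stable log(1 + exp(u)).
    return max(u, 0.0) + math.log1p(math.exp(-abs(u)))

def sample_poisson(lam, rng):
    """Knuth's multiplication method; adequate for the modest rates used here."""
    threshold, k, p = math.exp(-lam), 0, 1.0
    while True:
        p *= rng.random()
        if p <= threshold:
            return k
        k += 1

def simulate(n_neurons=30, n_steps=500, seed=0):
    rng = random.Random(seed)
    # Hypothetical nonlinear embedding: random 2-D -> N-D projection, then softplus.
    W = [(rng.gauss(0, 1), rng.gauss(0, 1)) for _ in range(n_neurons)]
    b = [rng.gauss(-0.5, 0.5) for _ in range(n_neurons)]
    state = (1.0, 0.0)
    latents, rates, spikes = [], [], []
    for _ in range(n_steps):
        state = van_der_pol_step(state)
        r = [softplus(w0 * state[0] + w1 * state[1] + bi) for (w0, w1), bi in zip(W, b)]
        latents.append(state)
        rates.append(r)
        spikes.append([sample_poisson(ri, rng) for ri in r])
    return latents, rates, spikes

latents, rates, spikes = simulate()
```

Because the ground-truth `latents` and `rates` are kept alongside `spikes`, a model fit to the spikes alone can later be scored on how well it recovers both the latent dynamics and the embedding.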

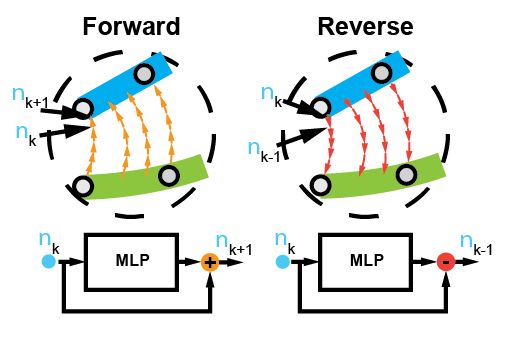

Our new readout, called Flow, is based on invertible ResNets. Flow models the embedding of latent activity into neural activity as a reversible dynamical system, imposing an inductive bias towards injectivity. 8/

15.09.2023 17:57
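The injectivity argument can be made concrete with a small sketch in the style of invertible ResNets (i-ResNets). Assumptions (ours, not necessarily the paper's exact architecture): the latent state is zero-padded to the neural dimensionality and passed through a stack of residual steps y = x + g(x), where g is kept contractive (Lipschitz constant below 1). Each such step is invertible and zero-padding is injective, so distinct latent states always map to distinct outputs. The names `ResidualStep` and `FlowReadout`, the tanh MLP, and the norm-based rescaling are hypothetical choices for illustration.

```python
import math
import random

def matvec(M, v):
    return [sum(m * vi for m, vi in zip(row, v)) for row in M]

def frobenius(M):
    return math.sqrt(sum(m * m for row in M for m in row))

class ResidualStep:
    """Invertible residual step y = x + g(x), with g(x) = W2 tanh(W1 x).

    W2 is rescaled so that ||W2||_F * ||W1||_F = lip. Since tanh is 1-Lipschitz and
    the Frobenius norm bounds the spectral norm, Lip(g) <= lip < 1.
    """

    def __init__(self, dim, hidden, rng, lip=0.5):
        self.W1 = [[rng.gauss(0, 1) for _ in range(dim)] for _ in range(hidden)]
        W2 = [[rng.gauss(0, 1) for _ in range(hidden)] for _ in range(dim)]
        scale = lip / (frobenius(self.W1) * frobenius(W2))
        self.W2 = [[w * scale for w in row] for row in W2]

    def g(self, x):
        return matvec(self.W2, [math.tanh(u) for u in matvec(self.W1, x)])

    def forward(self, x):
        return [xi + gi for xi, gi in zip(x, self.g(x))]

    def inverse(self, y, iters=100):
        # Banach fixed-point iteration x <- y - g(x); converges because g is a contraction.
        x = list(y)
        for _ in range(iters):
            x = [yi - gi for yi, gi in zip(y, self.g(x))]
        return x

class FlowReadout:
    """Hypothetical Flow-style readout: zero-pad the latent state to the neural
    dimensionality, then apply a stack of invertible residual steps."""

    def __init__(self, latent_dim, neural_dim, num_steps=4, hidden=16, seed=0):
        rng = random.Random(seed)
        self.latent_dim, self.neural_dim = latent_dim, neural_dim
        self.steps = [ResidualStep(neural_dim, hidden, rng) for _ in range(num_steps)]

    def forward(self, z):
        x = list(z) + [0.0] * (self.neural_dim - self.latent_dim)
        for step in self.steps:
            x = step.forward(x)
        return x

    def reverse(self, y):
        x = list(y)
        for step in reversed(self.steps):
            x = step.inverse(x)
        return x[: self.latent_dim]

readout = FlowReadout(latent_dim=3, neural_dim=20)
z = [0.5, -1.0, 0.25]
y = readout.forward(z)       # 20-D "neural" vector
z_hat = readout.reverse(y)   # recovers z up to floating-point error
```

Bounding Lip(g) with Frobenius norms is cruder than the spectral normalization typically used for i-ResNets, but it is enough to guarantee invertibility in a stdlib-only sketch.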

ODIN’s primary innovation is its injective nonlinear readout, which forces all latent activity to affect the neural reconstruction. This penalizes superfluous dynamical features (any extra dynamics would distort the reconstruction), while the readout’s nonlinearity allows ODIN to capture nonlinear embeddings (i.e., neural manifolds). 7/

15.09.2023 17:56

To fix these problems, we developed a new model called ODIN (Ordinary Differential equations autoencoder with Injective Nonlinear readout). 6/

tenor.com/bPbUv.gif

Our previous work (led by @arsedle) has shown that neural ODE-based architectures can recover the latent space better than RNNs. Unfortunately, we also found that higher-dimensional models of all types tend to sacrifice latent recovery for reconstruction performance! 5/

15.09.2023 17:54

If we can gain confidence that data-trained models accurately capture the features of the neural circuit, we could trust that dynamical analyses applied to data-trained models will reveal the computational mechanisms of the brain! 4/

15.09.2023 17:54

In contrast to task-trained models, “data-trained” (e.g., LFADS-like) models learn to approximate a latent dynamical system (the “generator”) and an embedding of those dynamics into neural space (the “readout”) that reconstructs observed spiking data. 3/

15.09.2023 17:54
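The generator/readout split can be illustrated with a minimal forward pass and training objective. This is a hypothetical sketch, not LFADS or ODIN: the generator is a simple Euler-integrated ODE dz/dt = tanh(Az) - z, the readout is a linear map to log firing rates, and the loss is the Poisson negative log-likelihood of the observed spike counts. Real data-trained models add an encoder for the initial state, variational terms, and gradient-based training, all omitted here.

```python
import math

def matvec(M, v):
    return [sum(m * vi for m, vi in zip(row, v)) for row in M]

def generator_step(z, A, dt=0.1):
    """Hypothetical generator: one Euler step of dz/dt = tanh(A z) - z."""
    return [zi + dt * (math.tanh(u) - zi) for zi, u in zip(z, matvec(A, z))]

def readout(z, C, b):
    """Hypothetical readout: latent state -> log firing rates.
    Linear here for brevity; ODIN's readout is instead an injective nonlinear map."""
    return [u + bi for u, bi in zip(matvec(C, z), b)]

def poisson_nll(log_rates, counts):
    """Poisson negative log-likelihood of spike counts, dropping the constant log(k!)."""
    return sum(math.exp(lr) - k * lr for lr, k in zip(log_rates, counts))

def reconstruction_loss(z0, A, C, b, spikes):
    """Unroll the generator from z0 and score every time bin of the observed spikes."""
    z, loss = list(z0), 0.0
    for counts in spikes:
        z = generator_step(z, A)
        loss += poisson_nll(readout(z, C, b), counts)
    return loss

A = [[1.5, -0.5], [0.5, 1.5]]   # latent dynamics parameters (2-D latent)
C = [[0.0, 0.0]] * 3            # readout weights (3 neurons)
b = [0.0] * 3                   # readout biases
observed = [[0, 0, 0]] * 5      # 5 time bins of spike counts
loss = reconstruction_loss([0.1, -0.2], A, C, b, observed)
```

Training would adjust `A`, `C`, `b` (and the initial state) to minimize this loss; nothing in the objective itself checks whether the learned generator matches the true circuit, which is why the readout's inductive biases matter.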

Recent work has demonstrated that task-trained RNNs learn to perform computation via dynamical features (e.g., fixed points) that can provide an intuitive understanding of their underlying computational mechanisms. 2/

15.09.2023 17:53
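Fixed points like these are commonly located numerically, e.g., with the approach of Sussillo & Barak (2013): minimize the "speed" q(x) = ½‖f(x)‖² from many initial conditions, then classify each minimum by the eigenvalues of the Jacobian. The sketch below applies this to a hypothetical 2-D system dx/dt = x - x³, dy/dt = -y (not a trained RNN), chosen because its fixed points are known in advance: stable ones at (±1, 0) and a saddle at (0, 0). Step size, iteration count, and initial conditions are illustrative assumptions.

```python
import cmath

def f(p):
    """Hypothetical 2-D system: dx/dt = x - x^3, dy/dt = -y."""
    x, y = p
    return (x - x**3, -y)

def jacobian(p):
    """Rows are the partial derivatives of each component of f."""
    x, _ = p
    return ((1.0 - 3.0 * x**2, 0.0), (0.0, -1.0))

def find_fixed_point(p, lr=0.02, iters=5000):
    """Gradient descent on the speed q(p) = 0.5 * |f(p)|^2; grad q = J^T f."""
    x, y = p
    for _ in range(iters):
        fx, fy = f((x, y))
        (a, b), (c, d) = jacobian((x, y))
        x, y = x - lr * (a * fx + c * fy), y - lr * (b * fx + d * fy)
    return x, y

def classify(p):
    """Stable if both Jacobian eigenvalues have negative real part."""
    (a, b), (c, d) = jacobian(p)
    tr, det = a + d, a * d - b * c
    disc = cmath.sqrt(tr * tr / 4 - det)
    eigenvalues = (tr / 2 + disc, tr / 2 - disc)
    return "stable" if all(e.real < 0 for e in eigenvalues) else "unstable"

found = {}
for x0 in (-1.4, -0.2, 0.2, 1.4):
    for y0 in (-1.0, 1.0):
        p = find_fixed_point((x0, y0))
        key = (round(p[0], 3) + 0.0, round(p[1], 3) + 0.0)  # "+ 0.0" turns -0.0 into 0.0
        found[key] = classify(p)
print(found)
```

In a high-dimensional RNN the same search can also stop at nonzero local minima of q ("slow points"), and the saddle here is simply reported as "unstable"; a finer analysis would separate saddles from repellers.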

Ever wondered if the dynamics learned by LFADS-like models could help us understand neural computation? @chethan, @arsedle, @JonathanDMcCart, and I developed ODIN to robustly recover latent dynamical features through the power of injectivity! arxiv.org/abs/2309.06402 1/

15.09.2023 17:53