OSF

OSF with all stimuli, data, & code as well as detailed supplementary information: osf.io/2asgw/overview. Linked GitHub repo: github.com/hlplab/Causa...

12.12.2025 17:51

APA PsycNet

Congrats to @brainnotonyet.bsky.social alumni Shawn Cummings, @gekagrob.bsky.social & Menghan Yan. Out in JEP:LMC @apajournals.bsky.social: listeners compensate their perception of spectral (acoustic) cues for the visually evident consequences of a pen in the speaker's mouth! dx.doi.org/10.1037/xlm0...

12.12.2025 17:46

How AI Hears Accents

Very cool new accent-relatedness visualization, examples, and some insightful observations: accent-explorer.boldvoice.com

17.10.2025 20:32

Looking for researchers in computational neuroscience and cognition (incl. language, learning, development, decision-making) to join our faculty!

01.10.2025 19:58

Review starts 11/1: Asst. prof. (tenure track), human cognition, Brain and CogSci, U Rochester www.sas.rochester.edu/bcs/jobs/fac...

12.09.2024 22:03

GitHub - santiagobarreda/STM: The 'STM' (Sliding Template Model) R Package

New R library STM github.com/santiagobarr... by Santiago Barreda that implements Nearey & Assmann's PST model of vowel perception, and a fully Bayesian extension (the BSTM). Easy to use and to apply to your data. It's also what we used in our recent paper www.degruyterbrill.com/document/doi...

30.09.2025 20:50

OSF

As we write, Nearey & Assmann's PSTM presents a "groundbreaking idea [...], with far-reaching consequences for research from typology to sociolinguistics to speech perception … and few seem to know of it." We hope this paper can help change that! OSF osf.io/tpwmv/ 3/3

30.09.2025 20:46

Experimental Approaches to Phonology

This wide-ranging survey of experimental methods in phonetics and phonology shows the insights and results provided by different methods of investigation, including laboratory-based, statistical, psyc...

Nearey & Assmann's PSTM (2007, www.google.com/books/editio...) remains the only fully incremental model of formant normalization, conducting joint inference over both the talker's normalization parameters (*who*'s talking) and the vowel category (*what* they are saying). 2/3

30.09.2025 20:42
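The joint *who*/*what* inference described above can be illustrated with a small grid-based sketch. It assumes, for illustration only, that a talker's log formants equal a reference vowel template shifted by a single talker parameter ψ (uniform scaling), that log-formant noise is Gaussian with one shared SD, and that beliefs about ψ are carried forward from token to token. The templates, SDs, prior, and function names are hypothetical; this is neither the API of the STM package nor a reproduction of Nearey & Assmann's published model.

```python
import math

# Hypothetical reference-talker templates: mean (log F1, log F2) per vowel.
TEMPLATES = {
    "i": (math.log(300.0), math.log(2300.0)),
    "a": (math.log(750.0), math.log(1250.0)),
    "u": (math.log(320.0), math.log(900.0)),
}
SD = 0.08      # assumed within-category SD of log formants (shared, independent)
PSI_SD = 0.15  # assumed SD of the Gaussian prior over the talker shift psi
PSI_GRID = [i / 200 for i in range(-80, 81)]  # psi from -0.4 to 0.4 in steps of 0.005


def log_normal(x, mean, sd):
    return -0.5 * ((x - mean) / sd) ** 2 - math.log(sd * math.sqrt(2 * math.pi))


def log_sum_exp(values):
    m = max(values)
    return m + math.log(sum(math.exp(v - m) for v in values))


def perceive(tokens_hz):
    """Categorize (F1, F2) tokens one at a time, carrying beliefs about psi forward.

    Returns the vowel posterior for each token and the final posterior mean of psi."""
    vowels = list(TEMPLATES)
    n_psi = len(PSI_GRID)
    log_prior_v = math.log(1 / len(vowels))  # assumed uniform prior over vowels
    prior = [log_normal(p, 0.0, PSI_SD) for p in PSI_GRID]
    z = log_sum_exp(prior)
    log_post_psi = [lp - z for lp in prior]
    vowel_posteriors = []
    for f1, f2 in tokens_hz:
        g = (math.log(f1), math.log(f2))
        # Joint log posterior over (vowel, psi): each template slides by psi in log space.
        joint = {
            (v, k): log_prior_v
            + log_post_psi[k]
            + sum(log_normal(g[d], TEMPLATES[v][d] + psi, SD) for d in range(2))
            for v in vowels
            for k, psi in enumerate(PSI_GRID)
        }
        z = log_sum_exp(list(joint.values()))
        # "What": marginalize over psi to get this token's vowel posterior.
        vowel_posteriors.append({
            v: math.exp(log_sum_exp([joint[(v, k)] for k in range(n_psi)]) - z)
            for v in vowels
        })
        # "Who": marginalize over vowels to update beliefs about the talker.
        log_post_psi = [
            log_sum_exp([joint[(v, k)] for v in vowels]) - z for k in range(n_psi)
        ]
    psi_mean = sum(p * math.exp(lp) for p, lp in zip(PSI_GRID, log_post_psi))
    return vowel_posteriors, psi_mean


# A hypothetical talker whose formants are all 20% higher than the reference.
posteriors, psi_hat = perceive([(360, 2760), (900, 1500), (384, 1080)])
```

Here the three tokens are categorized as /i/, /a/, /u/ while the estimate of ψ moves toward log(1.2) ≈ 0.18, shrunk slightly toward 0 by the prior. A grid is used instead of sampling only to keep the sketch short and dependency-free.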

DL captures human speech perception both *qualitatively* & *quantitatively* (R² > 96%) for over 400 combinations of exposure and test items. Yet previous DL models fail to capture important limitations. Specifically, we find that DL seems to proceed by remixing previous experience 2/2

30.09.2025 20:23

Very excited about this: putting distributional learning (DL) models of adaptive speech perception to a strong, informative test sciencedirect.com/science/arti... by Maryann Tan. We use Bayesian ideal observers & adapters to assess whether DL predicts rapid changes in speech perception 1/2

30.09.2025 20:23
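As a rough illustration of what a Bayesian ideal observer and ideal adapter compute, here is a minimal sketch. It assumes two Gaussian categories on a single cue (e.g., VOT in ms), a known within-category SD, and a conjugate normal update of each category mean from labeled exposure tokens, with prior strength expressed as `kappa` pseudo-observations. All parameter values and the names `Category`, `p_category`, and `boundary` are hypothetical; the models in the paper may parameterize prior beliefs and learning differently.

```python
import math


def normal_pdf(x, mean, sd):
    return math.exp(-0.5 * ((x - mean) / sd) ** 2) / (sd * math.sqrt(2 * math.pi))


class Category:
    """Gaussian category with a known SD and a normal prior over its mean.

    The prior's strength is expressed as kappa pseudo-observations
    (normal-normal conjugate model with known variance)."""

    def __init__(self, mean, sd, kappa):
        self.mean, self.sd, self.kappa = mean, sd, kappa

    def update(self, xs):
        """Ideal-adapter step: posterior mean after observing labeled tokens xs."""
        n = len(xs)
        if n:
            self.mean = (self.kappa * self.mean + sum(xs)) / (self.kappa + n)
            self.kappa += n

    def predictive_pdf(self, x):
        """Posterior predictive density; remaining uncertainty about the mean widens it."""
        return normal_pdf(x, self.mean, self.sd * math.sqrt(1 + 1 / self.kappa))


def p_category(x, cats, priors):
    """Ideal observer: P(c | x) is proportional to p(x | c) * P(c)."""
    scores = {c: cats[c].predictive_pdf(x) * priors[c] for c in cats}
    total = sum(scores.values())
    return {c: s / total for c, s in scores.items()}


def boundary(cats, priors, low_cat, lo=-50.0, hi=150.0, steps=60):
    """Cue value where P(low_cat | x) = 0.5, found by bisection.

    Assumes low_cat is more probable at lo, less probable at hi,
    and that there is a single crossing in between."""
    for _ in range(steps):
        mid = (lo + hi) / 2
        if p_category(mid, cats, priors)[low_cat] > 0.5:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


# Hypothetical /b/-/p/ categories on VOT (ms), before and after exposure.
cats = {"b": Category(0.0, 10.0, kappa=20), "p": Category(50.0, 10.0, kappa=20)}
priors = {"b": 0.5, "p": 0.5}
before = boundary(cats, priors, "b")
cats["p"].update([70.0] * 20)  # exposure: 20 /p/ tokens with unusually long VOT
after = boundary(cats, priors, "b")
```

With these made-up values the boundary starts at 25 ms; after exposure the /p/ mean moves to 60 ms and the boundary to roughly 30 ms. The size of `kappa` controls how quickly categorization changes, which is the kind of prediction about rapid adaptation that can be compared with listeners' responses.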

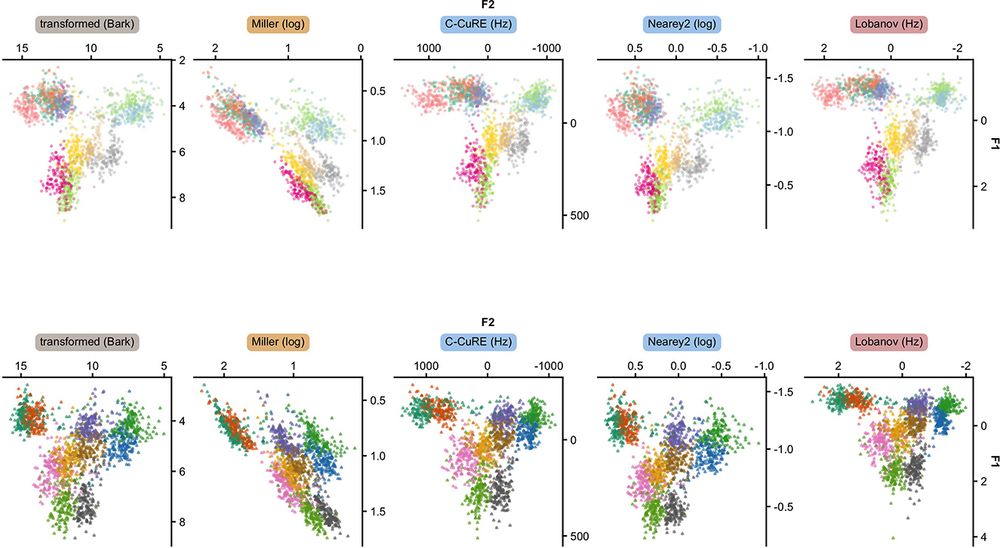

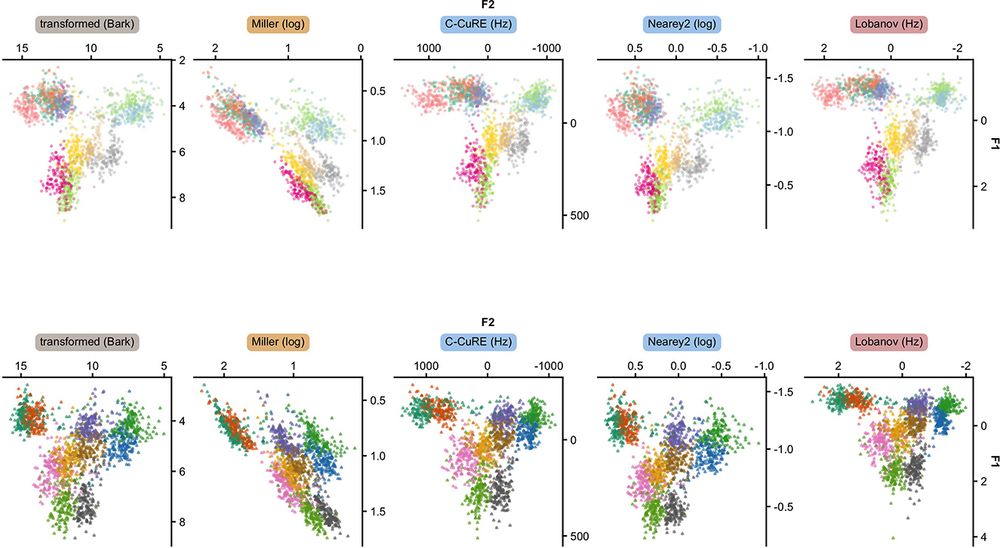

This has been a really eye-opening collaboration that made me realize how little I knew about the auditory system, the normalization of spectral information, & the consequences of making problematic assumptions about the perceptual basis of speech perception when building (psycho)linguistic models!

25.02.2025 15:04

Excited to see this out in JASA @asa-news.bsky.social: doi.org/10.1121/10.0.... It provides a large-scale evaluation of formant normalization accounts as a model of vowel perception. @uor-braincogsci.bsky.social

25.02.2025 15:02
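For readers less familiar with formant normalization, two classic accounts can be sketched as functions from one talker's raw formants to normalized values: Lobanov z-scoring per formant, and uniform log-mean normalization after Nearey (subtracting the talker's grand mean of log formants). This only illustrates what such accounts compute; the set of accounts and the evaluation procedure in the paper are not shown, and the token values are hypothetical.

```python
import math
import statistics


def lobanov(tokens):
    """Lobanov normalization: z-score each formant within one talker's tokens."""
    columns = list(zip(*tokens))
    stats = [(statistics.mean(col), statistics.stdev(col)) for col in columns]
    return [tuple((f - m) / s for f, (m, s) in zip(tok, stats)) for tok in tokens]


def log_mean(tokens):
    """Uniform log-mean normalization (after Nearey): subtract the talker's
    grand mean of log formants, pooled over all formants."""
    grand = statistics.mean(math.log(f) for tok in tokens for f in tok)
    return [tuple(math.log(f) - grand for f in tok) for tok in tokens]


# Hypothetical (F1, F2) tokens in Hz; talker B is talker A scaled up by 20%.
talker_a = [(300.0, 2300.0), (750.0, 1250.0), (320.0, 900.0)]
talker_b = [(1.2 * f1, 1.2 * f2) for f1, f2 in talker_a]
```

Both functions map the two talkers onto the same normalized values. They diverge when between-talker differences are not a single shared scale factor: Lobanov z-scoring also removes separate shifts and scale differences per formant, whereas uniform log-mean normalization removes only a common multiplicative factor.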

Together w/ @wbushong.bsky.social's recent paper bsky.app/profile/wbus..., this lays out the road ahead for careful research on information maintenance during speech perception. The discussion in Wednesday's paper identifies strong assumptions made in this line of work that might not be warranted.

25.02.2025 14:47

By comparing against ideal observer baselines, we identify a reliable, previously unrecognized pattern in listeners' responses that is unexpected under any existing theory. We present simulations that suggest that this pattern can emerge under ideal information maintenance w/ attentional lapses. 3/n

25.02.2025 14:38
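A lapse rate is a common way to add attentional lapses to an ideal-observer model of categorization responses. The sketch below shows only that generic response-level component: with probability `lapse_rate` the cue is assumed to be unavailable and the response follows a fixed bias; otherwise it matches the ideal observer's posterior. How the paper's simulations combine lapses with information maintenance is not described here, and the category parameters and function names are hypothetical.

```python
import math


def ideal_observer(x, means, sd, priors):
    """P(c | x) for Gaussian categories that share one SD.

    With a shared SD the normalizing constants cancel, so only the squared
    distance to each mean and the prior matter."""
    logs = {c: -0.5 * ((x - m) / sd) ** 2 + math.log(priors[c]) for c, m in means.items()}
    top = max(logs.values())
    weights = {c: math.exp(v - top) for c, v in logs.items()}
    total = sum(weights.values())
    return {c: w / total for c, w in weights.items()}


def response_probs(x, means, sd, priors, lapse_rate, bias):
    """Ideal observer plus attentional lapses.

    With probability lapse_rate the response ignores the cue and follows bias;
    otherwise the response probability matches the posterior."""
    post = ideal_observer(x, means, sd, priors)
    return {c: lapse_rate * bias[c] + (1 - lapse_rate) * post[c] for c in post}


means = {"b": 0.0, "p": 50.0}  # hypothetical category means on a single cue
even = {"b": 0.5, "p": 0.5}
for cue in (-150.0, 25.0, 200.0):
    print(cue, round(response_probs(cue, means, 10.0, even, 0.1, even)["p"], 3))
```

With a lapse rate of 0.1 and unbiased guessing, "p" responses level off near 0.05 and 0.95 rather than 0 and 1, which is the signature a lapse term adds to otherwise ideal categorization curves.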

@uor-braincogsci.bsky.social

25.02.2025 14:24

We also revisit long-held assumptions about how we study the maintenance of perceptual information during spoken language understanding. We discuss why most evidence for such maintenance is actually compatible with simpler explanations. 2/2

18.02.2025 15:35

New work by @wbushong.bsky.social out in JEP:LMC: listeners might strategically moderate maintenance of perceptual information during spoken language understanding based on the expected informativity of subsequent context. 1/2

18.02.2025 15:35

CRC Prominence in Language

SFB-funded Collaborative Research Centre “Prominence in Language” at U Cologne, Germany offers junior & senior research fellowships for 1-6 months between April and December 2025 (1800-2500 Euro/month): sfb1252.uni-koeln.de/en/ (20 projects in prosody, morphosyntax & semantics, text & discourse structure)

16.12.2024 15:41

Select Institute for Collaborative Innovation as your application unit. Apply by 1/28/25 career.admo.um.edu.mo

16.12.2024 15:35

Join Andriy Myachykov in Macau =): U Macau invites applications for research assistant professor & postdoctoral fellow positions under the UM Talent Programme, which aims to attract high-calibre talent, including in neuroscience & cognitive science at the UM Center for Cognitive & Brain Sciences (ccbs.ici.um.edu.mo).

16.12.2024 15:35

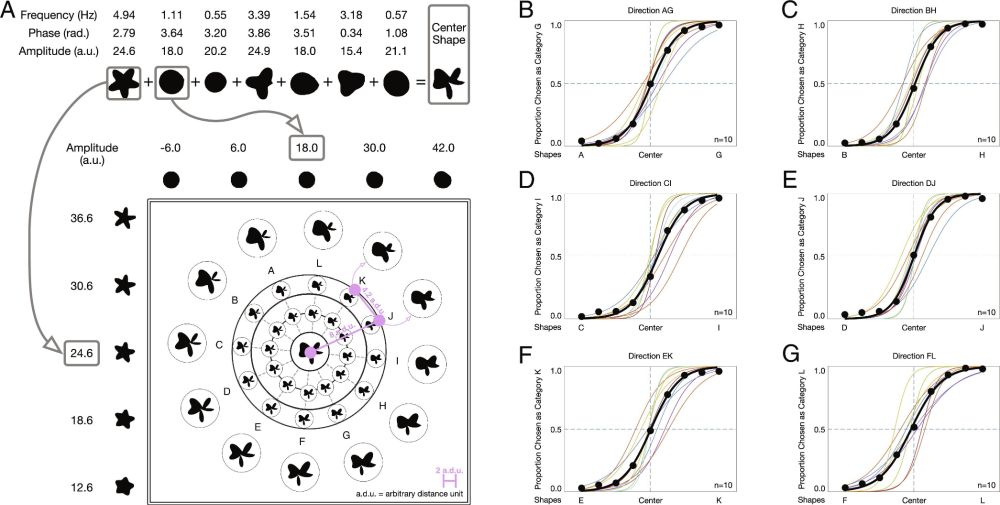

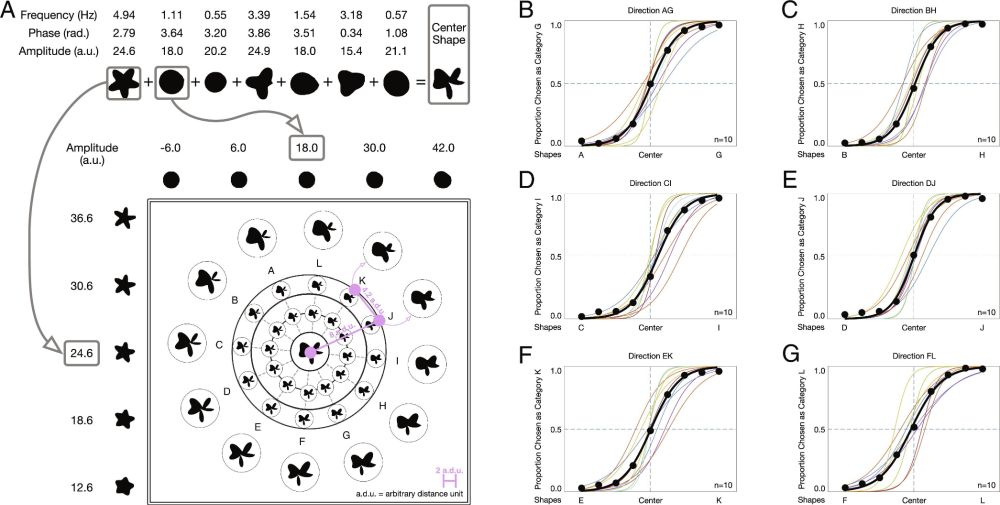

Sculpting new visual categories into the human brain | PNAS

Learning requires changing the brain. This typically occurs through experience, study, or instruction. We report an alternate route for humans to a...

New paper story time (now out in PNAS)! We developed a method that caused people to learn new categories of visual objects, not by teaching them what the categories were, but by changing how their brains worked when they looked at individual objects in those categories.

www.pnas.org/doi/10.1073/...

04.12.2024 19:59

Link to whole thesis www.diva-portal.org/smash/record...

07.12.2024 16:58

Article 2 in Frontiers develops a general computational framework to evaluate accounts of formant normalization, and the predictions they make for perception www.frontiersin.org/journals/psy.... The article also discusses under-appreciated shortcomings of popular approaches to such comparisons.

07.12.2024 16:55

Exploring the science of mind, brain, & behavior at the University of Rochester @urochester.bsky.social | Research in #BrainScience #CognitiveScience, #Neuroscience, #Psychology, & #AI | #AcademicBluesky

Professor, psycholinguist

PI of the Language & Cognition Lab at Wellesley College. Assistant Professor of Cognitive & Linguistic Sciences and Psychology

Writer and language scientist, author of LINGUAPHILE: A LIFE OF LANGUAGE LOVE and MEMORY SPEAKS: ON LOSING AND RECLAIMING LANGUAGE AND SELF.

Cutting-edge research, news, commentary, and visuals from the Science family of journals. https://www.science.org

Research, news, and commentary from Nature, the international science journal. For daily science news, get Nature Briefing: https://go.nature.com/get-Nature-Briefing

Our mission is to increase openness, integrity, and trustworthiness of research.

Cognitive scientist / Linguist - Full Professor at University of Oslo

Director of @manylanguagesc.bsky.social, PI of ICONIC (https://www.hf.uio.no/iln/english/research/projects/iconic/)

#MetaScience #OpenScience

#DataViz #PresentationDesign #SciComm

ManyLanguages is a globally distributed network of language science laboratories that coordinates data collection for democratically selected studies.

http://many-languages.com

account managed by @congzhang.bsky.social and @timoroettger.bsky.social

Platinum open access journal == No fees for readers or authors. https://ldr.lps.library.cmu.edu

Cognitive scientist at Stanford. Open science advocate. Symbolic Systems Program director. Bluegrass picker, slow runner, dad. http://langcog.stanford.edu

Professor of Natural and Artificial Intelligence @Stanford. Safety and alignment @GoogleDeepMind.

Director, MIT Computational Psycholinguistics Lab. President, Cognitive Science Society. Chair of the MIT Faculty. Open access & open science advocate. He.

Lab webpage: http://cpl.mit.edu/

Personal webpage: https://www.mit.edu/~rplevy

NYU professor, Google research scientist. Good at LaTeX.

Math + coding educator in NYC. Used to be university prof doing neuroscience, far happier in math ed. I enjoy making math games, in Desmos and also p5play. rajeevraizada.github.io

-Assistant Professor @URochester studying naturalistic, interactive human communication and speech/music perception 🧠

-PI of the SoNIC Lab (piazzalab.com)

-BA @Williams | PhD @UCBerkeley | Postdoc @Princeton

-Halfling bard irl 🎶

-she/her 🌈

Assistant Professor @ University of Rochester ◆ narratives, episodic memory, naturalistic cognition, neurofeedback ◆ natcoglab.org ◆ mom ◆ moon elf warlock ◆ 🌈 she/her

Professor of Brain and Cognitive Sciences, Uni Rochester, NY 🐦🐟🐵 Animal cognition, collective behaviour, navigation, tool use, social learning, culture