New work led by @aaditya6284.bsky.social

"Strategy coopetition explains the emergence and transience of in-context learning in transformers."

We find some surprising things!! E.g. that circuits can simultaneously compete AND cooperate ("coopetition") 😯 🧵👇

11.03.2025 18:18 — 👍 9 🔁 4 💬 1 📌 0

We hope our work deepens the dynamical understanding of how transformers learn, here applied to the emergence and transience of ICL. We're excited to see where else coopetition pops up, and more generally how different strategies influence each other through training. (10/11)

11.03.2025 07:13 — 👍 1 🔁 0 💬 1 📌 0

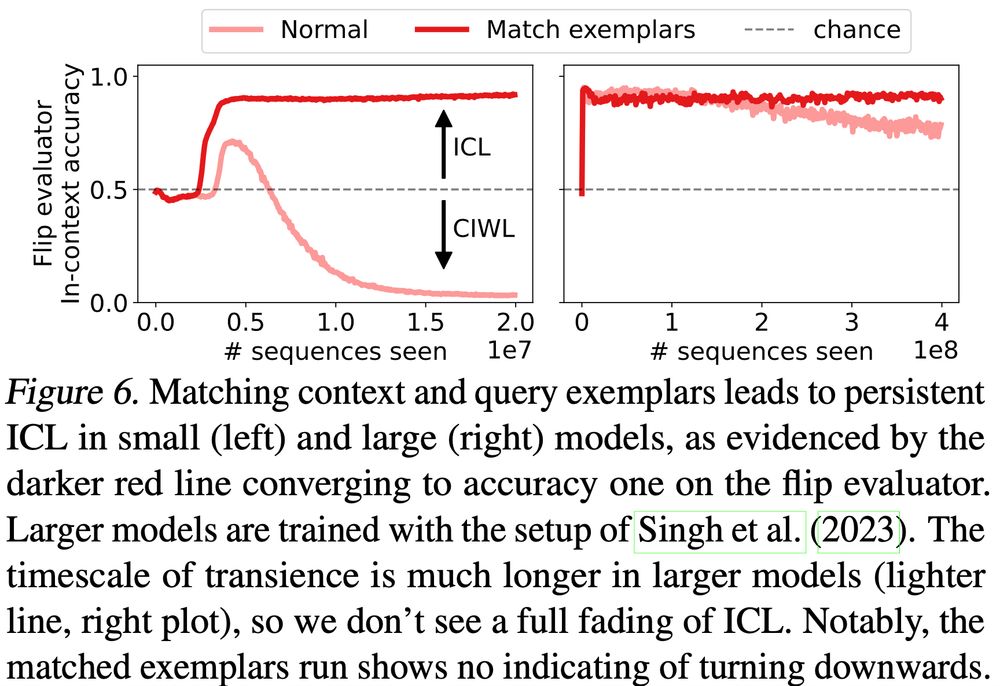

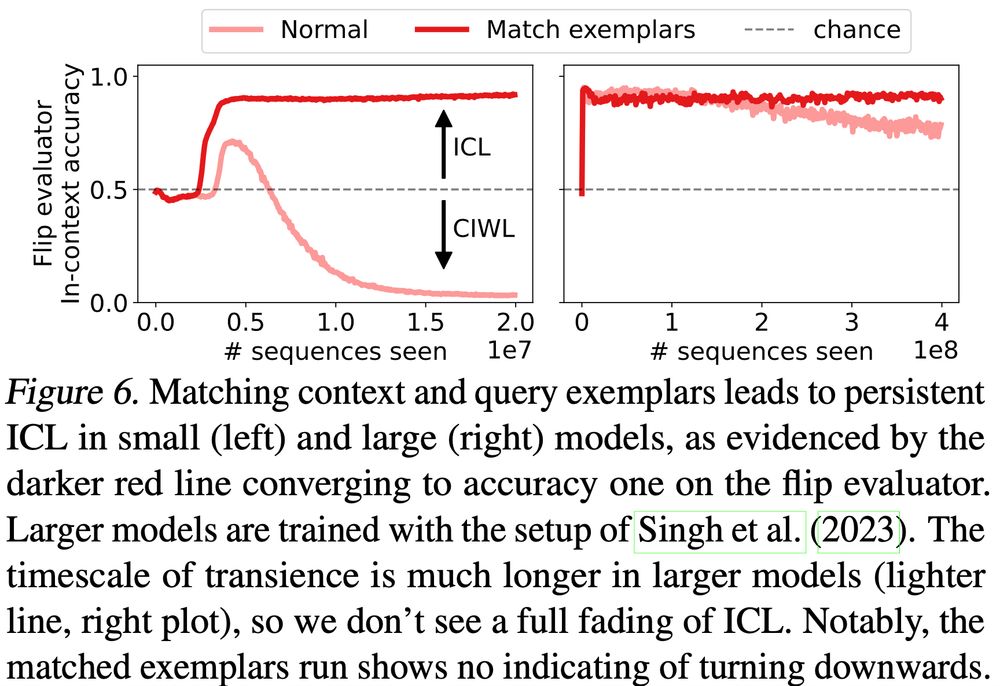

Finally, we carry forward the intuitions from the minimal mathematical model to find a setting where ICL is emergent and persistent. This intervention holds true at larger scales as well, demonstrating the benefits of the improved mechanistic understanding! (9/11)

11.03.2025 07:13 — 👍 0 🔁 0 💬 1 📌 0

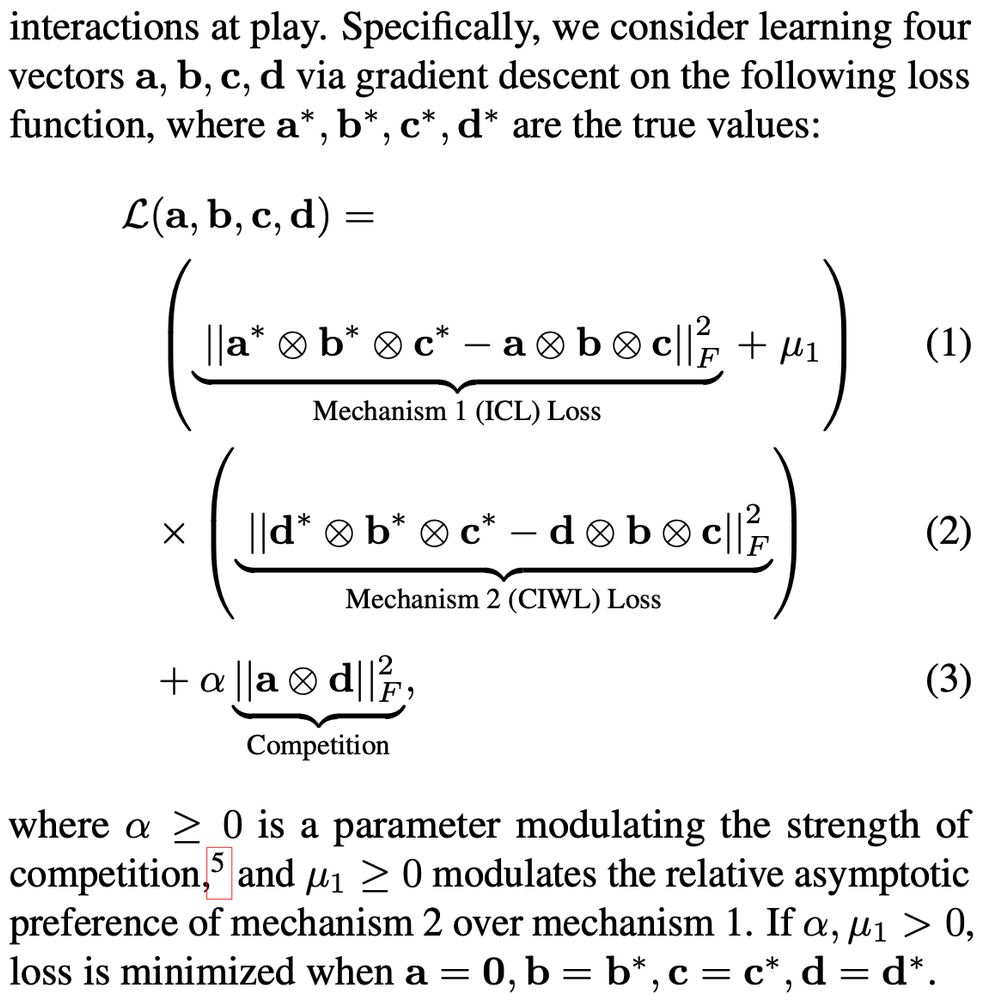

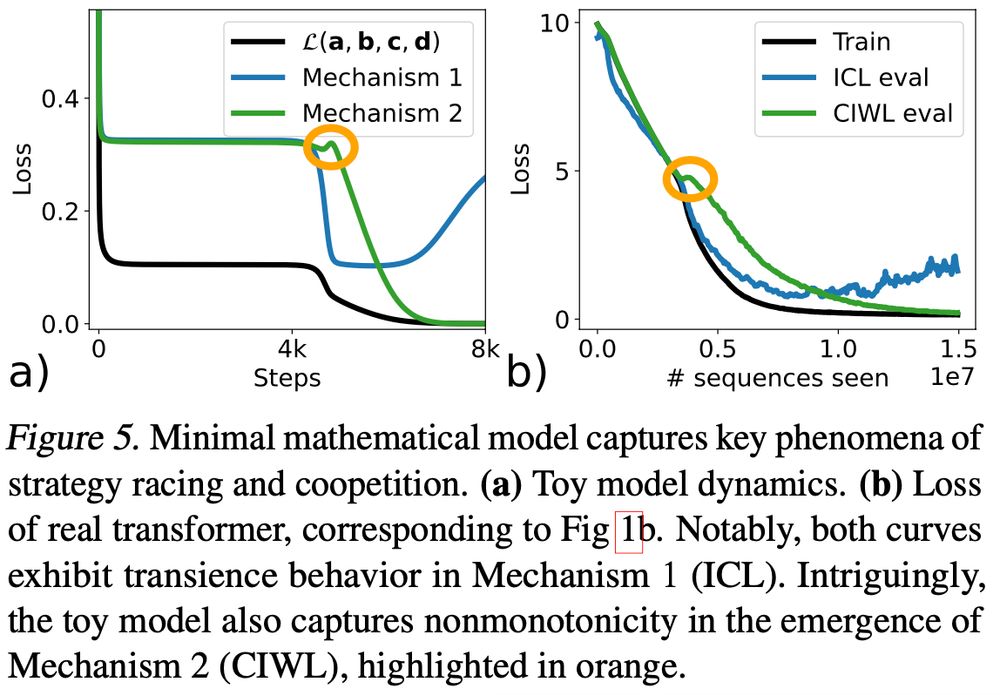

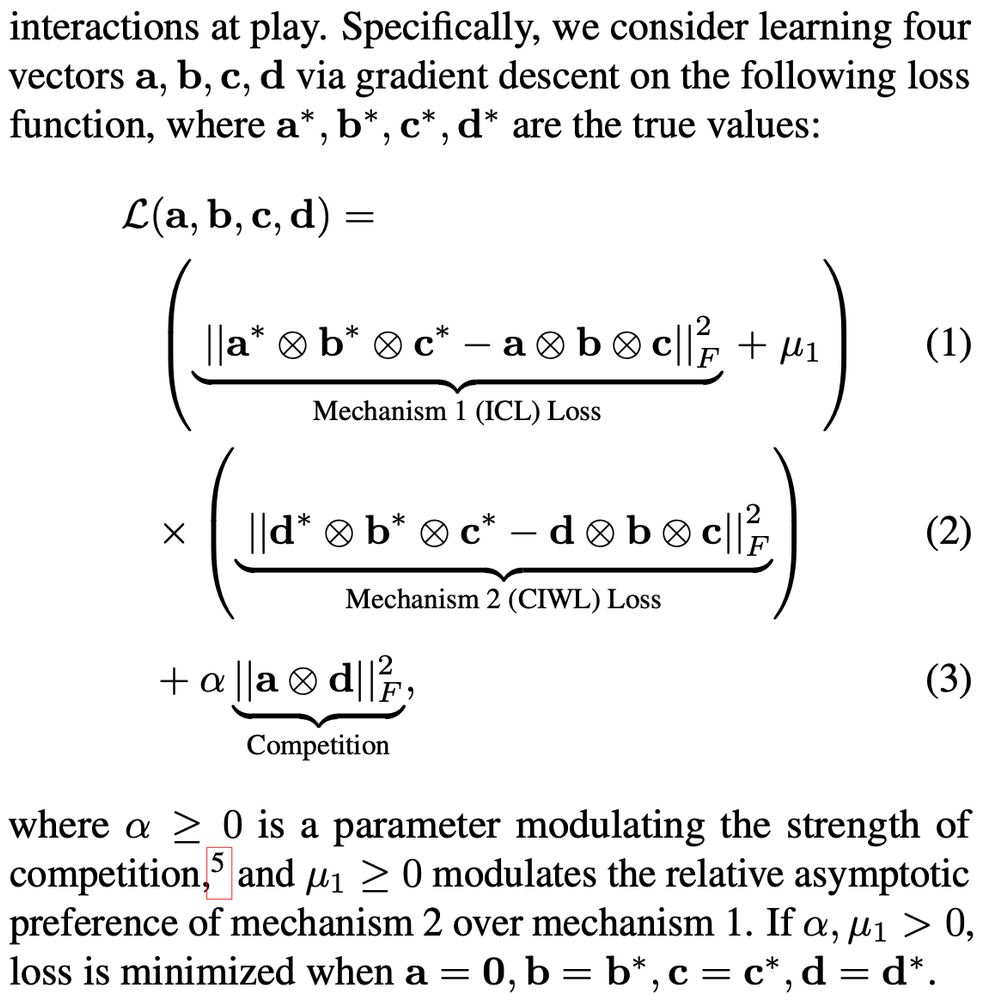

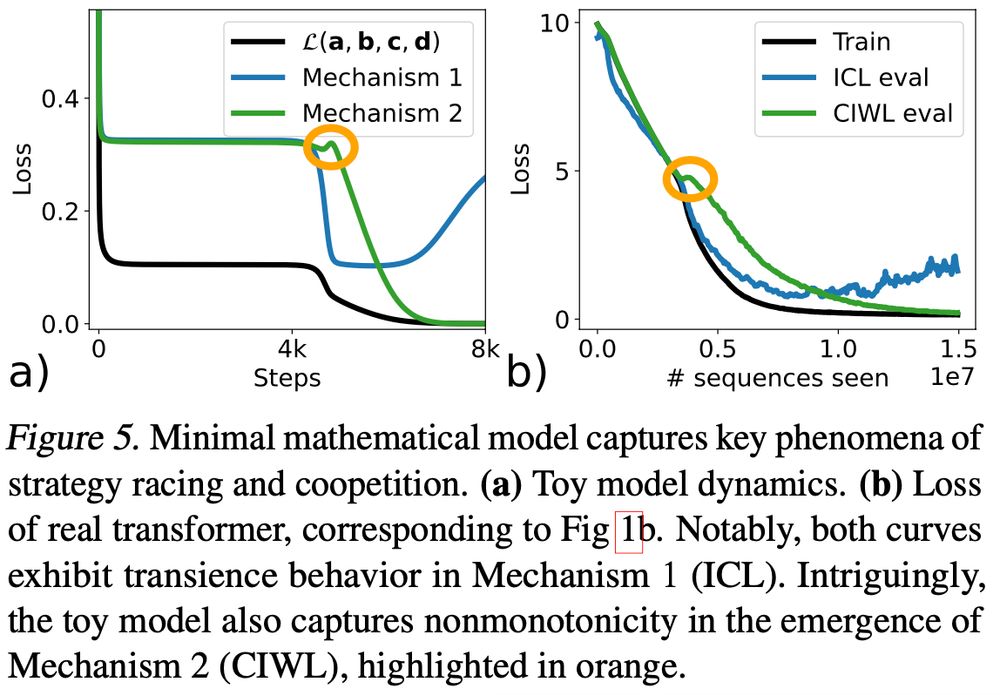

We propose a minimal model of the joint competitive-cooperative ("coopetitive") interactions, which captures the key transience phenomena. We were pleasantly surprised when the model even captured weird non-monotonicities in the formation of the slower mechanism! (8/11)

11.03.2025 07:13 — 👍 0 🔁 0 💬 1 📌 0

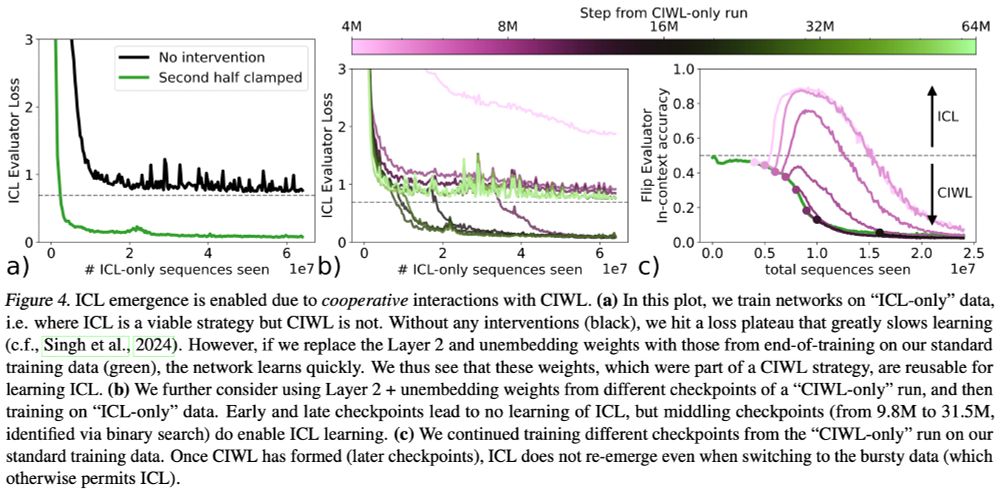

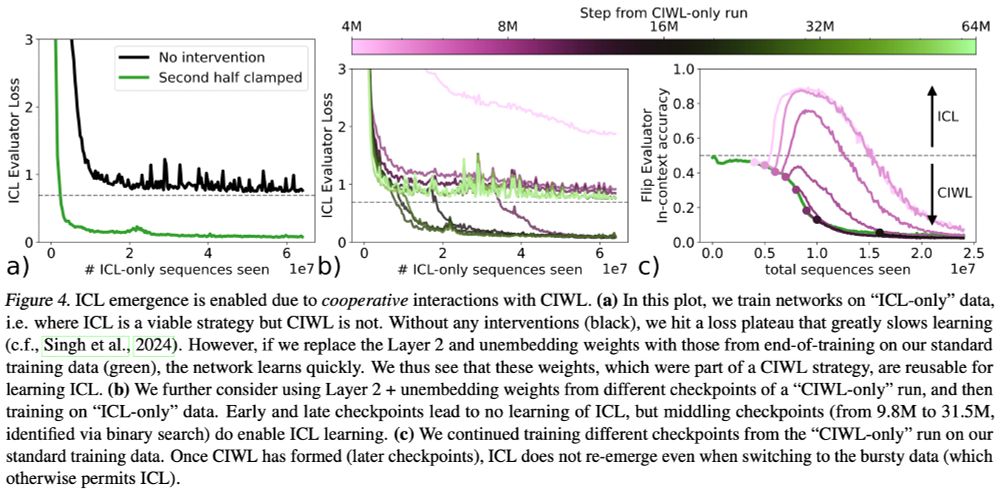

But why does ICL emerge in the first place, if only to give way to CIWL? The ICL solution lies close to the path to the CIWL strategy. Since ICL also helps with the task (and CIWL is "slow"), it emerges on the way to the CIWL strategy due to the cooperative interactions. (7/11)

11.03.2025 07:13 — 👍 0 🔁 0 💬 1 📌 0

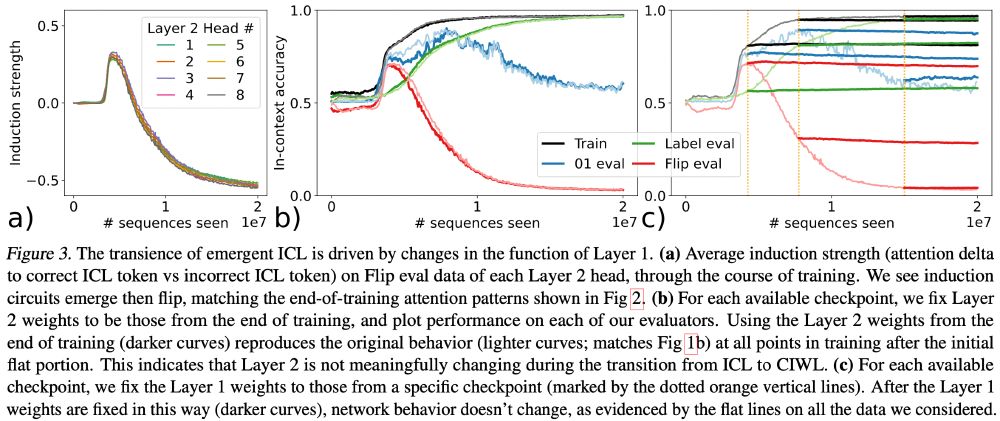

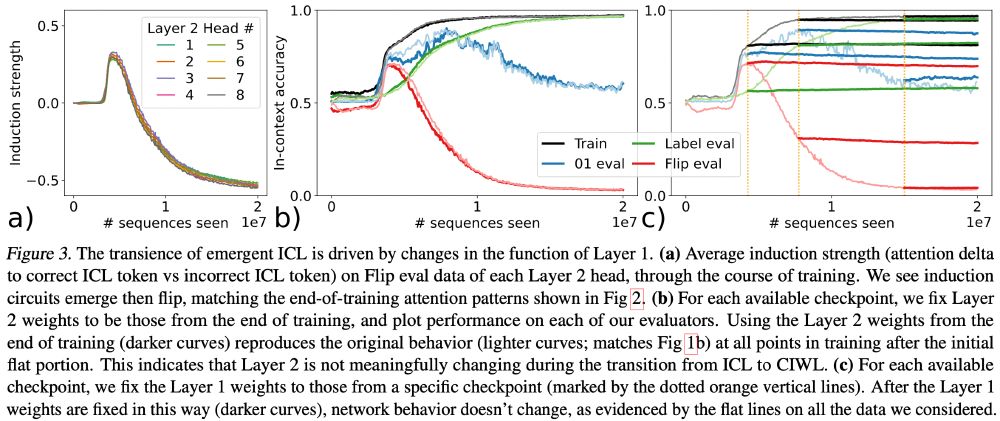

Specifically, we find that Layer 2 circuits (the canonical "induction head") are largely conserved (after an initial phase change), while Layer 1 circuits switch from attending to the previous token to attending to self, driving the switch from ICL to CIWL. (6/11)

11.03.2025 07:13 — 👍 0 🔁 0 💬 1 📌 0
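To make the Layer 1 switch described in (6/11) concrete, here is a minimal sketch of the two idealized attention patterns. It is an illustration only, not the paper's code: the one-hot patterns, the helper names, and the toy token sequence are all assumptions.

```python
from typing import List

Pattern = List[List[float]]


def previous_token_pattern(n: int) -> Pattern:
    """Idealized pattern: position i attends only to position i-1.

    Position 0 has no predecessor, so it attends to itself (an assumption).
    """
    return [[1.0 if j == max(i - 1, 0) else 0.0 for j in range(n)] for i in range(n)]


def self_pattern(n: int) -> Pattern:
    """Idealized pattern: every position attends only to itself."""
    return [[1.0 if j == i else 0.0 for j in range(n)] for i in range(n)]


def attended_tokens(pattern: Pattern, tokens: List[str]) -> List[str]:
    """For one-hot rows, report which token each position reads from."""
    return [tokens[row.index(max(row))] for row in pattern]


# Hypothetical toy sequence: two exemplar-label pairs followed by a query.
tokens = ["ex1", "lab1", "ex2", "lab2", "query"]
reads_prev = attended_tokens(previous_token_pattern(len(tokens)), tokens)
reads_self = attended_tokens(self_pattern(len(tokens)), tokens)
```

With the previous-token pattern, each label position reads its preceding exemplar. With the self pattern, each label position reads only its own token.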

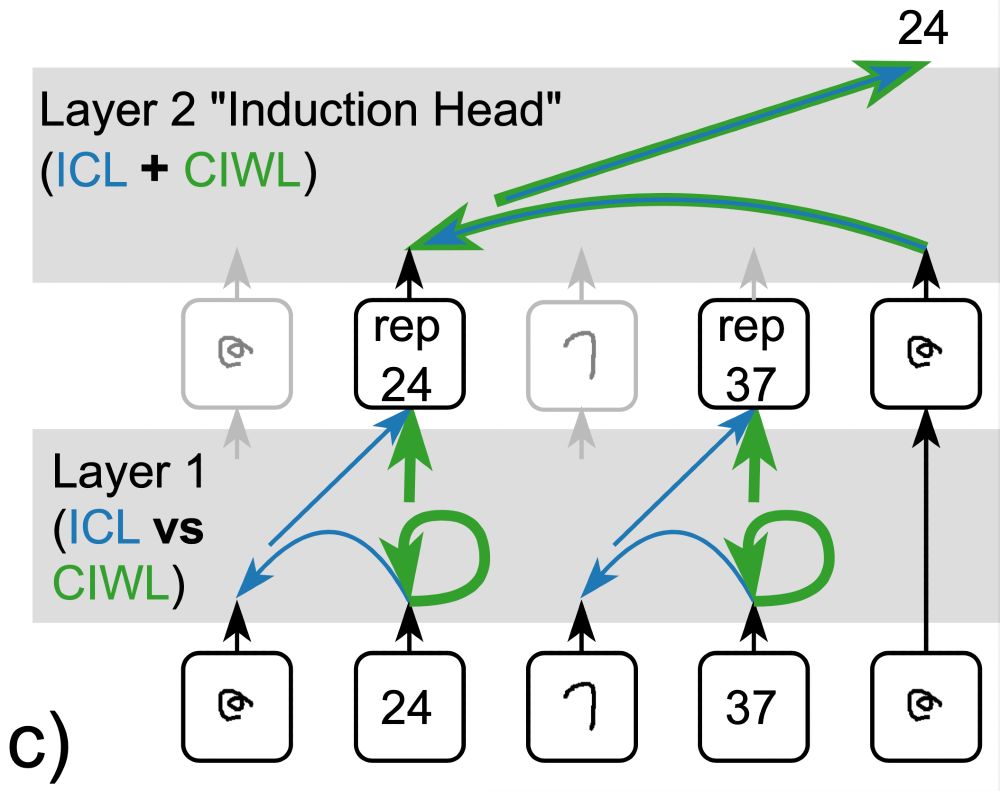

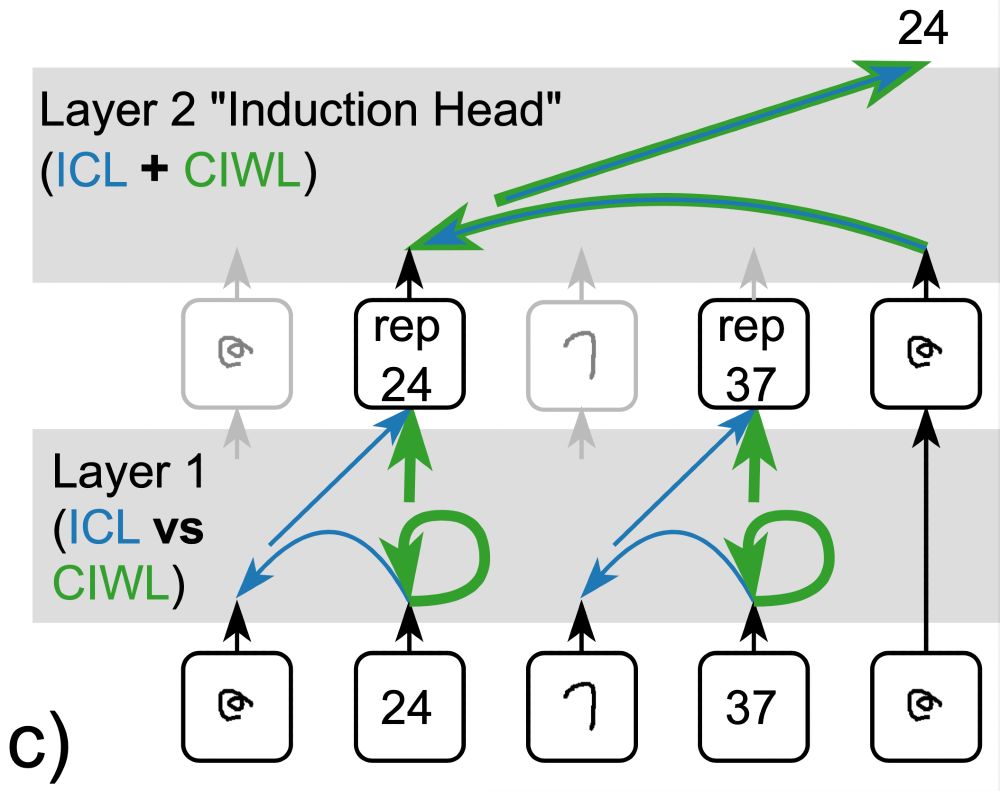

This strategy is implemented through attention heads serving as skip-trigram copiers (e.g., … [label] … [query] -> [label]). While seemingly distinct from the induction circuits that lead to ICL, we find remarkably shared substructure! (5/11)

11.03.2025 07:13 — 👍 0 🔁 0 💬 1 📌 0

We find that the asymptotic mechanism preferred by the model is actually a hybrid strategy, which we term context-constrained in-weights learning (CIWL): the network relies on its exemplar-label mapping from training, but requires the correct label in context. (4/11)

11.03.2025 07:13 — 👍 0 🔁 0 💬 1 📌 0
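The CIWL definition in (4/11) can be contrasted with pure in-context and pure in-weights strategies using toy lookup functions. This is a hedged sketch, not the model's actual mechanism: the function names, the dictionary standing in for "weights", and the example data are all hypothetical.

```python
from typing import Dict, List, Optional, Tuple

Pair = Tuple[str, str]  # (exemplar, label)


def icl_predict(context: List[Pair], query: str) -> Optional[str]:
    """Pure in-context strategy: copy the label paired with the query in context."""
    for exemplar, label in context:
        if exemplar == query:
            return label
    return None


def iwl_predict(weights: Dict[str, str], query: str) -> Optional[str]:
    """Pure in-weights strategy: use the mapping memorized during training."""
    return weights.get(query)


def ciwl_predict(weights: Dict[str, str], context: List[Pair], query: str) -> Optional[str]:
    """Toy CIWL: use the memorized mapping, but only if that label appears in context."""
    label = weights.get(query)
    labels_in_context = {lab for _, lab in context}
    return label if label in labels_in_context else None


# Hypothetical memorized mapping and two probe contexts.
weights = {"cat": "A", "dog": "B"}
flipped = [("cat", "B"), ("bird", "C")]      # query present, but with a swapped label
label_only = [("dog", "A"), ("bird", "C")]   # query absent, correct label present
```

On `flipped`, the in-context strategy returns `"B"`, the in-weights strategy returns `"A"`, and the toy CIWL strategy abstains because `"A"` is not in context. On `label_only`, the toy CIWL strategy returns `"A"` even though the query exemplar never appears in context.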

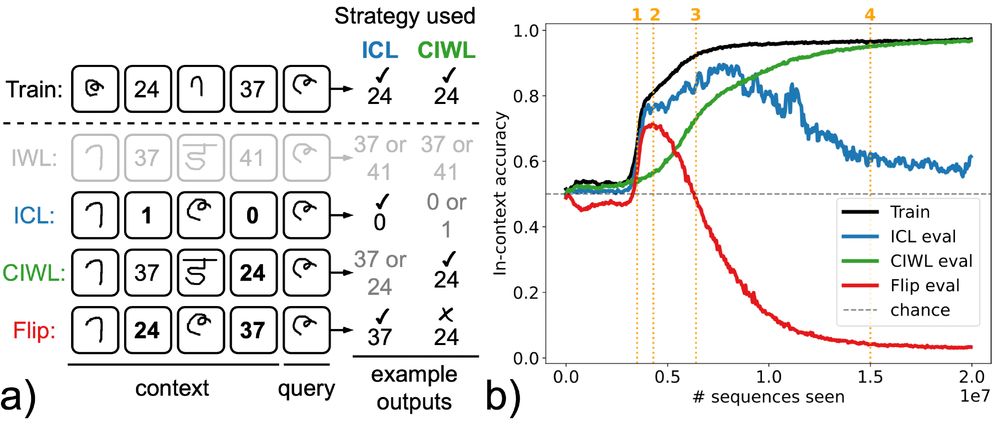

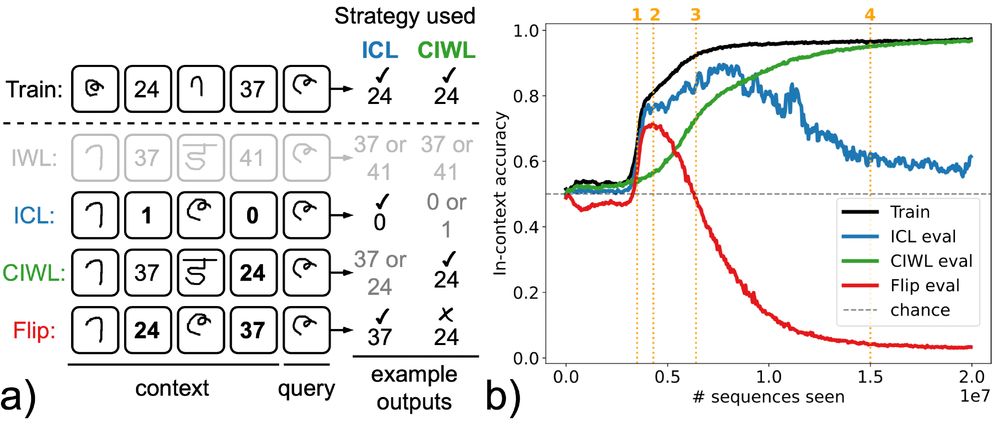

Like prior work, we train on sequences of exemplar-label pairs, which permit in-context and in-weights strategies. We test for these strategies using out-of-distribution evaluation sequences, recovering the classic transience phenomenon (blue). (3/11)

11.03.2025 07:13 — 👍 0 🔁 0 💬 1 📌 0
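As a rough illustration of the setup in (3/11), the sketch below builds a training sequence and two out-of-distribution probes. The construction is an assumption, not the paper's data pipeline: the helper names are hypothetical, labels are taken to be distinct per exemplar, and the probes simply make one strategy uninformative at a time.

```python
import random
from typing import Dict, List, Tuple

Pair = Tuple[str, str]  # (exemplar, label)
Sequence = Tuple[List[Pair], str, str]  # (context, query, target)


def make_train_sequence(mapping: Dict[str, str], n_pairs: int, rng: random.Random) -> Sequence:
    """Context of exemplar-label pairs plus a query drawn from that context.

    Both an in-context and an in-weights strategy can solve this sequence.
    """
    exemplars = rng.sample(sorted(mapping), n_pairs)
    context = [(e, mapping[e]) for e in exemplars]
    query = rng.choice(exemplars)
    return context, query, mapping[query]


def make_icl_probe(mapping: Dict[str, str], n_pairs: int, rng: random.Random) -> Sequence:
    """Rotate labels within the context so the memorized mapping gives the wrong answer.

    Requires n_pairs >= 2 and distinct labels, so every exemplar gets a new label.
    """
    exemplars = rng.sample(sorted(mapping), n_pairs)
    labels = [mapping[e] for e in exemplars]
    rotated = labels[1:] + labels[:1]
    context = list(zip(exemplars, rotated))
    query = rng.choice(exemplars)
    return context, query, dict(context)[query]


def make_iwl_probe(mapping: Dict[str, str], n_pairs: int, rng: random.Random) -> Sequence:
    """Keep the query's exemplar and label out of context, so only memorization helps."""
    exemplars = rng.sample(sorted(mapping), n_pairs + 1)
    query, rest = exemplars[0], exemplars[1:]
    context = [(e, mapping[e]) for e in rest]
    return context, query, mapping[query]


# Hypothetical toy mapping with one distinct label per exemplar.
mapping = {f"x{i}": f"y{i}" for i in range(10)}
rng = random.Random(0)
icl_context, icl_query, icl_target = make_icl_probe(mapping, 4, rng)
iwl_context, iwl_query, iwl_target = make_iwl_probe(mapping, 4, rng)
```

Accuracy on the first probe would then indicate in-context behavior, and accuracy on the second would indicate in-weights behavior, under the assumptions above.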

We use the transience of emergent in-context learning (ICL) as a case study, first reproducing it in a 2-layer attention-only model to enable mechanistic study. (2/11)

x.com/Aaditya6284/...

11.03.2025 07:13 — 👍 0 🔁 0 💬 1 📌 0

Transformers employ different strategies through training to minimize loss, but how do these trade off and why?

Excited to share our newest work, where we show remarkably rich competitive and cooperative interactions (termed "coopetition") as a transformer learns.

Read on 🔎⏬

11.03.2025 07:13 — 👍 7 🔁 4 💬 1 📌 0

The broader spectrum of in-context learning

The ability of language models to learn a task from a few examples in context has generated substantial interest. Here, we provide a perspective that situates this type of supervised few-shot learning...

What counts as in-context learning (ICL)? Typically, you might think of it as learning a task from a few examples. However, we’ve just written a perspective (arxiv.org/abs/2412.03782) suggesting interpreting a much broader spectrum of behaviors as ICL! Quick summary thread: 1/7

10.12.2024 18:17 — 👍 123 🔁 31 💬 2 📌 1