YouTube video by Devoxx

Deep Dive: GraalVM in Practice by Alina Yurenko

My @graalvm.org Native Image deep dive recording is already up: youtube.com/watch?v=1J6m... 🐰🚀

It includes the very first public demo of Project Crema, Open World for Native Image, at 2:19:54 😅

Thank you, @devoxx.com!

All demos and notes are here: github.com/alina-yur/gr...

08.10.2025 15:30 — 👍 23 🔁 7 💬 0 📌 0

YouTube video by Coffee + Software

Run a supercharged LLM powered by Java and GraalVM #ai #programming #javaprogramming #java #graalvm

Run an LLM with a supercharged engine powered by Java and GraalVM (h/t @alina-yurenko.bsky.social)

www.youtube.com/shorts/7zSEa...

16.04.2025 16:24 — 👍 17 🔁 3 💬 0 📌 0

✅ @graalvm.org Native Image

✅ Llama3.java

✅ Vector API, FFM API

✅ Apple Silicon ❤️

— `git clone github.com/mukel/llama3... `

— `sdk install java 25.ea.17-graal`

— `make native` (optionally preload a model for zero overhead)

— Profit!🚀

#Java #GraalVM #LLM #LLama

16.04.2025 12:13 — 👍 32 🔁 9 💬 0 📌 1

I had lots of fun talking about my early days as a dev, how I caused double credit bookings all over Germany, how I stopped smoking to survive long GraalVM meetings, and how to heat the room with Java inception.

Already looking forward to part 2.

16.02.2025 14:38 — 👍 18 🔁 7 💬 1 📌 0

Crafting “Crafting Interpreters” – journal.stuffwithstuff.com

"Crafting Interpreters" checks all the boxes. Here's a nice writeup from the author: journal.stuffwithstuff.com/2020/04/05/c...

07.02.2025 15:44 — 👍 2 🔁 0 💬 0 📌 0

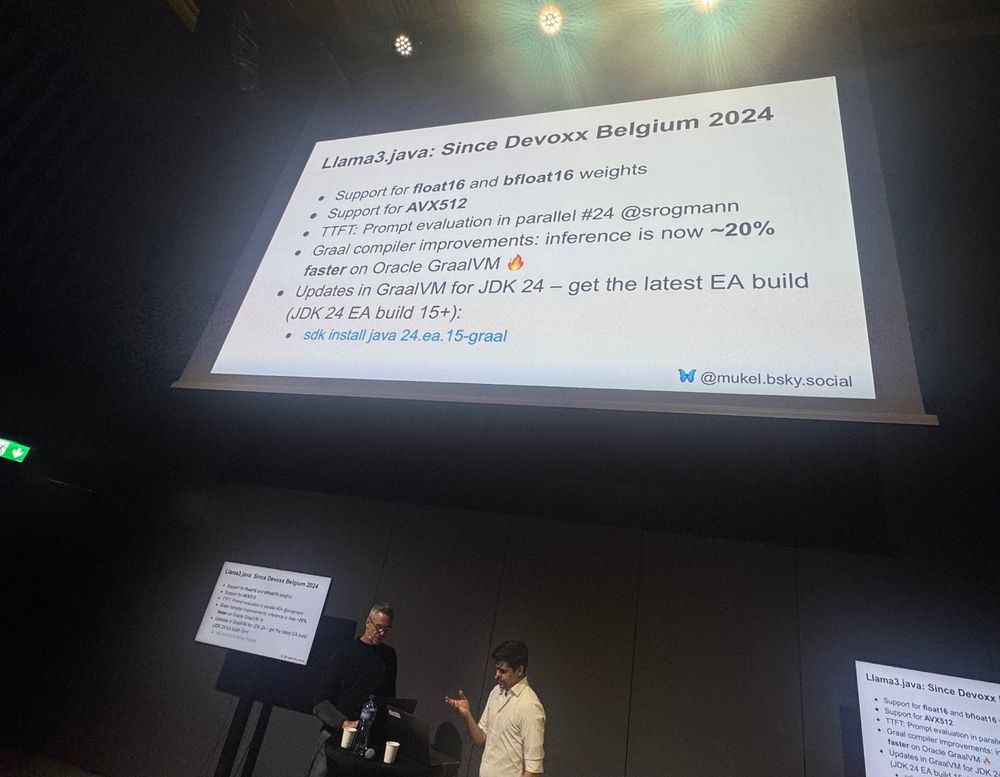

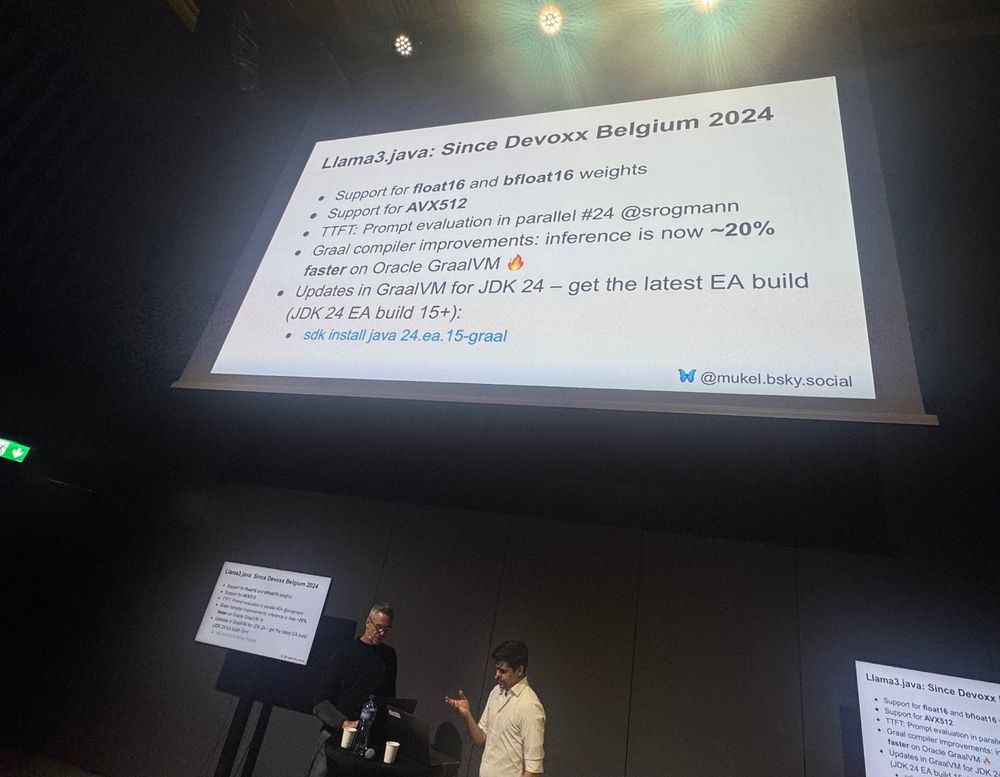

what's new in llama3.java and the upcoming @graalvm.org for JDK 24 🔥

Try it out here: github.com/mukel/llama3...

@stephanjanssen.be @mukel.bsky.social

#VDCERN #VoxxedDaysCERN

15.01.2025 10:24 — 👍 23 🔁 5 💬 1 📌 0

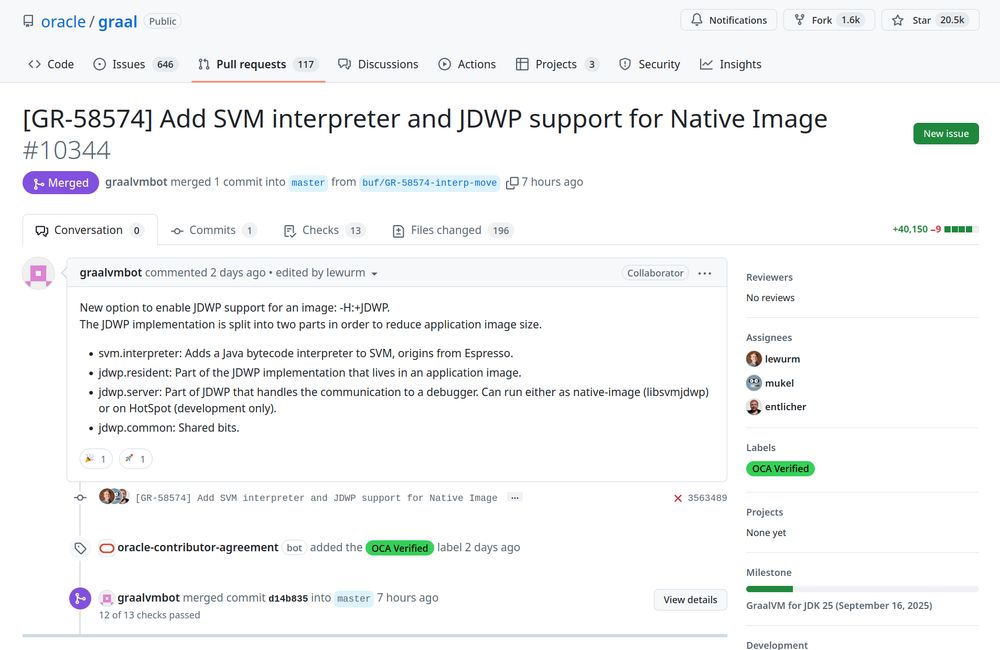

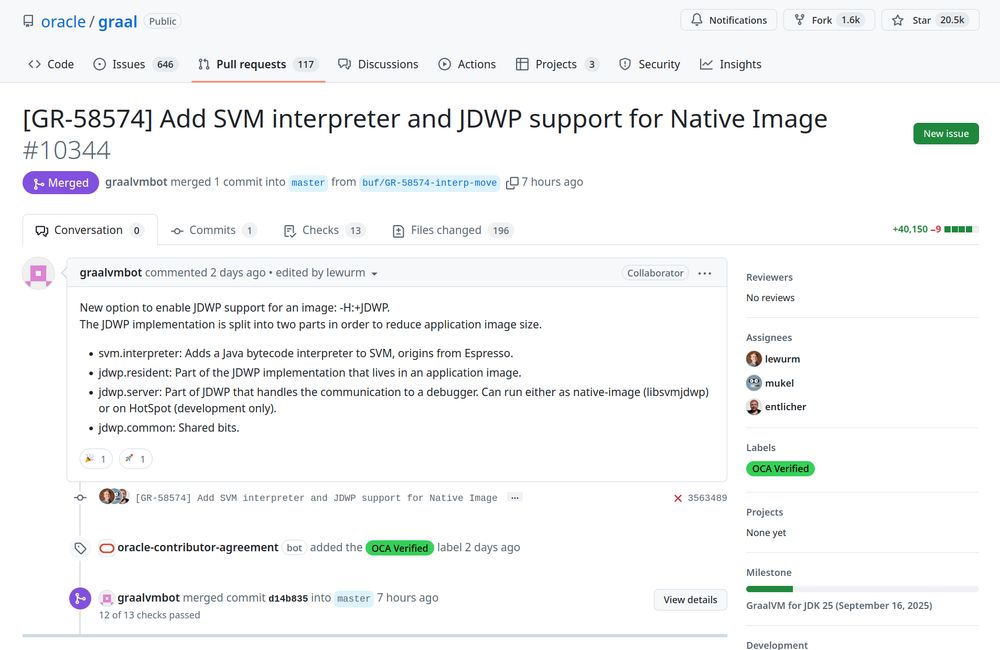

This will be a big deal 🪄🤩. Normally, the debugging experience for natively compiled languages gets obscured by compiler optimizations such as inlining. Not here. GraalVM native images can be debugged with all optimizations enabled, and it looks just the same as without them.

20.12.2024 12:00 — 👍 12 🔁 2 💬 1 📌 1

We just merged the current status of the upcoming JDWP support for @graalvm.org Native Image! 🥳

This will soon provide developers with the same debugging experience they are used to in Java, but for native images! Stay tuned for more details.

github.com/oracle/graal...

20.12.2024 11:46 — 👍 55 🔁 26 💬 3 📌 2

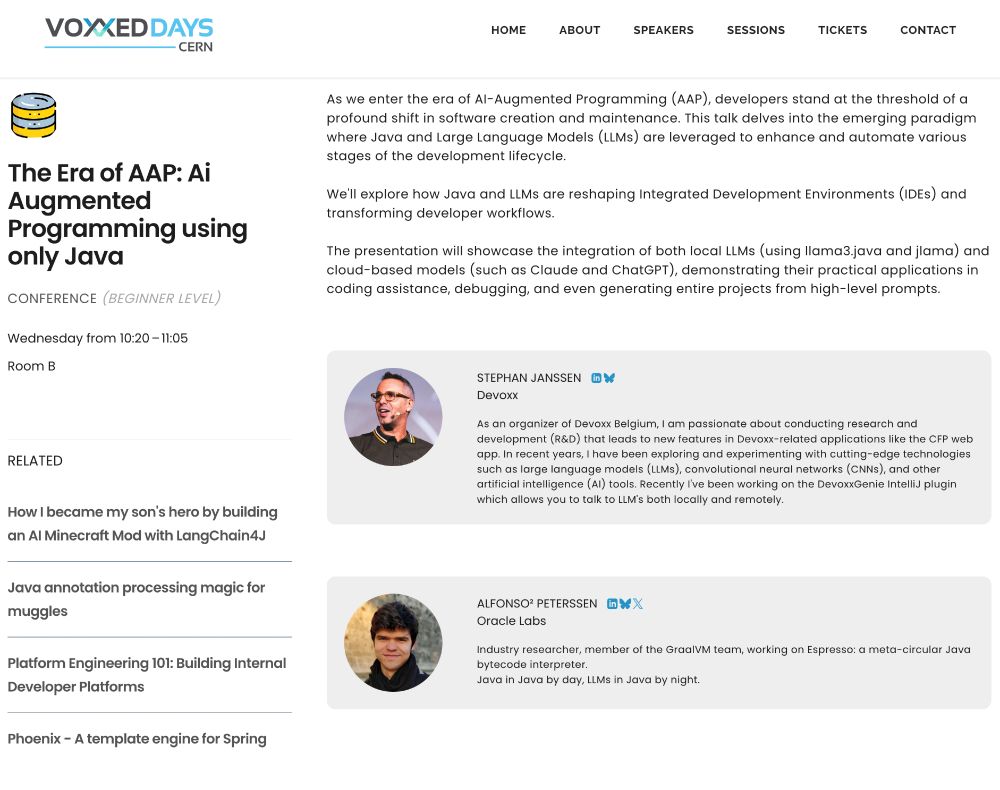

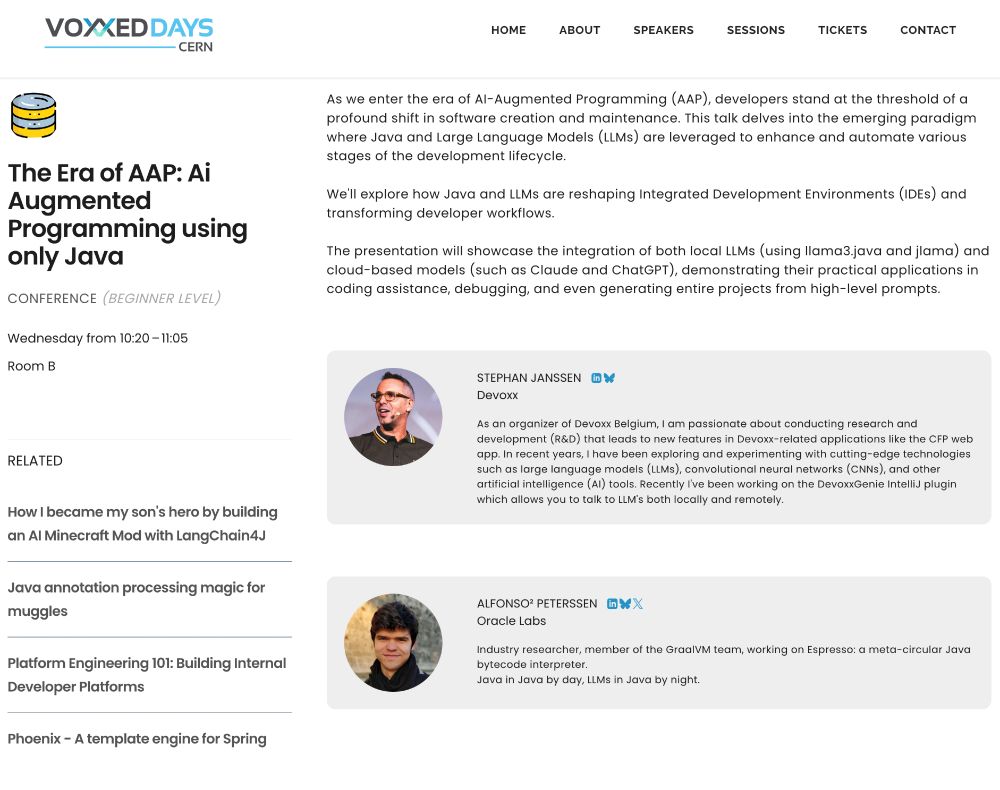

Excited to welcome @mukel.bsky.social as my co-speaker for the VoxxedDays #CERN talk on "The Era of AAP: Ai Augmented Programming using only Java" ☕️ 🚀 🔥

cern.voxxeddays.com/talk/the-era...

25.11.2024 14:12 — 👍 15 🔁 3 💬 0 📌 0
