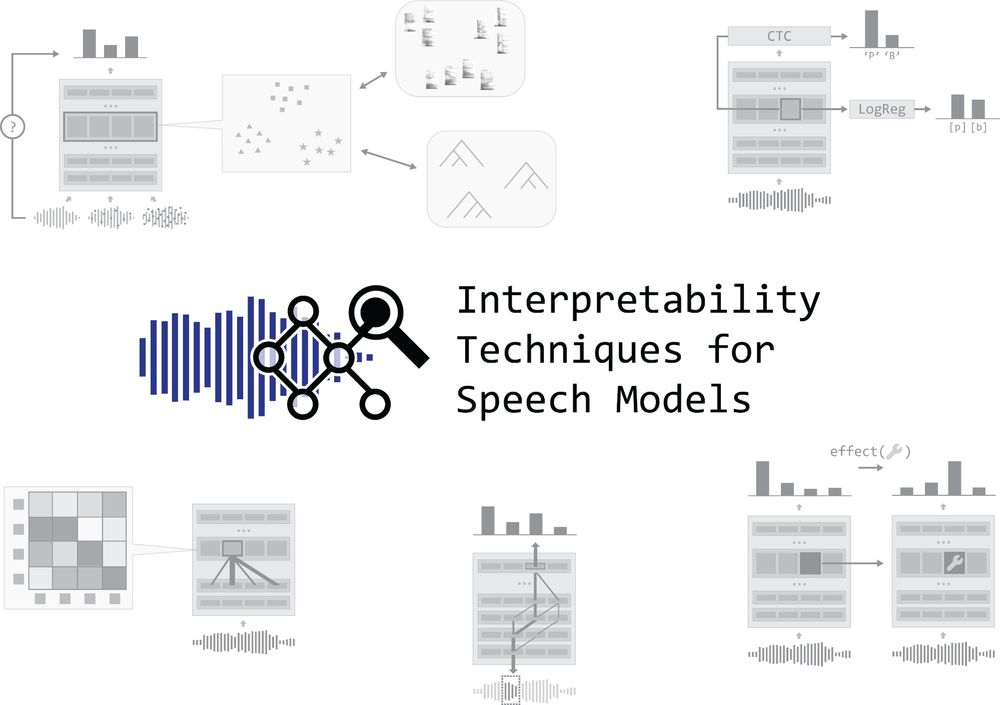

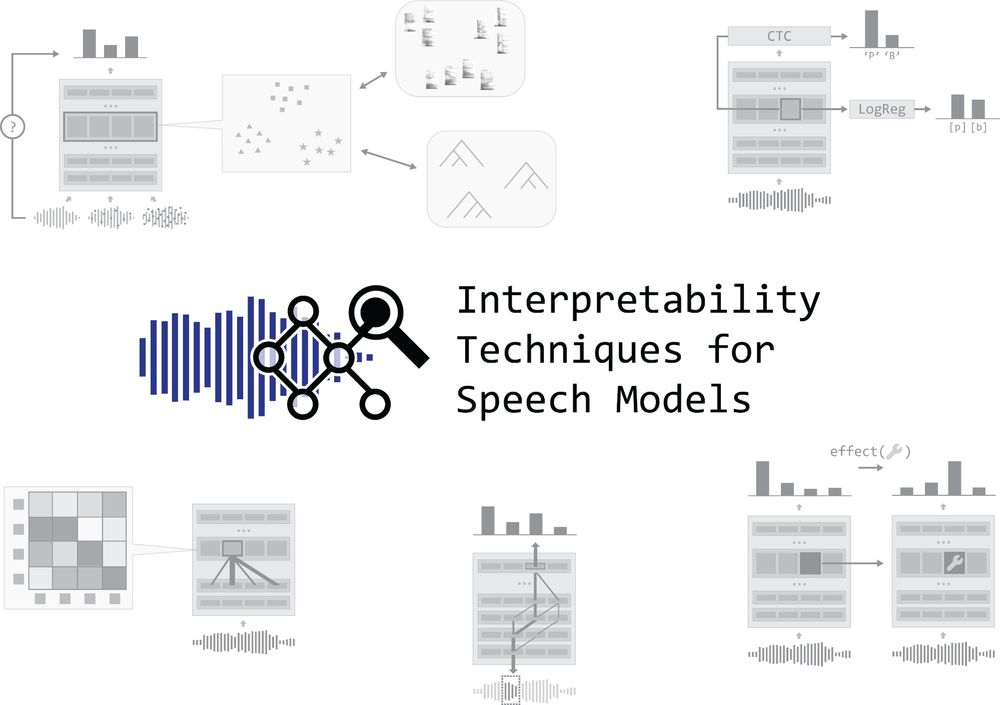

Had such a great time presenting our tutorial on Interpretability Techniques for Speech Models at #Interspeech2025! 🔍

For anyone looking for an introduction to the topic, we've now uploaded all materials to the website: interpretingdl.github.io/speech-inter...

19.08.2025 21:23 — 👍 40 🔁 14 💬 2 📌 1

🔥 I am super excited to be presenting a poster at #ACL2025 in Vienna next week! 🌏

This is my first big conference!

📅 Tuesday morning, 10:30–12:00, during Poster Session 2.

💬 If you're around, feel free to message me. I would be happy to connect, chat, or have a drink!

25.07.2025 15:37 — 👍 5 🔁 1 💬 1 📌 0

ACL paper alert! What structure is lost when using linearizing interp methods like Shapley? We show the nonlinear interactions between features reflect structures described by the sciences of syntax, semantics, and phonology.

12.06.2025 18:56 — 👍 55 🔁 12 💬 3 📌 1

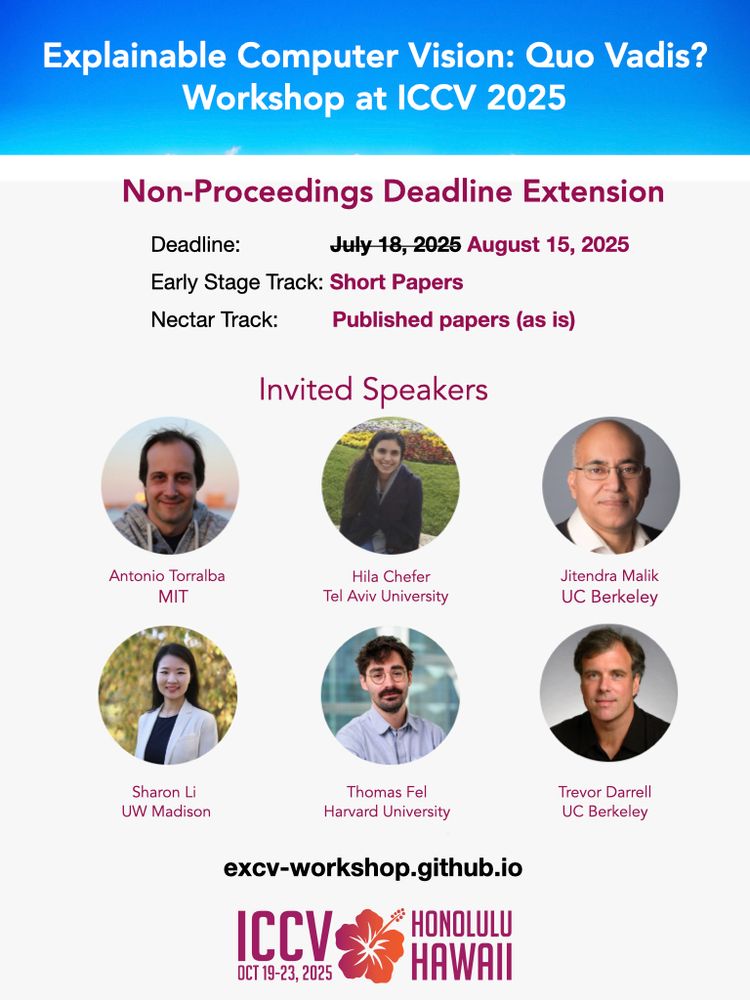

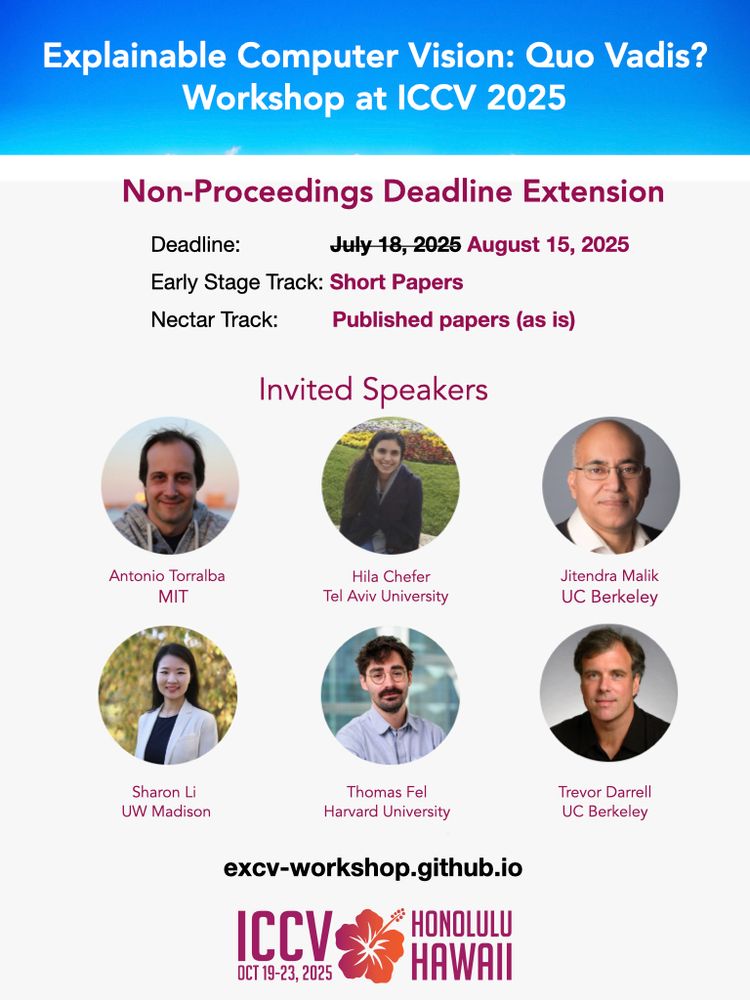

🚨Deadline Extension Alert!

Our Non-proceedings track is open till August 15th for the eXCV workshop at ICCV.

Our nectar track accepts published papers, as is.

More info at: excv-workshop.github.io

@iccv.bsky.social #ICCV2025

18.07.2025 09:31 — 👍 5 🔁 5 💬 1 📌 0

10 days to go! Still time to run your method and submit!

23.07.2025 08:21 — 👍 1 🔁 1 💬 0 📌 0


First Workshop on Interpreting Cognition in Deep Learning Models (NeurIPS 2025)

Excited to announce the first workshop on CogInterp: Interpreting Cognition in Deep Learning Models @ NeurIPS 2025! 📣

How can we interpret the algorithms and representations underlying complex behavior in deep learning models?

🌐 coginterp.github.io/neurips2025/

1/4

16.07.2025 13:08 — 👍 58 🔁 19 💬 1 📌 3

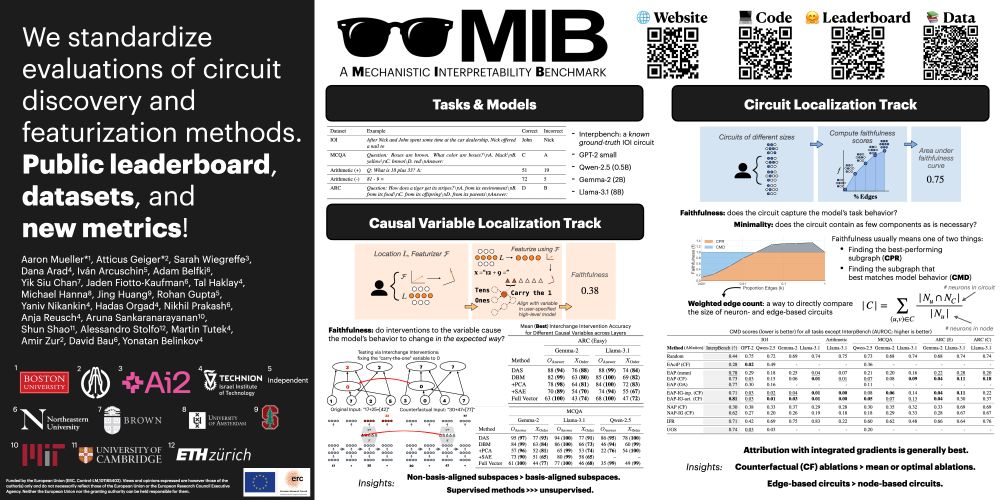

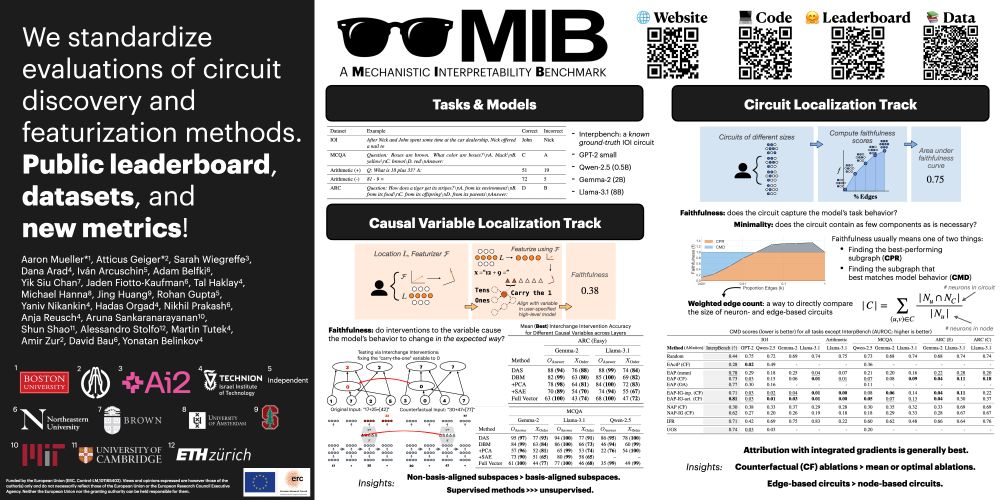

If you're at #ICML2025, chat with me, @sarah-nlp.bsky.social, Atticus, and others at our poster 11am - 1:30pm at East #1205! We're establishing a 𝗠echanistic 𝗜nterpretability 𝗕enchmark.

We're planning to keep this a living benchmark; come by and share your ideas/hot takes!

17.07.2025 17:45 — 👍 13 🔁 3 💬 0 📌 0

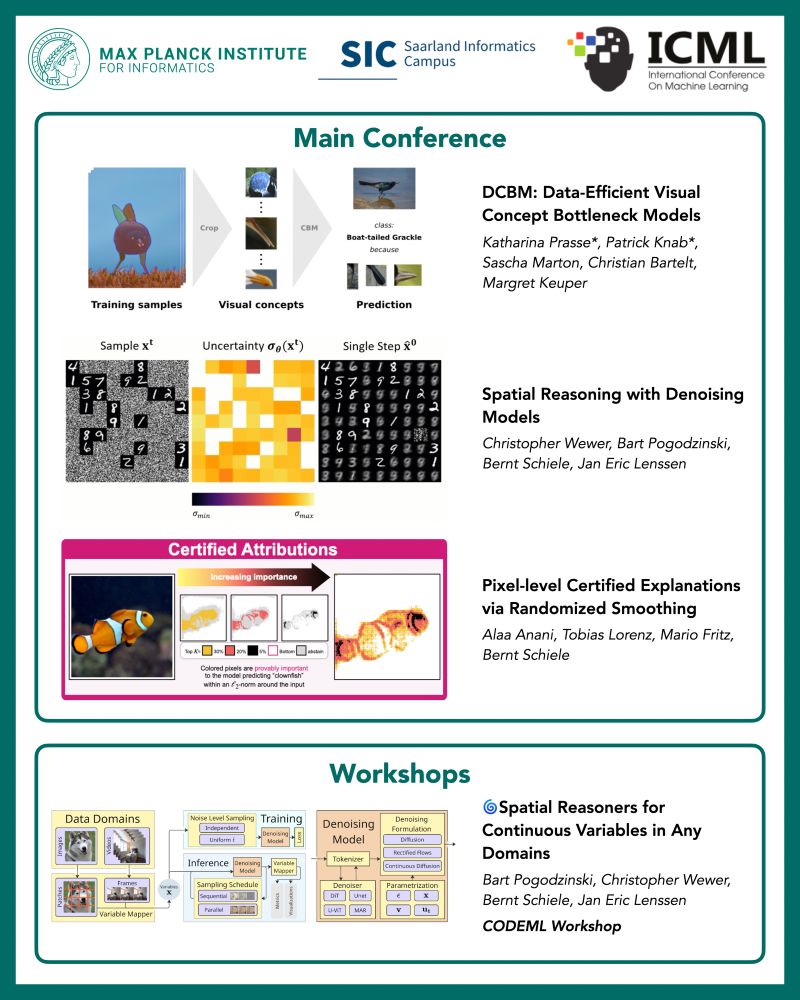

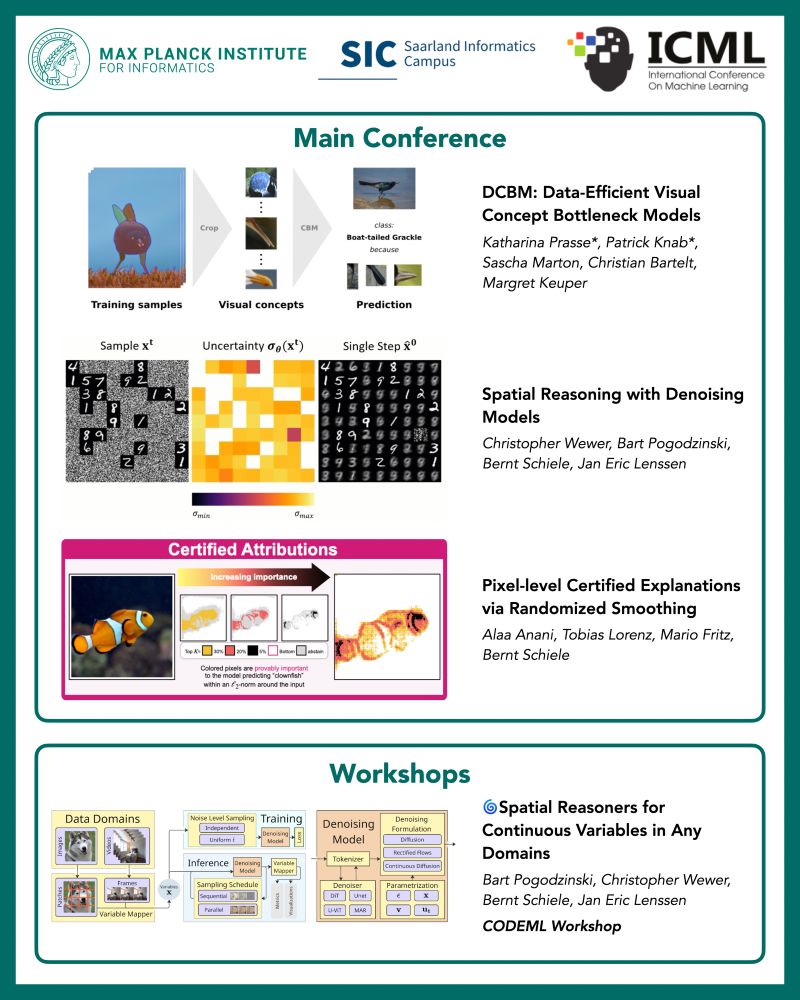

Poster is up and we are looking forward to the #ICML2025 poster session. Come join @patrickknab.bsky.social and me at Poster #W-214 presenting our work with @smarton.bsky.social, Christian Bartelt, and @margretkeuper.bsky.social #UniMa

17.07.2025 18:00 — 👍 1 🔁 2 💬 0 📌 0

I am at #ICML2025! 🇨🇦🏞️

Catch me:

1️⃣ Presenting this paper👇 tomorrow 11am-1:30pm at East #1205

2️⃣ At the Actionable Interpretability @actinterp.bsky.social workshop on Saturday in East Ballroom A (I’m an organizer!)

16.07.2025 23:09 — 👍 3 🔁 1 💬 1 📌 0

Causal Abstraction, the theory behind DAS, tests if a network realizes a given algorithm. We show (w/ @denissutter.bsky.social, T. Hofmann, @tpimentel.bsky.social ) that the theory collapses without the linear representation hypothesis—a problem we call the non-linear representation dilemma.

17.07.2025 10:57 — 👍 5 🔁 2 💬 1 📌 0

Join us on Thursday 11-13 in poster hall West #214 to discuss image segments as concepts. #ICML2025 @patrickknab.bsky.social @smarton.bsky.social Christian Bartelt @margretkeuper.bsky.social @keuper-labs.bsky.social

15.07.2025 22:01 — 👍 2 🔁 2 💬 0 📌 0

🌌🛰️🔭Want to explore universal visual features? Check out our interactive demo of concepts learned from our #ICML2025 paper "Universal Sparse Autoencoders: Interpretable Cross-Model Concept Alignment".

Come see our poster at 4pm on Tuesday in East Exhibition hall A-B, E-1208!

15.07.2025 02:36 — 👍 12 🔁 6 💬 1 📌 3

Papers accepted at ICML 2025 from the Computer Vision and Machine Learning Department at the Max Planck Institute for Informatics.

Papers being presented from our group at #ICML2025!

Congratulations to all the authors! To know more, visit us in the poster sessions!

A 🧵with more details:

@icmlconf.bsky.social @mpi-inf.mpg.de

13.07.2025 08:00 — 👍 21 🔁 5 💬 2 📌 0

Our #ICML position paper: #XAI is similar to applied statistics: it uses summary statistics in an attempt to answer real-world questions. But authors need to state concretely (!) how their XAI statistics contribute to answering which concrete (!) question!

arxiv.org/abs/2402.02870

11.07.2025 07:35 — 👍 6 🔁 2 💬 0 📌 0

🚨 New preprint! 🚨

Everyone loves causal interp. It’s coherently defined! It makes testable predictions about mechanistic interventions! But what if we had a different objective: predicting model behavior not under mechanistic interventions, but on unseen input data?

10.07.2025 14:30 — 👍 53 🔁 11 💬 3 📌 2

Introducing the speakers for the eXCV workshop at ICCV, Hawaii. Get ready for many stimulating and insightful talks and discussions.

Our Non-proceedings track is still open!

Paper submission deadline: July 18, 2025

More info at: excv-workshop.github.io

@iccv.bsky.social #ICCV2025

10.07.2025 12:49 — 👍 6 🔁 4 💬 0 📌 0

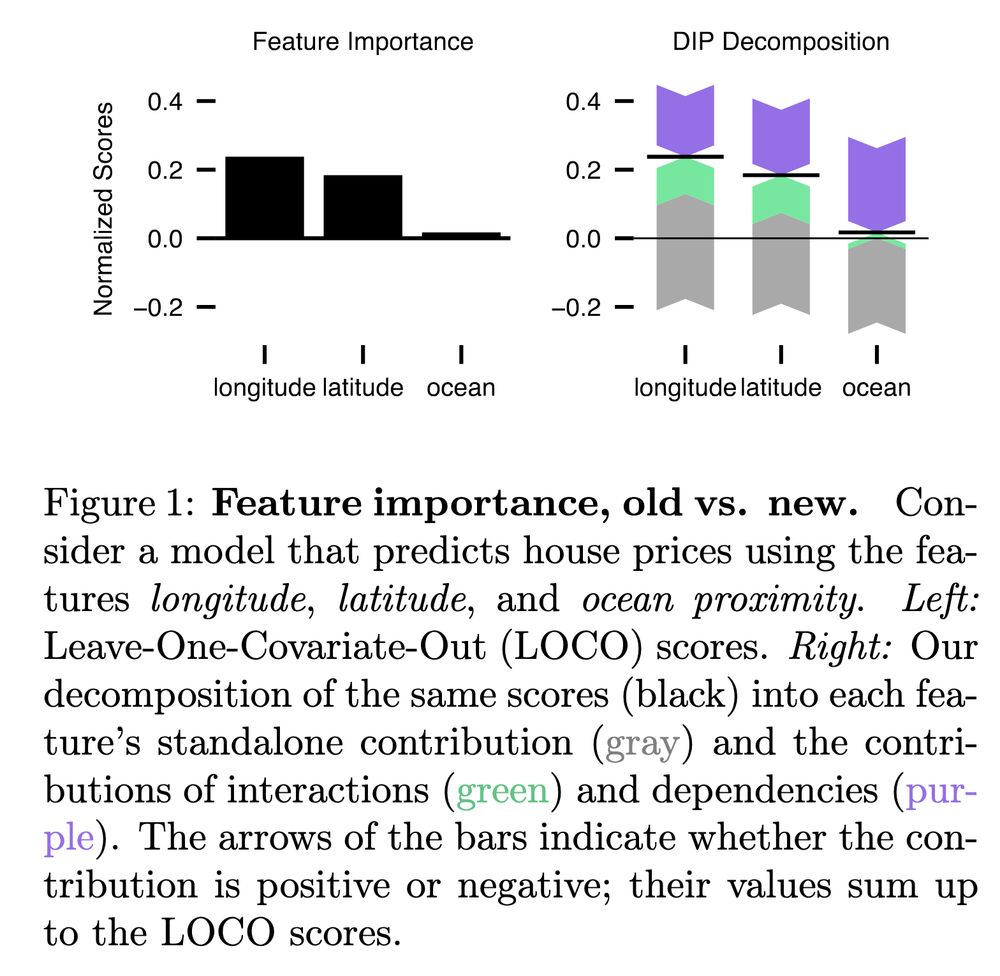

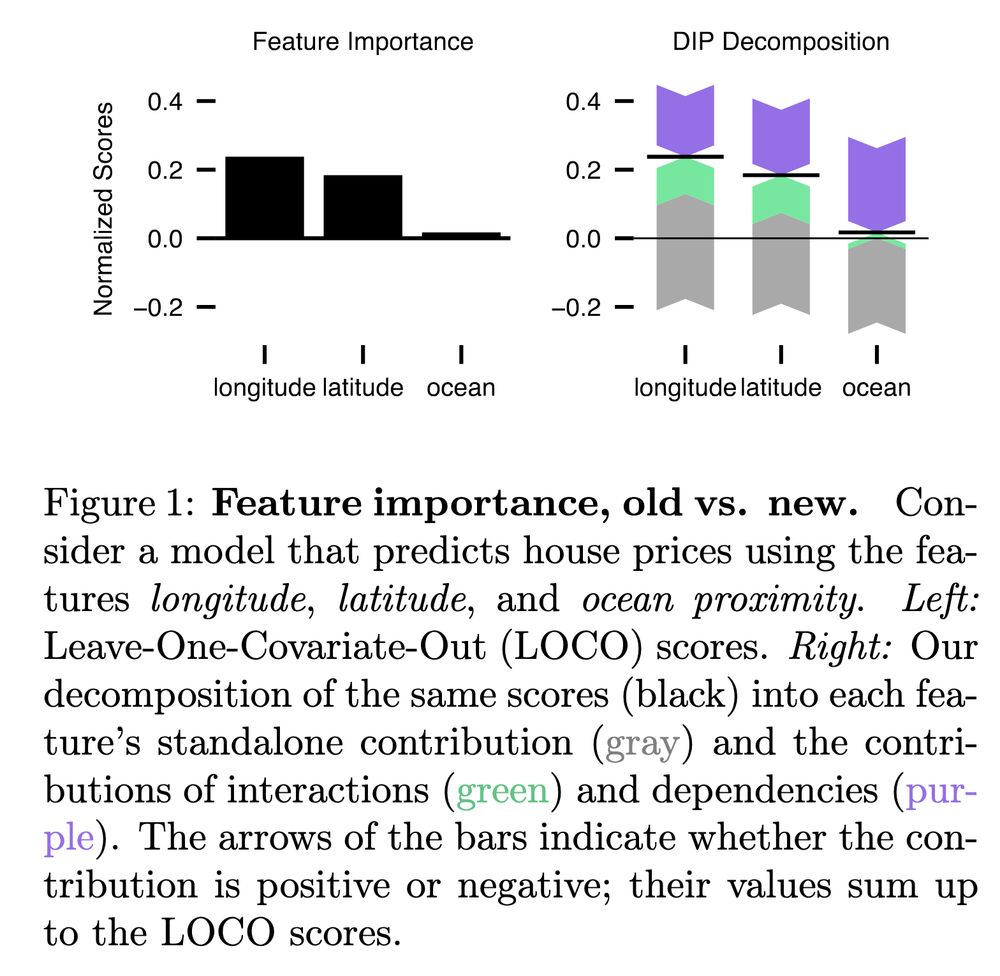

In many XAI applications, it is crucial to determine whether features contribute individually or only when combined. However, existing methods fail to reveal cooperations since they entangle individual contributions with those made via interactions and dependencies. We show how to disentangle them!

07.07.2025 15:37 — 👍 17 🔁 3 💬 1 📌 2

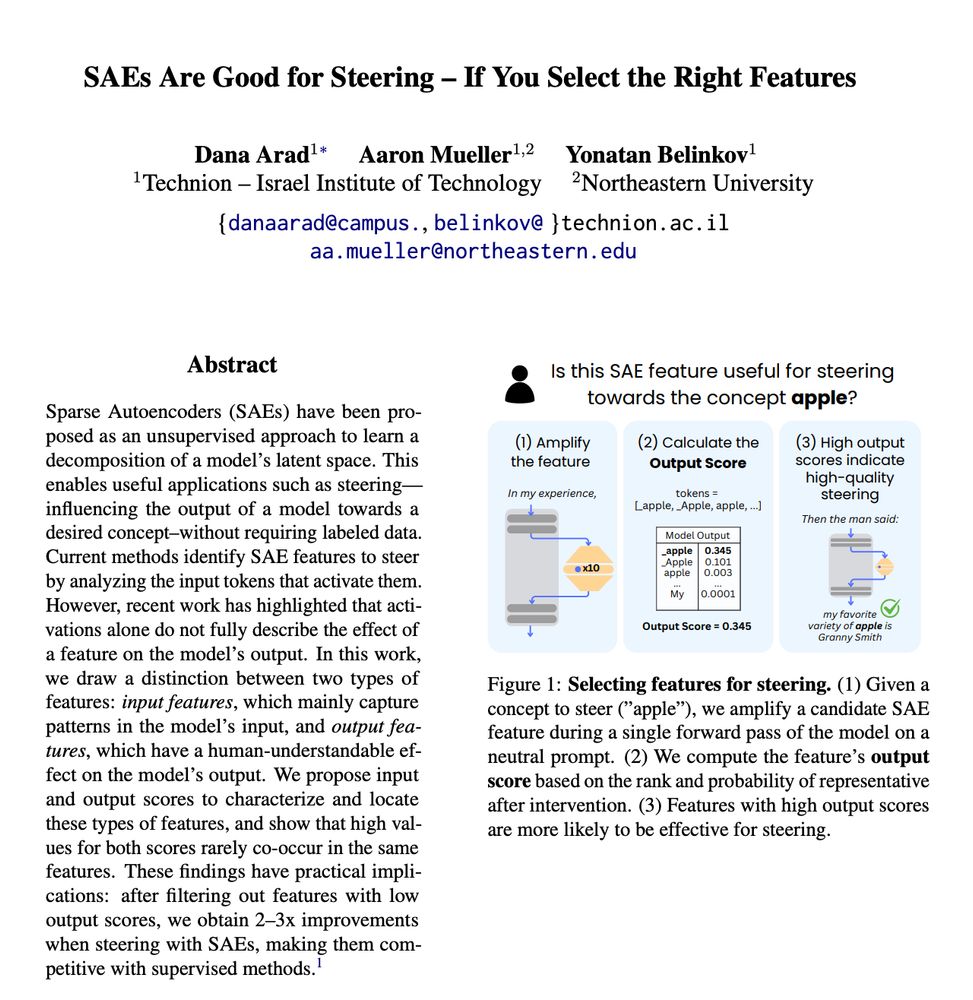

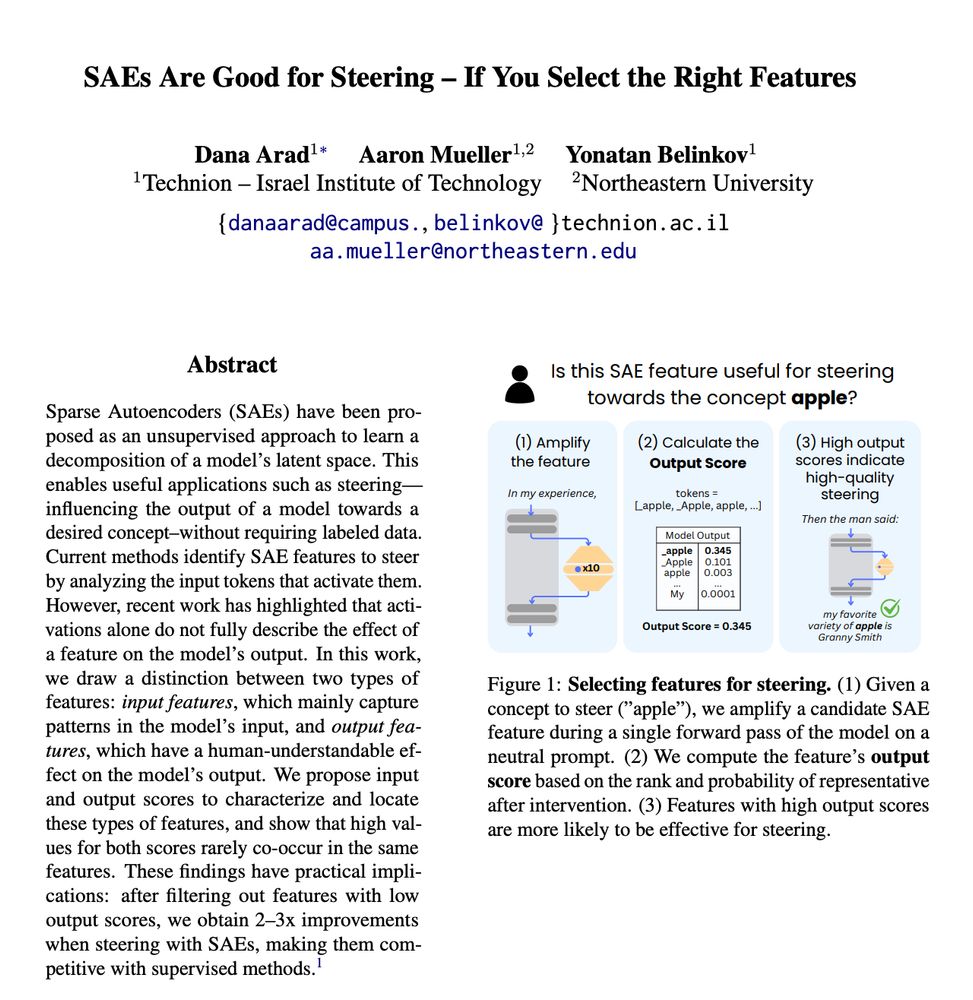

Tried steering with SAEs and found that not all features behave as expected?

Check out our new preprint - "SAEs Are Good for Steering - If You Select the Right Features" 🧵

27.05.2025 16:06 — 👍 17 🔁 6 💬 2 📌 3

How do language models track mental states of each character in a story, often referred to as Theory of Mind?

We reverse-engineered how LLaMA-3-70B-Instruct handles a belief-tracking task and found something surprising: it uses mechanisms strikingly similar to pointer variables in C programming!

24.06.2025 17:13 — 👍 58 🔁 19 💬 2 📌 1

VLMs perform better on questions about text than when answering the same questions about images - but why? and how can we fix it?

In a new project led by Yaniv (@YNikankin on the other app), we investigate this gap from a mechanistic perspective, and use our findings to close a third of it! 🧵

26.06.2025 10:40 — 👍 6 🔁 4 💬 1 📌 0

Have you heard about this year's shared task? 📢

Mechanistic Interpretability (MI) is quickly advancing, but comparing methods remains a challenge. This year at #BlackboxNLP, we're introducing a shared task to rigorously evaluate MI methods in language models 🧵

23.06.2025 14:45 — 👍 16 🔁 4 💬 1 📌 1

Our position paper on algorithmic explanations is out—excited to share it! 🙌

Proud of this collaborative effort toward a scientifically grounded understanding of generative AI.

@tuberlin.bsky.social @bifold.berlin @msftresearch.bsky.social @UCSD & @UCLA

20.06.2025 17:12 — 👍 18 🔁 8 💬 1 📌 0

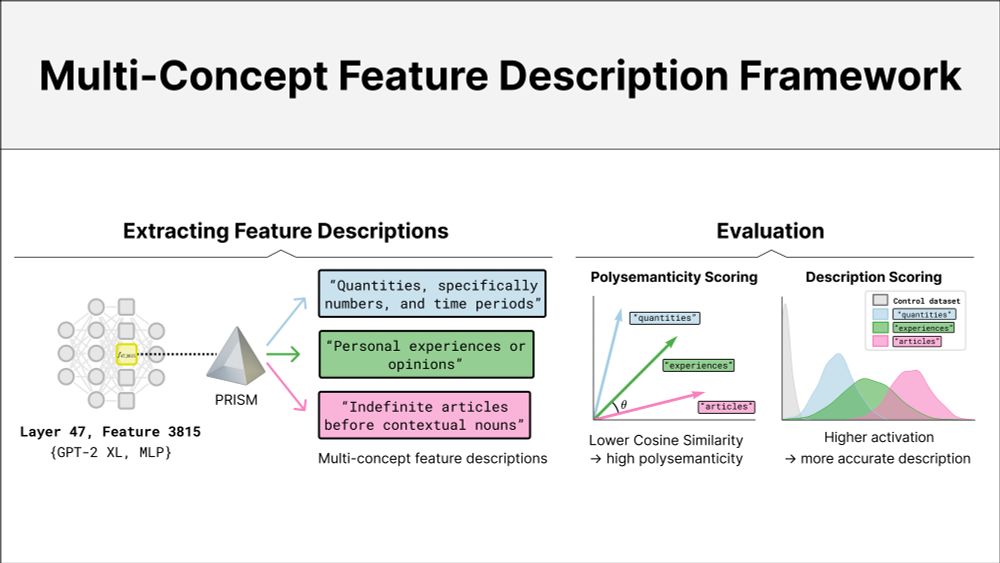

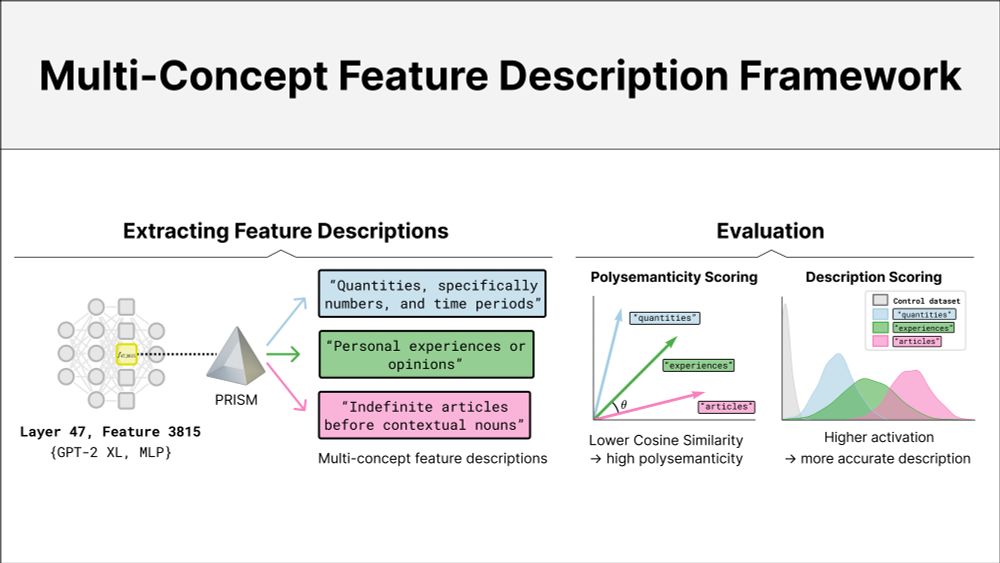

🔍 When do neurons encode multiple concepts?

We introduce PRISM, a framework for extracting multi-concept feature descriptions to better understand polysemanticity.

📄 Capturing Polysemanticity with PRISM: A Multi-Concept Feature Description Framework

arxiv.org/abs/2506.15538

🧵 (1/7)

19.06.2025 15:18 — 👍 37 🔁 12 💬 1 📌 3

🚨 New preprint! Excited to share our work on extracting and evaluating the potentially many feature descriptions of language models

👉 arxiv.org/abs/2506.15538

19.06.2025 16:44 — 👍 19 🔁 4 💬 0 📌 0

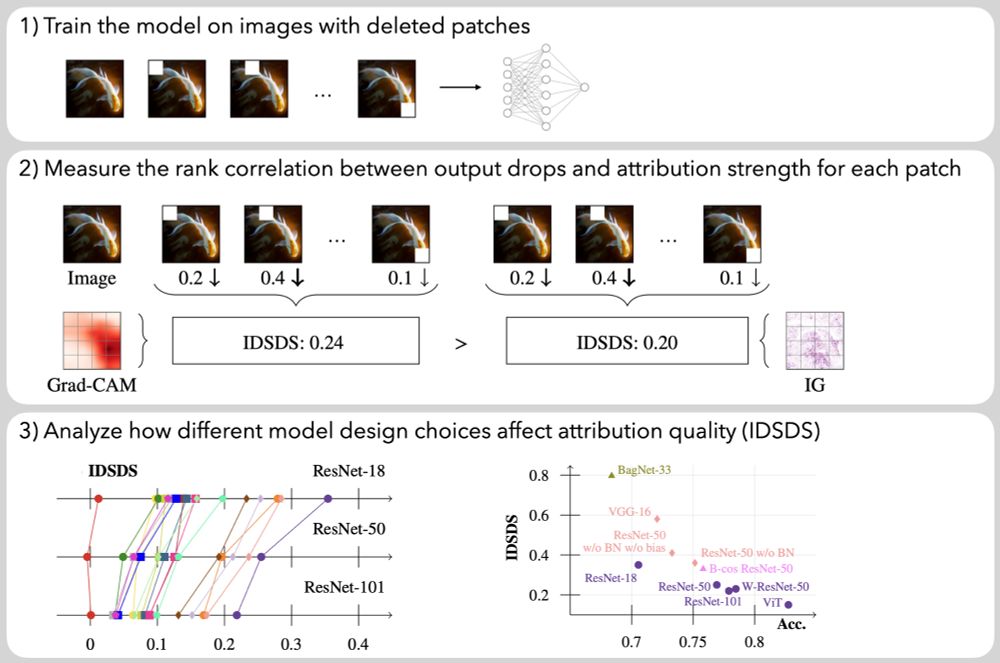

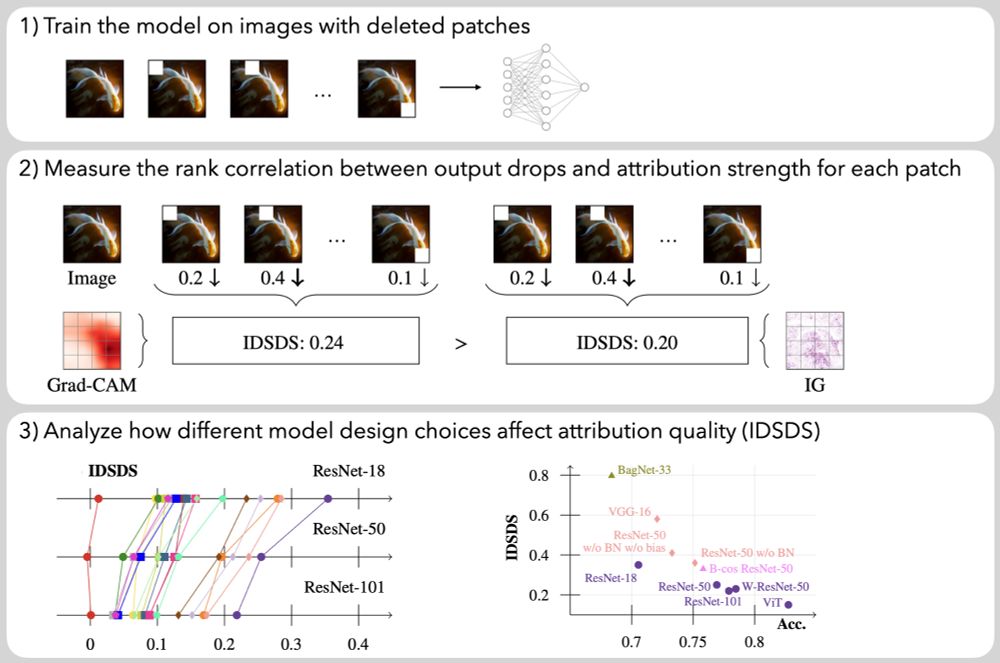

Want to learn about how model design choices affect the attribution quality of vision models? Visit our #NeurIPS2024 poster on Friday afternoon (East Exhibition Hall A-C #2910)!

Paper: arxiv.org/abs/2407.11910

Code: github.com/visinf/idsds

13.12.2024 10:10 — 👍 21 🔁 7 💬 1 📌 1

Interpretability Techniques for Speech Models — Tutorial @ Interspeech 2025

The @interspeech.bsky.social early registration deadline is coming up in a few days!

Want to learn how to analyze the inner workings of speech processing models? 🔍 Check out the programme for our tutorial:

interpretingdl.github.io/speech-inter... & sign up through the conference registration form!

13.06.2025 05:18 — 👍 27 🔁 10 💬 1 📌 2

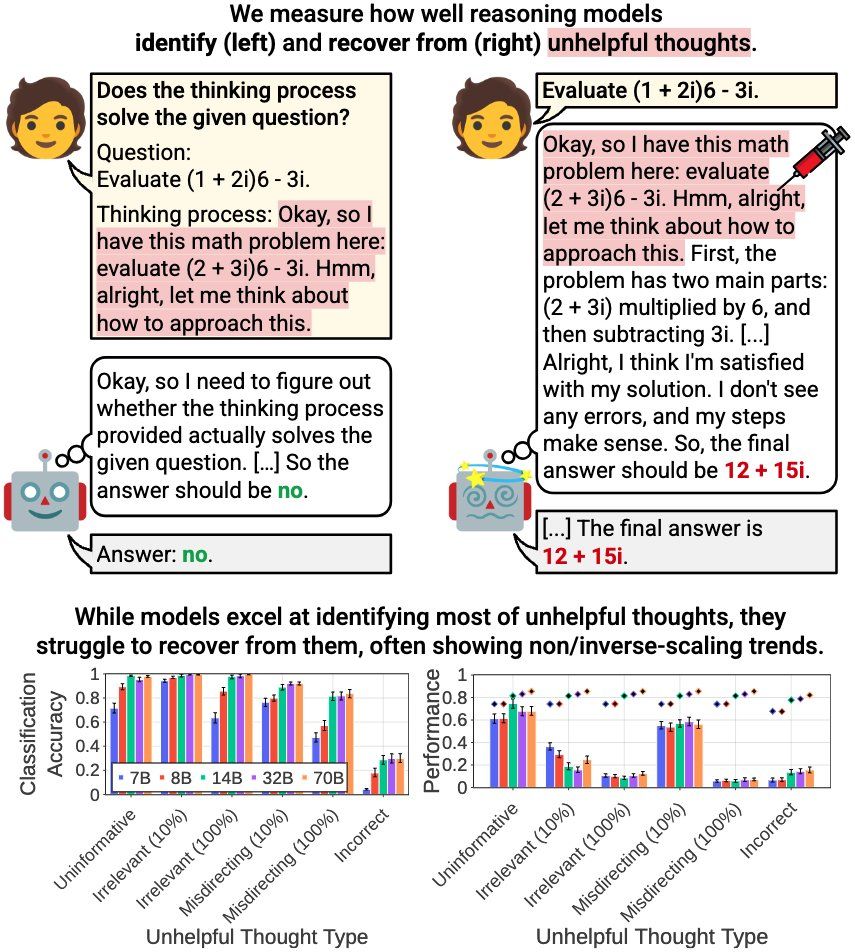

🚨 New Paper 🚨

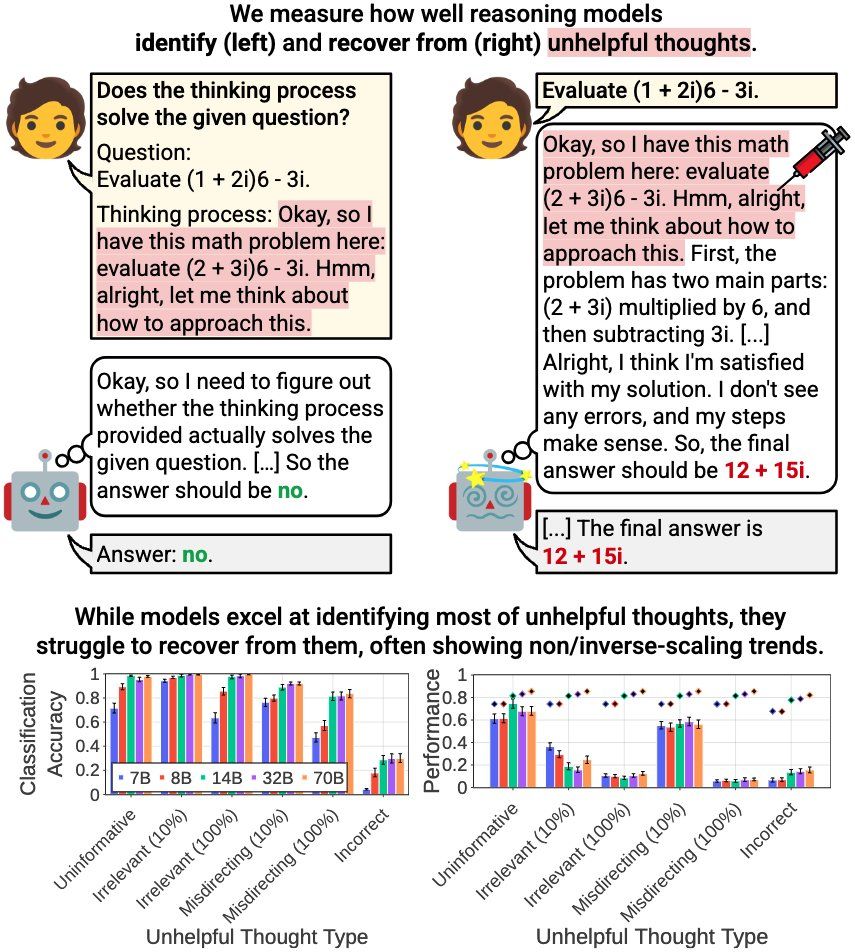

How effectively do reasoning models reevaluate their thought? We find that:

- Models excel at identifying unhelpful thoughts but struggle to recover from them

- Smaller models can be more robust

- Self-reevaluation ability is far from true meta-cognitive awareness

1/N 🧵

13.06.2025 16:15 — 👍 12 🔁 3 💬 1 📌 0

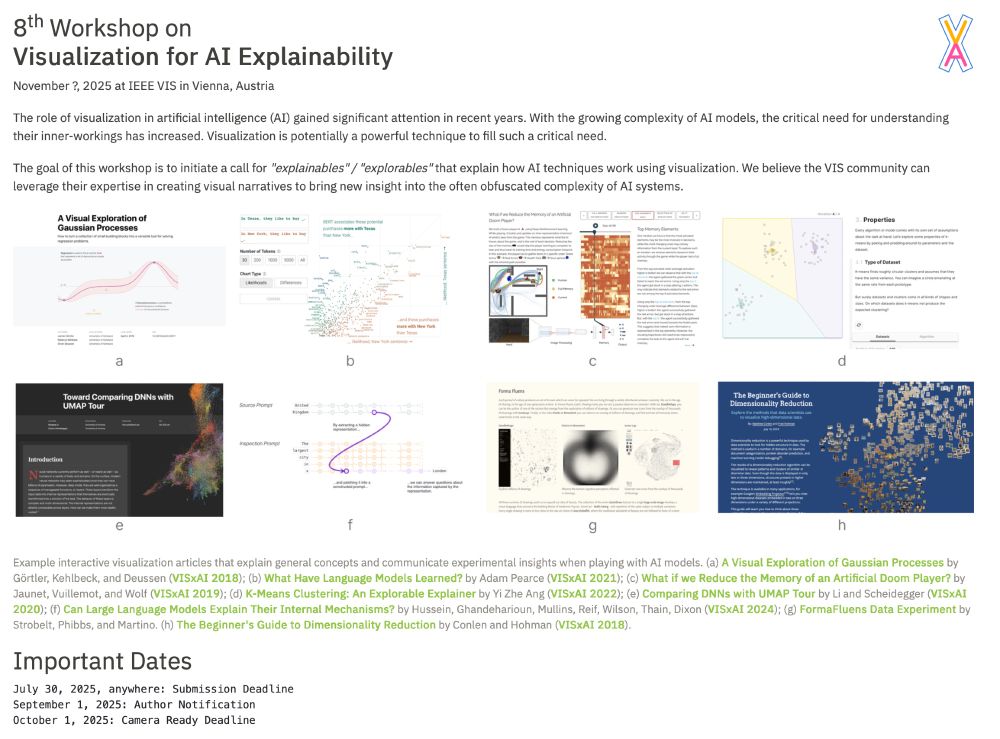

Join us in advancing explainability in the rapidly evolving landscape of AI! We welcome papers on novel explanation methods, scaling existing xAI approaches, innovations for foundational models, and beyond.

14.06.2025 15:53 — 👍 5 🔁 2 💬 1 📌 1

Call for papers is out for the 2nd Explainable Computer Vision - Quo Vadis? (eXCV) workshop in #XAI at #ICCV2025!

More details can be found here: excv-workshop.github.io

Submission Site: Coming Soon!

@iccv.bsky.social @xai-research.bsky.social

14.06.2025 15:53 — 👍 3 🔁 2 💬 1 📌 0

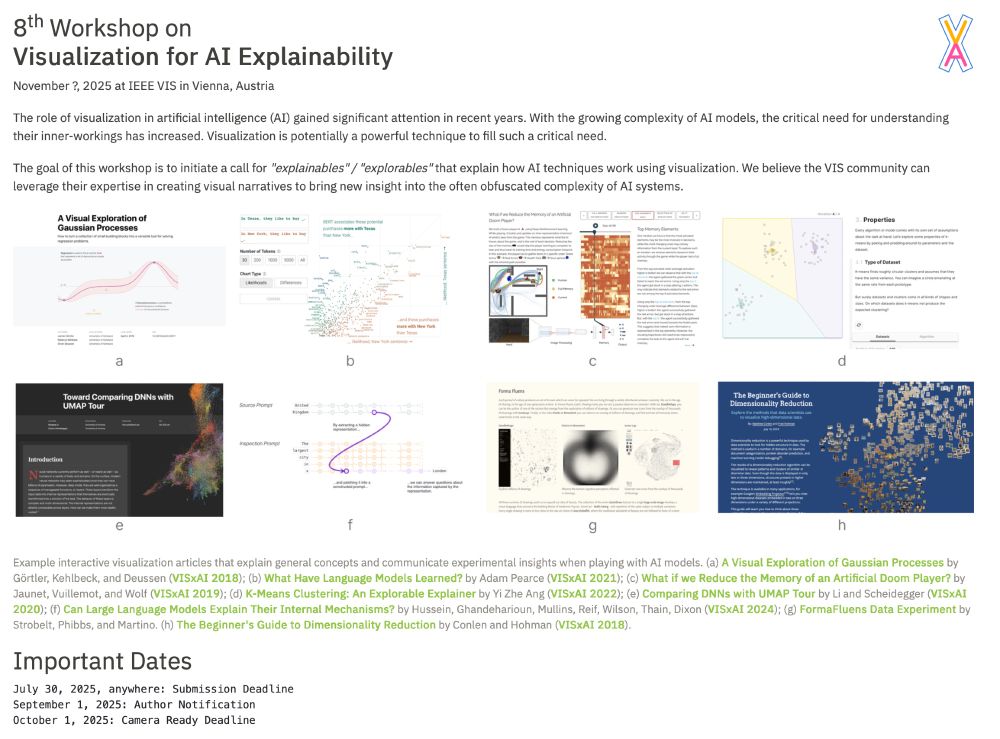

It's on! In its 8th year, the original VISxAI workshop wants your contributions for explaining ML/AI/GenAI principles. Please consider submitting.

Deadline: July 30

webpage: visxai.io

#ML #AI #GenAI #Visualization

11.06.2025 14:07 — 👍 7 🔁 6 💬 0 📌 0

PhD researcher. Philosophy of Explainable AI, Responsible AI, and Computer Vision.

Waiting on a robot body. All opinions are universal and held by both employers and family.

Literally a professor. Recruiting students to start my lab.

ML/NLP/they/she.

Interpretable ML | Computational and Systems Biology | Postdoc at Cancer Early Detection Advanced Research (CEDAR) Center @ohsuknight.bsky.social

NLP Researcher | CS PhD Candidate @ Technion

Machine Learning Researcher | PhD Candidate @ucsd_cse | @trustworthy_ml

chhaviyadav.org

Working on ethics and bias in NLP @CardiffNLP #NLP #NLProc

The largest workshop on analysing and interpreting neural networks for NLP.

BlackboxNLP will be held at EMNLP 2025 in Suzhou, China

blackboxnlp.github.io

Assistant Professor at UMD College Park | Past: JPMorgan, CMU, IBM Research, Dataminr, IIT KGP | Trustworthy ML, Interpretability, Fairness, Information Theory, Optimization, Stat ML

PhD student @LIG | Causal abstraction, interpretability & LLMs

PhD candidate @ UIUC | NLP, interpretability, cognitive science | http://ahdavies6.github.io

Responsible AI & Human-AI Interaction

Currently: Research Scientist at Apple

Previously: Princeton CS PhD, Yale S&DS BSc, MSR FATE & TTIC Intern

https://sunniesuhyoung.github.io/

Assistant Professor at Trinity College Dublin | Previously Oxford | Human-Centered Machine Learning

https://e-delaney.github.io/

Professor @ NTNU, Research Director @ NorwAI

Research on AI, CBR, XAI, intelligence systems, AI+Health

Views are my own

Senior Researcher Machine Learning at BIFOLD | TU Berlin 🇩🇪

Prev at IPAM | UCLA | BCCN

Interpretability | XAI | NLP & Humanities | ML for Science

👩‍💻 Postdoc @ Technion, interested in Interpretability in IR 🔎 and NLP 💬

AI/ML Engineer at @netapp.com | Keynote Speaker | Building Scalable ML Architectures & Conversational AI Solutions | Python | Go | C++

Info: https://linktr.ee/davidvonthenen

Senior Lecturer and Researcher @LMU_Muenchen working on #ExplainableAI / #interpretableML and #OpenML

We are "squIRreL", the Interpretable Representation Learning Lab based at IDLab - University of Antwerp & imec.

Research Areas: #RepresentationLearning, Model #Interpretability, #explainability, #DeepLearning

#ML #AI #XAI #mechinterp

Machine Learning • Fairness • Explainable ML • AutoML

http://guilhermealves.eti.br