AISTATS 2026 will be in Morocco!

30.07.2025 08:07

Shoutout to my collaborators Nikhil Ghosh and Bin Yu for their help with this project.

30.06.2025 21:26

✅ PLoP consistently outperforms other placement strategies (Attn, MLP)

✅ Works across different post-training scenarios: supervised fine-tuning, reinforcement learning

✅ Minimal computational overhead

In the worst case, it ties with the best manual approach. Usually, it's better.

30.06.2025 21:26

NFN measures how well each module of the pretrained model aligns with the finetuning task. It is cheap: it can be computed in a single forward pass. It comes from a large-width analysis of module-data alignment and is well suited to LoRA finetuning.

30.06.2025 21:26
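To make "alignment computed in one forward pass" a bit more concrete, here is a minimal sketch. It is NOT the NFN formula from the paper (the thread doesn't give it): `alignment_score` is a hypothetical stand-in that compares ‖Wx‖² for a module weight W and its input activations x against the value expected if x pointed in a uniformly random direction. The inputs are assumed to be activations captured during one forward pass on task data.

```python
import math


def matvec(W, x):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w_ij * x_j for w_ij, x_j in zip(row, x)) for row in W]


def norm(v):
    """Euclidean norm of a vector."""
    return math.sqrt(sum(v_i * v_i for v_i in v))


def alignment_score(W, inputs):
    """HYPOTHETICAL module-data alignment score (not the paper's NFN).

    Averages ||W x||^2 / (||W||_F^2 * ||x||^2 / d_in) over the inputs.
    The denominator is the expected ||W x||^2 when x has a uniformly random
    direction, so ~1 means "no special alignment", values above 1 mean the
    inputs hit W's dominant directions, values below 1 mean they miss them.
    """
    d_in = len(W[0])
    frob_sq = sum(w * w for row in W for w in row)
    total = 0.0
    for x in inputs:  # assumed: activations recorded in one forward pass
        baseline = frob_sq * norm(x) ** 2 / d_in
        total += norm(matvec(W, x)) ** 2 / baseline
    return total / len(inputs)


W = [[1.0, 0.0], [0.0, 0.0]]  # rank-one weight: only the first input direction matters
print(alignment_score(W, [[1.0, 0.0]]))  # aligned input    → 2.0
print(alignment_score(W, [[0.0, 1.0]]))  # orthogonal input → 0.0
```

Normalising by the random-direction baseline keeps scores comparable across modules with different widths and weight scales, which is what you'd want before ranking modules against each other.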

Our solution: PLoP (Precise LoRA Placement) 🎯

Instead of guessing, it automatically identifies the optimal modules for LoRA placement based on a notion of module-data alignment that we call NFN (Normalised Feature Norms).

30.06.2025 21:26
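A possible shape for the selection step, sketched under assumptions: given one alignment score per module, average the scores per module type (q_proj, v_proj, up_proj, ...) and keep the k best types. The thread doesn't say whether PLoP favours high or low scores, so the direction is exposed as a flag (`prefer_low`). `select_module_types`, the module names, and the scores are all hypothetical.

```python
from collections import defaultdict
from statistics import mean


def select_module_types(scores, k, prefer_low=True):
    """HYPOTHETICAL placement rule: pick k module types from per-module scores.

    scores: dict like {"layers.0.q_proj": 0.9, ...}; the module type is the
    last dotted component, and scores are averaged per type across layers.
    prefer_low: assumed direction; True ranks the least-aligned types first.
    """
    by_type = defaultdict(list)
    for name, score in scores.items():
        by_type[name.rsplit(".", 1)[-1]].append(score)
    type_scores = {t: mean(v) for t, v in by_type.items()}
    ranked = sorted(type_scores, key=type_scores.get, reverse=not prefer_low)
    return ranked[:k]


scores = {  # made-up scores for a 2-layer model
    "layers.0.q_proj": 0.9, "layers.1.q_proj": 1.1,
    "layers.0.v_proj": 1.5, "layers.1.v_proj": 1.3,
    "layers.0.up_proj": 0.4, "layers.1.up_proj": 0.6,
}
print(select_module_types(scores, k=2))                    # → ['up_proj', 'q_proj']
print(select_module_types(scores, k=2, prefer_low=False))  # → ['v_proj', 'q_proj']
```

The returned list of names has the shape that Hugging Face PEFT's `LoraConfig(target_modules=...)` accepts, which is one way a selection like this could be wired into training.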

❌ Original LoRA paper: "Prioritize attention"

❌ Other papers: "Actually, put them in MLP"

❌ Everyone: just guessing and trying common target modules

30.06.2025 21:26

LoRA is amazing for finetuning large models cheaply, but WHERE you place the adapters makes a huge difference. Most people just guess where to put them (attention, MLP, etc.).

Meet "PLoP" (Precise LoRA Placement) 🎯, our new method for automatic LoRA placement 🧵

30.06.2025 21:26

The recent surge in available Research Scientist positions is correlated with the growing need for innovative approaches to scale and improve Large Language Models (LLMs). This trend is also driven by factors such as researchers leaving established companies for startups!

19.01.2025 01:51

YouTube video by Rodrigo Amarante - Topic

Tuyo (Narcos Theme) (Extended Version)

By far, the best intro song in the history of humankind

www.youtube.com/watch?v=zNPX...

17.01.2025 01:00

Are we hitting a wall with AI scaling? 🤔

That "plateau" you're seeing in scaling law charts might not be a fundamental limit, but a sign of suboptimal scaling strategies! I wrote a blogpost about this:

www.soufianehayou.com/blog/plateau...

10.01.2025 23:40

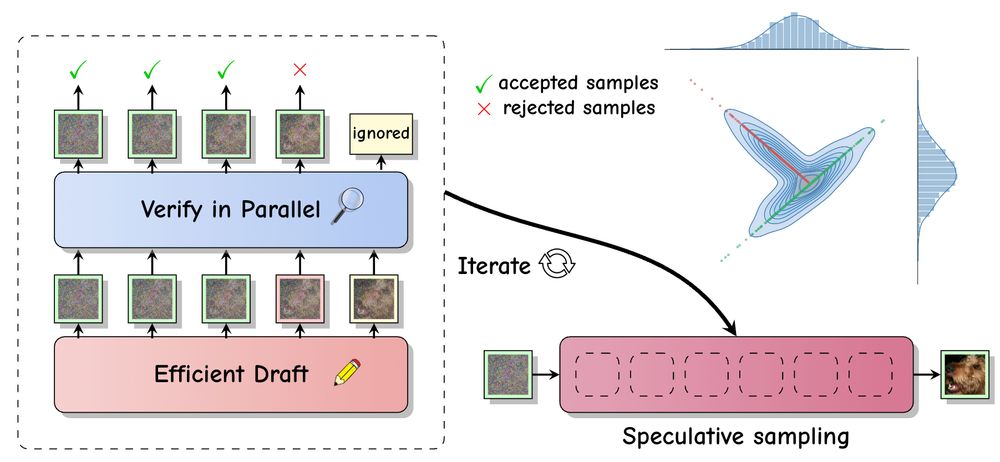

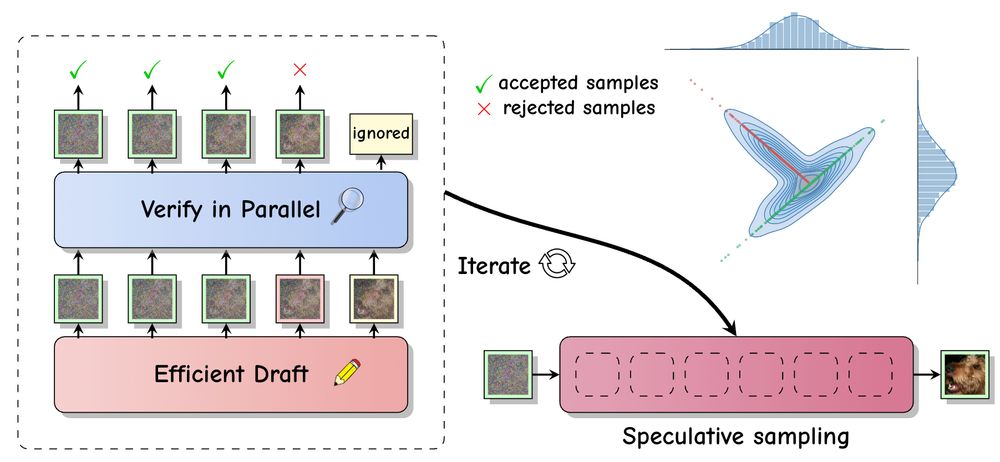

Speculative sampling accelerates inference in LLMs by drafting future tokens, which are then verified in parallel. With @vdebortoli.bsky.social, A. Galashov & @arthurgretton.bsky.social, we extend this approach to (continuous-space) diffusion models: arxiv.org/abs/2501.05370

10.01.2025 16:30
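For readers new to speculative sampling, here is a minimal sketch of the standard verification rule for discrete LLM tokens: accept a drafted token x with probability min(1, p(x)/q(x)), where p is the target model and q the draft model; on the first rejection, resample from the normalised residual max(p - q, 0) and stop. It does not cover the continuous-space diffusion extension in the linked paper, and `verify_drafts` and its arguments are illustrative names.

```python
import random


def verify_drafts(target_probs, draft_probs, drafts, rng):
    """Verify drafted tokens with standard (discrete) speculative sampling.

    target_probs: len(drafts) + 1 distributions, all from ONE parallel
                  pass of the target model over the drafted prefix.
    draft_probs:  the draft model's distribution at each drafted position.
    drafts:       tokens sampled from draft_probs (so q[x] > 0 for each).
    Returns the tokens to append; the output has the same distribution as
    sampling from the target model directly.
    """
    out = []
    vocab = range(len(target_probs[0]))
    for p, q, x in zip(target_probs, draft_probs, drafts):
        if rng.random() < min(1.0, p[x] / q[x]):
            out.append(x)  # accept the drafted token
            continue
        # Rejected: resample from the normalised residual max(p - q, 0), then stop.
        residual = [max(pi - qi, 0.0) for pi, qi in zip(p, q)]
        out.append(rng.choices(vocab, weights=residual)[0])
        return out
    # Every draft accepted: take one bonus token from the last target distribution.
    out.append(rng.choices(vocab, weights=target_probs[len(drafts)])[0])
    return out


rng = random.Random(0)
p = [0.6, 0.3, 0.1]  # target model at one position (made-up numbers)
q = [0.3, 0.6, 0.1]  # draft model at the same position
print(verify_drafts([p, p], [q], [1], rng))
```

The speed-up comes from the target model scoring all drafted positions in one pass; the accept/resample rule is what keeps the output distribution exact.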

People compare AI to past breakthroughs 🔄 (the industrial revolution, the internet, etc.), but there's a crucial difference: in previous advancements, humans remained the most intelligent beings. This time, we're creating something that could surpass us 🤖. It's a singularity!⚡️

29.12.2024 22:27

Professor at NYU; Chief AI Scientist at Meta.

Researcher in AI, Machine Learning, Robotics, etc.

ACM Turing Award Laureate.

http://yann.lecun.com

Senior Staff Research Scientist @Google DeepMind, previously Stats Prof @Oxford Uni - interested in Computational Statistics, Generative Modeling, Monte Carlo methods, Optimal Transport.

Entrepreneur

Costplusdrugs.com

Research Director, Founding Faculty, Canada CIFAR AI Chair @VectorInst.

Full Prof @UofT - Statistics and Computer Sci. (x-appt) danroy.org

I study assumption-free prediction and decision making under uncertainty, with inference emerging from optimality.

Recently a principal scientist at Google DeepMind. Joining Anthropic. Most (in)famous for inventing diffusion models. AI + physics + neuroscience + dynamical systems.

AI professor at Caltech. General Chair ICLR 2025.

http://www.yisongyue.com

Professor, Programmer in NYC.

Cornell, Hugging Face 🤗

Research fellow @OxfordStats @OxCSML, spent time at FAIR and MSR

Former quant 📈 (@GoldmanSachs), former former gymnast 🤸‍♀️

My opinions are my own

🇧🇬-🇬🇧 sh/ssh

Cofounded and lead PyTorch at Meta. Also dabble in robotics at NYU.

AI is delicious when it is accessible and open-source.

http://soumith.ch

work on theoretical foundations of AI, MLLM reliability/Eval, optimization, high dimensional probability/statistics, AI for science/healthcare; director of center on AIF4S @USC 🚲🏔️🥾🏊‍♂️

Computer science professor at Carnegie Mellon. Researcher in machine learning. Algorithmic foundations of responsible AI (e.g., privacy, uncertainty quantification), interactive learning (e.g., RLHF).

https://zstevenwu.com/

Cofounder & CTO @ Abridge, Raj Reddy Associate Prof of ML @ CMU, occasional writer, relapsing 🎷, creator of d2l.ai & approximatelycorrect.com

DeepMind Professor of AI @Oxford

Scientific Director @Aithyra

Chief Scientist @VantAI

ML Lead @ProjectCETI

geometric deep learning, graph neural networks, generative models, molecular design, proteins, bio AI, 🐎 🎶

Machine learning researcher. Professor in ML department at CMU.

Assistant Prof at Penn CIS | Postdoc at Microsoft Research | PhD from UT Austin CS | Co-founder LeT-All

I work on AI at OpenAI.

Former VP AI and Distinguished Scientist at Microsoft.

Professor @UCLA, Research Scientist @ByteDance | Recent work: SPIN, SPPO, DPLM 1/2, GPM, MARS | Opinions are my own

Professor of Applied Physics at Stanford | Venture Partner a16z | Research in AI, Neuroscience, Physics

https://Answer.AI & https://fast.ai founding CEO; previous: hon professor @ UQ; leader of masks4all; founding CEO Enlitic; founding president Kaggle; various other stuff…