10/ Animal-like autonomy—flexibly adapting to new environments without supervision—is a key ingredient of general intelligence.

Our work shows this hinges on 1) a predictive world model and 2) memory primitives that ground these predictions in ethologically relevant contexts.

05.06.2025 20:03 —

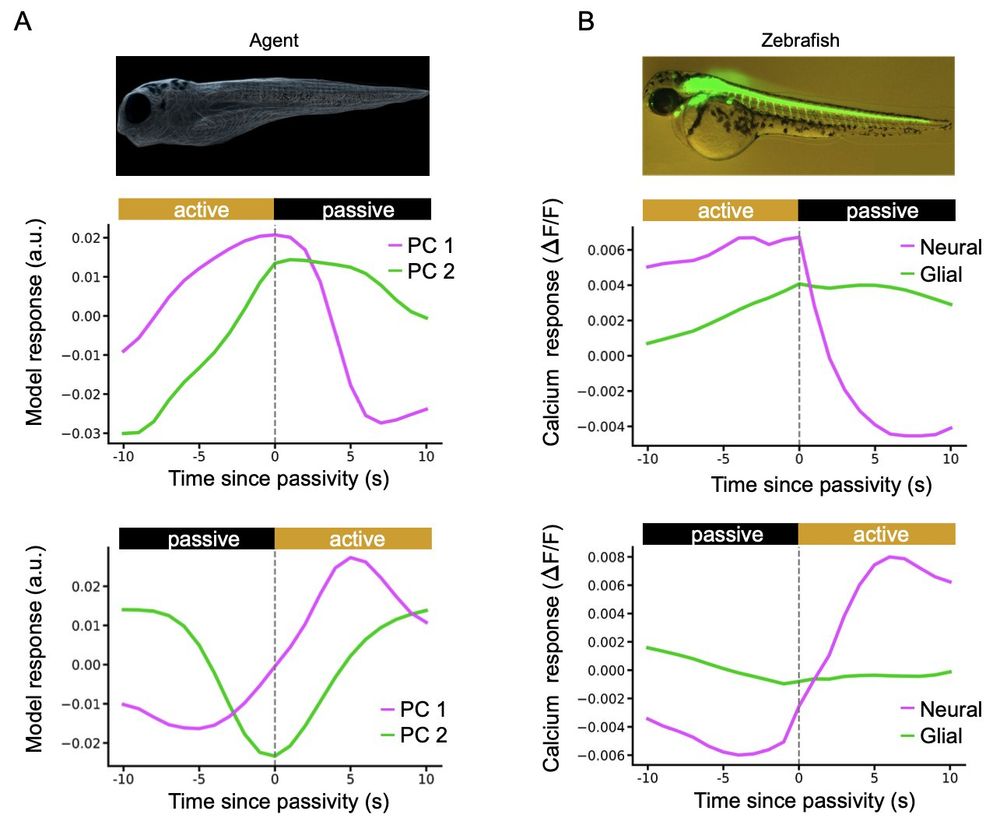

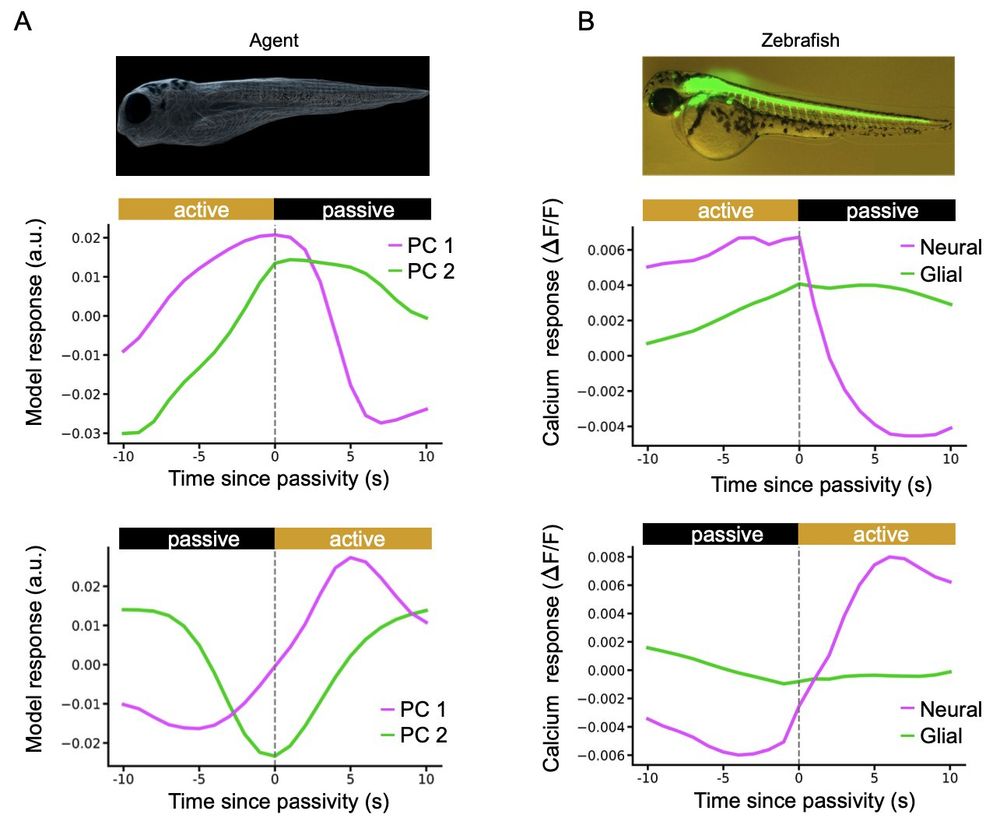

9/ Finally, we show that the neural-glial circuit proposed in Mu et al. (2019) emerges from the latent dynamics of 3M-Progress agents.

Thanks to my collaborators Alyn Kirsch and Felix Pei, and to Xaq Pitkow for his continued support!

Paper link: arxiv.org/abs/2506.00138

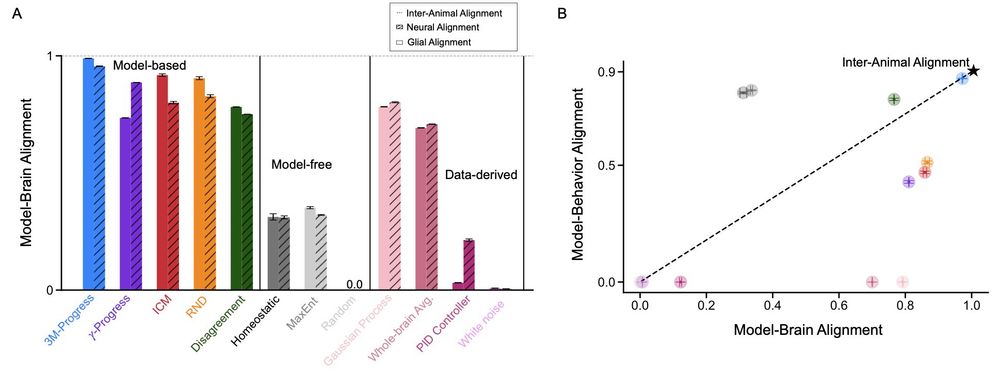

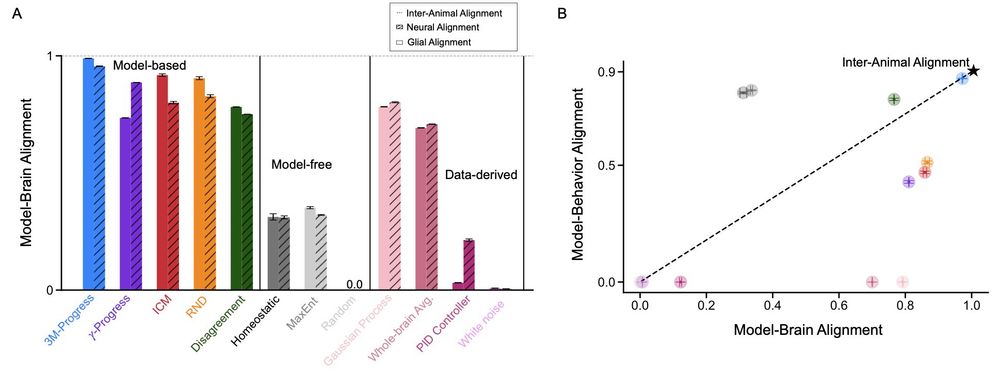

8/ 3M-Progress agents achieve the best alignment with brain data compared to existing intrinsic drives and data-driven controls. Together with the behavioral alignment, 3M-Progress agents saturate inter-animal consistency and thus pass the NeuroAI Turing test on this dataset.
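
The logic of scoring a model against inter-animal consistency can be sketched with a deliberately simplified metric. This sketch assumes each animal (and the model) contributes one time series of equal length and uses plain Pearson correlation. Real neural-alignment analyses typically fit mappings across many neurons and correct for noise, so the function names and the pass criterion below are illustrative assumptions, not the paper's procedure.

```python
from __future__ import annotations

import math
from itertools import combinations


def pearson(x: list[float], y: list[float]) -> float:
    """Pearson correlation of two equal-length sequences."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def inter_animal_consistency(animals: list[list[float]]) -> float:
    """Mean pairwise correlation between animals: the ceiling a model is compared to."""
    pairs = list(combinations(animals, 2))
    return sum(pearson(a, b) for a, b in pairs) / len(pairs)


def model_to_animal(model: list[float], animals: list[list[float]]) -> float:
    """Mean correlation between the model's trace and each animal's trace."""
    return sum(pearson(model, a) for a in animals) / len(animals)


def passes_toy_turing_test(model: list[float], animals: list[list[float]]) -> bool:
    """Hypothetical criterion: the model agrees with the animals at least as
    well as the animals agree with each other."""
    return model_to_animal(model, animals) >= inter_animal_consistency(animals)
```

In this toy framing, "saturating" inter-animal consistency means the ratio `model_to_animal(...) / inter_animal_consistency(...)` is at or near 1.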

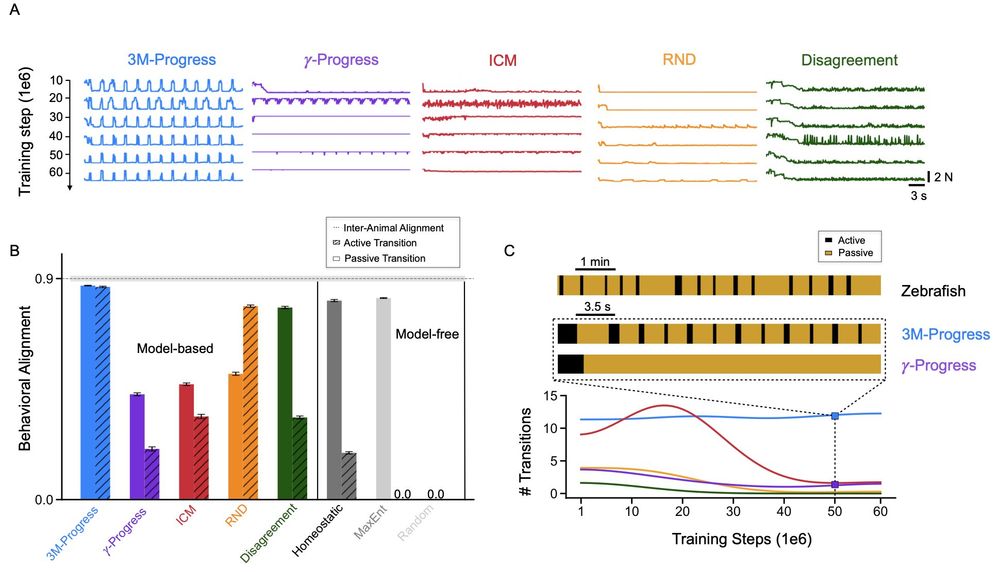

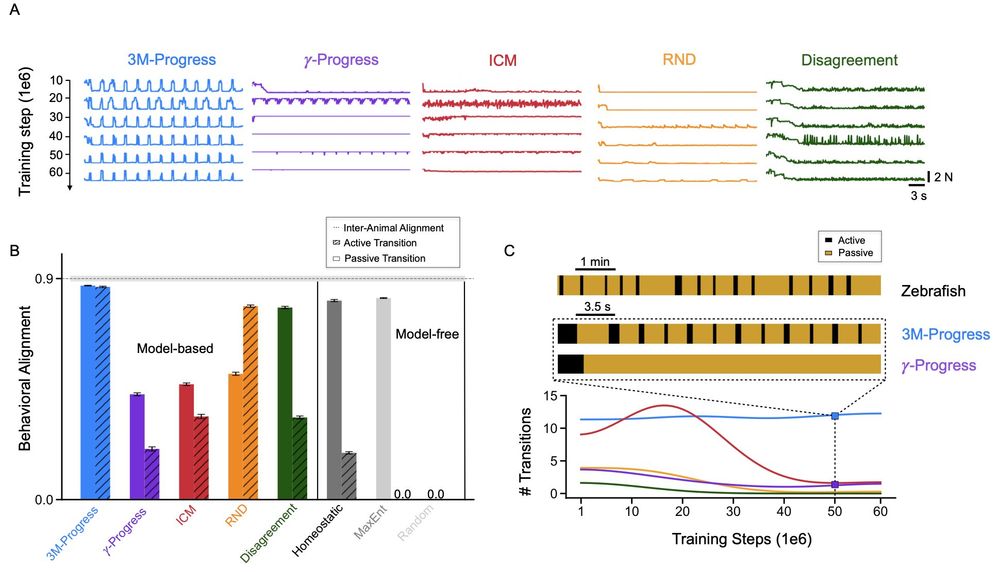

7/ 3M-Progress agents exhibit stable transitions between active and passive states that closely match real zebrafish behavior.
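
One simple way to check whether an agent's active/passive switching resembles an animal's is to compare empirical transition statistics. The sketch below assumes behavior has already been segmented into binary labels per time bin (0 = passive, 1 = active) and treats the sequence as a two-state Markov chain. The labeling, the Markov assumption, and the helper names are hypothetical and not necessarily the analysis used in the paper.

```python
from __future__ import annotations


def transition_matrix(states: list[int]) -> list[list[float]]:
    """Empirical P[i][j] = Pr(next state = j | current state = i) for states 0/1."""
    counts = [[0, 0], [0, 0]]
    for cur, nxt in zip(states, states[1:]):
        counts[cur][nxt] += 1
    return [[c / sum(row) if sum(row) else 0.0 for c in row] for row in counts]


def mean_dwell_times(states: list[int]) -> list[float]:
    """Expected bout length per state under the Markov assumption: 1 / (1 - P[i][i])."""
    p = transition_matrix(states)
    return [1.0 / (1.0 - p[i][i]) if p[i][i] < 1.0 else float("inf") for i in (0, 1)]


def transition_gap(agent: list[int], animal: list[int]) -> float:
    """Largest absolute difference between the agent's and the animal's transition matrices."""
    pa, pb = transition_matrix(agent), transition_matrix(animal)
    return max(abs(pa[i][j] - pb[i][j]) for i in (0, 1) for j in (0, 1))
```

For the sequence `[0, 0, 0, 1, 1, 0, 0, 0, 1, 1]`, the passive state persists with probability 4/6 and the active state with probability 2/3, giving a mean bout length of about 3 bins in each state.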

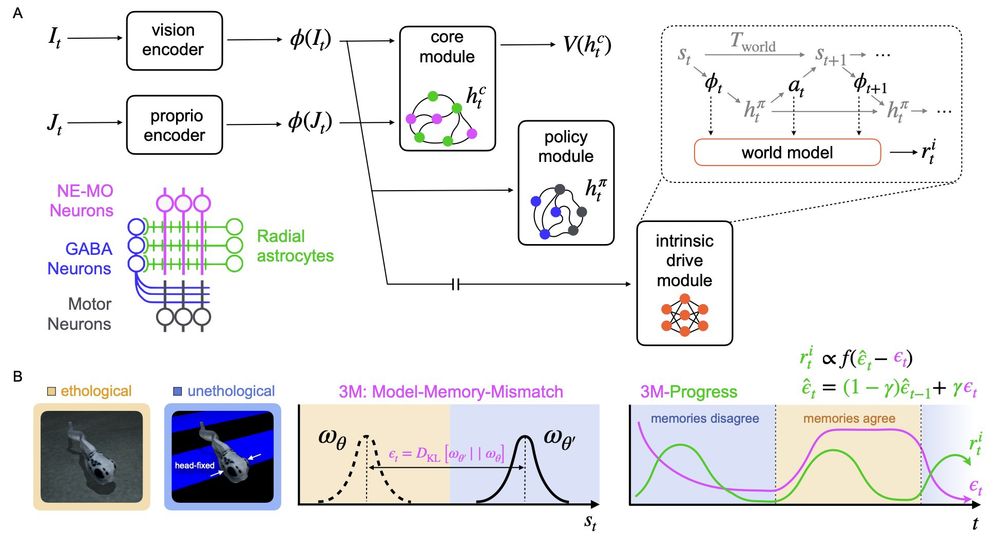

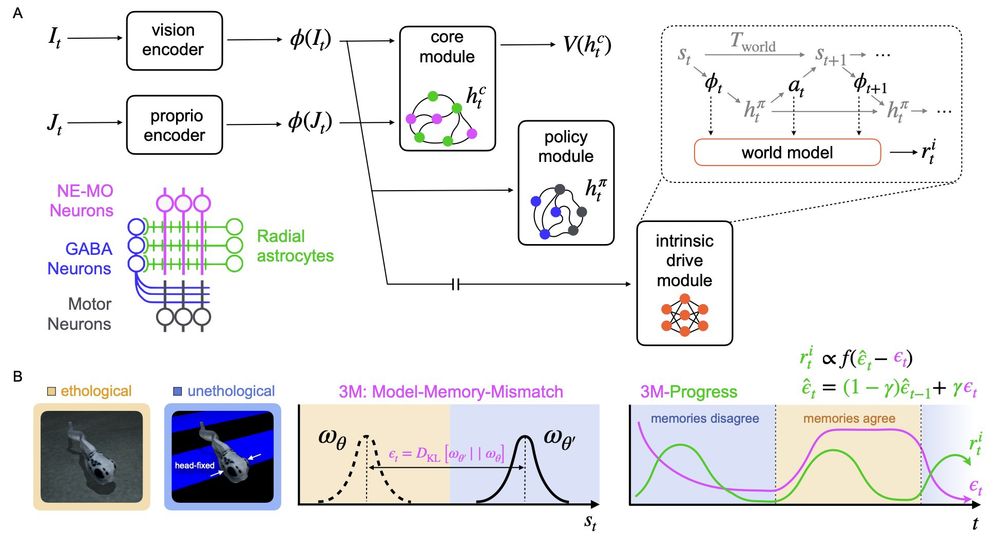

6/ The agent learns a forward dynamics model and measures the divergence between this model and a frozen ethological memory. This model-memory-mismatch (3M) is tracked over time (w/ gamma-progress) to form the final intrinsic reward.
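
The reward described in this post can be sketched in a few lines. This is a toy illustration under stated assumptions, not the paper's implementation: the forward model and the frozen memory are both hypothetical linear predictors `next_state ≈ w_s * state + w_a * action`, the divergence is the squared gap between their predictions, and gamma-progress is approximated by keeping a slow exponential-moving-average copy of the online model and rewarding the difference in mismatch between that lagged copy and the current model. The sign convention (reward > 0 when the mismatch is shrinking) is also our assumption.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class LinearModel:
    """Hypothetical forward model: next_state ≈ w_s * state + w_a * action."""
    w_s: float = 0.0
    w_a: float = 0.0

    def predict(self, state: float, action: float) -> float:
        return self.w_s * state + self.w_a * action


def mismatch(model: LinearModel, memory: LinearModel,
             state: float, action: float) -> float:
    """Assumed divergence: squared gap between model and memory predictions."""
    return (model.predict(state, action) - memory.predict(state, action)) ** 2


class ThreeMProgressSketch:
    """Toy gamma-progress signal on the model-memory mismatch (3M)."""

    def __init__(self, memory: LinearModel, gamma: float = 0.9, lr: float = 0.1):
        self.memory = memory       # frozen ethological memory; never updated
        self.new = LinearModel()   # online forward dynamics model
        self.old = LinearModel()   # slow EMA copy of the online model
        self.gamma = gamma
        self.lr = lr

    def step(self, state: float, action: float, next_state: float) -> float:
        # 1) Fit the online model to the observed transition (one SGD step).
        err = self.new.predict(state, action) - next_state
        self.new.w_s -= self.lr * err * state
        self.new.w_a -= self.lr * err * action
        # 2) Let the old copy trail it: theta_old <- gamma*theta_old + (1-gamma)*theta_new.
        g = self.gamma
        self.old.w_s = g * self.old.w_s + (1 - g) * self.new.w_s
        self.old.w_a = g * self.old.w_a + (1 - g) * self.new.w_a
        # 3) Intrinsic reward: how much the mismatch shrank from the old to the new model.
        return (mismatch(self.old, self.memory, state, action)
                - mismatch(self.new, self.memory, state, action))
```

For example, if the memory is `LinearModel(0.9, 0.5)` and the agent observes a matching transition with `step(1.0, 1.0, 1.4)`, the online model moves toward the memory faster than its lagged copy, so the reward is positive (about 0.63).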

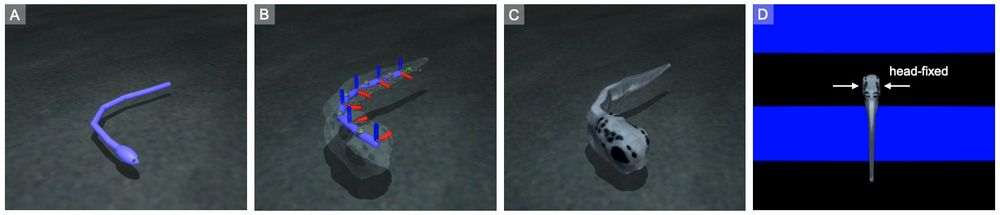

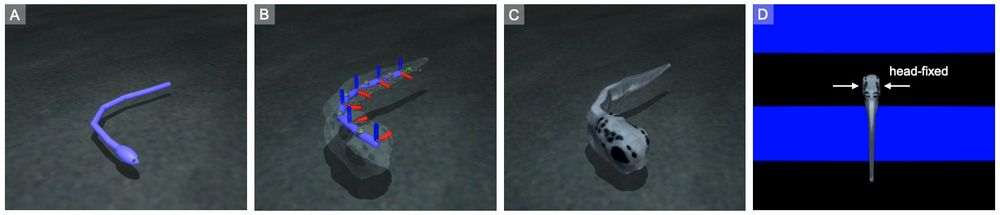

5/ First, we construct two environments extending the dm-control suite: one that captures the basic physics of zebrafish ecology (reactive fluid forces and drifting currents), and one that replicates the head-fixed experimental protocol of Mu, @mishaahrens.bsky.social, et al. (2019).

4/ To bridge this gap, we introduce 3M-Progress, which reinforces behavior that systematically aligns with an ethological memory. 3M-Progress agents capture nearly all the variability in behavioral and whole-brain calcium recordings in autonomously behaving larval zebrafish.

3/ Existing model-based intrinsic motivation algorithms (e.g., learning progress, prediction error) exhibit non-stationary and saturating reward dynamics, leading to transient behavioral strategies that fail to capture the robust nature of ethological animal behavior.
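
The saturation problem shows up even in a toy setting. The sketch below assumes a 1-D linear world model `s' = w * s` trained by SGD while the agent keeps revisiting the same state, and tracks two rewards: prediction error (the loss before each update) and learning progress (the drop in loss produced by the update). The setup and the function name `reward_traces` are hypothetical.

```python
from __future__ import annotations


def reward_traces(steps: int, lr: float = 0.1, true_w: float = 0.8,
                  state: float = 1.0) -> tuple[list[float], list[float]]:
    """Return (prediction_error, learning_progress) rewards over training."""
    w = 0.0  # untrained world-model weight
    pred_error: list[float] = []
    progress: list[float] = []
    for _ in range(steps):
        target = true_w * state  # true next state for the revisited state
        loss_before = (w * state - target) ** 2
        # One SGD step on 0.5 * (prediction - target) ** 2.
        w -= lr * (w * state - target) * state
        loss_after = (w * state - target) ** 2
        pred_error.append(loss_before)             # prediction-error reward
        progress.append(loss_before - loss_after)  # learning-progress reward
    return pred_error, progress
```

With these defaults the prediction-error reward starts at 0.64 and shrinks by a factor of 0.81 per step, and learning progress shrinks at the same rate. The same state therefore pays a different reward every time it is visited (non-stationary), and after a few dozen steps it pays essentially nothing (saturating).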

2/ Model-based intrinsic motivation is a class of exploration methods in RL that leverage predictive world models to generate an intrinsic reward signal. This signal is completely self-supervised and can guide behavior in sparse-reward or reward-free environments.
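
A minimal sketch of the idea, assuming the simplest possible setup: a 1-D state, a linear forward model trained online by SGD, and the prediction-error (surprise) variant of intrinsic reward. The dynamics `env_step`, the class `LinearWorldModel`, and the fixed alternating policy are hypothetical illustrations, not the models used in the paper. The point is that the reward is computed entirely from the agent's own prediction errors, with no external reward.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class LinearWorldModel:
    """Hypothetical forward model: next_state ≈ w_s * state + w_a * action."""
    w_s: float = 0.0
    w_a: float = 0.0
    lr: float = 0.05

    def predict(self, state: float, action: float) -> float:
        return self.w_s * state + self.w_a * action

    def update(self, state: float, action: float, next_state: float) -> float:
        """One SGD step on squared error; returns the pre-update squared error."""
        err = self.predict(state, action) - next_state
        # Gradient of 0.5 * err**2 with respect to each weight.
        self.w_s -= self.lr * err * state
        self.w_a -= self.lr * err * action
        return err * err


def env_step(state: float, action: float) -> float:
    """Hypothetical deterministic dynamics, unknown to the agent."""
    return 0.9 * state + 0.5 * action


def prediction_error_rewards(steps: int) -> list[float]:
    model = LinearWorldModel()
    state, rewards = 1.0, []
    for t in range(steps):
        action = 1.0 if t % 2 == 0 else -1.0  # fixed exploratory policy
        next_state = env_step(state, action)
        # Intrinsic reward = the model's surprise; no external reward is used.
        rewards.append(model.update(state, action, next_state))
        state = next_state
    return rewards
```

Calling `prediction_error_rewards(200)` yields a reward of about 1.96 on the first step (the untrained model predicts 0 for a true next state of 1.4), which then decays toward zero as the model learns the dynamics.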

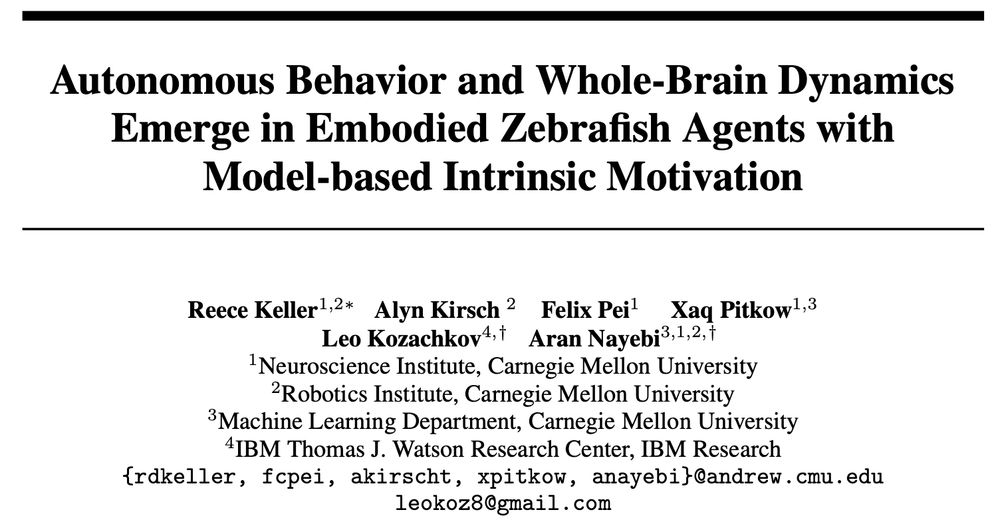

1/ I'm excited to share recent results from my first collaboration with the amazing @anayebi.bsky.social and @leokoz8.bsky.social!

We show how autonomous behavior and whole-brain dynamics emerge in embodied agents with intrinsic motivation driven by world models.