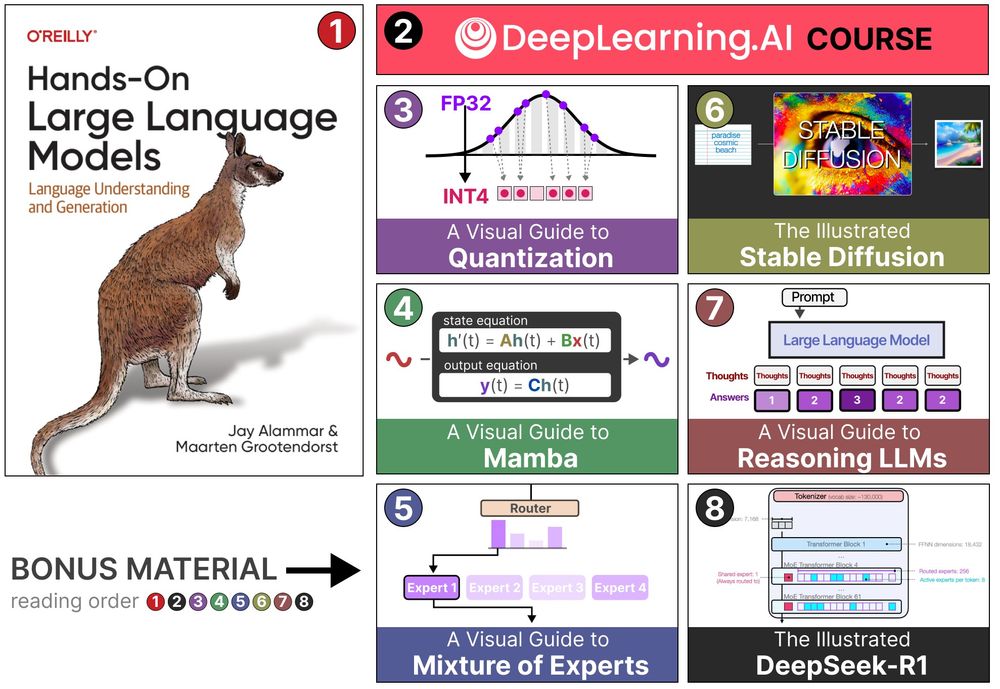

Building on the book's foundation, we've created enough content to hopefully keep you busy for a while:

github.com/HandsOnLLM/H...

@maartengr.bsky.social

🧑‍💻 Data Scientist | Psychologist 📖 Author of "Hands-On LLMs" (http://LLM-book.com) 🧙‍♂️ Open Sourcerer (BERTopic, PolyFuzz, KeyBERT; github.com/MaartenGr) 💡 Demystifying LLMs (newsletter.maartengrootendorst.com)

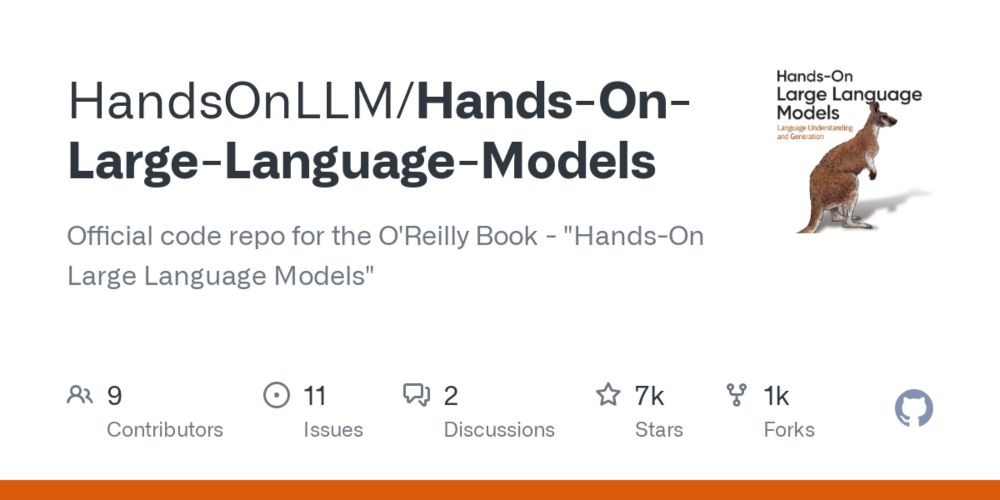

We've made so much extra (free!) content it could be its own book 😅

I counted more than 300 visuals across our illustrated and visual guides!

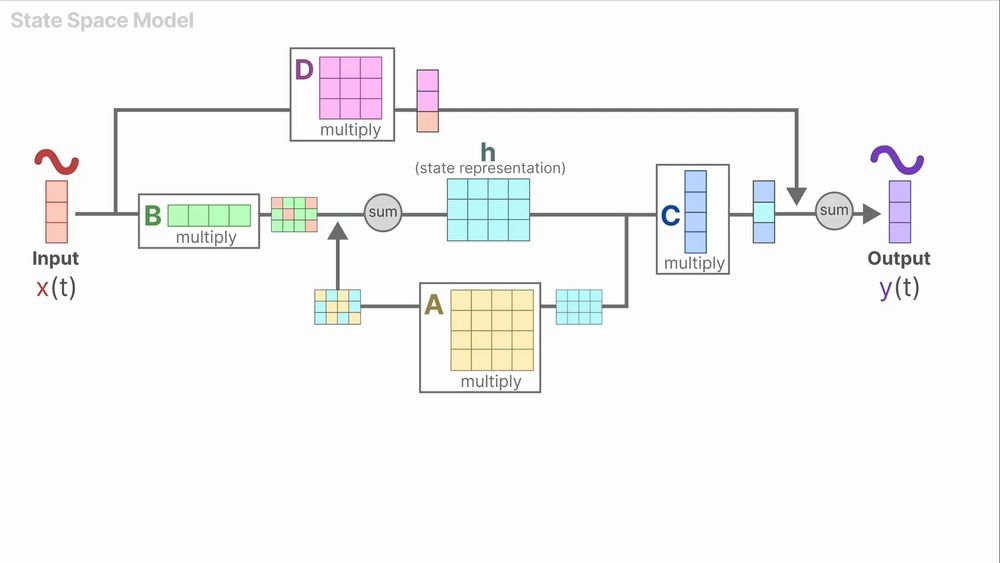

Happy to introduce my video on an alternative LLM architecture: Mamba and State Space Models!

I've wanted to do this for a while now and finally found the time to animate my visual guide. Expect to gain an intuitive understanding of this alternative LLM architecture.

Link below 👇

You can find the full changelog here: github.com/MaartenGr/BE...

19.03.2025 17:08 — 👍 2 🔁 0 💬 0 📌 0

Announcing BERTopic v0.17 🥳

This is a feature-packed update that includes integration with the amazing Model2Vec, more interactive DataMapPlot functionality, a lightweight installation option, and much more!

I might have gone a little overboard with the number of visuals... but this is such an exciting topic! Here's the article: newsletter.maartengrootendorst.com/p/a-visual-g...

17.03.2025 15:57 — 👍 4 🔁 2 💬 1 📌 0

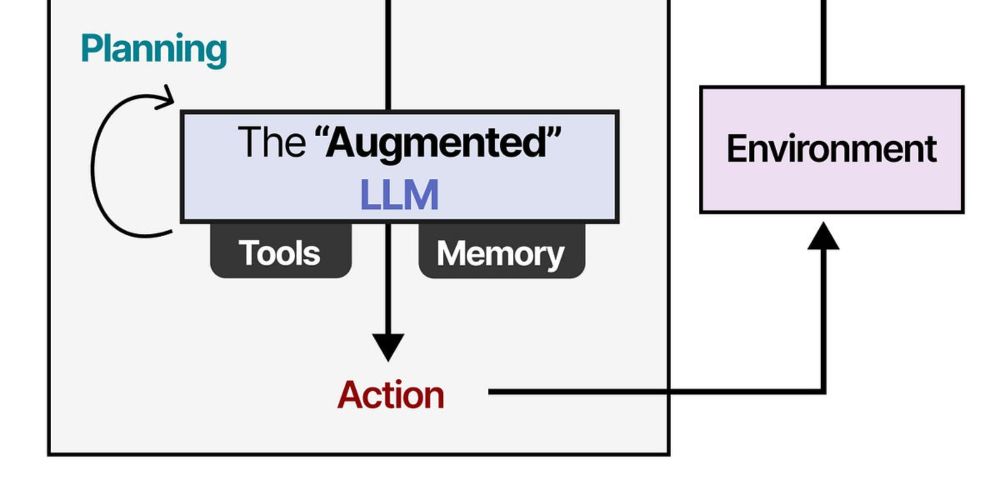

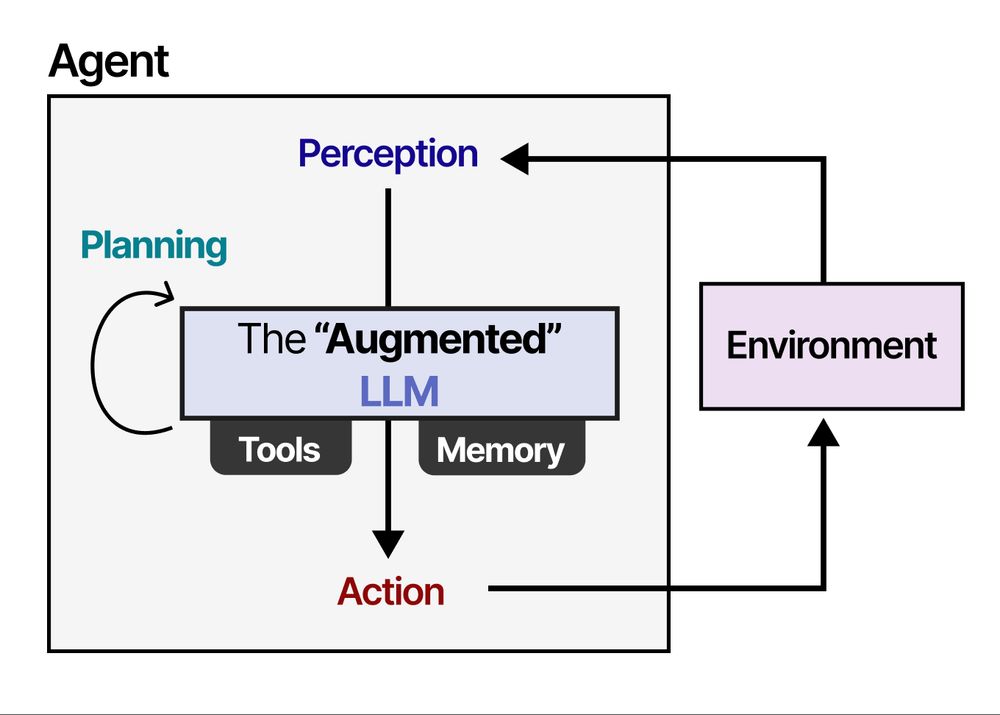

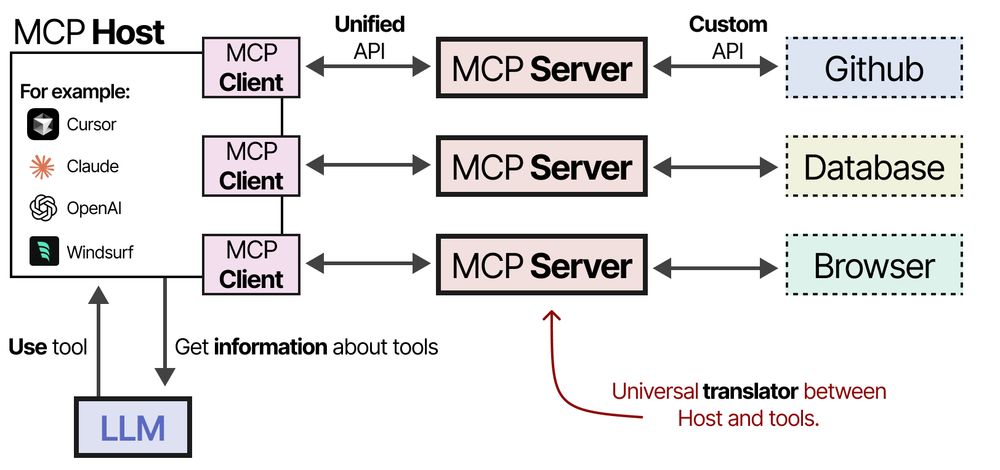

Introducing "A Visual Guide to LLM Agents" 🤖

With over 60 (!) custom visuals, explore how Agents work, how they are created, their reasoning behavior, Model Context Protocol (MCP), Multi-Agent frameworks, and much more!

Link below 👇

Can you guess which visual guide is coming?

14.03.2025 16:05 — 👍 0 🔁 0 💬 0 📌 0

MCP is such an interesting protocol and a welcome addition to LLM-based frameworks.

14.03.2025 16:05 — 👍 0 🔁 0 💬 1 📌 0

I discuss Model Context Protocol (MCP) in an upcoming article and figured I would share a small video illustrating a simplified flow!

14.03.2025 16:05 — 👍 1 🔁 0 💬 1 📌 0

You can find the repo here: github.com/MaartenGr/Ke...

19.02.2025 15:36 — 👍 0 🔁 0 💬 0 📌 0

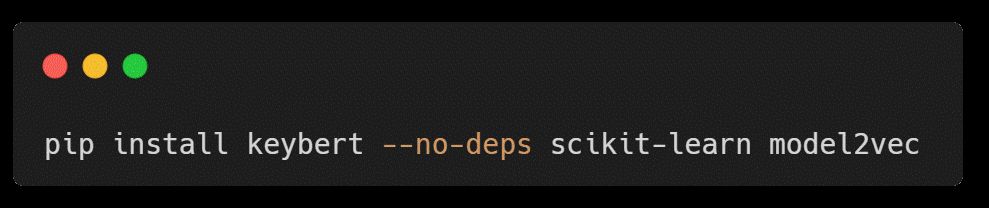

As a bonus, I created a lightweight installation of KeyBERT using ONLY scikit-learn and Model2Vec! Installation is straightforward:

19.02.2025 15:36 — 👍 0 🔁 0 💬 1 📌 0

Blazingly fast keyword generation with KeyBERT v0.9 and Model2Vec 🔥

I have been a big fan of the amazing embedding models by The Minish Lab, so I had to integrate them with KeyBERT.

A release for the GPU-poor 😉

You can find everything at our repo: github.com/HandsOnLLM/H...

11.02.2025 16:23 — 👍 1 🔁 0 💬 0 📌 0

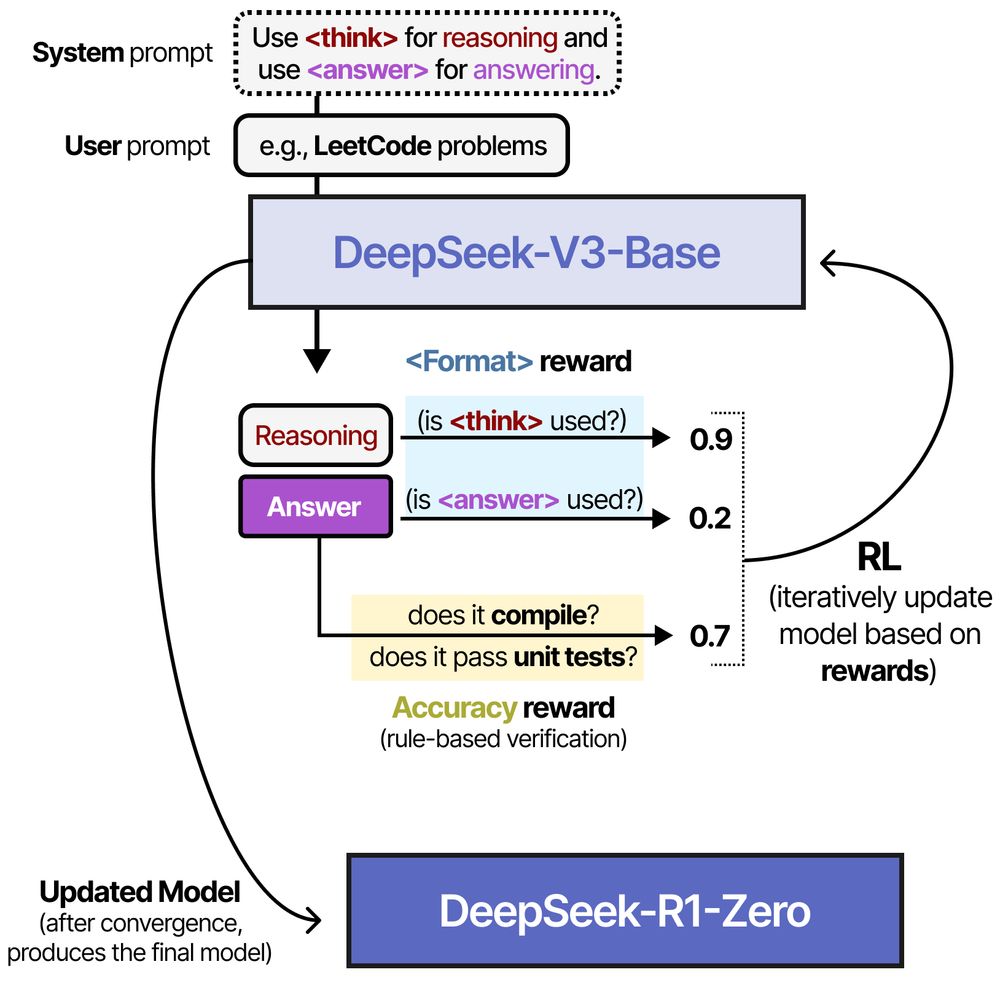

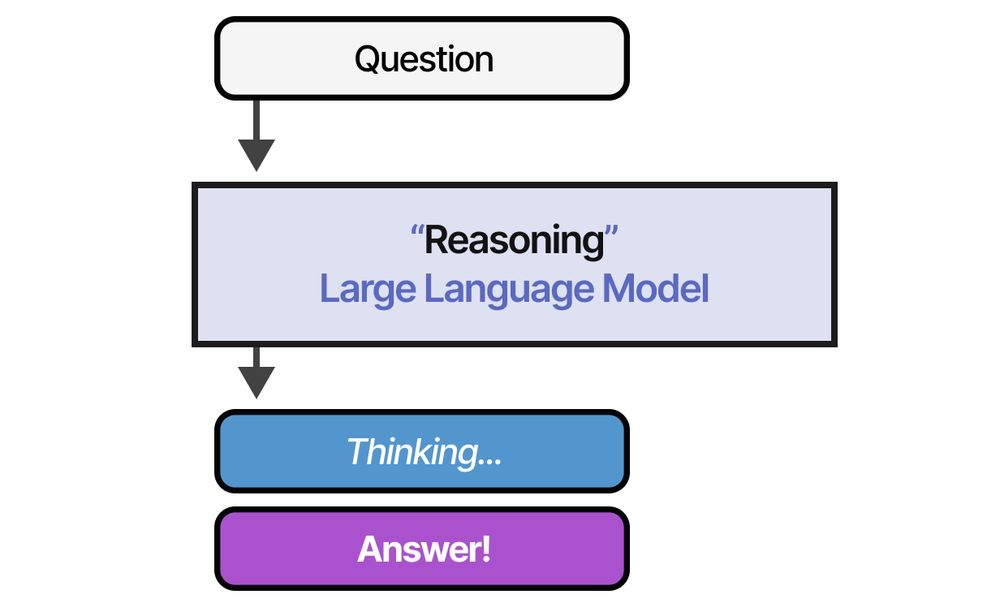

To Reasoning LLMs and DeepSeek-R1:

11.02.2025 16:23 — 👍 0 🔁 0 💬 1 📌 0

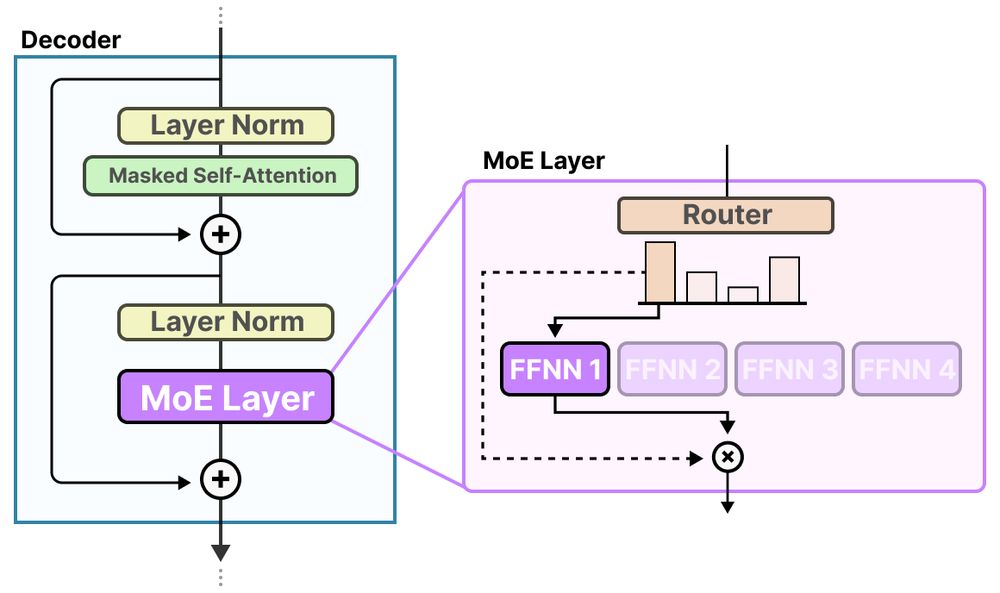

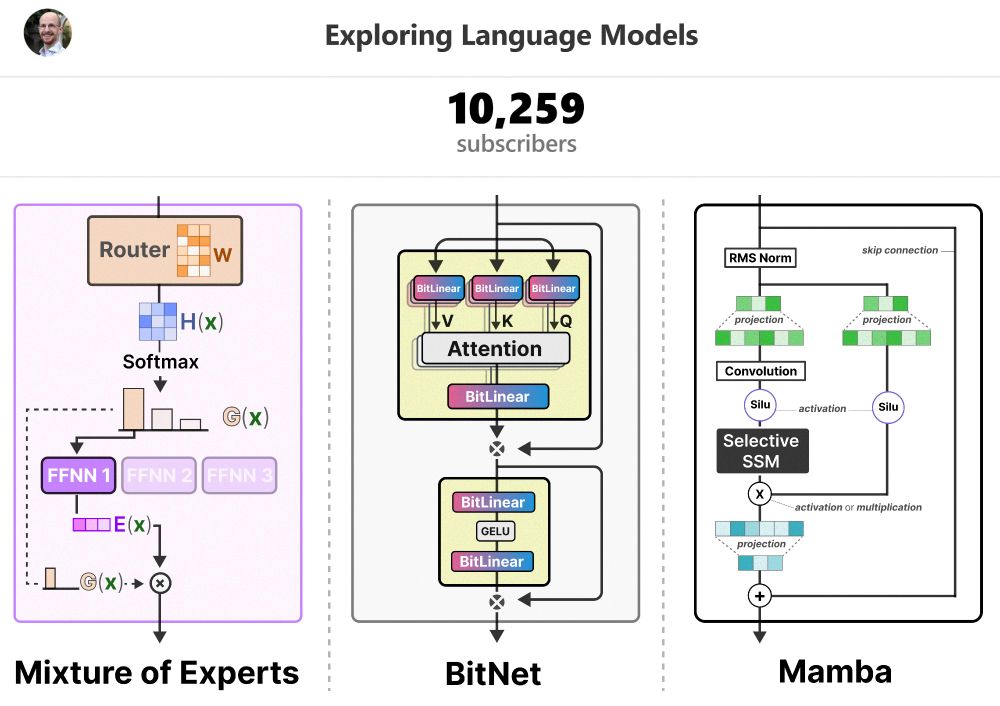

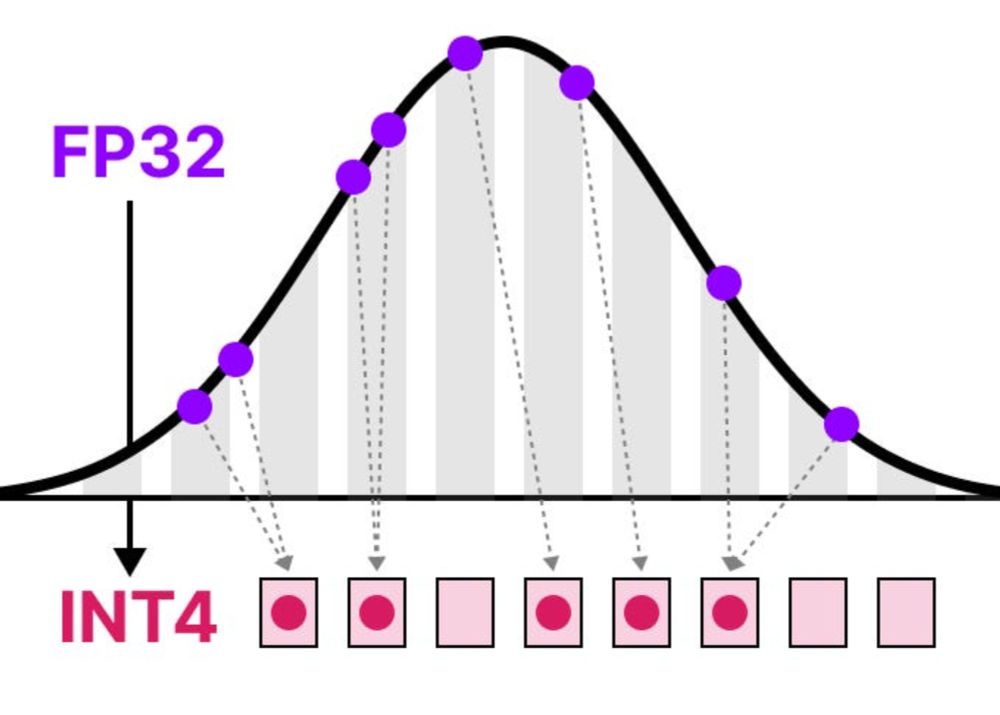

There are also guides to common techniques like Quantization and Mixture of Experts:

11.02.2025 16:23 — 👍 0 🔁 0 💬 1 📌 0

@jayalammar.bsky.social and I are incredibly proud to bring you this highly animated (and free 😉) course:

11.02.2025 16:23 — 👍 0 🔁 0 💬 1 📌 0

Did you know we continue to develop new content for the "Hands-On Large Language Models" book?

There's now even a free course available with @deeplearningai.bsky.social!

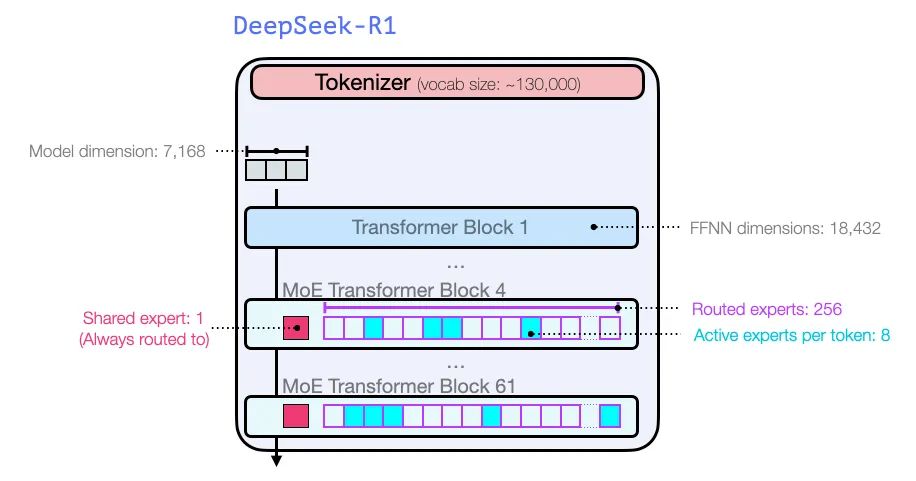

All the way to DeepSeek-R1 and DeepSeek-R1-Zero:

03.02.2025 15:51 — 👍 0 🔁 0 💬 1 📌 0

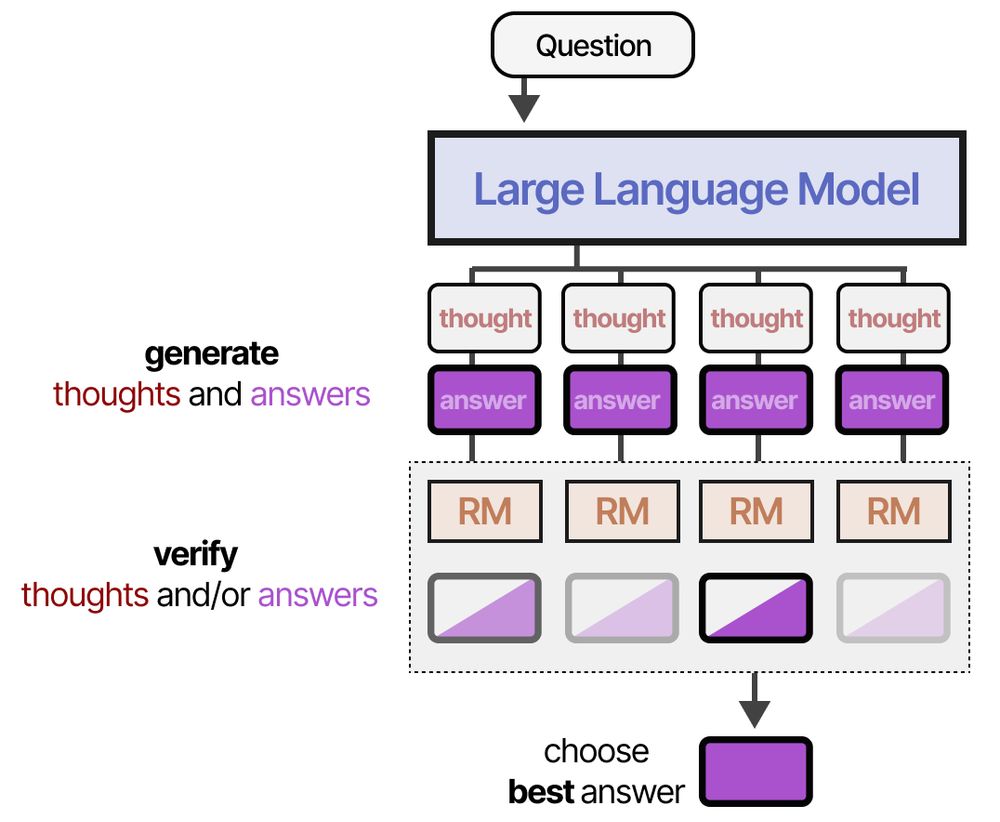

From exploring verifiers for distilling reasoning:

03.02.2025 15:51 — 👍 0 🔁 0 💬 1 📌 0

A Visual Guide to Reasoning LLMs 💭

With over 40 custom visuals, explore DeepSeek-R1, the train-time compute paradigm shift, test-time compute techniques, verifiers, STaR, and much more!

Link below

To celebrate this milestone, I'm working hard on an update to the Mamba guide (with animations!), and a video version is almost ready to record!

Link to the newsletter:

newsletter.maartengrootendorst.com

I'm incredibly grateful that "Exploring Language Models" has reached over 10k subscribers 🔥

A big thank you to all readers who have enjoyed my visual guides to Mixture of Experts (MoE), Quantization, State Space Models (SSMs) and Mamba 🤗

I started working on animating "A Visual Guide to Mamba" and it's been a blast.

Perhaps I should just add animations as a default to upcoming visual guides 😉

🍿 Introducing the animated "Visual Guide to Mixture of Experts (MoE)"!

This was a blast to make, and it goes into more depth than the original guide. Expect even more intuition as we break down the visuals and uncover the nuances behind MoE.

www.youtube.com/watch?v=sOPD...

You can find the guide here: newsletter.maartengrootendorst.com/p/a-visual-g...

12.11.2024 15:40 — 👍 0 🔁 0 💬 0 📌 0

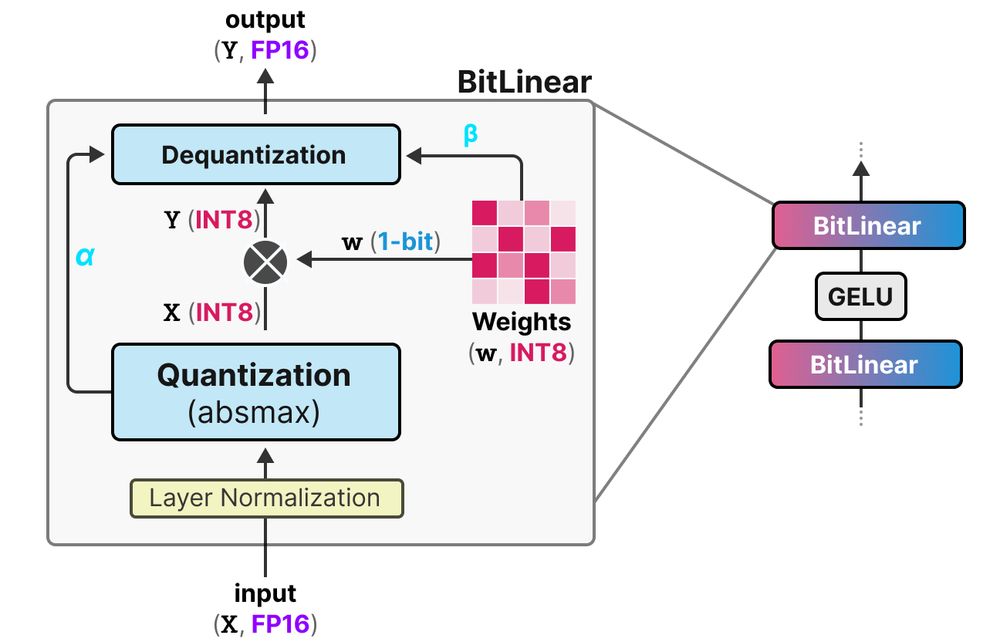

All the way up to BitNet b1.58 with BitLinear:

12.11.2024 15:40 — 👍 0 🔁 0 💬 1 📌 0
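For a feel of what "1.58 bits" means, here is a minimal Python sketch. It assumes the absmean recipe described in the BitNet b1.58 paper: divide each weight by the mean absolute weight, round, and clip to {-1, 0, 1}. Three possible values carry log2(3) ≈ 1.58 bits. The function names `absmean_ternary` and `ternary_dot` are hypothetical. The sketch leaves out everything else a real BitLinear layer does, such as activation quantization, normalization, and training with full-precision latent weights.

```python
def absmean_ternary(weights, eps=1e-6):
    """Quantize weights to {-1, 0, 1} with an absmean scale (BitNet b1.58 style sketch)."""
    gamma = sum(abs(w) for w in weights) / len(weights)  # mean absolute weight
    # Scale by gamma, round to the nearest integer, then clip into [-1, 1].
    ternary = [max(-1, min(1, round(w / (gamma + eps)))) for w in weights]
    return ternary, gamma


def ternary_dot(ternary, gamma, x):
    """Dot product with ternary weights: only additions/subtractions, then one rescale."""
    acc = 0.0
    for t, xi in zip(ternary, x):
        if t == 1:
            acc += xi
        elif t == -1:
            acc -= xi
        # t == 0 contributes nothing, so it is skipped entirely.
    return acc * gamma


w = [0.9, -0.05, -1.4, 0.3]
x = [1.0, 2.0, 3.0, 4.0]
t, gamma = absmean_ternary(w)
print(t)
print(ternary_dot(t, gamma, x))
```

Because every weight is -1, 0, or 1, the inner loop needs no multiplications, only a single multiplication by the scale at the end. This is the core of the efficiency argument for ternary weights.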

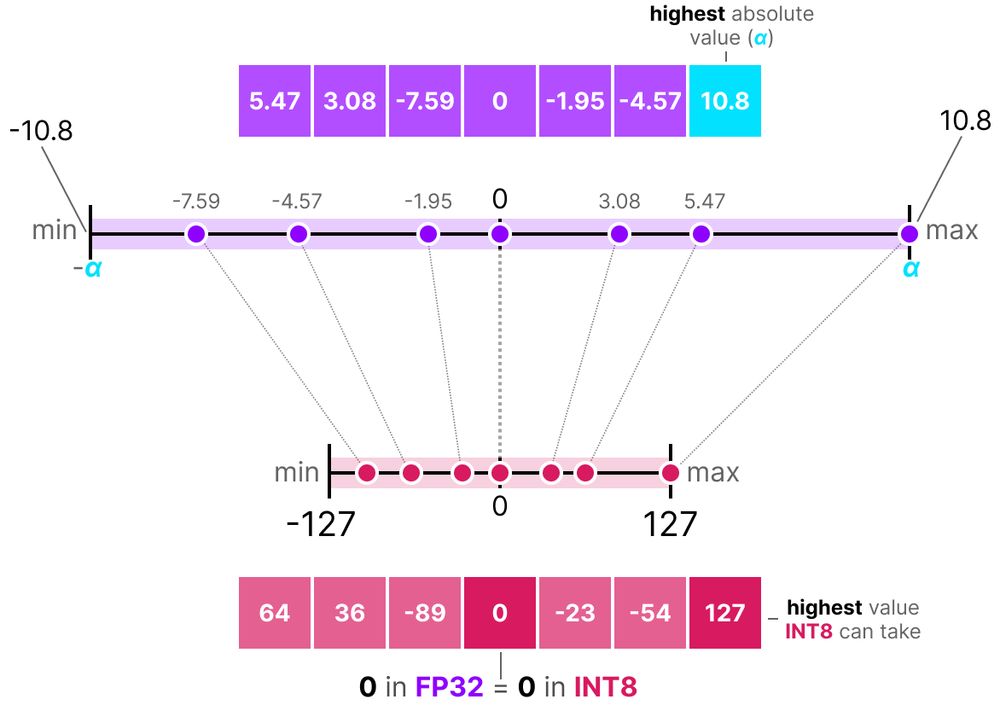

We start with the basics of quantization, such as the absmax method:

12.11.2024 15:40 — 👍 0 🔁 0 💬 1 📌 0
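To make the absmax method concrete, here is a minimal pure-Python sketch of symmetric 8-bit absmax quantization. It assumes per-tensor scaling, meaning one scale for the whole list. The largest absolute value is mapped to 127, and every other value is scaled proportionally and rounded. The helper names are hypothetical and not taken from the guide.

```python
def absmax_quantize(values, bits=8):
    """Symmetric (absmax) quantization of floats to signed integers."""
    qmax = 2 ** (bits - 1) - 1  # 127 for 8-bit integers
    absmax = max(abs(v) for v in values)
    scale = qmax / absmax if absmax > 0 else 1.0
    # The largest magnitude lands exactly on +/-qmax; the rest scale proportionally.
    quantized = [round(v * scale) for v in values]
    return quantized, scale


def absmax_dequantize(quantized, scale):
    """Recover approximate floats by undoing the scaling."""
    return [q / scale for q in quantized]


weights = [10.8, -7.6, 3.2, 0.4]
q, scale = absmax_quantize(weights)
restored = absmax_dequantize(q, scale)
print(q)
print([round(r, 3) for r in restored])
```

Each restored value is off by at most half a quantization step (0.5 / scale). A single outlier inflates the absmax, which widens that step for every other value in the tensor.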