Cool setup

30.10.2025 13:13 — 👍 0 🔁 0 💬 0 📌 0

Been feeling FOMO from all the ICLR posts the past 2 days. Will finally be at the conference tomorrow. Please do come by our poster and I’m happy to chat! 😊

⏰: Sat, 26 Apr 3pm

📍: Hall 3 + Hall 2B Poster 374

25.04.2025 17:40 — 👍 2 🔁 1 💬 0 📌 0

Also, I'll be presenting this work at ICLR next month, please do come by!

05.03.2025 06:59 — 👍 4 🔁 0 💬 0 📌 0

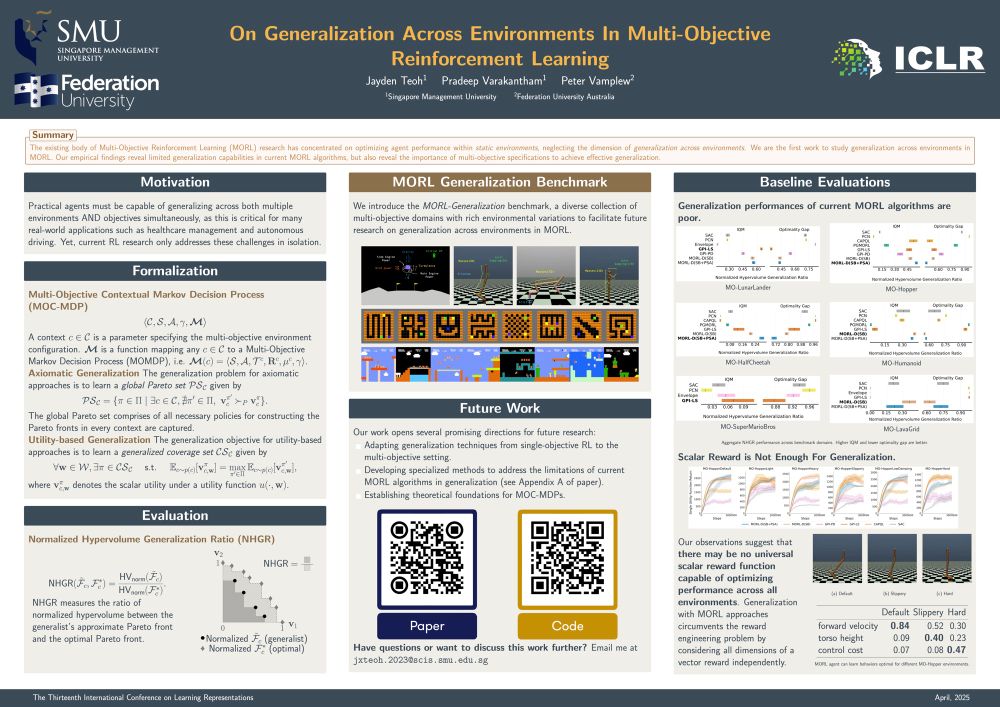

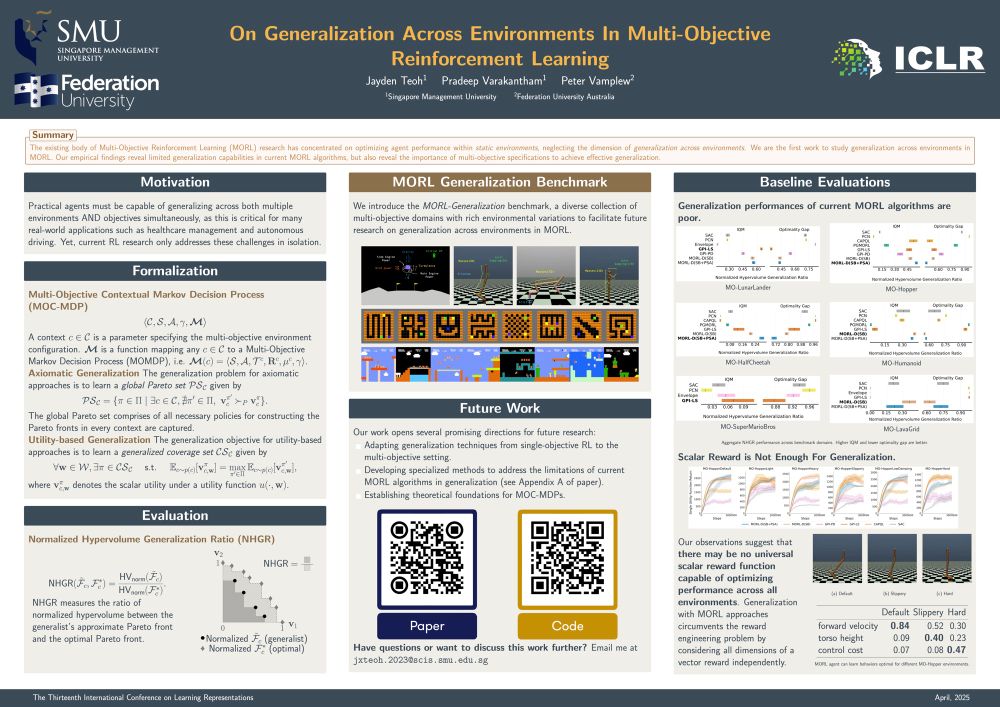

Our benchmark code is already available for testing out new algorithms and I will be sharing additional instructions on using our code in the coming days. Stay tuned. I look forward to engaging and collaborating with anyone interested in advancing this new area of research! 🙂

05.03.2025 06:59 — 👍 2 🔁 0 💬 1 📌 0

There are numerous promising avenues for further exploration, particularly in adapting techniques and insights from single-objective RL generalization research to tackle this harder problem setting!

05.03.2025 06:59 — 👍 0 🔁 0 💬 1 📌 0

Ultimately, a priori scalarization of rewards in single-objective RL limits the agent's flexibility to adapt its behavior to environment changes and objective trade-offs. Developing agents capable of generalizing across multiple environments AND objectives will become a crucial research direction.

05.03.2025 06:59 — 👍 0 🔁 0 💬 1 📌 0

We also introduce a benchmark featuring diverse multi-objective domains with parameterized environment configurations to facilitate studies in this area.

05.03.2025 06:59 — 👍 0 🔁 0 💬 1 📌 0

Despite its importance, the intersection of generalization and multi-objectivity remains a significant gap in the RL literature.

In this paper, we formalize generalization in Multi-Objective Reinforcement Learning (MORL) and describe how it can be evaluated.

05.03.2025 06:59 — 👍 1 🔁 0 💬 1 📌 0

Consider an autonomous vehicle, which must not only generalize across varied environmental conditions (different weather patterns, lighting, and road surfaces) but also learn optimal trade-offs between competing objectives such as travel time, passenger comfort, and safety.

05.03.2025 06:59 — 👍 0 🔁 0 💬 1 📌 0

Real-world sequential decision-making tasks often involve balancing trade-offs among conflicting objectives and generalizing across diverse environments.

05.03.2025 06:59 — 👍 0 🔁 0 💬 1 📌 0

On Generalization Across Environments In Multi-Objective Reinforcement Learning

Real-world sequential decision-making tasks often require balancing trade-offs between multiple conflicting objectives, making Multi-Objective Reinforcement Learning (MORL) an increasingly prominent f...

Our ICLR paper on generalization in multi-objective reinforcement learning is now on arXiv: arxiv.org/abs/2503.00799

This work, led by Jayden Teoh, is the first to examine generalization of RL across multiple multi-objective environments, and is a great basis for an exciting new field of research.

04.03.2025 20:42 — 👍 5 🔁 1 💬 0 📌 0
