He did it before Double Machine Learning

I met with professor Mark van der Laan because I think his work is pretty incredible and it sometimes feels like a secret that only a few people know about, especially in industry.

1/

#CausalSky #StatSky #CausalInference

12.09.2025 08:29 — 👍 5 🔁 3 💬 3 📌 0

What does ‘biased’ mean here? It would be biased in expectation, since if you were to repeat the experiment many times, some power users would join. If you define your estimator as the empirical mean over non-power users, then it might be unbiased.

18.06.2025 01:24 — 👍 3 🔁 0 💬 0 📌 0

I’d be surprised if this actually works in practice, since neural networks are often overfitting (e.g. perfectly fitting labels with double descent), which violates donsker conditions. And, the neural tangent kernel ridge approximation of neural networks has been shown to not hold empirically.

26.05.2025 23:39 — 👍 2 🔁 0 💬 1 📌 0

I've advised 15 PhD students—10 were international students. All graduates continue advancing U.S. excellence in research and education. Cutting off this pipeline of talent would be shortsighted.

23.05.2025 03:36 — 👍 8 🔁 2 💬 0 📌 0

I had a hard time believing it was as simple as this until Lars taught me how to implement it - calibrate=True and you're done

github.com/apoorvalal/a...

19.05.2025 00:59 — 👍 15 🔁 3 💬 0 📌 0

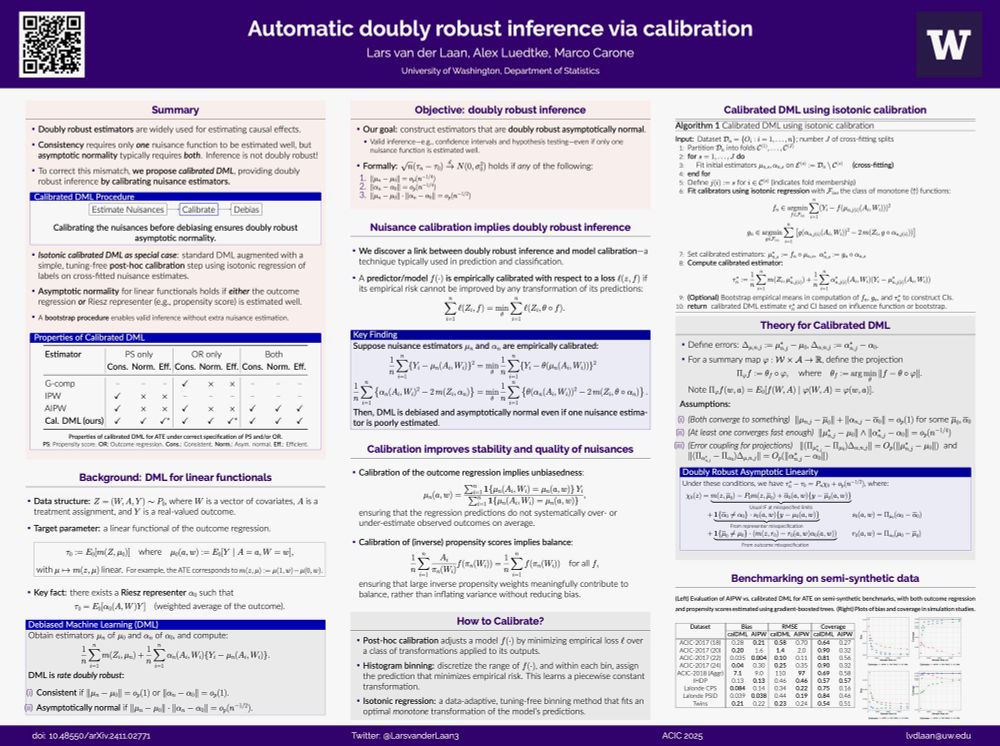

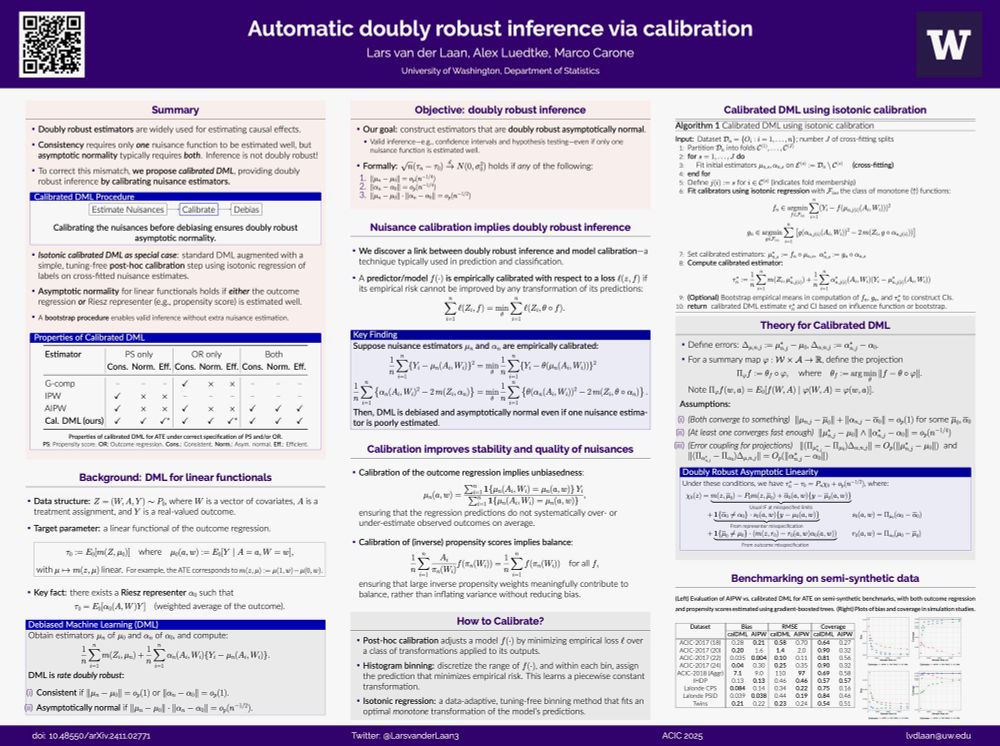

Calibrate your outcome predictions and propensities using isotonic regression as follows:

mu_hat <- as.stepfun(isoreg(mu_hat, Y))(mu_hat)

pi_hat <- as.stepfun(isoreg(pi_hat, A))(pi_hat)

(Or use the isoreg_with_xgboost function given in the paper, which I recommend)

19.05.2025 00:07 — 👍 1 🔁 0 💬 0 📌 0

Had a great time presenting at #ACIC on doubly robust inference via calibration

Calibrating nuisance estimates in DML protects against model misspecification and slow convergence.

Just one line of code is all it takes.

19.05.2025 00:02 — 👍 19 🔁 1 💬 1 📌 2

link 📈🤖

Nonparametric Instrumental Variable Inference with Many Weak Instruments (Laan, Kallus, Bibaut) We study inference on linear functionals in the nonparametric instrumental variable (NPIV) problem with a discretely-valued instrument under a many-weak-instruments asymptotic regime, where the

13.05.2025 16:43 — 👍 1 🔁 1 💬 0 📌 0

I’ll be giving an oral presentation at ACIC in the Advancing Causal Inference session with ML on Wednesday!

My talk will be on Automatic Double Reinforcement Learning and long term causal inference!

I’ll discuss Markov decision processes, Q-functions, and a new form of calibration for RL!

12.05.2025 18:09 — 👍 9 🔁 1 💬 1 📌 0

New preprint with #Netflix out!

We study the NPIV problem with a discrete instrument under a many-weak-instruments regime.

A key application: constructing confounding-robust surrogates using past experiments as instruments.

My mentor Aurélien Bibaut will be presenting a poster at #ACIC2025!

13.05.2025 10:43 — 👍 5 🔁 0 💬 0 📌 0

Our work on stabilized inverse probability weighting via calibration was accepted to #CLeaR2025! I gave an oral presentation last week and was honored to receive the Best Paper Award.

I’ll be giving a related poster talk at #ACIC on calibration and DML and how it provides doubly robust inference!

12.05.2025 18:32 — 👍 5 🔁 1 💬 0 📌 0

Link to paper:

arxiv.org/pdf/2501.06926

12.05.2025 18:19 — 👍 0 🔁 0 💬 0 📌 0

This work is a result of my internship at Netflix over the summer and is joint with Aurelien Bibaut and Nathan Kallus.

12.05.2025 18:10 — 👍 0 🔁 0 💬 1 📌 0

I’ll be giving an oral presentation at ACIC in the Advancing Causal Inference session with ML on Wednesday!

My talk will be on Automatic Double Reinforcement Learning and long term causal inference!

I’ll discuss Markov decision processes, Q-functions, and a new form of calibration for RL!

12.05.2025 18:09 — 👍 9 🔁 1 💬 1 📌 0

Inference for smooth functionals of M-estimands in survival models, like regularized coxPH and the beta-geometric model (see our experiments section) are one application of this approach.

12.05.2025 17:56 — 👍 1 🔁 0 💬 0 📌 0

By targeting low dimensional summaries, there is no need to establish asymptotic normality of the entire infinite dimensional M-estimator (which isn’t possible in general). It allows for the use of ML and regularization to estimate it, and valid inference via a one step bias correction.

12.05.2025 17:53 — 👍 1 🔁 0 💬 1 📌 0

If you’re willing to consider smooth functionals of the infinite dimensional M-estimand, then there is a general theory for inference, where the sandwich variance estimator now involves the derivative of the loss and a Riesz representer of the functional.

Working paper:

arxiv.org/pdf/2501.11868

12.05.2025 17:51 — 👍 4 🔁 0 💬 2 📌 0

The motivation should have been something like a confounder that is somewhat predictive of both the treatment and outcome might be more important to adjust for then a variable that is super predictive of the outcome but doesn’t predict treatment. TR might help give more importance to such variables

25.04.2025 16:45 — 👍 1 🔁 0 💬 0 📌 0

One could have given an analogous theorem saying that E[Y | T, X] is a sufficient deconfounding score and argued that one should only adjust for features predictive of the outcome. So yeah I think it’s wrong/poorly phrased

25.04.2025 05:12 — 👍 1 🔁 0 💬 1 📌 0

The OP’s approach is based on the conditional probability of Y given the treatment is intervened upon and set to some value. But, they don’t seem to define what this means formally, which is exactly what potential outcomes/NPSEM achieve.

01.04.2025 03:33 — 👍 2 🔁 0 💬 0 📌 0

The second stage coefficients are the estimand (identifying the structural coefficients/treatment effect). The first stage coefficients are nuisances, and typically not of direct interest.

20.03.2025 21:35 — 👍 2 🔁 0 💬 1 📌 0

🚨 Excited about this new paper on Generalized Venn Calibration and conformal prediction!

We show that Venn and Venn-Abers can be extended to general losses, and that conformal prediction can be viewed as Venn multicalibration for the quantile loss!

#calibration #conformal

11.02.2025 18:37 — 👍 1 🔁 0 💬 0 📌 0

Lars van der Laan, Ahmed Alaa

Generalized Venn and Venn-Abers Calibration with Applications in Conformal Prediction

https://arxiv.org/abs/2502.05676

11.02.2025 08:10 — 👍 3 🔁 1 💬 0 📌 1

Great discussion! there we use that balancing conditional on the propensity score (or Riesz rep more generally) is sufficient for ipw and DR inference.

To add to the discussion this paper connects balancing with debiasing in aipw

arxiv.org/pdf/2304.14545

25.01.2025 15:59 — 👍 5 🔁 0 💬 0 📌 0

Your comment also reminds me of this paper where they ensure the estimators solve a certain equation (which I think can be viewed as a kind of balance) using isotonic regression and they show this leads to DR inference:

arxiv.org/pdf/2411.02771

25.01.2025 15:28 — 👍 4 🔁 1 💬 1 📌 0

Thrilled to share our new paper! We introduce a generalized autoDML framework for smooth functionals in general M-estimation problems, significantly broadening the scope of problems where automatic debiasing can be applied!

22.01.2025 13:54 — 👍 19 🔁 7 💬 1 📌 0

link 📈🤖

Automatic Debiased Machine Learning for Smooth Functionals of Nonparametric M-Estimands (Laan, Bibaut, Kallus et al) We propose a unified framework for automatic debiased machine learning (autoDML) to perform inference on smooth functionals of infinite-dimensional M-estimands, defined as

22.01.2025 17:17 — 👍 5 🔁 2 💬 0 📌 0

Thrilled to share our new paper! We introduce a generalized autoDML framework for smooth functionals in general M-estimation problems, significantly broadening the scope of problems where automatic debiasing can be applied!

22.01.2025 13:54 — 👍 19 🔁 7 💬 1 📌 0

Biostatistician @IDEXX formerly at harvardmed, @BIDMChealth, @nasa. Big data, clinical trials, and medical diagnostics. Mainer. Opinions are my own. he/him

Assistant Professor, Case Western Reserve University (CCLCM). Staff Biostatistician, Cleveland Clinic.

Epidemiologist interested in causal inference, infectious disease, trial design

https://christopherbboyer.com/about.html

#causalsky #statssky #episky

CMU postdoc, previously MIT PhD. Causality, pragmatism, representation learning, and AI for biology / science more broadly. Proud rat dad.

Research Staff Member at IBM Research.

Causal Inference 🔴→🟠←🟡.

Machine Learning 🤖🎓.

Data Communication 📈.

Healthcare ⚕️.

Creator of 𝙲𝚊𝚞𝚜𝚊𝚕𝚕𝚒𝚋: https://github.com/IBM/causallib

Website: https://ehud.co

Heisenberg Professor for Biostatistics at the Department of Statistics, LMU München | causal inference - missing data - HIV

michaelschomaker.github.io

Assistant professor of biostatistics at Columbia University

Casual inference, statistics, etc

Pauca sed Matura

Biostatistics phd student @University of Washington

Interested in non-parametric statistics, causal inference, and science!

Assistant Professor of "Data Science in Economics" at Uni Tübingen. Interested in the intersection of causal inference and so-called machine learning.

Teaching material: https://github.com/MCKnaus/causalML-teaching

Homepage: mcknaus.github.io

dorothy gilford endowed chair and professor of statistics/biostatistics at university of washington, all views my own

source: https://arxiv.org/rss/stat.ML

maintainer: @tmaehara.bsky.social

asst. prof. in (bio)statistics at harvard—causal inference, semi-parametric estimation, machine learning, open-source software for statistical science. research webpage: https://nimahejazi.org

avid runner, concertgoer, timezone hopper

Columbia postdoc and ex-Quantco. Personal website: www.ohines.com

Professor of Biostatistics, University of Washington School of Public Health.

Affiliate Investigator, Fred Hutch Vaccine and Infectious Disease Division.

Causal inference, ML, survival analysis, statistical epi, viruses and vaccine science.

🇨🇦🇮🇹🇦🇲

LTI PhD at CMU on evaluation and trustworthy ML/NLP, prev AI&CS Edinburgh University, Google, YouTube, Apple, Netflix. Views are personal 👩🏻💻🇮🇩

athiyadeviyani.github.io

Assistant Prof. of CS at Johns Hopkins

Visiting Scientist at Abridge AI

Causality & Machine Learning in Healthcare

Prev: PhD at MIT, Postdoc at CMU

Assistant Professor at UC Berkeley and UCSF.

Machine Learning and AI for Healthcare. https://alaalab.berkeley.edu/

Biostatistician • Associate Prof @ Wake Forest University • former postdoc @ Hopkins Biostat • PhD @ Vandy Biostat • 🎙 Casual Inference • lucymcgowan.com

Fostering a dialogue between industry and academia on causal data science.

Causal Data Science Meeting 2025: causalscience.org