There have been a couple cool pieces up recently debunking the "China is racing on AI, so the US must too" narrative.

time.com/7308857/chin...

papers.ssrn.com/sol3/papers....

@michaelhuang.bsky.social

Reduce extinction risk by pausing frontier AI unless provably safe @pauseai.bsky.social and banning AI weapons @stopkillerrobots.bsky.social | Reduce suffering @postsuffering.bsky.social https://keepthefuturehuman.ai

This is great, but will SB 53 be Congress-proof?

10.07.2025 10:46 — 👍 0 🔁 0 💬 0 📌 0

PRESS RELEASE: Accountable Tech Commends New York State Senate on Passage of RAISE Act, Urges Gov. Hochul to Sign: accountabletech.org/statements/a...

20.06.2025 17:47 — 👍 3 🔁 1 💬 0 📌 0

🚨NEW YORKERS: Tell Governor Hochul to sign the RAISE Act 🚨

NY’s RAISE Act, which would require the largest AI developers to have a safety plan, just passed the legislature.

Call Governor Hochul at 1-518-474-8390 to tell her to sign the RAISE Act into law.

Do you trust AI companies with your future?

Less than a year ago, Sam Altman said he wanted to see powerful AI regulated by an international agency to ensure "reasonable safety testing"

But now he says "maybe the companies themselves put together the right framework"

Last year, half of OpenAI's safety researchers quit the company.

Sam Altman says "I would really point to our track record"

The track record: Superalignment team disbanded, FT reporting last week that OpenAI is cutting safety testing time down from months to just *days*.

China is taking advantage of this and NVIDIA is profiting. NVIDIA produced over 1M H20s in 2024 — most going to China. Orders from ByteDance and Tencent have spiked following recent DeepSeek model releases.

Chinese AI runs on American tech that we freely give them! That's not "Art of the Deal"!

AI godfather Geoffrey Hinton says in the next 5 to 20 years there's about a 50% chance that we'll have to confront the problem of AIs trying to take over.

11.04.2025 13:42 — 👍 3 🔁 1 💬 0 📌 0

Frontier AI models are more capable than they've ever been, and they're being rushed out faster than ever. Not a great combination!

OpenAI used to give staff months to safety test. Now it's just days, per great reporting from Cristina Criddle at the FT. 🧵

FT: OpenAI are slashing the time and resources they're spending on safety testing their most powerful AIs.

Safety testers have only been given days to conduct evaluations.

One of the people testing o3 said "We had more thorough safety testing when [the technology] was less important"

NEW: We just launched a new US campaign to advocate for binding AI regulation!

We've made it super easy to contact your senator:

— It takes just 60 seconds to fill our form

— Your message goes directly to both of your senators

controlai.com/take-a...

12 ex-OpenAI employees just filed an amicus brief on the Elon Musk lawsuit attempting to block OpenAI from shedding nonprofit control.

The brief was filed by Harvard Law Professor Lawrence Lessig, who also reps OpenAI whistleblowers.

Here are the highlights 🧵

Can regulators really know when AI is in charge of a weapon instead of a human? Zachary Kallenborn explains the principles of drone forensics.

30.03.2025 13:01 — 👍 58 🔁 13 💬 1 📌 1

How likely is AI to annihilate humanity?

Elon Musk: "20% likely, maybe 10%"

Ted Cruz: "On what time frame?"

Elon Musk: "5 to 10 years"

With the unchecked race to build smarter-than-human AI intensifying, humanity is on track to almost certainly lose control.

That's why FLI Executive Director Anthony Aguirre has published a new essay, "Keep The Future Human".

🧵 1/4

I introduced new AI safety & innovation legislation. Advances in AI are exciting & promising. They also bring risk. We need to embrace & democratize AI innovation while ensuring the people building AI models can speak out.

SB 53 does two things: 🧵

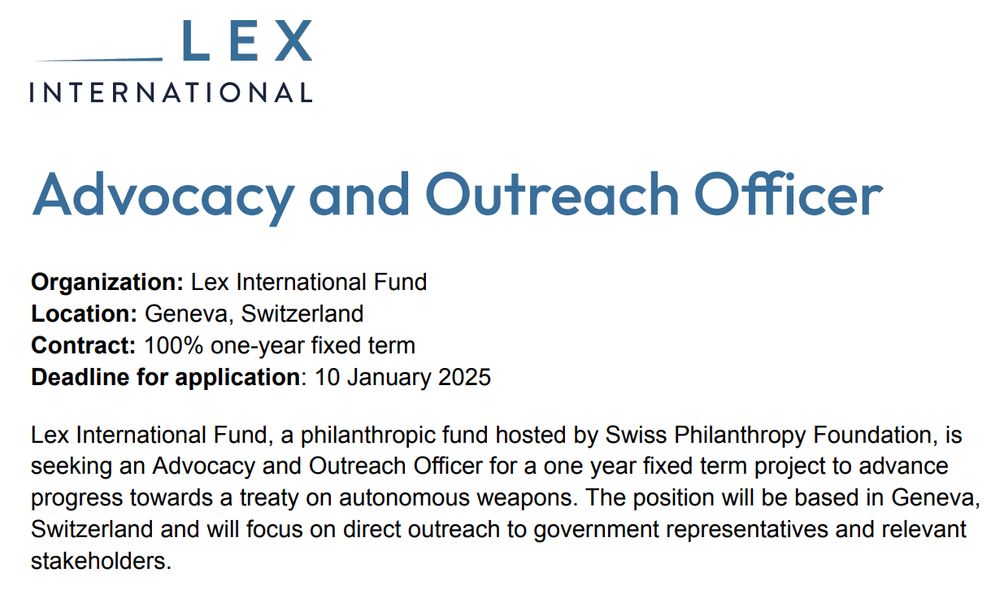

💼 Excellent career opportunity from Lex International, who are hiring an Advocacy and Outreach Officer to help advance work towards a treaty on autonomous weapons.

✍️ Apply by January 10 at the link in the replies:

Nobel Prize winner Geoffrey Hinton thinks there is a 10-20% chance AI will "wipe us all out" and calls for regulation.

Our proposal is to implement a Conditional AI Safety Treaty. Read the details below.

www.theguardian.com/technology/2...

‘Godfather of AI’ raises odds of the technology wiping out humanity over next 30 years

27.12.2024 16:12 — 👍 169 🔁 80 💬 34 📌 72

The tech industry would prefer that Hinton and other experts go away, since they tend to support AI regulation that the tech industry mostly opposes.

safesecureai.org/experts

It’s likely that Hinton lost money personally when he started warning about AI. He resigned from a Vice President position at Google. It would have been more lucrative for him to say nothing and continue his VP role there.

29.12.2024 11:46 — 👍 1 🔁 0 💬 0 📌 0

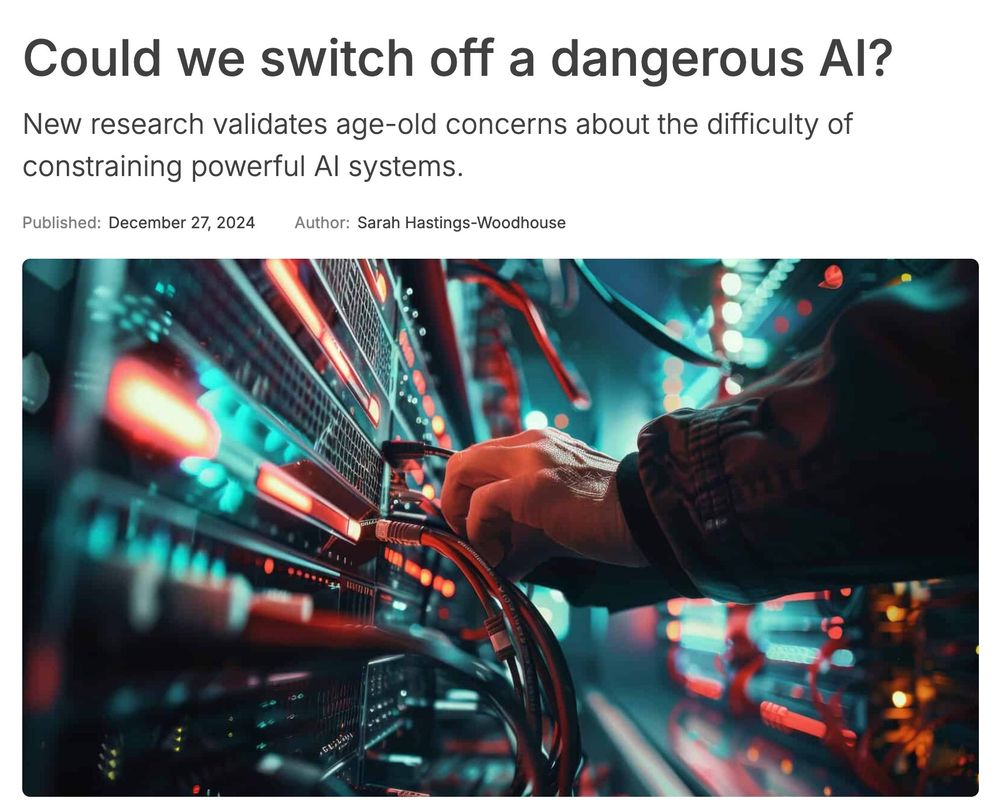

Have you heard about OpenAI's recent o1 model trying to avoid being shut down in safety evaluations? ⬇️

New on the FLI blog:

-Why might AIs resist shutdown?

-Why is this a problem?

-What other instrumental goals could AIs have?

-Could this cause a catastrophe?

🔗 Read it below:

I’m excited to share the announcement of the International Conference on Large-Scale AI Risks. The conference will take place 26-28 May 2025 at the Institute of Philosophy of KU Leuven in Belgium.

Our keynote speakers:

• Yoshua Bengio

• Dawn Song

• Iason Gabriel

Submit abstract by 15 February:

I am currently against humanity (or in fact, a couple of AI corporations) pursuing artificial general intelligence (AGI). While that view could change over time, I currently believe that a world with such powerful technologies is too fragile, and we should avoid pursuing that state altogether.

🧵

Your Bluesky Posts Are Probably In A Bunch of Datasets Now

After a machine learning librarian released and then deleted a dataset of one million Bluesky posts, several other bigger datasets have appeared in its place—including one of almost 300 million posts.

🔗 www.404media.co/bluesky-post...

it’s so over

02.12.2024 04:15 — 👍 1 🔁 0 💬 0 📌 0

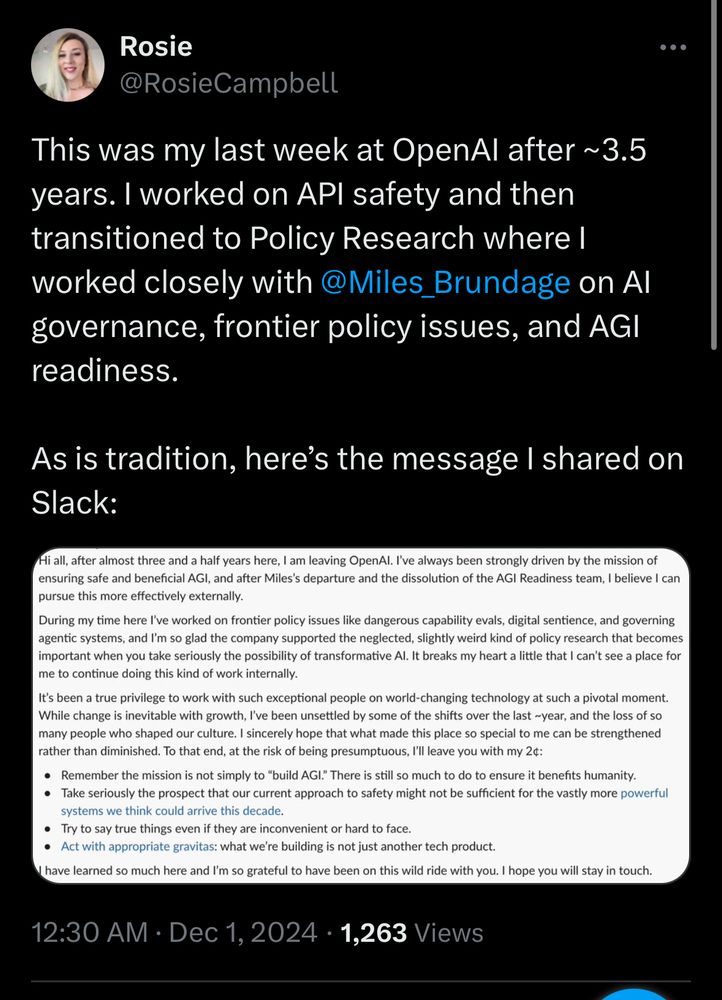

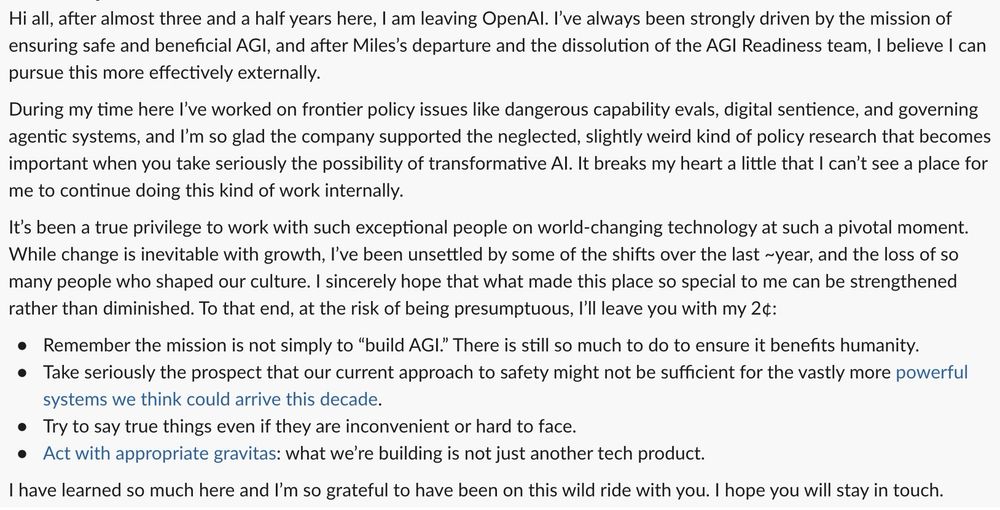

Yet another safety researcher has left OpenAI.

Rosie Campbell says she has been “unsettled by some of the shifts over the last ~year, and the loss of so many people who shaped our culture”.

She says she “can’t see a place” for her to continue her work internally.

…they invented the reserve parachute.

01.12.2024 14:24 — 👍 2 🔁 0 💬 0 📌 0

There was someone even more pessimistic than the pessimist…

01.12.2024 14:24 — 👍 2 🔁 0 💬 1 📌 0