H200 GPUs are now available on Sherlock:

news.sherlock.stanford.edu/publications...

#Sherlock #HPC #Stanford

@stanford-rc.bsky.social

Advancing science, one batch job at a time. https://srcc.stanford.edu

We're back to job #1 again!

news.sherlock.stanford.edu/publications...

#Sherlock #HPC #Stanford

Introducing a new service partition on Sherlock

news.sherlock.stanford.edu/publications...

#Sherlock #HPC #Stanford

An update about our plans to retire Sherlock 2.0

news.sherlock.stanford.edu/publications...

#Sherlock #HPC #Stanford

@stanford-rc.bsky.social was proud to host the Lustre User Group 2025 organized with OpenSFS! Thanks to everyone who participated and our sponsors! Slides are already available at srcc.stanford.edu/lug2025/agenda 🤘Lustre! #HPC #AI

04.04.2025 17:05 — 👍 10 🔁 2 💬 0 📌 1

Join us for the Lustre User Group 2025 hosted by @stanford-rc.bsky.social in collaboration with OpenSFS.

Check out the exciting agenda! 👉 srcc.stanford.edu/lug2025/agenda

Getting things ready for next week's Lustre User Group 2025 at Stanford University!

28.03.2025 19:07 — 👍 6 🔁 1 💬 0 📌 0

Doubling the FLOPs, another milestone for Sherlock's performance

news.sherlock.stanford.edu/publications...

#Sherlock #HPC #Stanford

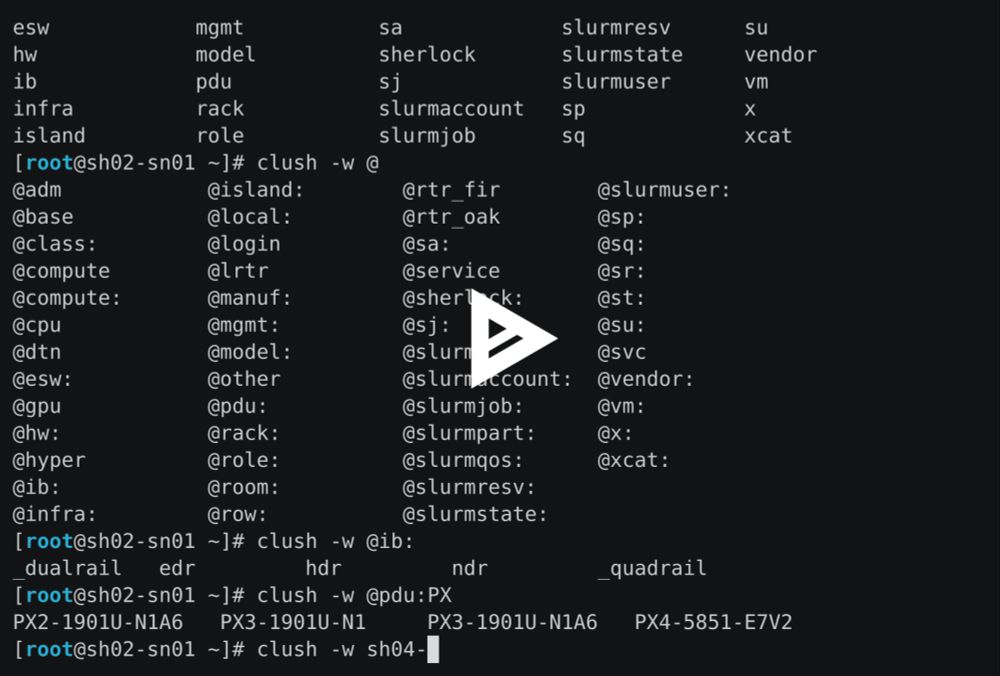

ClusterShell 1.9.3 is now available in EPEL and Debian. Not using clustershell groups on your #HPC cluster yet?! Check out the new bash completion feature! Demo recorded on Sherlock at @stanford-rc.bsky.social with ~1,900 compute nodes and many group sources!

asciinema.org/a/699526

Dual Astek SAS switches on top of TFinity tape library.

We started it! blocksandfiles.com/2025/01/28/s...

Check out my LAD'24 presentation:

www.eofs.eu/wp-content/u...

Lustre User Group 2025 save-the-date card: April 1-2, 2025 at Stanford University

Newly announced at the #SC24 Lustre BoF! Lustre User Group 2025, organized by OpenSFS, will be hosted at Stanford University on April 1-2, 2025. Save the date!

20.11.2024 14:40 — 👍 10 🔁 8 💬 0 📌 1

Just another day for Sherlock's home-built scratch Lustre filesystem at Stanford: Crushing it with 136+ GB/s aggregate read on a real research workload! 🚀 #Lustre #HPC #Stanford

11.01.2025 19:59 — 👍 24 🔁 3 💬 0 📌 0

Hello BlueSky!

20.01.2025 18:47 — 👍 9 🔁 3 💬 2 📌 0