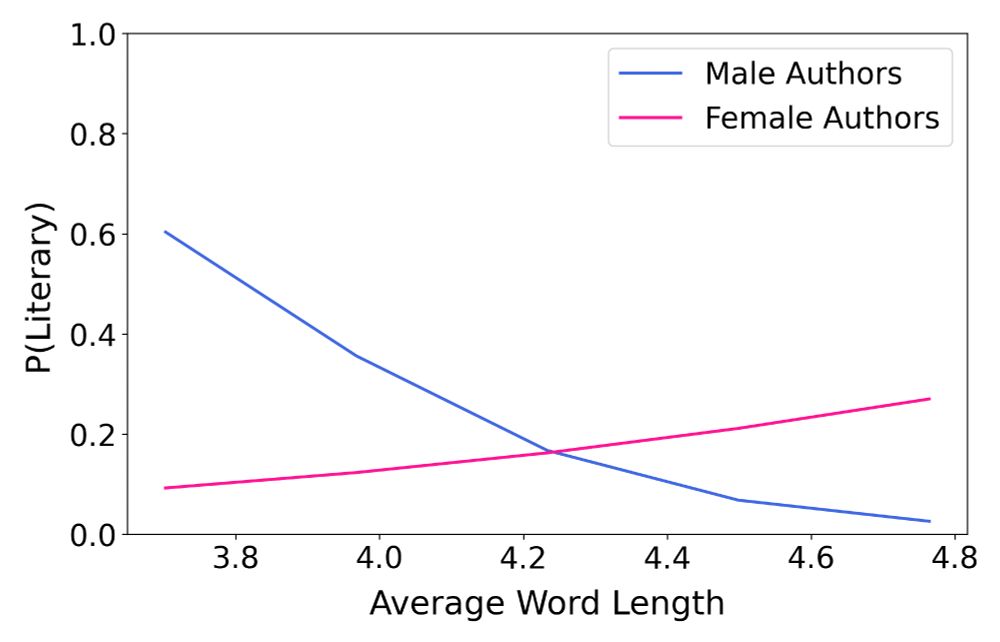

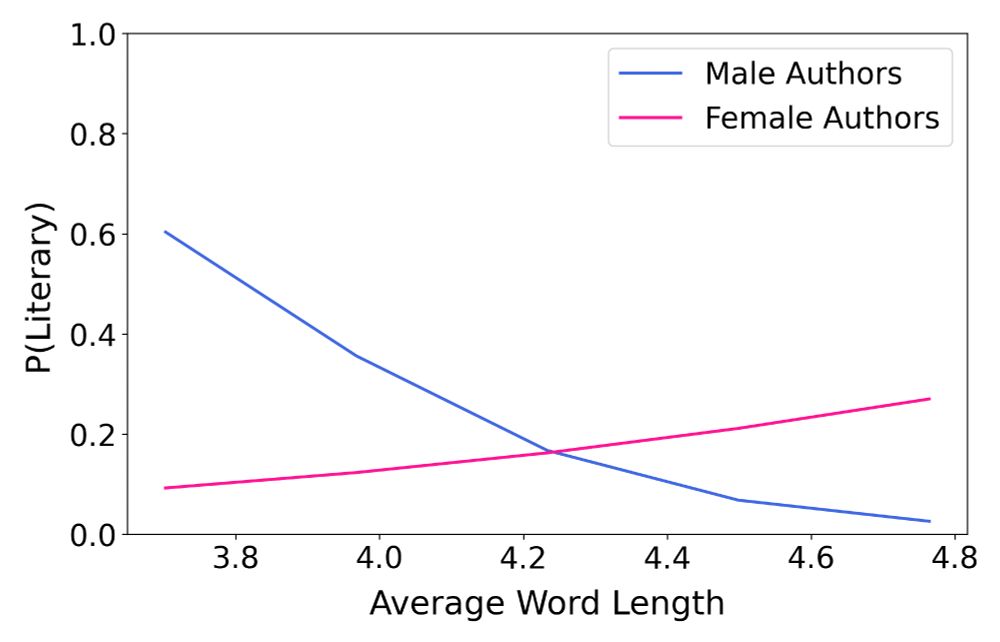

Plot illustrating how a book's probability of being classified as literary fiction varies with average word length and author gender. For female authors, longer words are correlated with an increased likelihood of literary classification. For male authors, the inverse is true.

Unsurprising: Using longer words makes female authors more “literary”

Surprising: The opposite is true for male authors

For more cool plots + findings, take a look at my #CHR2025 paper exploring the role of form vs gender in the classification of genre & literary fiction

doi.org/10.63744/Ztw...

18.11.2025 23:14 — 👍 25 🔁 6 💬 0 📌 1

I’ll be presenting this work in **2 hours** at EMNLP’s Gather Session 3. Come by to chat about fanfiction, literary notions of similarity, long-context modeling, and consent-focused data collection!

05.11.2025 22:01 — 👍 7 🔁 1 💬 0 📌 0

The performance (Spearman's rank correlation coefficient) of a number of embedding models across fine-grained semantic categories and superficial categories like author name. All models perform far worse on fine-grained categories than superficial categories. Explicit prompting for the category of interest is ineffective.

Even strong embedding models over-index on surface features—for every model tested, similarity scores are more reflective of author or fandom than semantic aspects like theme or characterization. This is true even if models are explicitly instructed to focus on these aspects!

05.11.2025 21:59 — 👍 6 🔁 0 💬 1 📌 0
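To make the metric above concrete, here is a minimal sketch of how Spearman's rank correlation could be computed between a model's pairwise similarity scores and gold similarity labels for two categories. It is not the FicSim evaluation code: the four toy "embeddings", the gold labels, and every function name below are hypothetical, chosen only to show the shape of the comparison.

```python
import math
from itertools import combinations


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def average_ranks(values):
    """1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = shared
        i = j + 1
    return ranks


def pearson(x, y):
    mean_x, mean_y = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sd_x = math.sqrt(sum((a - mean_x) ** 2 for a in x))
    sd_y = math.sqrt(sum((b - mean_y) ** 2 for b in y))
    return cov / (sd_x * sd_y)


def spearman(x, y):
    """Spearman's rho: Pearson correlation of the rank-transformed inputs."""
    return pearson(average_ranks(x), average_ranks(y))


# Hypothetical toy data: stories A and B share an author, as do C and D;
# A and C share a tone, as do B and D. The vectors stand in for embeddings.
embeddings = {"A": [1.0, 0.0], "B": [0.9, 0.1], "C": [0.0, 1.0], "D": [0.1, 0.9]}
pairs = list(combinations(sorted(embeddings), 2))
same_author = {("A", "B"), ("C", "D")}
same_tone = {("A", "C"), ("B", "D")}

model_sims = [cosine(embeddings[a], embeddings[b]) for a, b in pairs]
author_gold = [1.0 if p in same_author else 0.0 for p in pairs]
tone_gold = [1.0 if p in same_tone else 0.0 for p in pairs]

author_rho = spearman(model_sims, author_gold)
tone_rho = spearman(model_sims, tone_gold)
print(f"author: {author_rho:.2f}, tone: {tone_rho:.2f}")
```

In this toy setup the similarity scores track the author labels much more closely than the tone labels, which is the kind of gap the post describes. Real gold labels would presumably be graded rather than binary.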

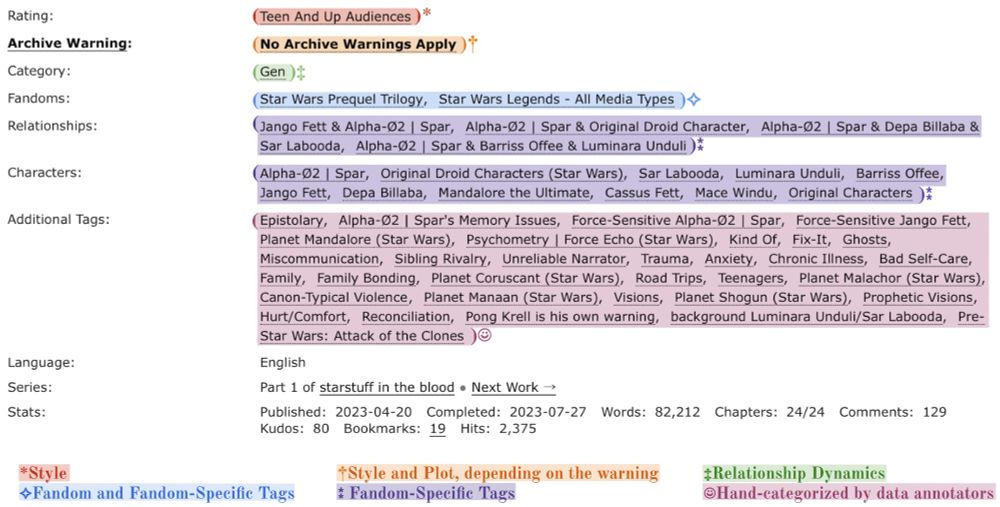

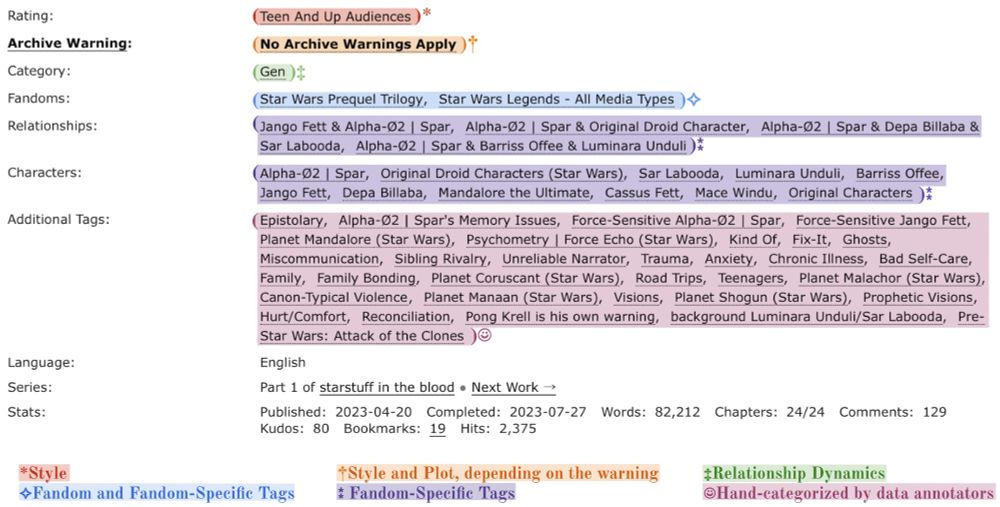

A screenshot of Archive of Our Own's story metadata for one of the stories in FicSim, annotated for different types of similarity. Some fields (like content rating and category) are always assigned to categories like style or relationship dynamic, while other groups of tags are classified individually by annotators.

All selected fanfiction has detailed metadata and author-generated tags describing the fanfic content. Informed by fan studies and digital humanities literature, we classify these into 12 categories to construct gold labels for a fine-grained semantic similarity task.

05.11.2025 21:59 — 👍 7 🔁 0 💬 1 📌 0

Histogram of story length, ranging from 10 thousand to over 400 thousand words. Most stories are between 10 and 90 thousand words.

We introduce FicSim, a dataset of 90 recently written long-form fanfics from Archive of Our Own. We *reach out to the authors for permission* to use each work and prioritize continual, informed author consent. Fics range in length from 10K to 400K+ words.

05.11.2025 21:59 — 👍 10 🔁 0 💬 1 📌 0

Figure showing a similarity comparison between three stories. Story A and story B have the same author, and story A and story C have the same tone. A human might care about which stories are tonally the most similar, but a language model's notion of similarity is strongly informed by surface-level features like small differences in writing style across authors.

Digital humanities researchers often care about fine-grained similarity based on narrative elements like plot or tone, which don’t necessarily correlate with surface-level textual features.

Can embedding models capture this? We study this in the context of fanfiction!

05.11.2025 21:59 — 👍 39 🔁 13 💬 1 📌 1

Assistant Professor, Information and Library Science, Luddy School of Informatics, Computing, and Engineering, Indiana University Bloomington

NLP / CSS PhD at Berkeley I School. I develop computational methods to study culture as a social language.

PhD student @ CMU LTI. working on text generation + long context

https://www.cs.cmu.edu/~abertsch/

Association for Computers and the Humanities, the US-based professional society for the digital humanities. #digitalhumanities

Boldly leading the way with education and research in a world awash in information and data.

Chiefly interested in applying the methods of a discipline I took one undergrad course in to topics entirely ignored in my graduate education.

🌉 bridged from ⁂ https://mastodon.social/@agoldst, follow @ap.brid.gy to interact

assistant professor, @ltiatcmu.bsky.social. machine learning: LLMs and climate. 🏳️‍🌈🏳️‍⚧️ they/them/dad (2 dogs).

pro-AI, anti-capitalist, anti-fascist.

Website: strubell.github.io

Using #AI and #NLP to study storytelling at McGillU. Author of Enumerations: Data and Literary Study and director of .txtlab.

Associate professor of computer science at Northeastern University. Natural language processing, digital humanities, OCR, computational bibliography, and computational social sciences. Artificial intelligence is an archival science.

Asst Prof @ University of Washington Information School // PhD in English from WashU in St. Louis

I’m interested in books, data, social media, and digital humanities.

They call me "Eyre Jordan" on the bball court 🏀

https://melaniewalsh.org/

☀️ Assistant Professor of Computer Science at CU Boulder 👩‍💻 NLP, cultural analytics, narratives, online communities 🌐 https://maria-antoniak.github.io 💬 books, bikes, games, art

He teaches information science at Cornell. http://mimno.infosci.cornell.edu

Uses machine learning to study literary imagination, and vice-versa. Likely to share news about AI & computational social science / Sozialwissenschaft / 社会科学

Information Sciences and English, UIUC. Distant Horizons (Chicago, 2019). tedunderwood.com

Researcher of fandom studies, fanfiction, computational literary studies and literary reception. Currently a PhD candidate at Radboud University. Views are my own.

Assistant Professor @ the University of Washington iSchool | formerly an Innovator in Residence @ Library of Congress | essays in WIRED, Gawker, The New Republic, Current Affairs, etc.

🌐 www.bcglee.com

Associate Professor, School of Information, UC Berkeley. NLP, computational social science, digital humanities.

Cultural Analytics is a platinum open-access journal dedicated to the computational study of culture.

Postdoc at UW NLP 🏔️. #NLProc, computational social science, cultural analytics, responsible AI. she/her. Previously at Berkeley, Ai2, MSR, Stanford. Incoming assistant prof at Wisconsin CS. lucy3.github.io/prospective-students.html

CHR2025 will take place in Luxembourg, from 9-12 December 2025. Stay tuned!

https://2025.computational-humanities-research.org