Read our paper, or reach out to me and my collaborators if you are interested. This work wouldn't have been possible without my collaborators: @aditijc.bsky.social, Rayhan Zirvi, Abbas Mammadov, @jiacheny.bsky.social, Chuwei Wang, @anima-anandkumar.bsky.social 17/

12.06.2025 15:47 — 👍 0 🔁 0 💬 0 📌 0

Takeaway II: Equivariance is one example of global properties that can be used to regularize diffusion models; one may realize other forms of regularization to reweight and penalize trajectories deviating from the data manifold. 16/

12.06.2025 15:47 — 👍 0 🔁 0 💬 1 📌 0

Takeaway I: EquiReg provides a new lens to correct diffusion-based inverse solvers, not by rewriting the models, but by guiding them with symmetry. The key is to find functions that are Manifold-Preferential Equivariant, showing low equivariance error for desired solutions. 15/

12.06.2025 15:47 — 👍 0 🔁 0 💬 1 📌 0

Our preliminary results show that EquiReg can also be used to improve diffusion-based text-to-image guidance and generate images that are more natural-looking and feasible (see the paper for more visualizations). 14/

12.06.2025 15:47 — 👍 0 🔁 0 💬 1 📌 0

Improved PDE reconstruction performance: EquiReg enhances performance on PDE solving tasks. For instance, Equi-FunDPS reduces the error of FunDPS on Helmholtz and Navier-Stokes inverse problems. 13/

12.06.2025 15:47 — 👍 1 🔁 0 💬 1 📌 0

EquiReg improves the perceptual quality in image restoration tasks. 12/

12.06.2025 15:47 — 👍 0 🔁 0 💬 1 📌 0

Improved image restoration performance: We demonstrate that EquiReg significantly improves performance on linear and nonlinear image restoration tasks. EquiReg also consistently shows improvement in performance across many noise levels. 11/

12.06.2025 15:47 — 👍 0 🔁 0 💬 1 📌 0

We develop flexible plug-and-play losses that can be integrated into a variety of pixel- and latent-space diffusion models for inverse problems. EquiReg guides the sampling trajectory toward symmetry-preserving regions that lie close to the data manifold, improving posterior sampling. 10/

12.06.2025 15:47 — 👍 0 🔁 0 💬 1 📌 0
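A toy sketch of the plug-and-play idea in 10/: take a gradient step on a data-fidelity term plus a weighted equivariance penalty. This is not the paper's loss or sampler, and no diffusion model is involved. The names `shift`, `flip`, `data_fidelity`, `numerical_grad`, and `regularized_step`, the mean-only measurement, and the finite-difference gradient are all assumptions made for illustration.

```python
def shift(x):
    """Toy function f (assumed): cyclic shift by one position."""
    return x[-1:] + x[:-1]


def flip(x):
    """Toy symmetry transform T (assumed): reverse the signal."""
    return x[::-1]


def equivariance_penalty(x):
    """Squared equivariance error ||f(T x) - T f(x)||^2."""
    left = shift(flip(x))
    right = flip(shift(x))
    return sum((a - b) ** 2 for a, b in zip(left, right))


def data_fidelity(x, y):
    """Toy measurement model (assumed): only the mean of x is observed."""
    return (sum(x) / len(x) - y) ** 2


def numerical_grad(fn, x, eps=1e-5):
    """Central finite-difference gradient of fn at x."""
    grad = []
    for i in range(len(x)):
        up = x[:i] + [x[i] + eps] + x[i + 1:]
        down = x[:i] + [x[i] - eps] + x[i + 1:]
        grad.append((fn(up) - fn(down)) / (2 * eps))
    return grad


def regularized_step(x, y, step=0.05, weight=1.0):
    """One gradient step on data fidelity plus the weighted penalty."""
    def objective(z):
        return data_fidelity(z, y) + weight * equivariance_penalty(z)

    grad = numerical_grad(objective, x)
    return [xi - step * gi for xi, gi in zip(x, grad)]


x = [1.0, -0.5, 2.0, 0.3, -1.2, 0.8]
y = 0.5  # observed mean (toy measurement)

penalty_before = equivariance_penalty(x)
fidelity_before = data_fidelity(x, y)
for _ in range(200):
    x = regularized_step(x, y)
penalty_after = equivariance_penalty(x)
fidelity_after = data_fidelity(x, y)
```

In this toy, the penalty pulls the iterate toward signals on which `shift` and `flip` commute, while the fidelity term keeps it consistent with the measurement. Setting `weight=0.0` recovers a plain data-fidelity step.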

We present two strategies for finding MPE functions:

a) training induced: equivariance emerges in encoder-decoder architectures trained with symmetry-preserving augmentations,

b) data inherent: MPE arises from inherent data symmetries, commonly observed in physical systems. 9/

12.06.2025 15:47 — 👍 0 🔁 0 💬 1 📌 0

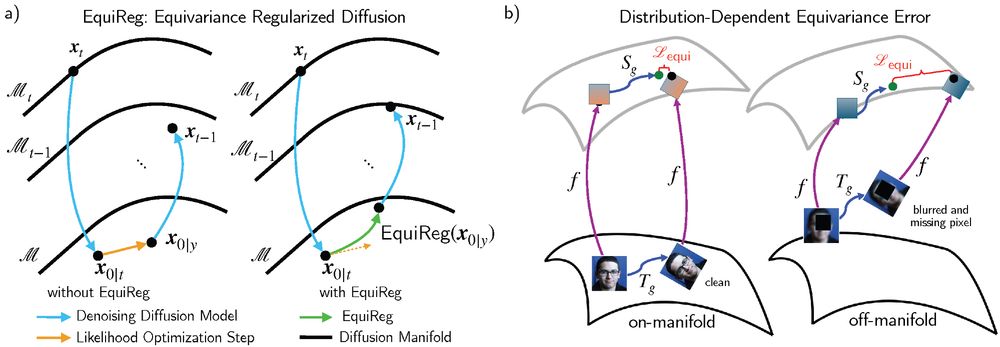

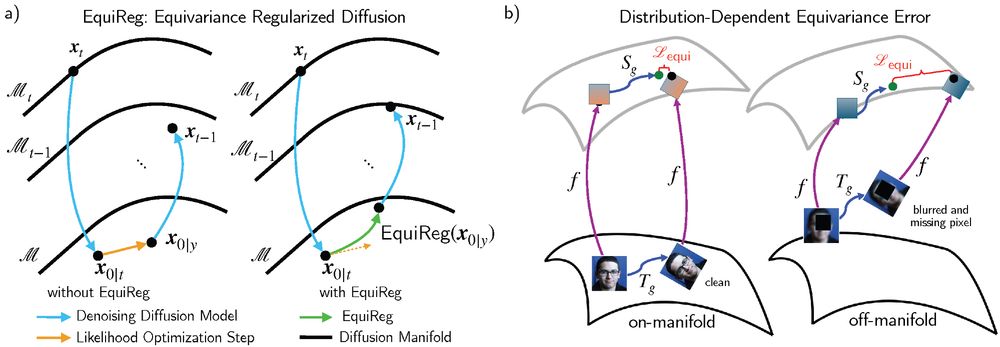

We introduce Manifold-Preferential Equivariant (MPE) functions, which exhibit low equivariance error on the support of the data manifold (in-distribution) and higher error off-manifold (out-of-distribution). Our proposed regularization, EquiReg, is based on this MPE property. 8/

12.06.2025 15:47 — 👍 0 🔁 0 💬 1 📌 0
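To make the MPE property in 8/ concrete, here is a minimal sketch of an equivariance error, ||f(T x) - T f(x)||^2, evaluated on one on-manifold and one off-manifold sample. The function `shift`, the transform `flip`, and the choice of constant signals as the "data manifold" are toy assumptions, not the functions or data used in the paper.

```python
import random


def equivariance_error(f, transform, x):
    """Squared error ||f(T x) - T f(x)||^2 for a single transform T."""
    left = f(transform(x))
    right = transform(f(x))
    return sum((a - b) ** 2 for a, b in zip(left, right))


def shift(x):
    """Toy function f (assumed): cyclic shift by one position."""
    return x[-1:] + x[:-1]


def flip(x):
    """Toy symmetry transform T (assumed): reverse the signal."""
    return x[::-1]


rng = random.Random(0)

# Toy "data manifold" (assumed): constant signals.
on_manifold = [0.7] * 8
# An arbitrary signal that is not constant, standing in for off-manifold.
off_manifold = [rng.gauss(0.0, 1.0) for _ in range(8)]

err_on = equivariance_error(shift, flip, on_manifold)
err_off = equivariance_error(shift, flip, off_manifold)

print("on-manifold error: ", err_on)
print("off-manifold error:", err_off)
```

Here `shift` commutes with `flip` on constant signals but not on generic ones, so the error is zero on the toy manifold and positive off it, which is the qualitative behavior an MPE function is described as having.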

EquiReg with distribution-dependent equivariance errors: We propose a new class of regularizers grounded in distribution-dependent equivariance error, a formalism that quantifies how symmetry violations vary depending on whether samples lie on- or off-manifold. 7/

12.06.2025 15:47 — 👍 0 🔁 0 💬 1 📌 0

We propose EquiReg, taking equivariance as one such global property that can enforce geometric symmetries. 6/

12.06.2025 15:47 — 👍 0 🔁 0 💬 1 📌 0

This addresses errors arising in the likelihood score due to a poor posterior approximation, as well as prior score errors resulting from off-manifold trajectories. To realize such a regularizer, we seek an approach for manifold alignment via global properties of the data distribution. 5/

12.06.2025 15:47 — 👍 0 🔁 0 💬 1 📌 0

We reinterpret the reversed conditional diffusion as a Wasserstein-2 gradient flow minimizing a functional over sample trajectories. This suggests employing a regularizer that reweights and penalizes trajectories deviating from the data manifold (see Propositions 4.1-4.2). 4/

12.06.2025 15:47 — 👍 0 🔁 0 💬 1 📌 0

This breaks down for highly complex distributions: the conditional expectation (the posterior mean) is a weighted average of all possible x0; hence, from the manifold perspective, the posterior mean may end up off-manifold. 3/

12.06.2025 15:47 — 👍 0 🔁 0 💬 1 📌 0
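A small worked example of the point in 3/, under toy assumptions: the "data manifold" is the unit circle, and two equally weighted candidates for x0 both lie on it. Their weighted average does not. The helper names and the circle are illustrative choices only.

```python
import math


def point_on_circle(angle):
    """A point on the unit circle, the toy data manifold (assumed)."""
    return (math.cos(angle), math.sin(angle))


def weighted_mean(points, weights):
    """Weighted average of 2-D points, i.e. a toy posterior mean."""
    total = sum(weights)
    return tuple(
        sum(w * p[d] for p, w in zip(points, weights)) / total
        for d in range(2)
    )


def distance_to_circle(p):
    """Distance from a 2-D point to the unit circle."""
    return abs(math.hypot(p[0], p[1]) - 1.0)


# Two plausible x0 candidates, both exactly on the manifold.
candidates = [point_on_circle(0.0), point_on_circle(math.pi / 2)]
weights = [0.5, 0.5]

mean = weighted_mean(candidates, weights)
gap = distance_to_circle(mean)

print("posterior mean:", mean)
print("distance from manifold:", gap)
```

Every candidate has zero distance to the circle, yet their average sits strictly inside it, so an estimate built on that average starts off-manifold.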

Inverse problems are ill-posed: the inversion process can have many solutions; hence, they require prior information about the desired solution. SOTA methods use diffusion models as learned priors. However, they rely on an isotropic Gaussian approximation of the posterior. 2/

12.06.2025 15:47 — 👍 0 🔁 0 💬 1 📌 0

🚨We propose EquiReg, a generalized regularization framework that uses symmetry in generative diffusion models to improve solutions to inverse problems. arxiv.org/abs/2505.22973

@aditijc.bsky.social, Rayhan Zirvi, Abbas Mammadov, @jiacheny.bsky.social, Chuwei Wang, @anima-anandkumar.bsky.social 1/

12.06.2025 15:47 — 👍 3 🔁 1 💬 1 📌 0

I am joining @ualberta.bsky.social as a faculty member and @amiithinks.bsky.social!

My research group is recruiting MSc and PhD students at the University of Alberta in Canada. Research topics include generative modeling, representation learning, interpretability, inverse problems, and neuroAI.

29.05.2025 18:53 — 👍 11 🔁 2 💬 1 📌 0

Our decoding package also offers a movement-imitated augmentation framework (VARS-fUSI++). By augmenting the decoder's training images with small random rotations and translations, you can increase the decoder's robustness and, in turn, its performance. 12/

28.04.2025 17:55 — 👍 0 🔁 0 💬 0 📌 0
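A rough sketch of the kind of augmentation described in 12/: apply a small random rotation and translation to a 2-D image. This is not the VARS-fUSI++ API. The function names, the nearest-neighbor resampling, zero padding, and the default ranges are all assumptions chosen to keep the example dependency-free.

```python
import math
import random


def rotate_translate(image, angle_deg, dx, dy):
    """Rotate about the image center, then shift by (dx, dy) pixels.

    Uses nearest-neighbor sampling; pixels mapped from outside are set to 0.
    """
    height, width = len(image), len(image[0])
    cy, cx = (height - 1) / 2, (width - 1) / 2
    cos_a = math.cos(math.radians(angle_deg))
    sin_a = math.sin(math.radians(angle_deg))
    out = [[0.0] * width for _ in range(height)]
    for row in range(height):
        for col in range(width):
            # Inverse map: undo the translation, then undo the rotation.
            yy = row - dy - cy
            xx = col - dx - cx
            src_col = round(cos_a * xx + sin_a * yy + cx)
            src_row = round(-sin_a * xx + cos_a * yy + cy)
            if 0 <= src_row < height and 0 <= src_col < width:
                out[row][col] = image[src_row][src_col]
    return out


def augment(image, rng, max_angle_deg=5.0, max_shift=1):
    """Draw a small random rotation and shift (hypothetical defaults)."""
    angle = rng.uniform(-max_angle_deg, max_angle_deg)
    dx = rng.randint(-max_shift, max_shift)
    dy = rng.randint(-max_shift, max_shift)
    return rotate_translate(image, angle, dx, dy)


rng = random.Random(0)
image = [[float(r * 8 + c) for c in range(8)] for r in range(8)]

identity = rotate_translate(image, 0.0, 0, 0)
shifted = rotate_translate(image, 0.0, 1, 0)
augmented = augment(image, rng)
```

Each call to `augment` yields a slightly perturbed copy of the input, which can be added to the decoder's training set alongside the original.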

We show that VARS-fUSI can be generalized to human participants while maintaining decodable behavior-correlated information, generating decoding accuracies and activation maps comparable to ground truth. 11/

28.04.2025 17:55 — 👍 0 🔁 0 💬 1 📌 0

In a motor decoding experiment, the direction of planned saccadic eye movements can be decoded as accurately from VARS-fUSI as from ground truth. The decoding relied on the same region of posterior parietal cortex, showing that VARS-fUSI conserves behavioral information. 10/

28.04.2025 17:55 — 👍 0 🔁 0 💬 1 📌 0

Despite the extensive reduction of required pulses, VARS-fUSI can still be used reliably for low-latency and efficient behavioral decoding in brain-computer interfaces (BCIs). We demonstrate this in monkey and human experiments. 9/

28.04.2025 17:55 — 👍 0 🔁 0 💬 1 📌 0

Why does VARS-fUSI perform better than other AI models?

- It respects the complex-valued nature of the data,

- It treats the temporal aspect of the data as a continuous function using neural operators,

- It captures decomposed spatially local and temporally global features. 8/

28.04.2025 17:55 — 👍 0 🔁 0 💬 1 📌 0

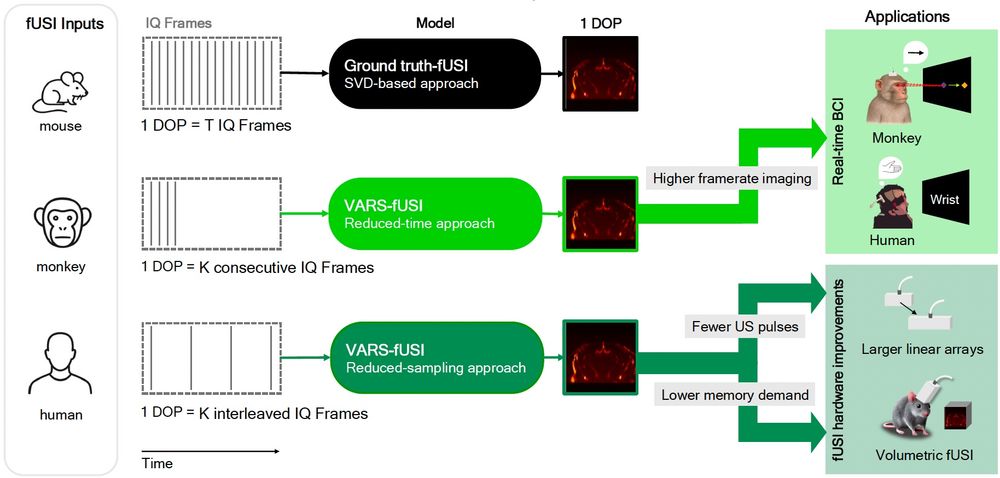

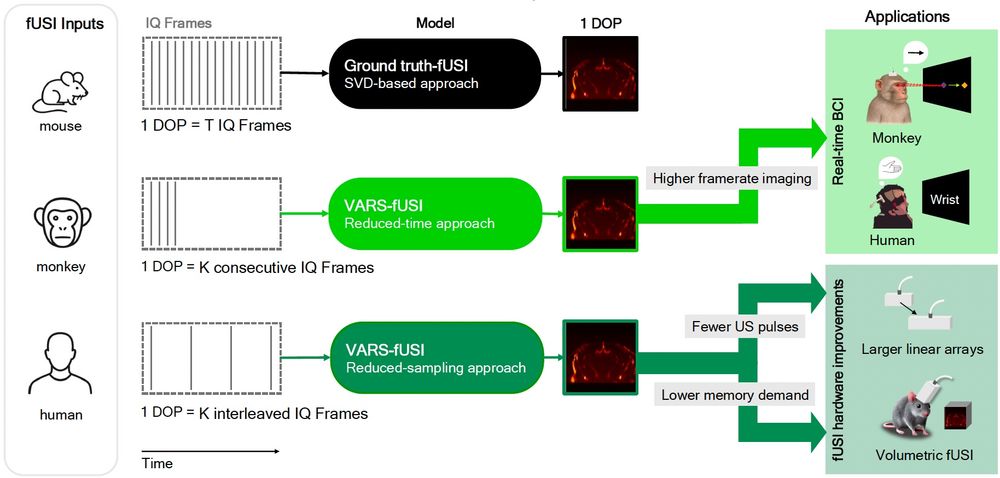

What does VARS-fUSI offer to practitioners using fUSI in clinical or neuroscience research?

VARS-fUSI offers a training pipeline, a fine-tuning procedure, and an accelerated, low-latency online imaging system. You can use VARS-fUSI to reduce the acquisition duration or rate. 7/

28.04.2025 17:55 — 👍 0 🔁 0 💬 1 📌 0

VARS-fUSI is SOTA!

We applied VARS-fUSI to brain images collected from mice, monkeys, and humans. Our method achieves state-of-the-art performance and shows superior generalization to unseen sessions, to new animals, and even across species. 6/

28.04.2025 17:55 — 👍 0 🔁 0 💬 1 📌 0

What are the challenges of fUSI?

fUSI is a promising neuroimaging method, inferring neural activity by detecting cerebral blood volume changes. Current methods require hundreds of frames, leading to high computational cost and memory demands.

VARS-fUSI addresses these challenges. 5/

28.04.2025 17:55 — 👍 0 🔁 0 💬 1 📌 0

Change the sampling rate or duration of your data acquisition at inference time, and VARS-fUSI will still provide high-quality images. This is not possible with current AI models. VARS-fUSI reconstructs images using 10% of the time or sampling rate typically needed per image. 4/

28.04.2025 17:55 — 👍 1 🔁 0 💬 1 📌 0

This is part of a collaboration among three research labs at @caltech.edu. Check out our manuscript and source code for VARS-fUSI.

bioRxiv: biorxiv.org/content/10.1...

code: github.com/neuraloperat... 3/

28.04.2025 17:55 — 👍 0 🔁 0 💬 1 📌 0

Joint work with Lydia Lin, Thierri Callier, Peter Wang, Sanvi Pal, Aditi Chandrashekar, Claire Rabut, @zongyili.bsky.social, Chase Blagden, @sumnerln.bsky.social, @azizzadenesheli.bsky.social, Charles Liu, @mikhailshapiro.bsky.social, Richard Andersen, @anima-anandkumar.bsky.social. 2/

28.04.2025 17:55 — 👍 2 🔁 2 💬 1 📌 0

We have released VARS-fUSI: Variable sampling for fast and efficient functional ultrasound imaging (fUSI) using neural operators.

The first deep learning fUSI method to allow for different sampling durations and rates during training and inference. biorxiv.org/content/10.1... 1/

28.04.2025 17:55 — 👍 12 🔁 2 💬 1 📌 0
