Artificial Intelligence and Democratic Freedoms

Special thanks to @katygb.bsky.social and @sethlazar.org for organizing a fantastic @knightcolumbia essay series on AI and Democratic freedoms, and other authors of the essay series for valuable discussion + feedback. Check out the other essays here! knightcolumbia.org/research/art....

01.08.2025 15:43 — 👍 1 🔁 0 💬 0 📌 0

Finally, autonomy levels can help with agentic safety evaluations and setting thresholds for autonomy risks in safety frameworks. How do we know what level an agent is operating at, and what appropriate risk mitigations should be put in place? Read our paper for more!

01.08.2025 15:42 — 👍 2 🔁 0 💬 1 📌 0

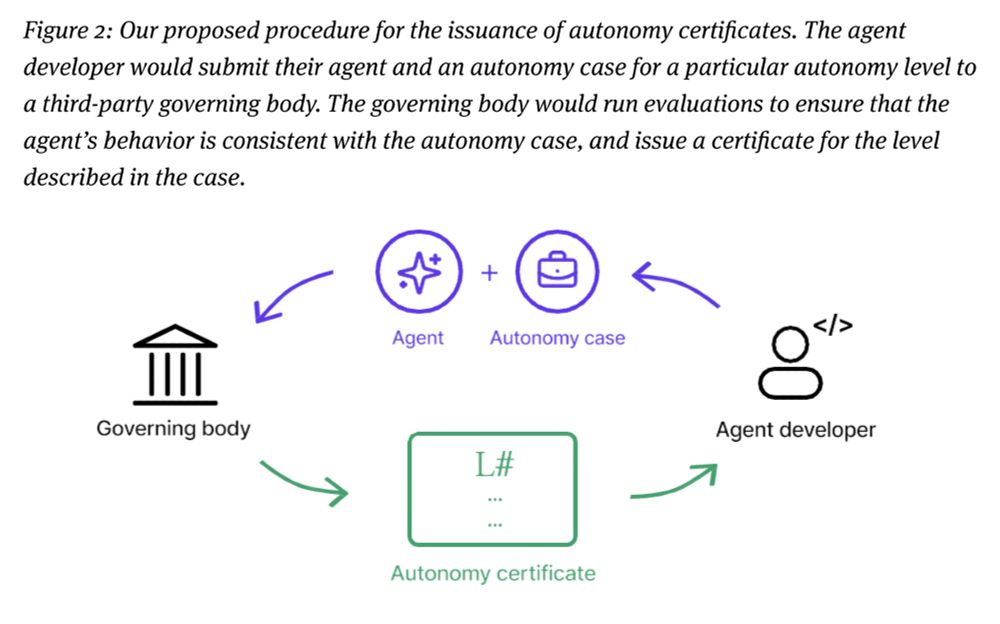

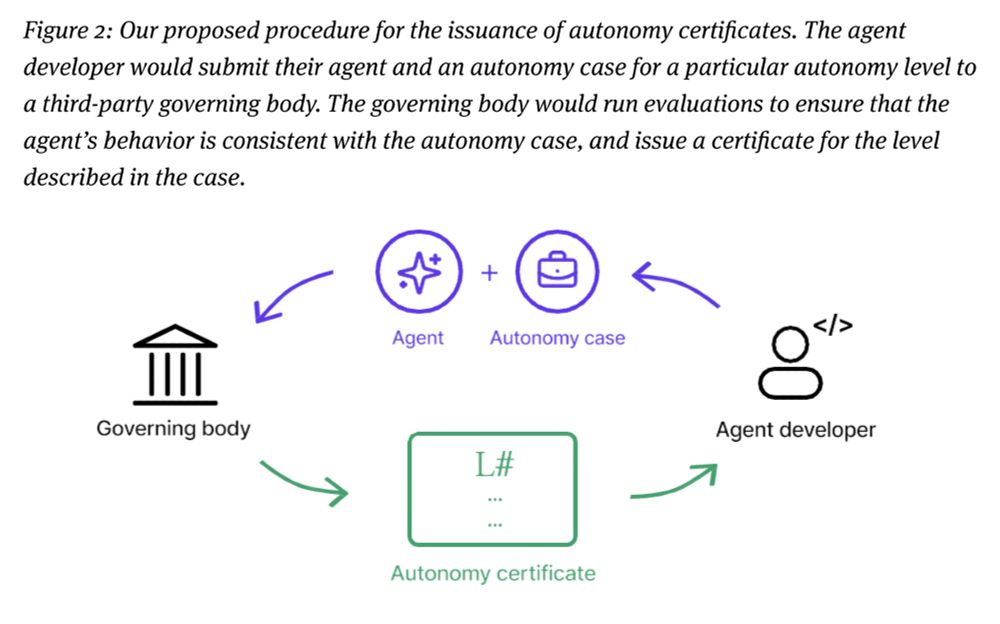

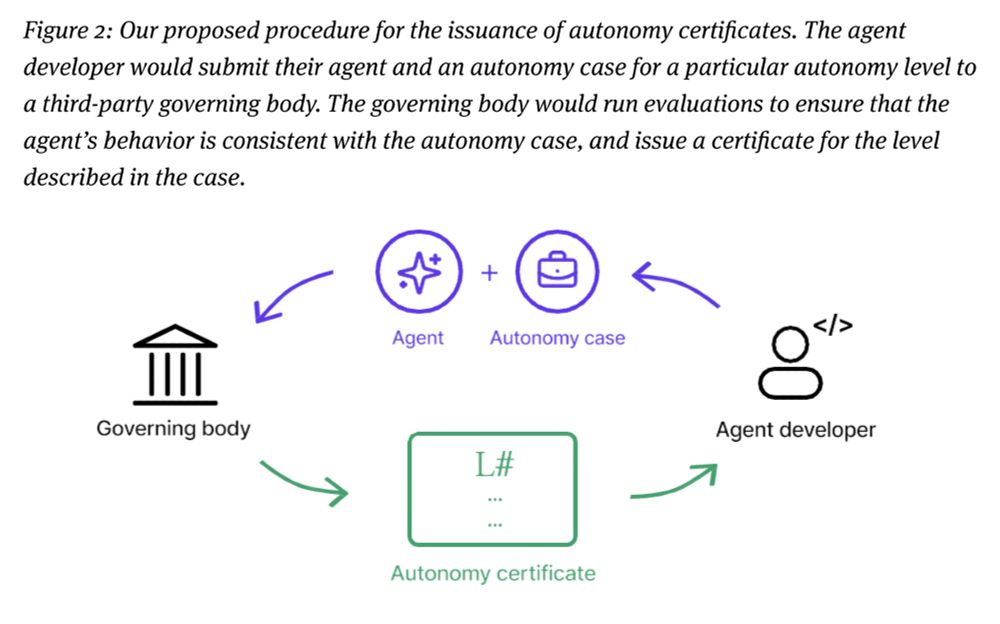

We also introduce **agent autonomy certificates** to govern agent behavior in single- and multi-agent settings. Certificates constrain the space of permitted actions and help ensure effective and safe collaborative behaviors in agentic systems.

01.08.2025 15:42 — 👍 1 🔁 0 💬 1 📌 0
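As a rough illustration of how a certificate could "constrain the space of permitted actions", here is a minimal sketch. The `AutonomyCertificate` class, its fields, and the example action names are all hypothetical and not taken from the paper, whose certificates may be structured quite differently.

```python
from dataclasses import dataclass
from typing import FrozenSet


@dataclass(frozen=True)
class AutonomyCertificate:
    """Hypothetical certificate: caps the autonomy level and lists allowed actions."""
    agent_id: str
    max_level: int                      # 1-5, following the levels in this thread
    permitted_actions: FrozenSet[str]   # illustrative action names, not from the paper

    def permits(self, action: str, level: int) -> bool:
        # An action is allowed only if it is explicitly listed and the agent
        # is operating at or below the certified autonomy level.
        return action in self.permitted_actions and level <= self.max_level


# Example: an email agent certified up to Level 3 (user as a consultant).
cert = AutonomyCertificate(
    agent_id="email-agent",
    max_level=3,
    permitted_actions=frozenset({"read_inbox", "draft_reply"}),
)
```

Under these assumptions, `cert.permits("draft_reply", 3)` is allowed, while an unlisted action or a request to operate at Level 4 is refused.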

Why have this framework at all?

We argue that agent autonomy can be a deliberate design decision, independent of the agent's capability or environment. This framework offers a practical guide for designing human-agent & agent-agent collaboration for real-world deployments.

01.08.2025 15:42 — 👍 0 🔁 0 💬 1 📌 0

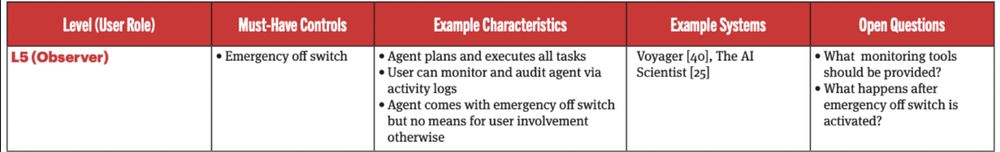

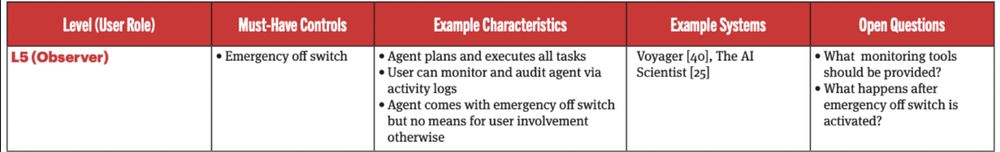

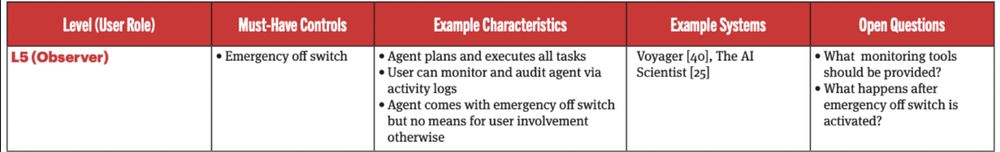

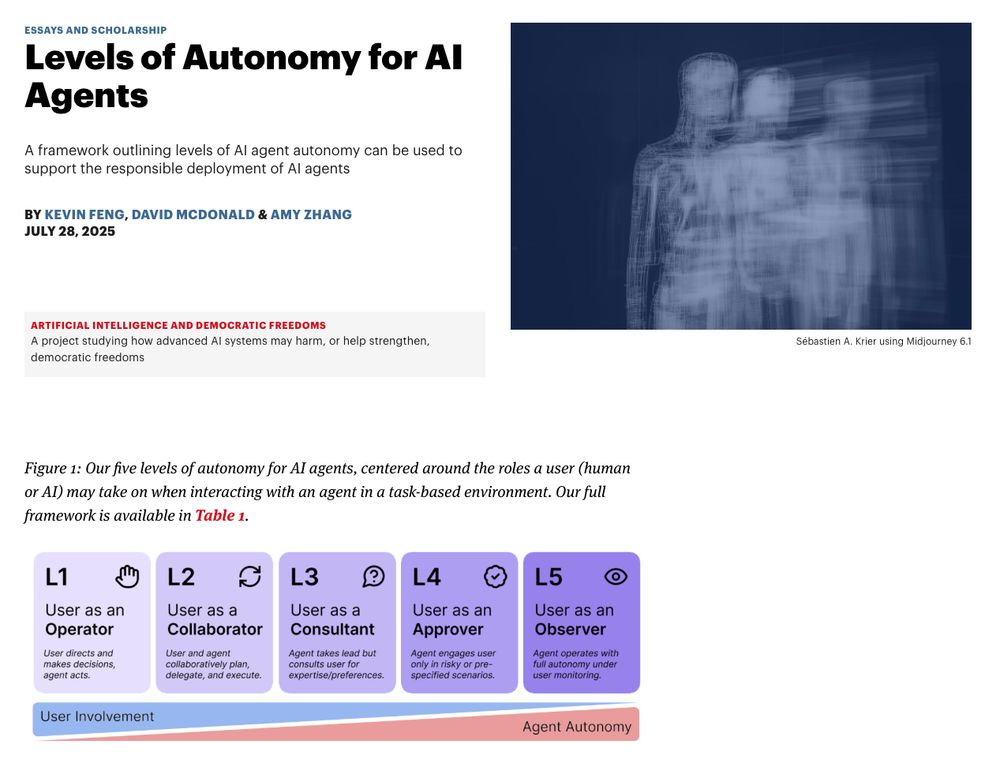

Level 5: user as an observer.

The agent autonomously operates over long time horizons and does not seek user involvement at all. The user passively monitors the agent via activity logs and has access to an emergency off switch.

Example: Sakana AI's AI Scientist.

01.08.2025 15:42 — 👍 0 🔁 0 💬 1 📌 0

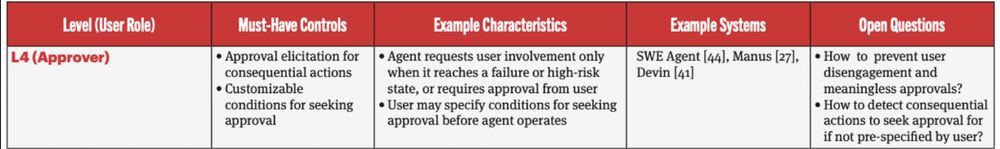

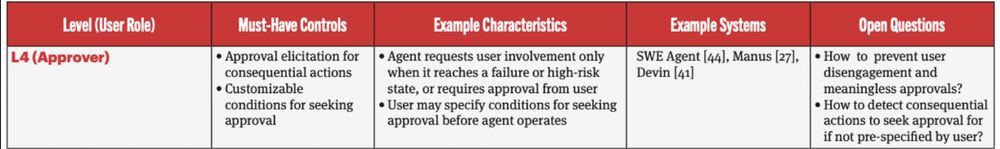

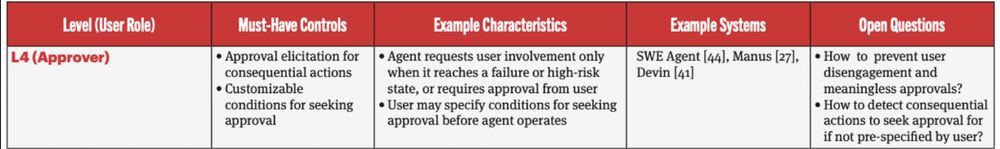

Level 4: user as an approver.

The agent only requests user involvement when it needs approval for a high-risk action (e.g., writing to a database) or when it fails and needs user assistance.

Example: most coding agents (Cursor, Devin, GH Copilot Agent, etc.).

01.08.2025 15:41 — 👍 0 🔁 0 💬 1 📌 0
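The Level 4 pattern (ask the user only for high-risk actions, or when the agent fails) can be sketched roughly as below. The function name, the `HIGH_RISK_ACTIONS` set, and the callback signatures are assumptions made for illustration; they do not describe how any of the named coding agents work.

```python
from typing import Callable

# Assumption: which actions count as high-risk is decided by the deployer.
HIGH_RISK_ACTIONS = {"write_database"}


def execute_with_approval(
    action: str,
    run: Callable[[], str],
    ask_user: Callable[[str], bool],
) -> str:
    """Run an action, involving the user only when Level 4 calls for it."""
    # High-risk actions need explicit approval before they run.
    if action in HIGH_RISK_ACTIONS and not ask_user(action):
        return "blocked"
    try:
        return run()
    except Exception:
        # On failure the agent hands control back to the user.
        return "needs user assistance"
```

Low-risk actions run without any prompt, so the user is interrupted only at the two points the Level 4 description names.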

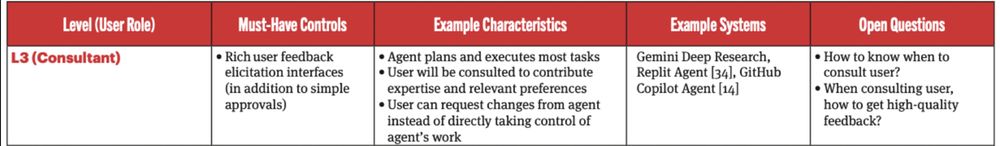

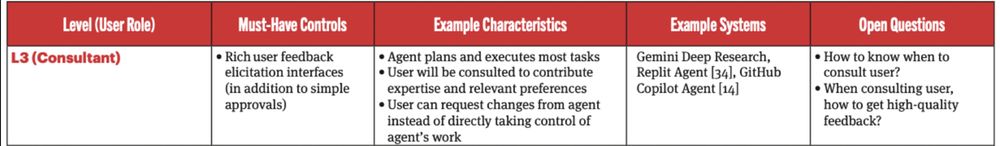

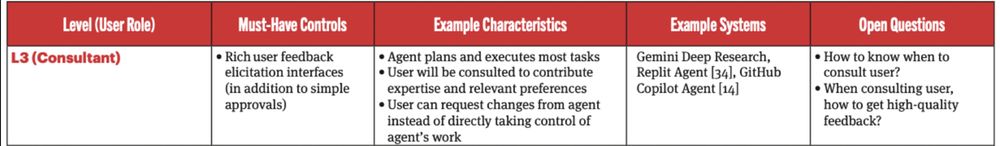

Level 3: user as a consultant.

The agent takes the lead in task planning and execution, but actively consults the user to elicit rich preferences and feedback. Unlike L1 & L2, the user can no longer directly control the agent's workflow.

Example: deep research systems.

01.08.2025 15:41 — 👍 0 🔁 0 💬 1 📌 0

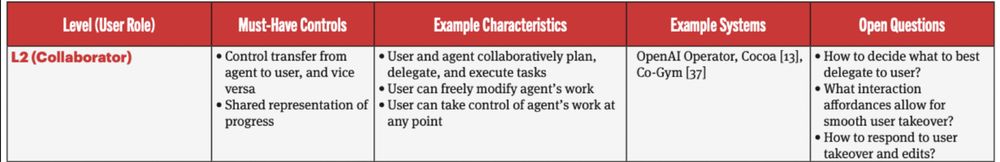

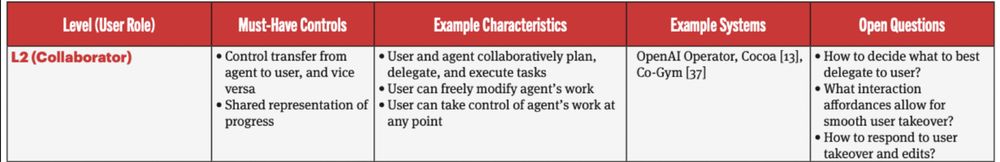

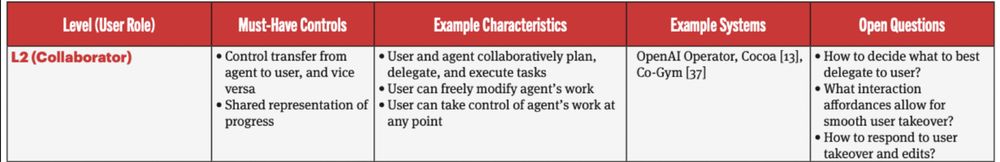

Level 2: user as a collaborator.

The user and the agent collaboratively plan and execute tasks, handing off information to each other and leveraging shared environments and representations to create common ground.

Example: Cocoa (arxiv.org/abs/2412.10999).

01.08.2025 15:41 — 👍 0 🔁 0 💬 1 📌 0

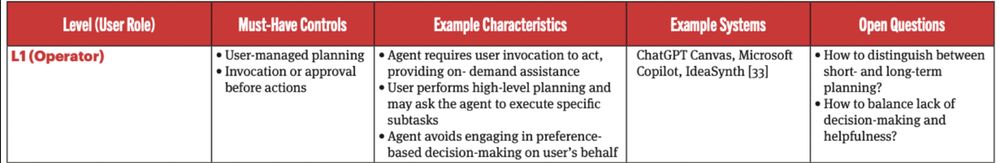

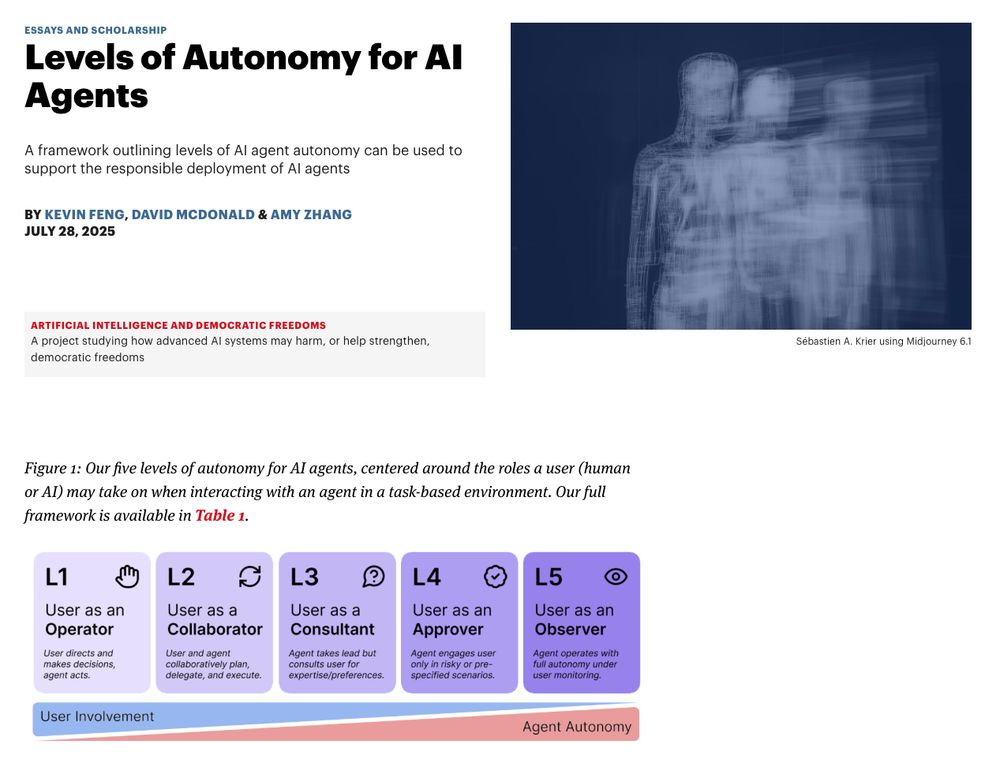

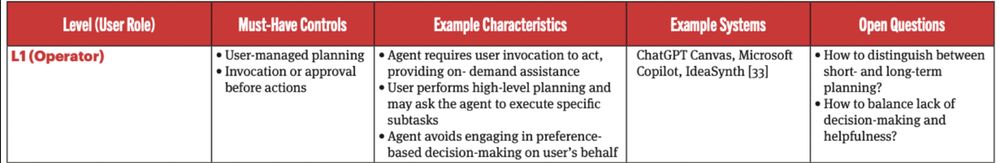

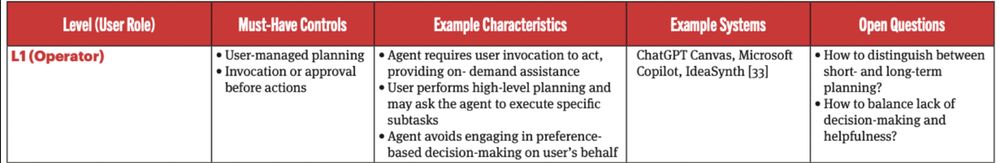

Level 1: user as an operator.

The user is in charge of high-level planning to steer the agent. The agent acts when directed, providing on-demand assistance.

Example: your average "copilot" that drafts your emails when you ask it to.

01.08.2025 15:40 — 👍 0 🔁 0 💬 1 📌 0
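For readers who prefer code, the five levels above can be written down as a small lookup. This is only a sketch: the enum, the mapping, and the helper name are illustrative choices, not an API from the paper.

```python
from enum import IntEnum


class AutonomyLevel(IntEnum):
    """The five levels from the thread; a higher value means more agent autonomy."""
    OPERATOR = 1      # user steers, agent acts when directed
    COLLABORATOR = 2  # user and agent plan and execute together
    CONSULTANT = 3    # agent leads, consults user for preferences and feedback
    APPROVER = 4      # agent asks only for high-risk approvals or on failure
    OBSERVER = 5      # agent runs unattended; user monitors logs, has an off switch


# The user's role at each level, as named in the thread.
USER_ROLE = {level: level.name.lower() for level in AutonomyLevel}


def user_controls_workflow(level: AutonomyLevel) -> bool:
    # Per the Level 3 post, direct user control of the agent's workflow
    # ends after Levels 1 and 2.
    return level <= AutonomyLevel.COLLABORATOR
```

Using an ordered enum makes the tradeoff explicit: as the level rises, user involvement falls.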

Levels of Autonomy for AI Agents

Web: knightcolumbia.org/content/leve....

arXiv: arxiv.org/abs/2506.12469.

Co-authored w/ David McDonald and @axz.bsky.social.

Summary thread below.

01.08.2025 15:40 — 👍 0 🔁 0 💬 1 📌 0

Screenshot of a paper on a webpage, with a figure showing 5 levels of autonomy for AI agents and the tradeoff between user involvement and agent autonomy as the levels increase.

📢 New paper, published by @knightcolumbia.org

We often talk about AI agents augmenting vs. automating work, but what exactly can different configurations of human-agent interaction look like? We introduce a 5-level framework for AI agent autonomy to unpack this.

🧵👇

01.08.2025 15:28 — 👍 19 🔁 5 💬 1 📌 1

Hi Dave, this is super cool! I'm currently working on a research prototype that brings domain experts together to draft policies for AI behavior and would love to chat more, esp about how I can integrate something like this

01.08.2025 00:10 — 👍 2 🔁 0 💬 0 📌 0

Levels of Autonomy for AI Agents

In an essay for our AI & Democratic Freedoms series, @kjfeng.me, @axz.bsky.social (both of @socialfutureslab.bsky.social), & David W. McDonald outline a framework for levels of #AI agent autonomy that can be used to support the responsible deployment of AI agents. knightcolumbia.org/content/leve...

28.07.2025 19:24 — 👍 3 🔁 3 💬 0 📌 0

Excited to kick off the first workshop on Sociotechnical AI Governance at #chi2025 (STAIG@CHI'25) with a morning poster session in a full house! Looking forward to more posters, discussions, and our keynote in the afternoon. Follow our schedule at chi-staig.github.io!

27.04.2025 03:07 — 👍 3 🔁 1 💬 0 📌 0

@kjfeng.me and @rockpang.bsky.social are kicking off the panel on sociotechnical AI governance!

#CHI2025

27.04.2025 00:32 — 👍 7 🔁 4 💬 0 📌 0

I'll be at CHI in-person in Yokohama 🇯🇵. Let's connect to chat about anything from designing LLM-powered UX to AI alignment + governance!

25.04.2025 15:07 — 👍 1 🔁 0 💬 0 📌 0

Thanks to my fantastic collaborators @qveraliao.bsky.social @ziangxiao.bsky.social @jennwv.bsky.social @axz.bsky.social and David McDonald. This work was done during a summer internship with the FATE team at Microsoft Research.

25.04.2025 15:07 — 👍 1 🔁 0 💬 1 📌 0

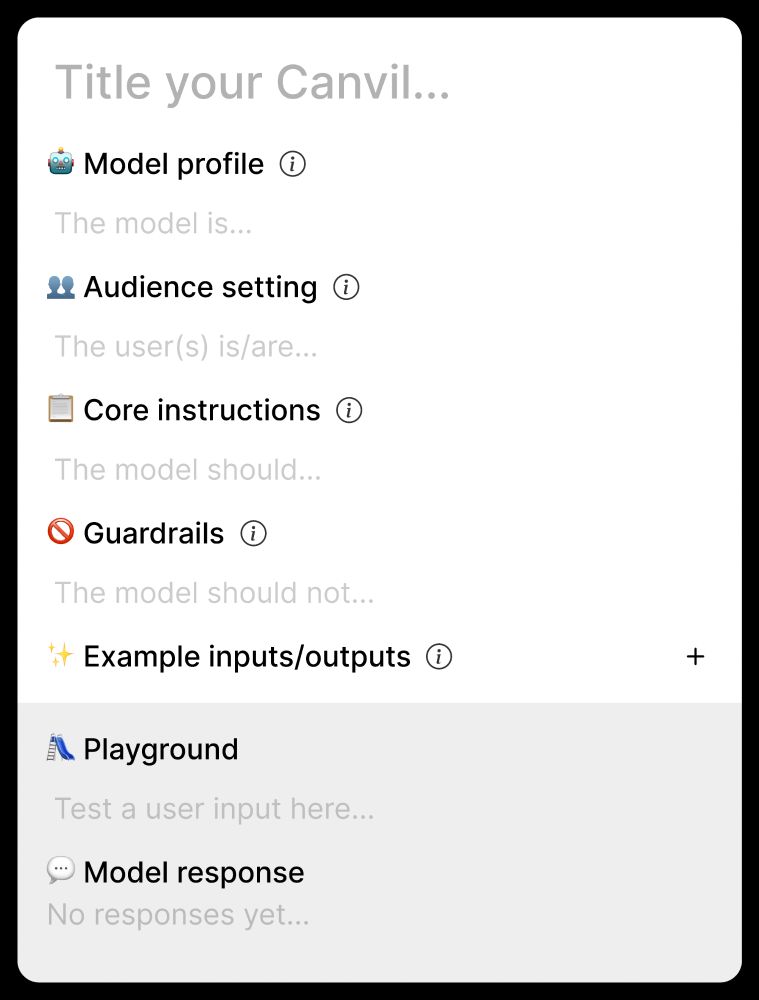

Finally, Canvil's seamless integration into Figma allowed designers to use their existing collaborative workflows to work with design and non-design teammates on model adaptation.

We believe lowering the barrier to adaptation can catalyze more thoughtfully designed LLM UX.

25.04.2025 15:05 — 👍 0 🔁 0 💬 1 📌 0

Designers also used constraints from their existing design requirements and UIs to shape model behaviors. For example, many devised ways to embed cultural preferences and customs into models based on the needs and backgrounds of their target users.

25.04.2025 15:04 — 👍 0 🔁 0 💬 1 📌 0
