How well do LLMs handle multilinguality? 🌍🤖

🔬We brought the rigor from Machine Translation evaluation to multilingual LLM benchmarking and organized the WMT25 Multilingual Instruction Shared Task spanning 30 languages and 5 subtasks.

Ready for our poster today at #COLM2025!

💭This paper has had an interesting journey. Come find out and discuss it with us! @swetaagrawal.bsky.social @kocmitom.bsky.social

Side note: being a parent in research does have its perks, poster transportation solved ✅

This project wouldn’t have been possible without the brilliant minds driving the work: Lorenzo Proietti, @sted19.bsky.social and @zouharvi.bsky.social

16.09.2025 09:51 — 👍 3 🔁 0 💬 0 📌 0

One way to raise the bar is to rethink the source selection process: instead of taking random samples, we built a model that chooses the most difficult data for translation. And we’ve already put our work into practice: this year’s WMT25 General MT test set uses our approach to make evaluation more challenging.

16.09.2025 09:51 — 👍 2 🔁 0 💬 1 📌 0

🚩Machine Translation is far from “solved” - the test sets just got too easy. 🚩

Yes, the systems are much stronger. But the other half of the story is that test sets haven’t kept up. It’s no longer enough to just take a random news article and expect systems to stumble.

Oh, and the best part: we’re releasing the weights so researchers can run wild with it. Stay tuned for our upcoming technical report!

cohere.com/blog/command...

🚀 Thrilled to share what I’ve been working on at Cohere!

What began in January as a scribble in my notebook “how challenging would it be...” turned into a fully-fledged translation model that outperforms both open and closed-source systems, including long-standing MT leaders.

A correction: we received 22 multilingual systems and only 14 bilingual ones, highlighting a shift in the field towards multilinguality.

26.08.2025 19:06 — 👍 0 🔁 0 💬 0 📌 0

We received 14 specialized systems, while 10 are multilingual. Almost all participants fine-tuned LLMs.

In contrast to previous years, constrained systems are now reaching top-tier rankings, challenging the dominance of unconstrained ones.

Stay tuned for the 20th anniversary WMT conference.

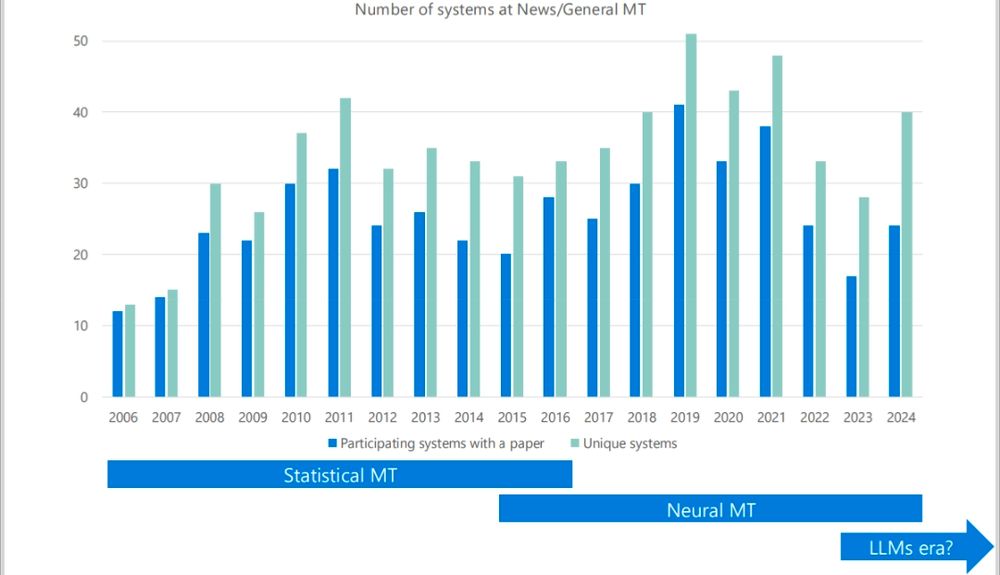

Participation kept growing this year: 36 unique teams competed to improve the performance of MT. Furthermore, we collected the outputs of 24 popular LLMs and online systems, reaching 50 evaluated systems in our annual benchmark.

23.08.2025 09:28 — 👍 3 🔁 1 💬 1 📌 0

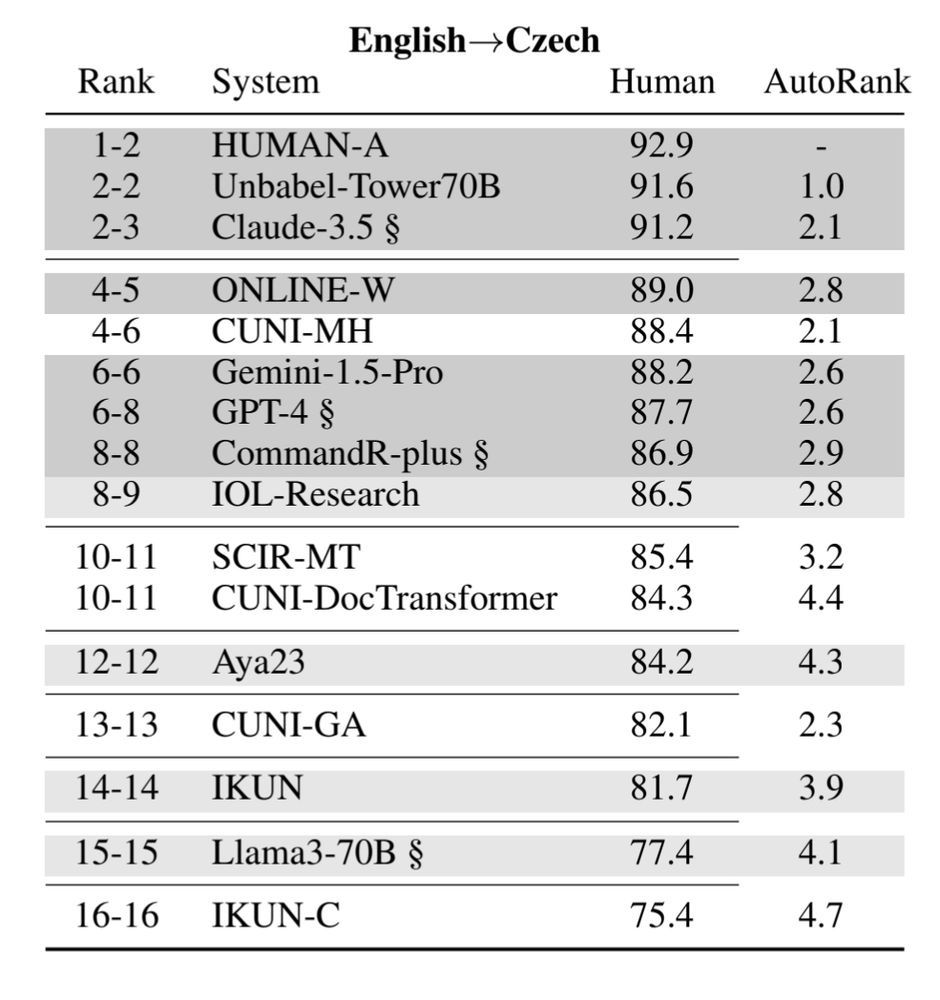

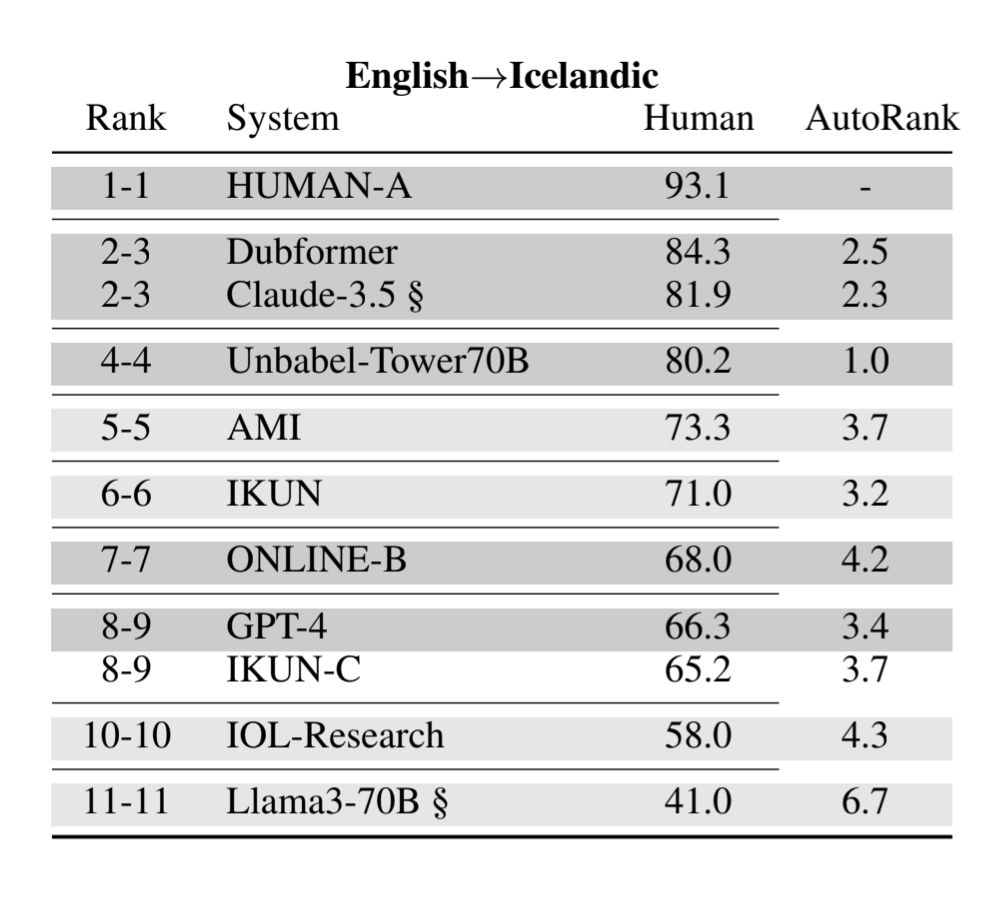

📊 Preliminary ranking of WMT 2025 General Machine Translation benchmark is here!

But don't draw conclusions just yet - automatic metrics are biased in favor of techniques such as using a metric as a reward model or MBR. The official human ranking will be part of the General MT findings at WMT.

arxiv.org/abs/2508.14909

Hey, hey! 🎉 We’ve released the blind test set for this year’s WMT General MT and multilingual instruction tasks. Submit your systems to the special 20th anniversary edition of the conference and see how you compare to others!

The deadline is next week on 3rd July.

www2.statmt.org/wmt25/

Tired of messy non-replicable multilingual LLM evaluation? So were we.

In our new paper, we experimentally illustrate common evaluation issues and show how structured evaluation design, transparent reporting, and meta-evaluation can help us build stronger models.

☀️ Summer internship at Cohere!

Are you excited about multilingual evaluation, human judgment, or meta-eval? Come help us explore what a rigorous eval really looks like while questioning the status quo in LLM evaluation.

I’m looking for an intern (EU timezone preferred). Interested? Ping me!

It’s here! Our new model’s technical report is out. I'm especially proud of the work we did on its multilingual capabilities - this was a massive, collective effort!

27.03.2025 16:42 — 👍 1 🔁 0 💬 0 📌 0

Big news from WMT! 🎉 We are expanding beyond MT and launching a new multilingual instruction shared task. Our goal is to foster truly multilingual LLM evaluation and best practices in automatic and human evaluation. Join us and build the winning multilingual system!

www2.statmt.org/wmt25/multil...

AI is evolving fast, and Aya Vision is proof of that. This open-weights model is designed to make LLMs more powerful across languages and modalities, especially vision! Can’t wait to see the real-world applications, perhaps at WMT this year 😇

04.03.2025 14:40 — 👍 2 🔁 0 💬 0 📌 0

Huge shoutout to colleagues at Google & Unbabel for extending our WMT24 test set to 55 languages in four domains. This is a game changer! 🚀

I really hope it puts the final nail in the coffin of FLORES or WMT14. The field is evolving, and legacy test sets can't show your progress.

arxiv.org/abs/2502.124...

* Revamped constrained track – No restrictions on training data except licensing; all open models under 20B parameters are allowed.

* More challenging sources; long-context translation; prompt preambles; and much more.

📌 All details are available at www2.statmt.org/wmt25/transl...

* New human-evaluated language pairs: EN–Arabic, EN–Estonian, EN–Korean, EN–Serbian, Czech–German, Bhojpuri–EN, Maasai–EN

* New multilingual subtask – Can you build a system that translates 30 languages?

* New modalities – Additional context from video and image (text-to-text remains the core).

Guess what? The jubilee 🎉 20th iteration of WMT General MT 🎉 is here, and we want you to participate - the barrier to entry for making an impact is so low!

This isn’t just any repeat. We’ve kept what worked, removed what was outdated, and introduced many exciting new twists! Among the key changes are:

Yeah, I haven't written a paper since it's just a different prompt. It's published in the GitHub repository of GEMBA.

09.02.2025 10:14 — 👍 0 🔁 0 💬 1 📌 0

That one is extremely large, but we haven't used it in the automatic ranking either. Unfortunately, I'm not aware of any API service for metrics.

08.02.2025 11:44 — 👍 0 🔁 0 💬 1 📌 0

🙏 A huge thank you to all organizers, partners, and participants for making this year's WMT General MT Shared Task a success! Stay tuned for WMT25 - many exciting changes are coming! 🎉

20.11.2024 10:16 — 👍 2 🔁 0 💬 0 📌 0

🏆 Highlights from top systems:

✅ IOL-Research: led in constrained/open, winning 10/11 in its category.

✅ Unbabel-Tower70B: Best participant, winning 8/11 pairs.

✅ Claude-3.5-Sonnet: Best overall with 9/11 wins.

✅ Shoutout to Dubformer (speech) & CUNI-MH (strong constrained)

📊 We introduced a new robust and efficient human evaluation protocol: Error Span Annotations (ESA).

📄 Test sets are now finally document-level!

🌍 We've added three new language pairs, including English-Spanish where translations are near-perfect.

For more details, read our findings paper.

Exciting times at this year's WMT24 General MT Shared Task:

🚀 Participant numbers increased by over 50%!

🏗️ Decoder-only architectures are leading the way.

🔊 We've introduced a new speech audio modality domain.

🌐 Online systems are losing ground to LLMs.