Built by:

👨🔬 Tommie Kerssies, Niccolò Cavagnero, Alexander Hermans, Narges Norouzi, Giuseppe Averta, Bastian Leibe, Gijs Dubbelman, Daan de Geus

📍 TU Eindhoven, Polytechnic of Turin, RWTH Aachen University

#ComputerVision #DeepLearning #ViT #ImageSegmentation #EoMT #CVPR2025

(6/6)

31.03.2025 20:35 — 👍 2 🔁 0 💬 0 📌 0

Your ViT is Secretly an Image Segmentation Model (CVPR 2025)

CVPR 2025: EoMT shows ViTs can segment efficiently and effectively without adapters or decoders.

Segmentation, simplified.

We’re excited to see what you build on top of it. 🛠️

🌐 Project: tue-mps.github.io/eomt

📝 Paper: arxiv.org/abs/2503.19108

💻 Code: github.com/tue-mps/eomt

🤗 Models: huggingface.co/tue-mps

(5/6)


Why does EoMT work?

Large ViTs pre-trained on rich visual data (like DINOv2 🦖) can learn the inductive biases needed for segmentation, with no extra components required.

✅ EoMT removes the clutter and lets the ViT do it all.

(4/6)


How fast can segmentation get while still maintaining accuracy?

✅ EoMT achieves a strong trade-off between accuracy (panoptic quality, PQ) 📊 and speed (FPS) ⚡ on COCO, thanks to its simple encoder-only design.

❌ No complex additional components.

❌ No bottlenecks.

🚀 Just performance.
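For reference, PQ is the standard panoptic segmentation metric: predicted and ground-truth segments match when their IoU exceeds 0.5, and PQ = (sum of matched IoUs) / (|TP| + ½|FP| + ½|FN|), which factors into segmentation quality (SQ) × recognition quality (RQ). Below is a minimal single-class sketch of that definition, assuming the matching has already been done. The function name `panoptic_quality` is hypothetical, and this is not the evaluation code behind the reported COCO numbers (dataset-level PQ averages this per-class value over categories).

```python
from typing import Sequence


def panoptic_quality(tp_ious: Sequence[float], num_fp: int, num_fn: int) -> float:
    """Single-class PQ from the IoUs of true-positive matches (hypothetical helper).

    tp_ious: IoU of each matched (prediction, ground truth) pair; each must be > 0.5.
    num_fp:  unmatched predicted segments.
    num_fn:  unmatched ground-truth segments.
    """
    # IoU > 0.5 is what makes a match a true positive (and guarantees uniqueness).
    assert all(iou > 0.5 for iou in tp_ious), "true positives need IoU > 0.5"
    tp = len(tp_ious)
    denom = tp + 0.5 * num_fp + 0.5 * num_fn
    if denom == 0:
        return 0.0  # no segments at all for this class
    return sum(tp_ious) / denom


if __name__ == "__main__":
    ious = [0.9, 0.8, 0.7]  # three matched segments
    pq = panoptic_quality(ious, num_fp=1, num_fn=1)
    sq = sum(ious) / len(ious)  # segmentation quality: mean IoU of matches
    rq = len(ious) / (len(ious) + 0.5 * 1 + 0.5 * 1)  # recognition quality: F1-like
    print(round(pq, 3), round(sq * rq, 3))
```

Running it prints `0.6 0.6`, showing that PQ equals SQ × RQ for this toy example.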

(3/6)


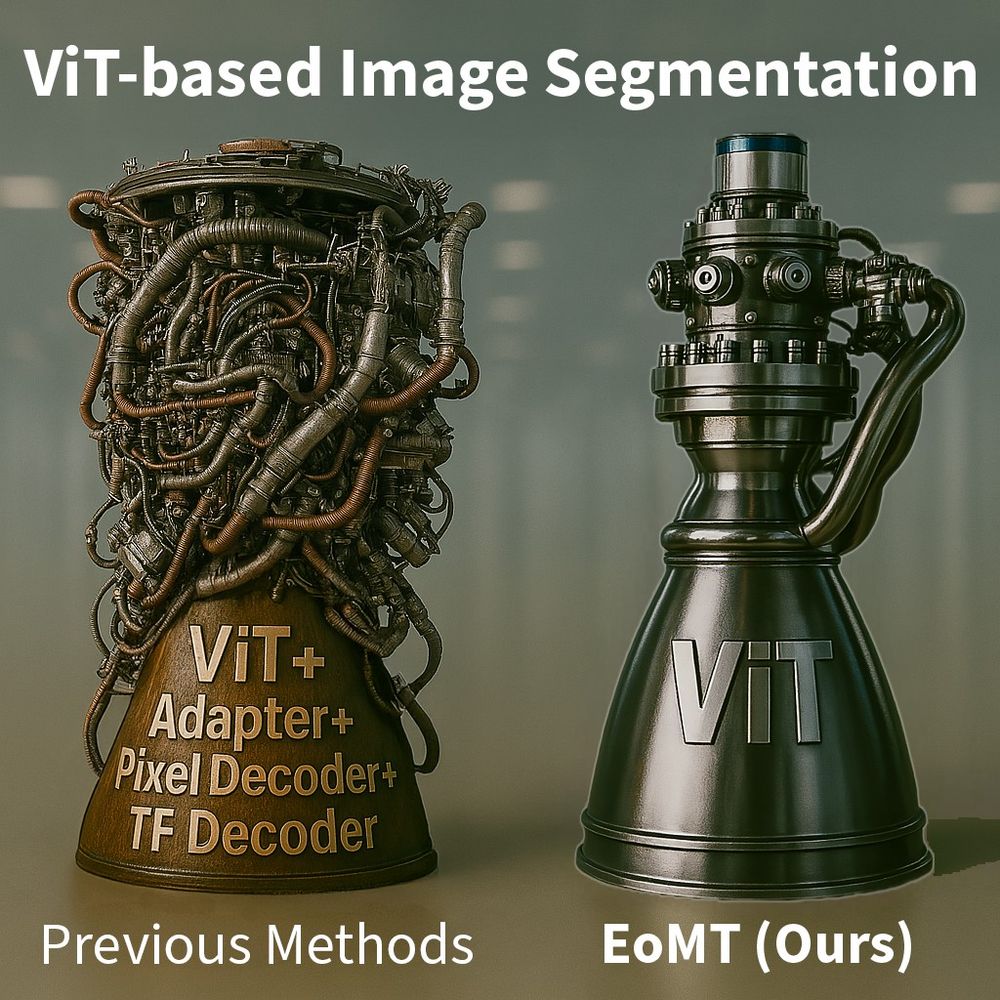

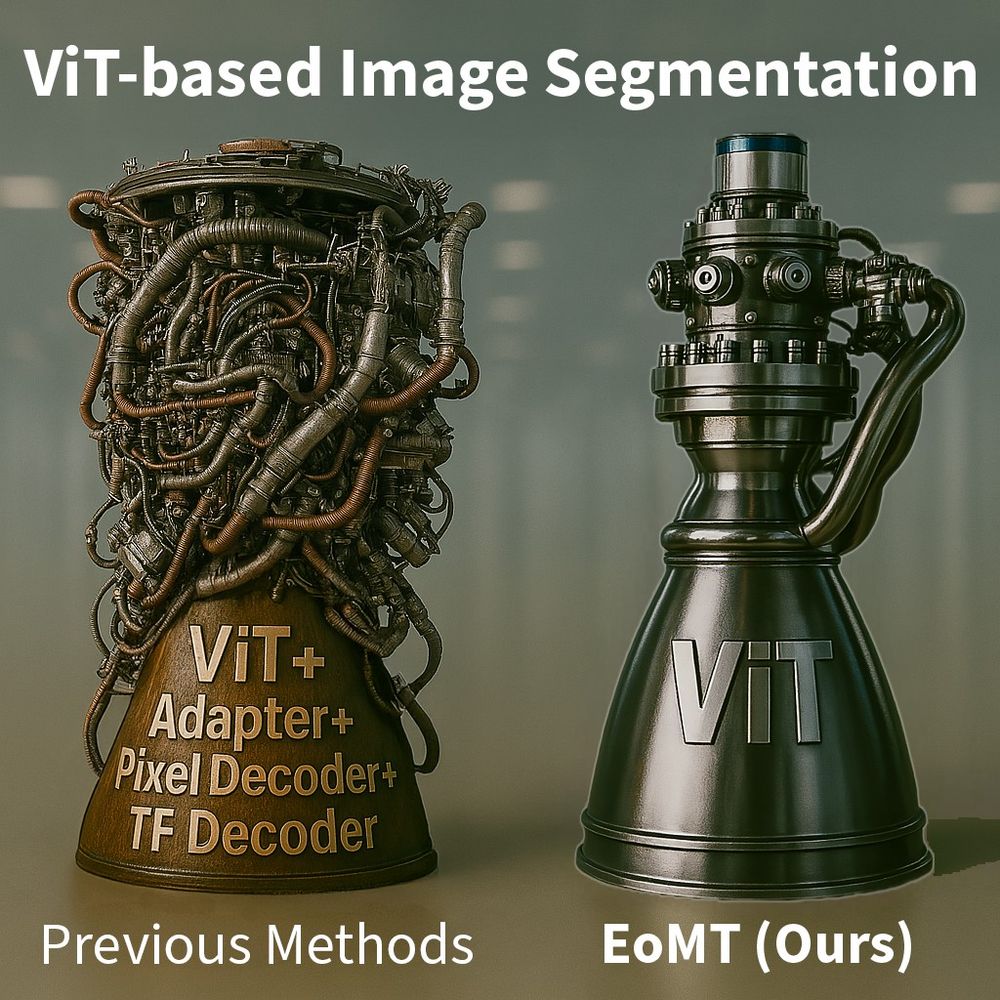

How do modern segmentation models work?

🚫 They chain together complex components:

ViT → Adapter → Pixel Decoder → Transformer Decoder…

✅ EoMT removes them all.

It keeps only the ViT and adds a few query tokens that guide it to predict masks. No decoder needed.
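A rough NumPy sketch of the encoder-only idea, assuming a tiny single-head ViT with random weights: learnable query tokens are concatenated with the patch tokens for the last few blocks, so queries and patches attend to each other inside the encoder itself. Afterwards, each query yields class logits and a mask (its projected embedding dotted with every patch token). All names here (`eomt_style_forward`, `num_query_blocks`, `w_cls`, `w_mask`) are hypothetical; this is not the official tue-mps/eomt code, which builds on a pre-trained ViT and includes details omitted here.

```python
import numpy as np

# Hypothetical illustration of an encoder-only mask transformer, NOT the official EoMT code.
rng = np.random.default_rng(0)


def layer_norm(x, eps=1e-6):
    mean = x.mean(-1, keepdims=True)
    var = x.var(-1, keepdims=True)
    return (x - mean) / np.sqrt(var + eps)


def self_attention(x, w_qkv, w_out):
    # x: (tokens, dim); single-head attention over ALL tokens (queries + patches).
    q, k, v = np.split(x @ w_qkv, 3, axis=-1)
    scores = q @ k.T / np.sqrt(q.shape[-1])
    scores -= scores.max(-1, keepdims=True)  # numerically stable softmax
    attn = np.exp(scores)
    attn /= attn.sum(-1, keepdims=True)
    return (attn @ v) @ w_out


def block(x, p):
    # Pre-norm transformer block; ReLU MLP for brevity (ViTs typically use GELU).
    x = x + self_attention(layer_norm(x), p["qkv"], p["out"])
    return x + np.maximum(layer_norm(x) @ p["fc1"], 0.0) @ p["fc2"]


def init_block(dim, hidden, scale=0.02):
    return {
        "qkv": rng.normal(0, scale, (dim, 3 * dim)),
        "out": rng.normal(0, scale, (dim, dim)),
        "fc1": rng.normal(0, scale, (dim, hidden)),
        "fc2": rng.normal(0, scale, (hidden, dim)),
    }


def eomt_style_forward(patches, queries, blocks, num_query_blocks, w_cls, w_mask, grid_hw):
    assert 1 <= num_query_blocks <= len(blocks)
    num_q = queries.shape[0]
    first_query_block = len(blocks) - num_query_blocks
    x = patches
    for i, p in enumerate(blocks):
        if i == first_query_block:
            # Append the learnable queries; no adapter or decoder, just more tokens.
            x = np.concatenate([queries, x], axis=0)
        x = block(x, p)
    x = layer_norm(x)
    q_out, p_out = x[:num_q], x[num_q:]
    class_logits = q_out @ w_cls                  # (num_q, num_classes + 1 "no object")
    mask_logits = (q_out @ w_mask) @ p_out.T      # (num_q, num_patches)
    return class_logits, mask_logits.reshape(num_q, *grid_hw)


dim, hidden, num_queries, num_classes, grid_hw = 32, 64, 5, 3, (4, 4)
patches = rng.normal(size=(grid_hw[0] * grid_hw[1], dim))  # stand-in patch embeddings
queries = rng.normal(0, 0.02, (num_queries, dim))
blocks = [init_block(dim, hidden) for _ in range(6)]
w_cls = rng.normal(0, 0.02, (dim, num_classes + 1))
w_mask = rng.normal(0, 0.02, (dim, dim))

class_logits, mask_logits = eomt_style_forward(
    patches, queries, blocks, num_query_blocks=2, w_cls=w_cls, w_mask=w_mask, grid_hw=grid_hw
)
print(class_logits.shape, mask_logits.shape)
```

This prints `(5, 4) (5, 4, 4)`: one class distribution and one low-resolution mask per query. A real model would upsample the masks to image resolution and train queries and weights end to end.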

(2/6)


Image segmentation doesn’t have to be rocket science. 🚀

Why build a rocket engine full of bolted-on subsystems when one elegant unit does the job? 💡

That’s what we did for segmentation.

✅ Meet the Encoder-only Mask Transformer (EoMT): tue-mps.github.io/eomt (CVPR 2025)

(1/6)
