Curl Descent: Non-Gradient Learning Dynamics with Sign-Diverse Plasticity

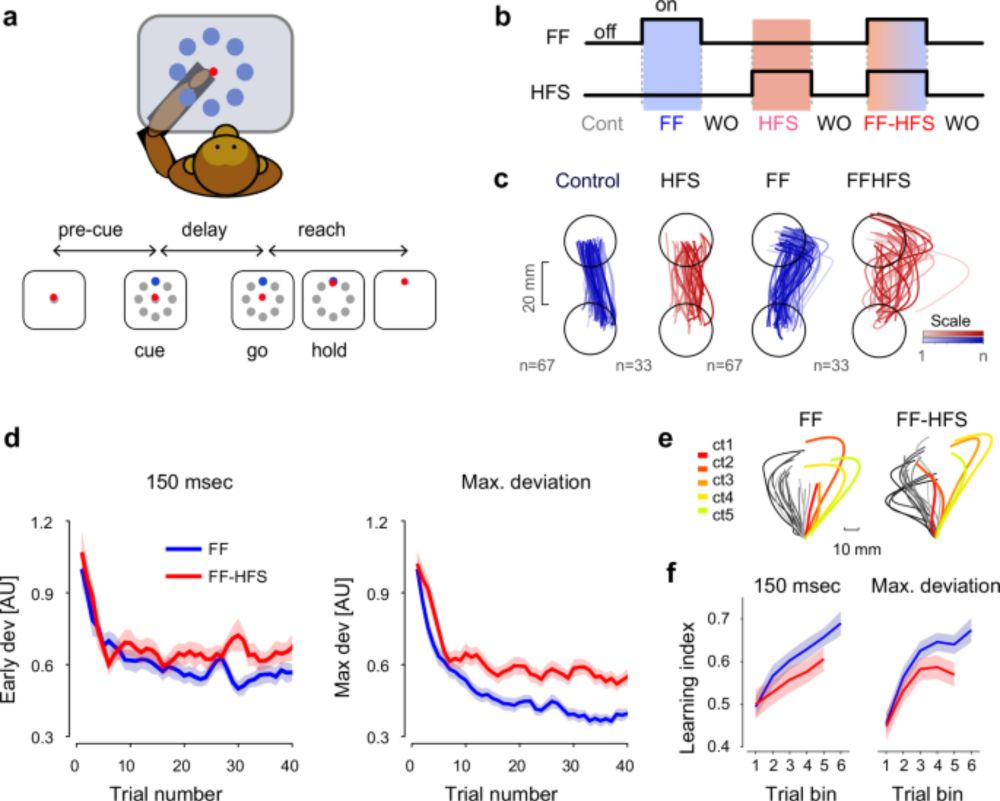

Gradient-based algorithms are a cornerstone of artificial neural network training, yet it remains unclear whether biological neural networks use similar gradient-based strategies during learning. Expe...

🚨New spotlight paper at NeurIPS 2025🚨

We show that in sign-diverse networks, inherent non-gradient “curl” terms arise, and can, depending on network architecture, destabilize gradient-descent solutions or paradoxically accelerate learning beyond pure gradient flow.

🧵⬇️

www.arxiv.org/abs/2510.02765
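A minimal toy sketch of what a non-gradient "curl" term can do. It assumes a hypothetical quadratic loss L(θ) = ½ θᵀHθ rather than the paper's sign-diverse network. Gradient flow is dθ/dt = -Hθ. Adding a rotational term -aJθ, with J antisymmetric, gives the non-gradient flow dθ/dt = -(H + aJ)θ. The Hessian `H`, the matrix `J`, and the curl strengths `a` are made-up values. Because H is positive definite here, the curl term cannot destabilize the fixed point, so this toy only illustrates the acceleration side of the claim.

```python
import numpy as np

# Hypothetical ill-conditioned Hessian of a toy quadratic loss L(theta) = 0.5 * theta^T H theta.
H = np.diag([1.0, 100.0])
# Unit antisymmetric matrix: the field theta -> -J @ theta is a pure rotation ("curl").
J = np.array([[0.0, 1.0], [-1.0, 0.0]])


def slowest_rate(curl_strength):
    """Slowest exponential decay rate of d(theta)/dt = -(H + curl_strength * J) @ theta."""
    eigvals = np.linalg.eigvals(H + curl_strength * J)
    # Convergence speed is limited by the eigenvalue with the smallest real part.
    return eigvals.real.min()


for a in [0.0, 20.0, 49.5]:
    print(f"curl strength {a:5.1f}: slowest decay rate {slowest_rate(a):.2f}")
```

With these assumed numbers, gradient flow (a = 0) is limited by the flat direction, which decays at rate 1. At a = 49.5, H + aJ has trace 101 and determinant 100 + a² = 50.5², so both eigenvalues equal 50.5 and the slow mode decays roughly 50 times faster.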

10.10.2025 17:53

More than 450 researchers have signed this declaration in the past 24 hours. While we continue to focus most of our efforts within Israel, there is, and always has been, significant resistance in the country. The academic community is one of many voices expressing this sentiment.

27.07.2025 20:36

In the past year and a half, we have been intensively protesting and fighting the Israeli government in an attempt to stop the war, secure the release of all hostages, and prevent the ongoing humanitarian crisis. Until now, our protests have primarily been focused internally.

27.07.2025 20:36

Jews for Food Aid for Gaza

Jewish people support food aid for families in Gaza and an immediate end to the Israeli government’s food aid blockade. #JewsForFoodAidForPeopleInGaza

If you're Jewish please sign this from Jews for Food Aid for People in Gaza:

foodaidforgaza.org

and anyone can donate to the organization they recommend, Gaza soup kitchen, which serves up to 6000 meals daily: givebutter.com/AReeXq

16.05.2025 03:54

Maher will be presenting this work at @cosynemeeting.bsky.social. If you are interested, come check it out.

Friday 2-083.

27.03.2025 20:15

Training Large Neural Networks With Low-Dimensional Error Feedback

Training deep neural networks typically relies on backpropagating high-dimensional error signals, a computationally intensive process with little evidence supporting its implementation in the brain. Ho...

(6/6) Our findings challenge some prevailing conceptions about gradient-based learning, opening new avenues for understanding efficient neural learning in both artificial systems and the brain.

Read our full preprint here: arxiv.org/abs/2502.20580 #Neuroscience #MachineLearning #DeepLearning

23.03.2025 09:23

(5/6) Applying our method to a simple ventral visual stream model replicates the results of Lindsey, @suryaganguli.bsky.social and @stphtphsn.bsky.social and shows that the bottleneck in the error signal—not the feedforward pass—shapes the receptive fields and representations of the lower layers.

23.03.2025 09:23

(4/6) The key insight: the dimensionality of the error signal doesn't need to scale with network size, only with task complexity. Low-dimensional feedback can effectively guide learning even in very large nonlinear networks. The trick is to align the feedback weights to the error (the gradient of the loss).
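A toy linear illustration of the dimensionality argument only, not the preprint's local learning rule. When the targets of a hypothetical regression task vary along only k output directions, the output error stays inside that k-dimensional subspace throughout training. Feeding back just k numbers per sample therefore gives the same learning trajectory as the full error. The sizes, learning rate, and the assumption that the basis `Q` is known in advance are all made up for the example; the post instead describes aligning the feedback weights to the error during learning.

```python
import numpy as np

rng = np.random.default_rng(0)
n, d_in, d_out, k = 200, 30, 50, 3  # hypothetical sizes: wide output, low task rank k

# Toy linear task whose targets only vary along k output directions.
W_true = rng.standard_normal((d_in, k)) @ rng.standard_normal((k, d_out))
X = rng.standard_normal((n, d_in))
Y = X @ W_true

# Orthonormal basis (d_out x k) of the task's output subspace; assumed known here.
Q = np.linalg.svd(W_true, full_matrices=False)[2][:k].T


def train(project, steps=500, lr=0.05):
    """Gradient descent on mean squared error, optionally with k-dimensional error feedback."""
    W = np.zeros((d_in, d_out))
    for _ in range(steps):
        E = Y - X @ W  # full d_out-dimensional output error
        if project:
            # Compress to k numbers per sample (E @ Q), then map back with Q.T.
            E = (E @ Q) @ Q.T
        W += lr * X.T @ E / n
    return np.mean((Y - X @ W) ** 2)


print(f"full error: {train(project=False):.2e}, k-dim error: {train(project=True):.2e}")
```

In this linear toy the projection is exact because every update left-multiplies the residual weights, so the error never leaves the k-dimensional subspace of `W_true`; the network width and `d_out` do not change how many feedback dimensions are needed.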

23.03.2025 09:23

(3/6) We developed a local learning rule leveraging low-dimensional error feedback, decoupling forward and backward passes. Surprisingly, performance matches traditional backpropagation across deep nonlinear networks—including convolutional nets and even transformers!

23.03.2025 09:23

(2/6) Backpropagation, the gold standard for training neural networks, relies on precise but high-dimensional error signals. Other proposed alternatives, like feedback alignment, do not challenge this assumption. Could simpler, low-dimensional signals achieve similar results?

23.03.2025 09:23

TUM Global Postdoc Fellowship - TUM

Recruiting postdocs! Please get in touch if you wanna discuss projects on biologically plausible learning in RNNs through TUM's global postdoctoral fellowship. I'll be at Cosyne this year if you wanna talk more. www.tum.de/ueber-die-tu...

07.03.2025 14:47

Re-posting is appreciated: We have a fully funded PhD position in CMC lab @cmc-lab.bsky.social (at @tudresden_de). You can use forms.gle/qiAv5NZ871kv... to send your application and find more information. Deadline is April 30. Find more about CMC lab: cmclab.org and email me if you have questions.

20.02.2025 14:50

Just realized I've mentioned the wrong Jan above. Sorry @japhba.bsky.social!

17.02.2025 21:50

Neural mechanisms of flexible perceptual inference

What seems obvious in one context can take on an entirely different meaning if that context shifts. While context-dependent inference has been widely studied, a fundamental question remains: how does ...

Just as the meaning of words depends on context, the brain must infer context and meaning simultaneously to adapt in real-time. We believe these insights uncover core principles of #CognitiveFlexibility—check it out! www.biorxiv.org/content/10.1...

Congrats to John and Jan on their outstanding work!

17.02.2025 13:43

Remarkably, DeepRL networks converged on near-optimal strategies and exhibited the same nontrivial Bayesian-like belief-updating dynamics—despite never being trained on these computations directly. This suggests that inference mechanisms can emerge naturally through reinforcement learning.

17.02.2025 13:43

By deriving a Bayes-optimal policy, we show that rapid context shifts emerge from sequential belief-state updates—driven by flexible internal models. The resulting dynamics resemble an integrator with nontrivial context-dependent, adaptive bounds.
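For intuition about an integrator with bounds, here is a minimal sketch of the standard two-state change-point belief update. It is an assumed stand-in, not the preprint's task or policy. The belief is the log posterior odds between two hidden contexts. Each observation adds a log-likelihood ratio, and between observations the context may switch with a hypothetical hazard rate h. The prior term saturates at ±log((1-h)/h), so integration is bounded and the bound moves with the assumed volatility. The function names `propagate` and `integrate` are illustrative.

```python
import numpy as np


def propagate(log_odds, hazard):
    """Prior log odds for the next step when the hidden context may switch
    with probability `hazard` between observations (two-state model)."""
    stay, switch = np.log1p(-hazard), np.log(hazard)
    # log((1-h) e^L + h) - log((1-h) + h e^L), computed stably with logaddexp.
    return np.logaddexp(stay + log_odds, switch) - np.logaddexp(stay, switch + log_odds)


def integrate(llrs, hazard):
    """Sequentially combine per-observation log-likelihood ratios into log posterior odds."""
    log_odds, trace = 0.0, []
    for llr in llrs:
        log_odds = llr + propagate(log_odds, hazard)
        trace.append(log_odds)
    return np.array(trace)


evidence = np.ones(30)  # hypothetical: constant evidence favoring context 1
for h in [1e-9, 0.01, 0.05]:
    ceiling = 1.0 + np.log((1 - h) / h)
    print(f"h={h:g}: final log odds {integrate(evidence, h)[-1]:.2f}, ceiling {ceiling:.2f}")
```

With a tiny hazard rate the update behaves almost like a perfect integrator early on, while larger hazard rates cap the log odds sooner. In this toy the bound depends only on the assumed volatility h.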

17.02.2025 13:43

We tackled this challenge with behavioral experiments in mice, Bayesian theory, and #DeepRL. Using a novel change-detection task, we show how mice and networks adapt on the first trial after a context change by inferring both context and meaning, without trial and error.

17.02.2025 13:43

New preprint: Neural Mechanisms of Flexible Perceptual Inference!

How does the brain simultaneously infer both context and meaning from the same stimuli, enabling rapid, flexible adaptation in dynamic environments? www.biorxiv.org/content/10.1...

Led by John Scharcz and @janbauer.bsky.social

17.02.2025 13:43