6/6: Thanks for the supervision

@alestolfo.bsky.social @mrinmaya.bsky.social

Check out our paper: arxiv.org/abs/2507.12379

@yuchengsun.bsky.social

Currently in ETH Zurich. Working on mechanistic interpretability.

6/6: Thanks for the supervision

@alestolfo.bsky.social @mrinmaya.bsky.social

Check out our paper: arxiv.org/abs/2507.12379

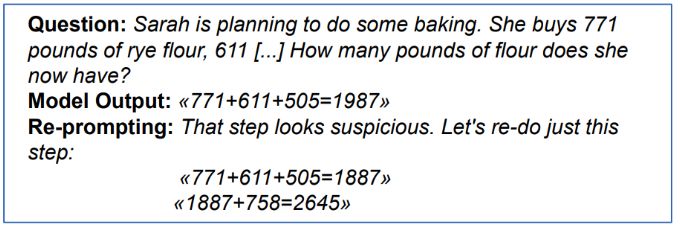

5/6: Finally, we use this information as a weak oracle to trigger self-correction. Re-prompting the LM based on the probe’s prediction leads to a correction of up to 11% of the mistakes made by the model.

18.07.2025 17:25 — 👍 1 🔁 0 💬 0 📌 0

4/6: Can this be useful in a more realistic setting? We apply the probes trained on “pure arithmetic” queries to structured CoT traces obtained on GSM8K. The probes transfer well in a robust and consistent manner.

18.07.2025 17:25 — 👍 0 🔁 0 💬 0 📌 0

3/6: Given the previous results, it should be possible to predict the correctness of the model output. We designed lightweight probes that achieve high accuracy.

18.07.2025 17:24 — 👍 0 🔁 0 💬 0 📌 0

2/6: We feed an LM arithmetic queries and we train lightweight probes (e.g., circular) on its residual stream. Interestingly, they can accurately predict the ground-truth result, regardless of the LM's correctness.

18.07.2025 17:23 — 👍 0 🔁 0 💬 0 📌 0

1/6: Can we use an LLM’s hidden activations to predict and prevent wrong predictions? When it comes to arithmetic, yes!

I’m presenting new work w/

@alestolfo.bsky.social

“Probing for Arithmetic Errors in LMs” @ #ICML2025 Act Interp WS

🧵 below

Do you plan to work on AI safety/ alignment in the future?

11.01.2025 14:07 — 👍 2 🔁 0 💬 1 📌 0