StarSEM 2025 ARR Commitment

Welcome to the OpenReview homepage for StarSEM 2025 ARR Commitment

*SEM 2025 call for ARR commit papers! *SEM 2025 will be co-located with EMNLP and accepts commits of ARR papers with complete sets of ARR reviews. Commit your paper here by Aug 22: openreview.net/group?id=acl...

#NLP #NLProc

31.07.2025 07:38 — 👍 5 🔁 5 💬 0 📌 0

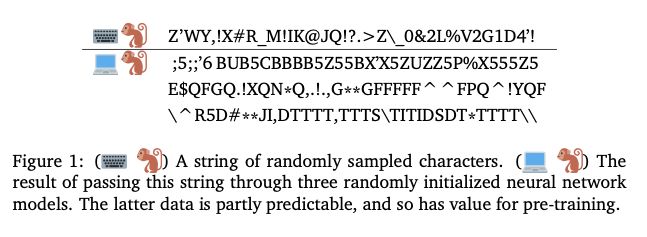

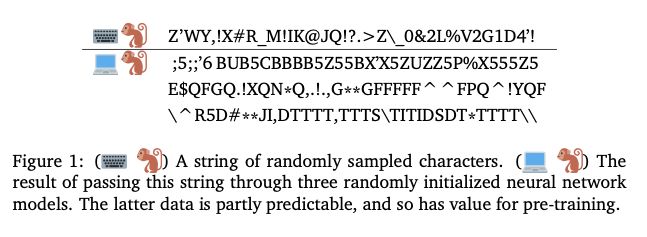

A table showing one string of random characters next to an emoji of a monkey next to a keyboard (representing a typewriter). Below it, three strings, also of random characters, but with more structure. Some characters and n-grams repeat. Next to these three strings is an emoji of a monkey next to a laptop computer. The caption reads: (⌨️🐒) A string of randomly sampled characters. (💻🐒) The result of passing this string through three randomly initialized neural network models. The latter data is partly predictable, and so has value for pre-training.

New pre-print!

**Universal pre-training by iterated random computation.**

⌨️🐒 A monkey behind a typewriter will produce the collected works of Shakespeare eventually.

💻🐒 But what if we put a monkey behind a computer?

⌨️🐒 needs to be lucky enough to type […]

[Original post on sigmoid.social]

26.06.2025 10:41 — 👍 2 🔁 0 💬 2 📌 0

Book cover titled “The AI Con” with subtitle “How to Fight Big Tech’s Hype and Create the Future We Want” by Emily M. Bender and Alex Hanna.

Excited to start reading this book by @emilymbender and @alex! Immediately went to a nearby Readings after work after finding out that Emily is coming to Melbourne in July for a book talk. Can’t miss it!

#AI #LLM #Melbourne

04.06.2025 13:11 — 👍 1 🔁 1 💬 0 📌 0

Original post on sigmoid.social

My university’s leadership just cited “academic freedom” and “national interest” to justify ties with weapon manufacturers. I don’t think I can accept that the two are more important than human lives. Leadership also mentioned how such research collabs must uphold ethics. It’s clear that my […]

03.06.2025 05:07 — 👍 0 🔁 0 💬 0 📌 0

Travel Subsidies

Official website of the 14th Joint Conference on Lexical and Computational Semantics

*SEM 2025 offers travel subsidies up to USD 750 for lead student authors with an accepted paper. Priority will be given to applicants from developing countries who would not have adequate financial support to attend in person. Please see starsem2025.github.io/subsidies for details.

#NLP #NLProc

28.05.2025 03:40 — 👍 9 🔁 3 💬 0 📌 1

Call for Papers

Official website of the 14th Joint Conference on Lexical and Computational Semantics

*SEM 2025: Direct submissions deadline is now EXTENDED to June 13, 2025! *SEM will be co-located with EMNLP in Suzhou, China. It will be a hybrid event, virtual attendance possible (more info on this will be posted later). Full CFP: starsem2025.github.io/cfp

#NLP #NLProc

28.05.2025 03:38 — 👍 7 🔁 3 💬 0 📌 2

Call for Papers

Official website of the 14th Joint Conference on Lexical and Computational Semantics

*SEM 2025 final call for DIRECT SUBMISSIONS! starsem2025.github.io/cfp Direct submissions are due next week on May 30, 2025. We also accept papers via ARR commitment, due on August 22, 2025. Give your strong-but-overlooked ACL paper the second chance it deserves! 😉

#NLProc #NLP

21.05.2025 05:05 — 👍 2 🔁 6 💬 0 📌 1

a blue background with the words follow me written in white

Alt: a blue background with the words follow me written in white

Dear NLP folks, if you have an account in the Fediverse (e.g., Mastodon), you can now follow us by this handle: @starsem.bsky.social@bsky.brid.gy. To do this seamlessly, simply go to our followers page (fed.brid.gy/bsky/starsem...), put your Fediverse address and click Follow!

#NLProc #CompLing

01.05.2025 07:10 — 👍 0 🔁 1 💬 0 📌 0

Original post on sigmoid.social

[Australian universities’ ties to weapon manufacturers]

Finally got around to watch this debate on whether it is ethical for universities to work with weapon companies: https://www.mapw.org.au/watch-bill-williams-memorial-debate/ It wasn’t really a debate as UoM leadership failed to attend or […]

28.04.2025 04:52 — 👍 0 🔁 0 💬 0 📌 0

Original post on tldr.nettime.org

"LLM did something bad, then I asked it to clarify/explain itself" is not critical analysis but just an illustration of magic thinking.

Those systems generate tokens. That is all. They don't "know" or "understand" or can "explain" anything. There is no cognitive system at work that could […]

02.04.2025 15:51 — 👍 18 🔁 118 💬 5 📌 0

Original post on sigmoid.social

🚨NEW PREPRINT ALERT🚨

Many training methods and evaluation metrics embrace human label variation, but which is best in what settings? In our new preprint (http://arxiv.org/abs/2502.01891), we propose new soft metrics and study 14 training methods across 6 datasets, 2 language models, and 6 […]

02.04.2025 11:59 — 👍 0 🔁 0 💬 0 📌 0