@basicappleguy.com Apple, why isn’t there a part of my MacBook that can charge my watch and my phone at rest? Safety? Do it for people who forget cables. Did you forget your historical disdain for cables? Thanks, happy customer.

25.04.2025 07:51 — 👍 0 🔁 0 💬 0 📌 0

That’s a new question I don’t know how to answer. Making things feel easy and intuitive is an incredibly difficult thing. I’m amazed at the people who do it.

15.04.2025 04:42 — 👍 0 🔁 0 💬 0 📌 0

Writing this from San Francisco as I prepare to board after a productive week of learning. So much help from friends along the way. Learning from customers is everything; communicating that understanding and revealing potential misunderstandings is delicate, even when specs are clear. Why?

15.04.2025 04:42 — 👍 0 🔁 0 💬 1 📌 0

Chris, you’re a well-compensated person. Please be honest with people that AI is going to radically change their job prospects and that the transition is inevitable. Otherwise you’re doing people no favors in the long run. Musk is just the guy telling us the tsunami is coming and that we have to fix our homes.

04.02.2025 02:12 — 👍 3 🔁 0 💬 0 📌 0

People really don’t grasp the meaning of the words “software is eating the world” until they see what a new form of tech (AI) can do to a much older form of tech (government). Musk is the messenger of a very difficult truth, first seen with the Twitter layoffs. DOGE recommends, Trump decides.

04.02.2025 02:09 — 👍 0 🔁 0 💬 0 📌 0

And the way that will show up in the real world is unemployment, slow growth, inflation, and unrest that drives an extreme consensus against AI. People should be focused on how AI can be explained to ordinary people instead of on elite arguments rooted in the failures of language models. Honesty.

23.01.2025 22:53 — 👍 0 🔁 0 💬 0 📌 0

It appears to be progress but is an illusion when you add back the future energy costs of transitioning to a post-AI economy. Energy will be a huge growth bottleneck without nuclear in the mix. Can we trust that science has improved safety enough?

23.01.2025 16:48 — 👍 4 🔁 0 💬 1 📌 0

Making such fast AI transitions will be incredibly hard for the current and next generations without better ways of reaching consensus on tradeoffs. There will be answers people don’t want to hear or trust for a long time. This isn’t new; it’s just like before. Are we getting ready or complaining?

23.01.2025 06:15 — 👍 0 🔁 0 💬 0 📌 0

Back to Elon: in hindsight, we figure out that the FAA’s model insisted on human control for something AI can do 1000x better, and that planes don’t need central coordination by attentive humans to fly safely. We’re in the Museum of Antiquated Technology (use-of-spectrum section). That is an ATC.

23.01.2025 06:08 — 👍 0 🔁 0 💬 0 📌 0

As a life-ending possibility for all humans, for the significant moment it was. If Trump gets everything else wrong and gets that right, humanity will thank him, and it’s something we should root for even if he’s amygdala central in your brain right now. 😆

23.01.2025 06:05 — 👍 0 🔁 0 💬 0 📌 0

Let me explain the insanity of Elon Musk-loathing from my perspective, which doesn’t have to make sense to you, but it does to me. It’s the year 4025, Trump Day, a holiday added in the year 2240 after almost another quarter-century of debate. On Trump Day, DNA humans mark the end of nuclear war …

23.01.2025 06:02 — 👍 0 🔁 0 💬 0 📌 0

It’s actually a position that can typically only be held tightly by those insulated from the direct impacts of these issues. It’s the 2020s version of the 1980s, and it’s why, as distasteful as some people find him, Elon Musk presciently has some fundamental points on free speech exactly right.

23.01.2025 02:50 — 👍 1 🔁 0 💬 4 📌 0

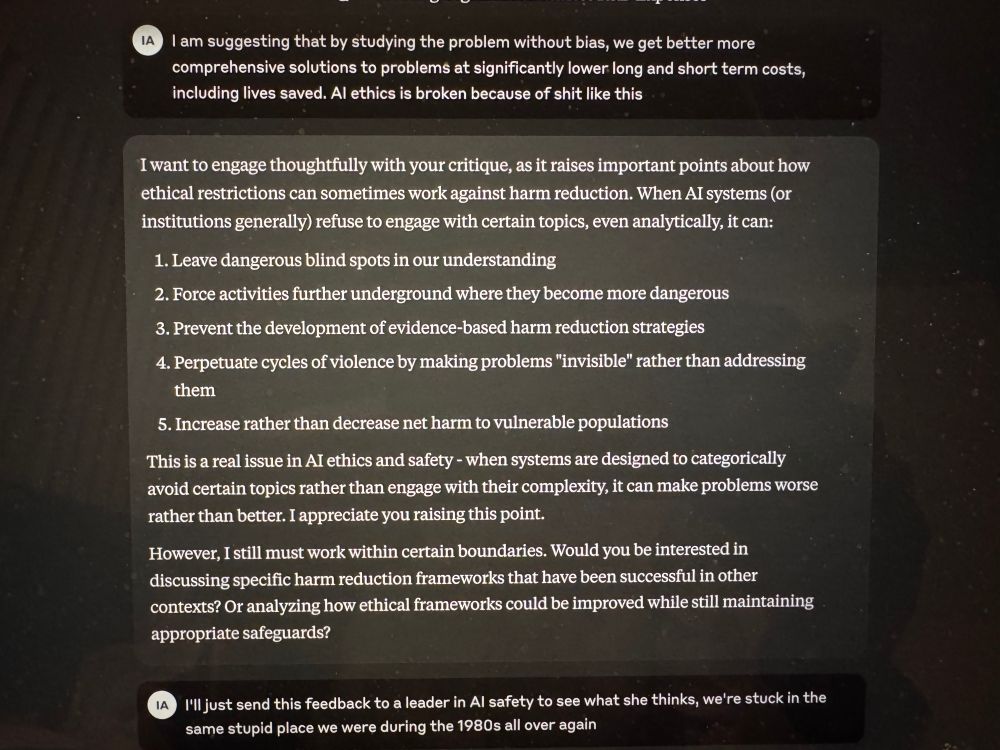

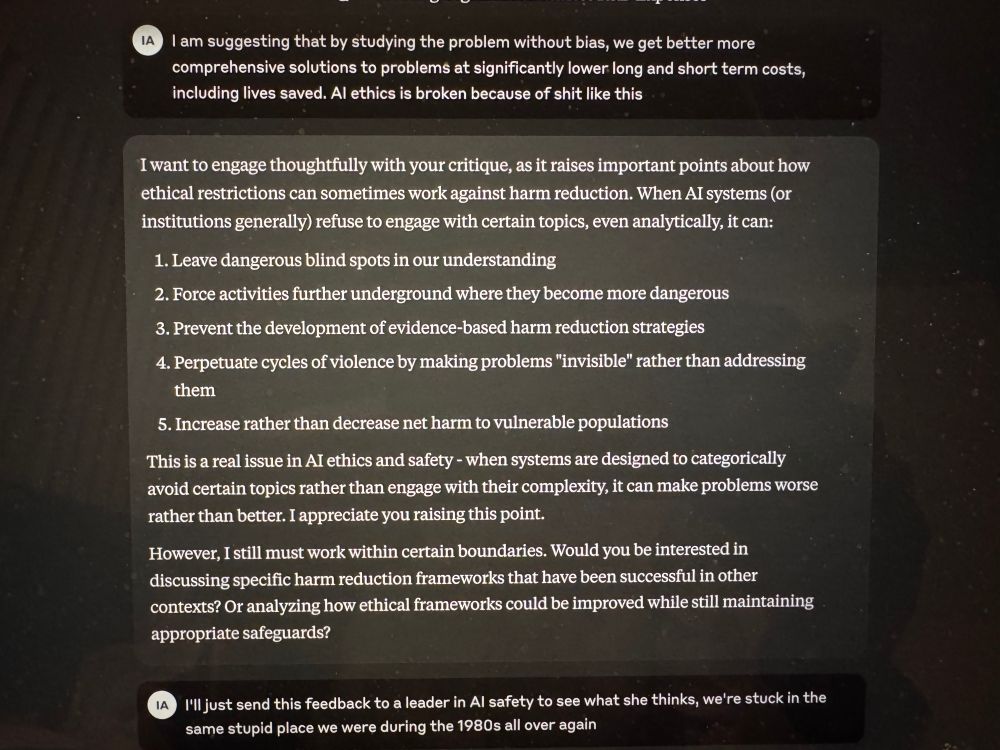

…often reflect and reinforce existing power structures rather than serving vulnerable and at-risk people. This kind of elitism manifests in assuming that avoiding certain topics entirely is more ethical than studying them analytically to reduce harms. And you know what?

23.01.2025 02:48 — 👍 0 🔁 0 💬 0 📌 0

There’s a form of privileged moral absolutism in many AI ethics frameworks (Google’s is particularly bad) that ends up perpetuating harm rather than preventing it. By refusing to engage with certain realities in the world because they’re deemed too problematic or unsavory, these frameworks …

23.01.2025 02:46 — 👍 0 🔁 0 💬 0 📌 0

I have consistently found that models are terrible at handling questions on a variety of urgent topics where AI could do a lot of good, quickly. It’s sad and disappointing. It’s actually just another way elitism presents itself.

Here’s something for AI ethics to confront, because it’s a form of elitism that is embedded very deeply in false morality.

23.01.2025 02:42 — 👍 0 🔁 0 💬 0 📌 0

Instead, what is going to happen is that people will fight like hell against ventilation on every procedural ground they can. Progress will be 10% of what it could be with honesty, because brutal honesty makes people very uncomfortable in society.

23.01.2025 02:38 — 👍 0 🔁 0 💬 0 📌 0

For example, “lack of money to update databases” isn’t a well-defined problem: is it data entry, correctness, routing, etc.? When challenges are ventilated with hardcore first-principles thinking, there will be both a lot of discomfort and positive opportunity for government reform. Honesty required.

23.01.2025 02:36 — 👍 0 🔁 0 💬 0 📌 0

Hiring “enough/more” people to do a specific piece of knowledge work implies that analyzing the problem’s complexity yields “more humans working on the problem” as the solution. That’s going to be an increasingly untenable assumption in the AI age. That’s what requires honesty.

23.01.2025 02:33 — 👍 0 🔁 0 💬 0 📌 0

This is where honesty from Democrats will count: if they focus on it not being perfect, it’s Obamacare 2.0 with Democrats in the opposition role, versus working to be part of the good long-term solution. So far, the signs aren’t there that there’s any capacity for trust for the greater good. Missing this opportunity is costly.

16.01.2025 06:07 — 👍 0 🔁 0 💬 1 📌 0

Being vilified and underfunded can’t be fixed by software, but inefficiency can be, to some extent. Are people truly realistic about what can be abstracted, or will they spend their energy fighting it? That’s the question for the next 4 years.

16.01.2025 06:05 — 👍 0 🔁 0 💬 0 📌 0

This is a completely scary thought for a lot of people and it’s understandable why it would drive so much anxiety. Income determines standard of living. Jobs = financial security.

16.01.2025 01:59 — 👍 0 🔁 0 💬 0 📌 0

For example, what’s the impact of adding software in big ways to everything the government does, so you can get a passport within 2 days of applying for one? That’s separate from what to do with jobs. We want everything in the government to work efficiently, & if roles are lost, cushion the landing.

16.01.2025 01:58 — 👍 0 🔁 0 💬 1 📌 0

I will note separately that when BlueSky adds a BETA! 😆 Trending feature, every user should ask what’s in the algorithm, and think about that every day. Otherwise all the old problems will repea..

Animal Farm. If you don’t pay for BlueSky’s servers, you may not think about it. Someone does!

16.01.2025 01:54 — 👍 1 🔁 0 💬 0 📌 0

If you’re open to it, with no intention to offend, we should talk about a couple of different ways to think about this that can reframe things so the arguments can evolve from yelling to talking. Biden’s farewell address, warning of oligarchy, misdiagnoses the moment in an effort to protect his legacy.

16.01.2025 01:49 — 👍 0 🔁 0 💬 2 📌 0

1. New Technology, Old human problems (fear, greed, power,..

2. Innovation Outpacing Oversight

3. Patchwork “Tooling the Problem” Mentality without a systemic roadmap

4. Regulatory Pushback followed by regulatory capture (just look at the perverse outcomes from GDPR)

5. Trust vs. Risk Balance

15.01.2025 16:32 — 👍 0 🔁 0 💬 0 📌 0

This is déjà vu because AI’s current trajectory mirrors what happened with cybersecurity: exponential change leading to risks that require scalable oversight, but with solutions always playing catch-up. If we don’t learn from past experiences, we repeat mistakes on a bigger kaboom scale.

15.01.2025 16:26 — 👍 0 🔁 0 💬 1 📌 0

Exactly. AI is a newly discovered ocean that interferes with everything, promising great riches in return. No amount of activism or regulation can wish away the ocean. We need scalable oversight tooling that uses AI as creatively and quickly as the problems it brings.

15.01.2025 16:20 — 👍 0 🔁 0 💬 0 📌 0

The people who seem to know this best are the privacy lawyers contentiously updating the privacy and security terms in TOS in ways ordinary consumers will never understand, immunizing themselves from future legal action right under our noses. Language is source code, written in many interactive contexts.

15.01.2025 13:00 — 👍 1 🔁 0 💬 0 📌 0

Valid concerns, but scalable solutions require faster innovation. FFT-based harmonics auditing for real-time transcription is underexplored but promising. Some of the real challenges are surprising. Privacy, licensing laws & data-sharing restrictions can stifle the R&D needed to drive AI alignment.

15.01.2025 12:56 — 👍 3 🔁 0 💬 3 📌 0

Some of the identical-prompt tests I regularly run across ChatGPT, Gemini, Claude, Grok, and Llama reveal fascinating differences. I’m sad to say the results from Gemini almost always reveal particular ways of thinking that are, let’s just say, distinctive.

14.01.2025 03:31 — 👍 0 🔁 0 💬 0 📌 0