There are three chapters in Early Release on the O’Reilly Platform (Intro, Memory, and Tools): learning.oreilly.com/library/view...

24.11.2025 16:27 — 👍 3 🔁 1 💬 0 📌 0

@jayalammar.bsky.social

Writer http://jalammar.github.io. O'Reilly Author http://LLM-book.com. LLM Builder Cohere.com.

And here you have it! The cover of our “An Illustrated Guide to AI Agents” book!

Why a dolphin? The process of choosing cover animals is a closely guarded secret held deep within the legendary halls of O'Reilly. @jayalammar.bsky.social and I do not get to choose it, but we love it!

Inside NeurIPS 2025: The Year’s AI Research, Mapped

New blog post!

NeurIPS 2025 papers are out, and it’s a lot to take in. This visualization lets you explore the entire research landscape interactively, with clusters and @cohere.com LLM-generated explanations that make it easier to grasp.

Excited to share that @jayalammar.bsky.social and I are writing the book “An Illustrated Guide to AI Agents” with @oreilly.bsky.social 🥳

Our new book will contain chapters on the fundamentals of agents (memory, tools, and planning), alongside more advanced concepts like RL and reasoning LLMs.

We're also eager to hear what you'd like to see in such a book. What concepts do you think are central to understanding, building, and properly using AI agents?

Early release coming soon!

This will be our most visually rich project yet. We will drill into the main concepts for understanding and building AI agents -- likely the most promising area of AI today. It will cover tools, memory, code generation, reasoning, multimodality, RLVR/GRPO, and much more.

13.10.2025 15:13 — 👍 1 🔁 0 💬 1 📌 0

The Illustrated Guide to AI Agents

New book announcement!

Thrilled to share that @maartengr.bsky.social and I are writing a new book titled “An Illustrated Guide to AI Agents”, to be published by @oreilly.bsky.social.

The next generation of open LLMs should be inclusive, compliant, and multilingual by design. That’s why we (@icepfl.bsky.social, @ethz.ch, @cscsch.bsky.social) built Apertus.

03.09.2025 09:26 — 👍 24 🔁 8 💬 2 📌 2

The Illustrated GPT-OSS

New post! A visual tour of the architecture, message formatting, and reasoning of the latest GPT.

newsletter.languagemodels.co/p/the-illust...

The legendary John Carmack at #upperbound:

- Current AI focus is RL (with Richard Sutton) solving Atari games

- Thinking in line with the Alberta Plan.

- It was a misstep to start working too low-level (e.g., at the CUDA level). I kept stepping up the stack until now, in PyTorch

I'm really excited for this year's PyData London conference - there are some awesome talks on the schedule and I'm excited to hear the keynote speakers @jayalammar.bsky.social, Tony Mears, & Leanne Fitzpatrick

#pydata #datascience

Advertisement for PyData London 2025 conference. Headline: Meet your keynote speakers - Jay Alammar - Tony Mears - Leanne Fitzpatrick Book your tickets https://pydata.org/london2025

Unleash your inner data aficionado at PyData London 2025, 6-8 June at Convene Sancroft, St. Paul’s!

We have 3 top flight keynotes lined up for you this year from @jayalammar.bsky.social, Leanne Kim Fitzpatrick and Tony Mears.

Just 17 days left. Book your tickets now!

pydata.org/london2025

I'm excited to share the tech report for our @cohere.com @cohereforai.bsky.social Command A and Command R7B models. We highlight our novel approach to model training including self-refinement algorithms and model merging techniques at scale. Read more below! ⬇️

27.03.2025 15:01 — 👍 11 🔁 4 💬 1 📌 3

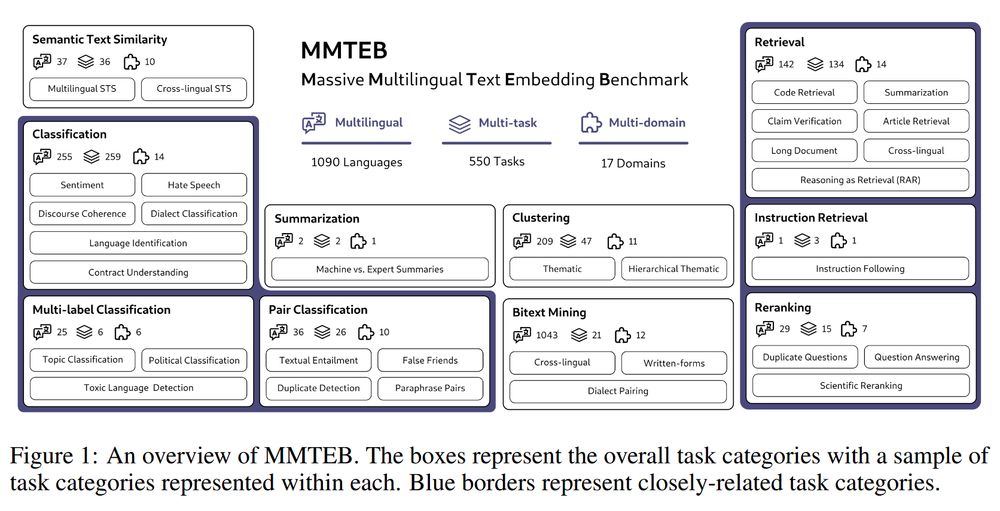

We've just released MMTEB, our multilingual upgrade to the MTEB Embedding Benchmark!

It's a huge collaboration between 56 universities, labs, and organizations, resulting in a massive benchmark of 1000+ languages, 500+ tasks, and a dozen+ domains.

Details in 🧵

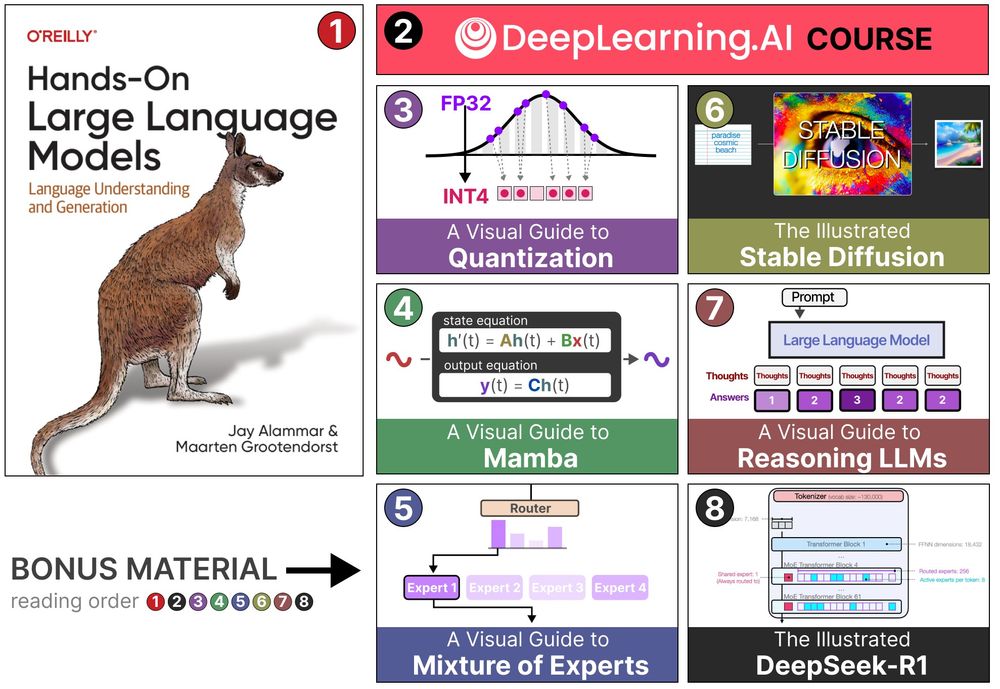

Did you know we continue to develop new content for the "Hands-On Large Language Models" book?

There's now even a free course available with @deeplearningai.bsky.social!

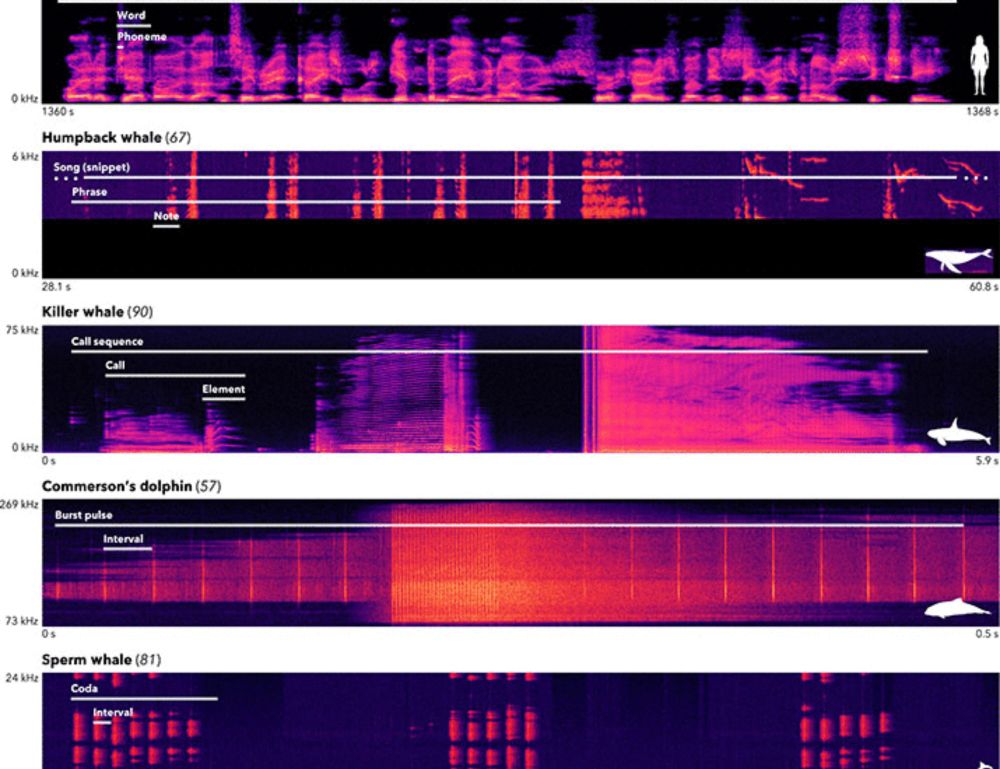

Do whales optimize their vocalizations for efficiency, just like human language? 🐋🎶 My latest study in Science Advances (@science.org) suggests they do, following linguistic laws seen in human speech. 🧵 www.science.org/doi/10.1126/...

We uncovered the same statistical structure that is a hallmark of human language in whale song, published today in Science. @inbalarnon.bsky.social @simonkirby.bsky.social @jennyallen13.bsky.social @clairenea.bsky.social @emma-carroll.bsky.social

www.science.org/doi/10.1126/...

One of my grand interpretability goals is to improve human scientific understanding by analyzing scientific discovery models, but this is the most convincing case yet that we CAN learn from model interpretation: Chess grandmasters learned new play concepts from AlphaZero's internal representations.

27.01.2025 21:43 — 👍 109 🔁 22 💬 2 📌 1

The Illustrated DeepSeek-R1

Spent the weekend reading the paper and sorting through the intuitions. Here's a visual guide and the main intuitions to understand the model and the process that created it.

newsletter.languagemodels.co/p/the-illust...

Alphaxiv is an awesome way to discuss ML papers -- often with the authors themselves. Here's an intro and demo by @rajpalleti.bsky.social we shot at #Neurips2024

www.youtube.com/watch?v=-Kwl...

The newest extremely strong embedding model based on ModernBERT-base is out: `cde-small-v2`. Both faster and stronger than its predecessor, this one tops the MTEB leaderboard for its tiny size!

Details in 🧵

It contains one notebook per chapter, with all the code examples in the book.

Check it out here: github.com/HandsOnLLM/H...

Floored that the repo for Hands-On Large Language Models is now at 3.6k GitHub stars!

And excited that professors are starting to use the book to teach LLM courses. Reach out to us if we can be of assistance!

And if you've liked the book, leave us a review on Amazon or Goodreads!

SWE-Bench has been one of the most important tasks measuring the progress of agents tackling software engineering in 2024. I caught up with two of its creators, @ofirpress.bsky.social and Carlos E. Jimenez, who shared their ideas on the state of LLM-backed agents.

www.youtube.com/watch?v=bivZ...

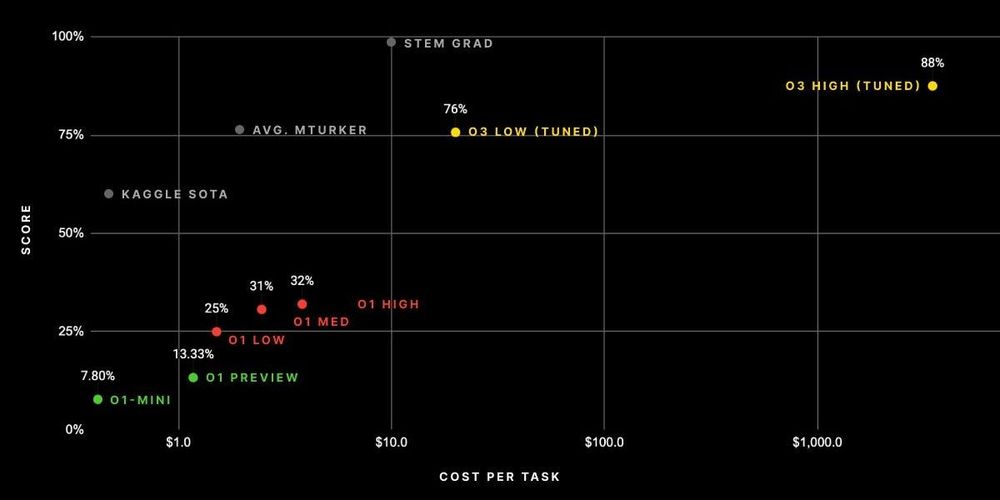

OpenAI's o3: The grand finale of AI in 2024

A step change as influential as the release of GPT-4. Reasoning language models are the current and next big thing.

I explain:

* The ARC prize

* o3 model size / cost

* Dispelling training myths

* Extreme benchmark progress

Good morning #NeurIPS2024! Stop by the @cohere.com booth at 3PM today (Thursday) for a signed copy of Hands-On Large Language Models - it will introduce you to LLMs, their applications, as well as Cohere's Embed, Rerank, and Command-R models.

Come early as quantities are limited!

I'll be in the Cohere #NeurIPS2024 booth most of this afternoon. Come say hi, ask questions, and yes, we're hiring!

Tomorrow I'll be signing copies of my book at 3PM! Limited copies available!

Hi NeurIPS!

Explore ~4,500 NeurIPS papers in this interactive visualization:

jalammar.github.io/assets/neuri...

(Click on a point to see the paper on the website)

Uses @cohere.com models and @lelandmcinnes.bsky.social's datamapplot/umap to help make sense of the overwhelming scale of NeurIPS.

Sure to be thought-provoking. The previous interview had fascinating thoughts on sci-fi (Dune vs. Foundation), on AI competition for AI safety, and on successful sci-fi as a self-preventing prophecy.

29.11.2024 02:48 — 👍 5 🔁 0 💬 0 📌 0