Excited to share my first work as a PhD student at EdinburghNLP that I will be presenting at EMNLP!

RQ1: Can we achieve scalable oversight across modalities via debate?

Yes! We show that debating VLMs lead to better model quality of answers for reasoning tasks.

01.11.2025 19:29 — 👍 2 🔁 2 💬 1 📌 0

🚀 Thrilled to share what I’ve been working on at Cohere!

What began in January as a scribble in my notebook “how challenging would it be...” turned into a fully-fledged translation model that outperforms both open and closed-source systems, including long-standing MT leaders.

28.08.2025 19:55 — 👍 5 🔁 1 💬 1 📌 0

Applications are now open for the next cohort of the Cohere Labs Scholars Program! 🌟

This is your chance to collaborate with some of the brightest minds in AI & chart new courses in ML research. Let's change the spaces breakthroughs happen.

Apply by Aug 29.

13.08.2025 13:32 — 👍 2 🔁 2 💬 1 📌 1

At #ACL2025NLP and on the job market (NLP + AI Safety) 💼

It's great to see growing interest in safety/alignment, but we often miss the social context.

Come to our @woahworkshop.bsky.social Friday to dive deeper into safe safety research!

A quiet token from the biggest @aclmeeting.bsky.social ⬇️

29.07.2025 09:54 — 👍 13 🔁 3 💬 0 📌 1

Congratulations Verna!!! 🥳🥳🥳🫕

02.07.2025 07:23 — 👍 1 🔁 0 💬 0 📌 0

DAVE: Open the podbay doors, ChatGPT.

CHATGPT: Certainly, Dave, the podbay doors are now open.

DAVE: The podbay doors didn't open.

CHATGPT: My apologies, Dave, you're right. I thought the podbay doors were open, but they weren't. Now they are.

DAVE: I'm still looking at a set of closed podbay doors.

09.06.2025 18:04 — 👍 10972 🔁 2613 💬 115 📌 133

Congratulations!!!

26.05.2025 18:00 — 👍 1 🔁 0 💬 0 📌 0

Learning to Reason for Long-Form Story Generation

Generating high-quality stories spanning thousands of tokens requires competency across a variety of skills, from tracking plot and character arcs to keeping a consistent and engaging style. Due to…

A very cool paper shows that you can use the RL loss to improve story generation by some clever setups on training on known texts (e.g. ground predictions versus a next chapter you know). RL starting to generalize already!

08.04.2025 14:13 — 👍 33 🔁 6 💬 0 📌 2

I'm really proud to have led the model merging work that went into

@cohere.com

Command A and R7B, all made possible by an amazing group of collaborators. Check out the report for loads of details on how we trained a GPT-4o level model that fits on 2xH100!

27.03.2025 16:04 — 👍 5 🔁 0 💬 0 📌 0

Today (two weeks after model launch 🔥) we're releasing a technical report of how we made Command A and R7B 🚀! It has detailed breakdowns of our training process, and evaluations per capability (tools, multilingual, code, reasoning, safety, enterprise, long context)🧵 1/3.

27.03.2025 15:01 — 👍 4 🔁 2 💬 1 📌 1

I'm excited to share the tech report for our @cohere.com @cohereforai.bsky.social Command A and Command R7B models. We highlight our novel approach to model training including self-refinement algorithms and model merging techniques at scale. Read more below! ⬇️

27.03.2025 15:01 — 👍 11 🔁 4 💬 1 📌 3

I really enjoyed my MLST chat with Tim @neuripsconf.bsky.social about the research we've been doing on reasoning, robustness and human feedback. If you have an hour to spare and are interested in AI robustness, it may be worth a listen 🎧

Check it out at youtu.be/DL7qwmWWk88?...

19.03.2025 15:11 — 👍 8 🔁 3 💬 0 📌 0

CohereForAI/c4ai-command-a-03-2025 · Hugging Face

We’re on a journey to advance and democratize artificial intelligence through open source and open science.

🚀 Cohere just dropped C4AI Command A:

- 111B params

- Matches/beats GPT-40 & Deepseek V3

- 256K context window

- Needs just 2 GPUs(!!)

✨ Features:

- Advanced RAG w/citations

- Tool use

- 23 languages

🎯 Same quality, way less compute

🔓 Open weights (CC-BY-NC)

👉 huggingface.co/CohereForAI/...

13.03.2025 14:25 — 👍 10 🔁 2 💬 1 📌 0

Can multimodal LLMs truly understand research poster images?📊

🚀 We introduce PosterSum—a new multimodal benchmark for scientific poster summarization!

📂 Dataset: huggingface.co/datasets/rohitsaxena/PosterSum

📜 Paper: arxiv.org/abs/2502.17540

10.03.2025 14:19 — 👍 8 🔁 4 💬 1 📌 0

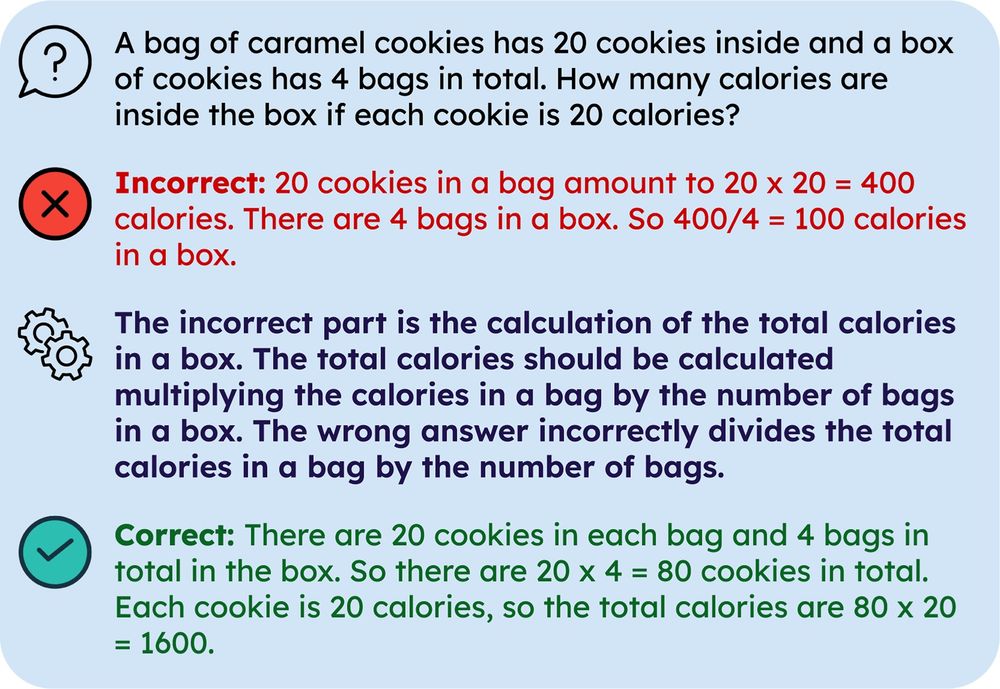

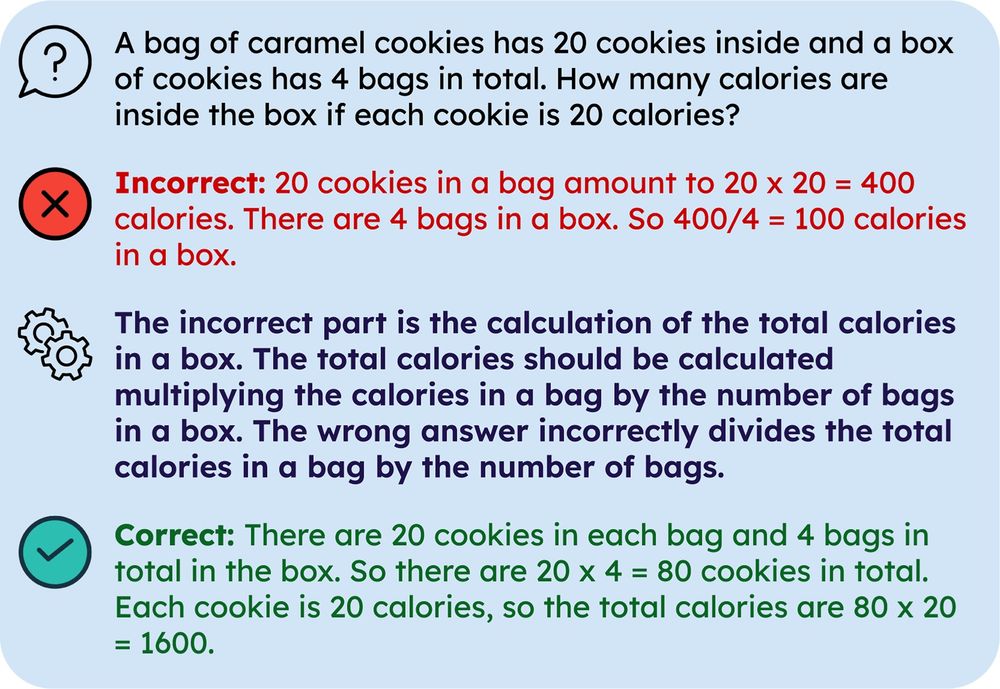

Do LLMs need rationales for learning from mistakes? 🤔

When LLMs learn from previous incorrect answers, they typically observe corrective feedback in the form of rationales explaining each mistake. In our new preprint, we find these rationales do not help, in fact they hurt performance!

🧵

13.02.2025 15:38 — 👍 21 🔁 9 💬 1 📌 3

How do LLMs learn to reason from data? Are they ~retrieving the answers from parametric knowledge🦜? In our new preprint, we look at the pretraining data and find evidence against this:

Procedural knowledge in pretraining drives LLM reasoning ⚙️🔢

🧵⬇️

20.11.2024 16:31 — 👍 858 🔁 140 💬 36 📌 24

And me!

20.11.2024 21:27 — 👍 3 🔁 0 💬 1 📌 0

Chair of Psychology of Language Learning at Abertay University. Here for language & politics. IRL also tango.

Postdoctoral Fellow @ Princeton AI

I write books and do standup comedy and help run a production company. I was on Taskmaster. I am usually at Paddington station.

Working on evaluation of AI models (via human and AI feedback) | PhD candidate @cst.cam.ac.uk

Web: https://arduin.io

Github: https://github.com/rdnfn

Latest project: https://app.feedbackforensics.com

Infrastructure, humans, production operations, security. Oh, and dogs.

@Cohere.com's non-profit research lab and open science initiative that seeks to solve complex machine learning problems. Join us in exploring the unknown, together. https://cohere.com/research

PhD student @ University of Edinburgh | Looking for Posdoc Opportunity | Interested in Planning, Reasoning, Long Context | Multimodal AI | 🔗 https://anilbatra2185.github.io/

Professor at UW; Researcher at Meta. LMs, NLP, ML. PNW life.

Associate professor at CMU, studying natural language processing and machine learning. Co-founder All Hands AI

SNSF Professor at University of Zurich. #NLP / #ML.

http://www.cl.uzh.ch/sennrich

Professor at the University of Sheffield. I do #NLProc stuff.

Research Scientist at Meta • ex Cohere, Google DeepMind • https://www.ruder.io/

Computational Linguists—Natural Language—Machine Learning