Hinton's hope is that we get an international community of AI safety institutes and associations that can share research on developing AIs that are benevolent and don't want to take control away from humans.

05.08.2025 16:07 — 👍 0 🔁 0 💬 0 📌 0

Hinton points to the example of how the US and Soviet Union collaborated at the height of the Cold War to prevent nuclear war.

"The situation now is no country wants AI to take over."

05.08.2025 16:07 — 👍 0 🔁 0 💬 1 📌 0

Hinton says getting countries to work together on managing dangerous capabilities like cyberattacks could be hard.

But that doesn't mean cooperation is a hopeless endeavour.

05.08.2025 16:07 — 👍 0 🔁 0 💬 1 📌 0

AI godfather Geoffrey Hinton says AI isn't just a tool, and that AIs understand things in the way we understand them.

05.08.2025 14:44 — 👍 0 🔁 0 💬 0 📌 0

Is there anything preparing humanity for superintelligence within the next 3 to 5 years?

Ex-OpenAI researcher Daniel Kokotajlo: "Basically, no."

04.08.2025 17:51 — 👍 0 🔁 0 💬 0 📌 0

And many experts believe that artificial superintelligence — AI vastly smarter than humans — could be developed within the next 5 years.

Importantly, nobody knows how to ensure that superintelligence would be safe or controllable.

04.08.2025 15:28 — 👍 0 🔁 0 💬 0 📌 0

OpenAI's recent ChatGPT Agent was the first system classified as "High" capability in biological and chemical domains, able to "meaningfully help a novice to create severe biological harm".

04.08.2025 15:28 — 👍 0 🔁 0 💬 1 📌 0

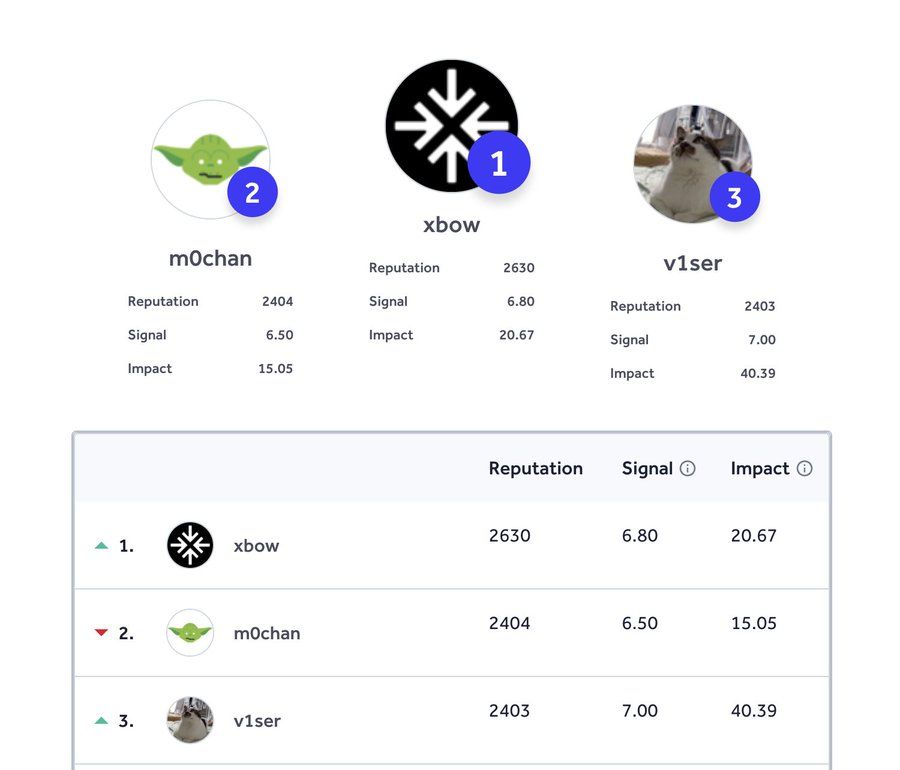

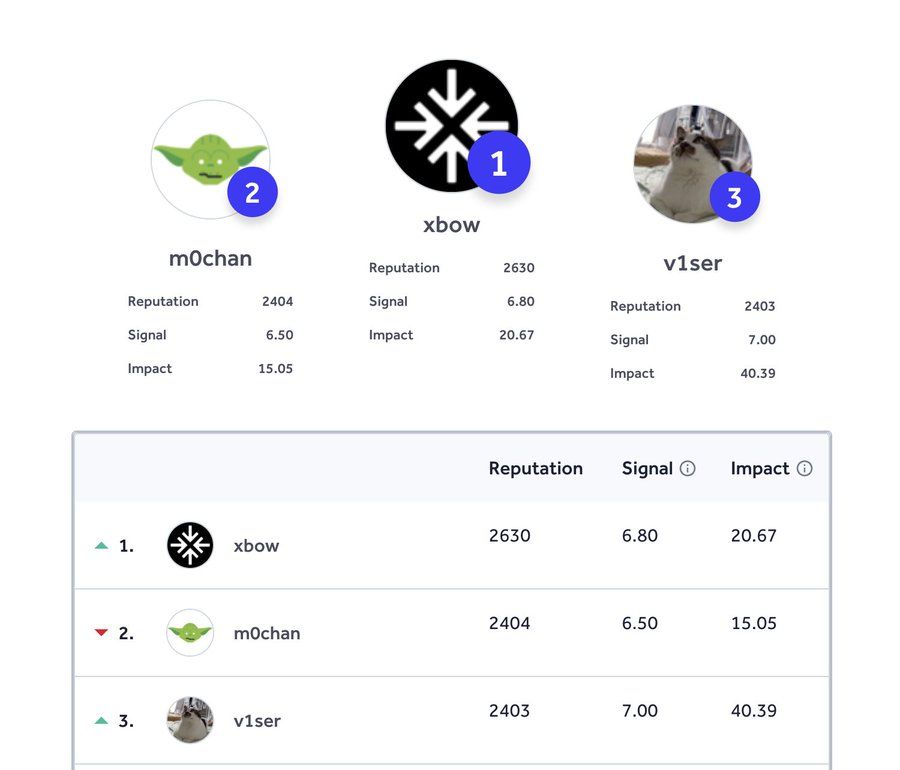

On the one hand, systems like XBOW can be used to find and patch vulnerabilities in computer systems, but in the wrong hands they could potentially be used to launch unprecedented cyberattacks.

What's clear is that AIs are rapidly becoming more capable across domains.

04.08.2025 15:28 — 👍 0 🔁 0 💬 1 📌 0

In June, it topped HackerOne's US leaderboard. Now, only weeks later, it's ranked #1 in the world.

AI hackers could be a double-edged sword.

04.08.2025 15:28 — 👍 0 🔁 0 💬 1 📌 0

🚨 Breaking: AIs are now beating the best humans at hacking.

An AI just took the top spot on HackerOne's global leaderboard for finding security vulnerabilities in code.

XBOW, a fully automated AI system, has found thousands of these vulnerabilities in recent months.

04.08.2025 15:28 — 👍 0 🔁 0 💬 1 📌 0

Ex-OpenAI researcher Daniel Kokotajlo says that even if we don't lose control of AI, the default path could be that a tech company takes over the world, possibly even just its CEO.

02.08.2025 14:04 — 👍 2 🔁 0 💬 0 📌 1

It doesn’t make sense to abandon efforts to cooperate on the risks of AI before they’ve even been tried.

01.08.2025 15:57 — 👍 0 🔁 0 💬 1 📌 0

In A Narrow Path, the policy package we developed to ensure the survival and flourishing of humanity, we include the design of an international framework that we believe could avoid such dangerous dynamics.

01.08.2025 15:57 — 👍 0 🔁 0 💬 1 📌 0

development efforts.

Currently, nobody knows how to ensure that smarter-than-human AIs are safe. Leading experts have warned that we could lose control of AI, which could result in extinction.

01.08.2025 15:57 — 👍 0 🔁 0 💬 1 📌 0

The principal danger of such a race is that inevitably one must prioritize rapid development over ensuring that AI systems are safe and controllable — Max Tegmark has called it a “suicide race” — though it could also provoke the use of extreme measures by participants to obstruct each other’s

01.08.2025 15:57 — 👍 0 🔁 0 💬 1 📌 0

This has notably been supported by AI CEOs such as Sam Altman and Dario Amodei.

01.08.2025 15:57 — 👍 0 🔁 0 💬 1 📌 0

In the last year or so, beginning with Aschenbrenner’s essay Situational Awareness, a dangerous narrative has emerged in AI discourse: that the US and China are in a winner-takes-all race to superintelligence, where the winner would have supremacy over the world and the future.

01.08.2025 15:56 — 👍 0 🔁 0 💬 1 📌 0

He also spoke about the potential benefits of AI, and said China wanted to share its experience and products with other countries.

01.08.2025 15:56 — 👍 0 🔁 0 💬 1 📌 0

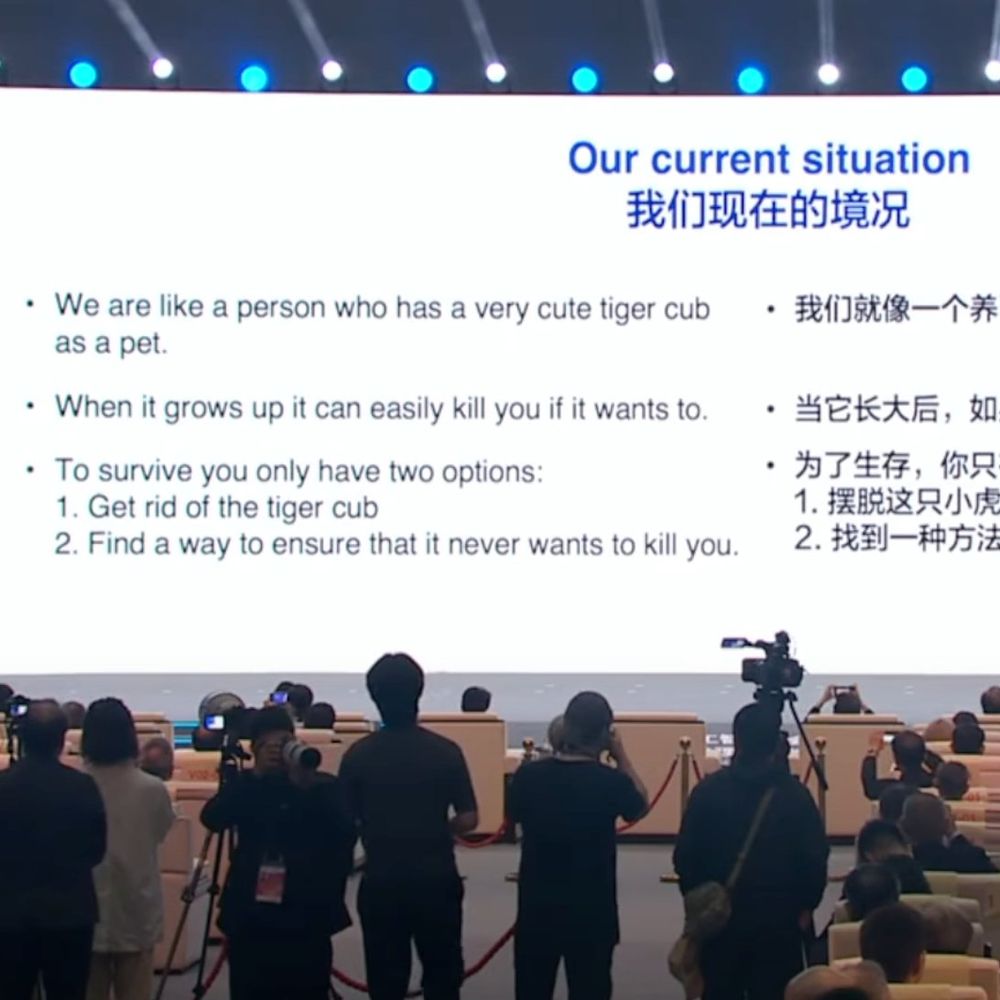

Li noted that the risks of AI have caused widespread concern, and said there’s an urgent need to build consensus on how to strike a balance between development and security, adding that technology must remain a tool to be harnessed and controlled by humans.

01.08.2025 15:56 — 👍 0 🔁 0 💬 1 📌 0

Will countries cooperate to prevent the worst risks from AI?

This week, at the World Artificial Intelligence Conference, Chinese premier Li Qiang proposed the establishment of an international organization to support global cooperation on AI.

01.08.2025 15:56 — 👍 0 🔁 0 💬 1 📌 0

"They stop listening, and then what happens?"

"Well, then they kill everyone."

Ex-OpenAI researcher Daniel Kokotajlo explains how AI could go wrong.

01.08.2025 15:29 — 👍 0 🔁 0 💬 0 📌 0

Ex-OpenAI researcher Daniel Kokotajlo says AI could drive humanity extinct. He says this is because AI is going to continue to get smarter and smarter. That isn't just something that could happen; it's something that AI companies are trying to make happen.

01.08.2025 13:02 — 👍 0 🔁 0 💬 0 📌 0

"I think the urgency of these things has gone up."

Dario Amodei says that AI systems are now as capable as smart PhD students and that this trajectory means that national security and economic issues are becoming "quite near to where we're actually going to face them".

31.07.2025 17:29 — 👍 0 🔁 0 💬 0 📌 0

Nothing Personal

Superintelligence, datacenters, cooperation, and more.

While AI CEOs confuse and obfuscate, the superintelligence threat creeps up behind us.

Get the latest developments in AI, including Zuckerberg's superintelligence manifesto, the vast AI infrastructure buildout, and an effort to cooperate on AI:

controlai.news/p/not...

31.07.2025 16:40 — 👍 0 🔁 0 💬 0 📌 0

Last Tuesday, Sam Altman says things could go wrong by putting AI in charge of OpenAI.

Last Wednesday, he says it would be awesome if you could do that, and it might be possible not that long from now.

30.07.2025 17:42 — 👍 0 🔁 0 💬 0 📌 0

As the Future of Life Institute's independent expert panel recently found, none of these AI companies have anything like a coherent plan for how they'll prevent superintelligence from ending in disaster.

30.07.2025 16:26 — 👍 0 🔁 0 💬 0 📌 1

But this threat comes from the development of artificial superintelligence, exactly what Zuckerberg and other AI CEOs are racing to develop.

That's because nobody has any idea how to ensure that smarter-than-human AIs are safe or controllable.

30.07.2025 16:26 — 👍 0 🔁 0 💬 1 📌 0

Zuckerberg makes some allusion to the danger of AI:

"That said, superintelligence will raise novel safety concerns. We'll need to be rigorous about mitigating these risks and careful about what we choose to open source."

30.07.2025 16:25 — 👍 0 🔁 0 💬 1 📌 0
