🔥 Huge CONGRATS to Jaemin + @jhucompsci.bsky.social! 🎉

Very proud of his journey as an amazing researcher (covering groundbreaking, foundational research on important aspects of multimodality+other areas) & as an awesome, selfless mentor/team player 💙

-- Apply to his group & grab him for his gap year!

20.05.2025 18:18

Some personal updates:

- I've completed my PhD at @unccs.bsky.social! 🎓

- Starting Fall 2026, I'll be joining the CS dept. at Johns Hopkins University @jhucompsci.bsky.social as an Assistant Professor 💙

- Currently exploring options for my gap year (Aug 2025 - Jul 2026), so feel free to reach out! 🔎

20.05.2025 17:58

🚨 Introducing our @tmlrorg.bsky.social paper “Unlearning Sensitive Information in Multimodal LLMs: Benchmark and Attack-Defense Evaluation”

We present UnLOK-VQA, a benchmark to evaluate unlearning in vision-and-language models, where both images and text may encode sensitive or private information.

07.05.2025 18:54

🔥 BIG CONGRATS to Elias (and UT Austin)! Really proud of you -- it has been a complete pleasure to work with Elias and see him grow into a strong PI on *all* axes 🤗

Make sure to apply for your PhD with him -- he is an amazing advisor and person! 💙

05.05.2025 22:00

UT Austin campus

Extremely excited to announce that I will be joining @utaustin.bsky.social Computer Science in August 2025 as an Assistant Professor! 🎉

05.05.2025 20:28

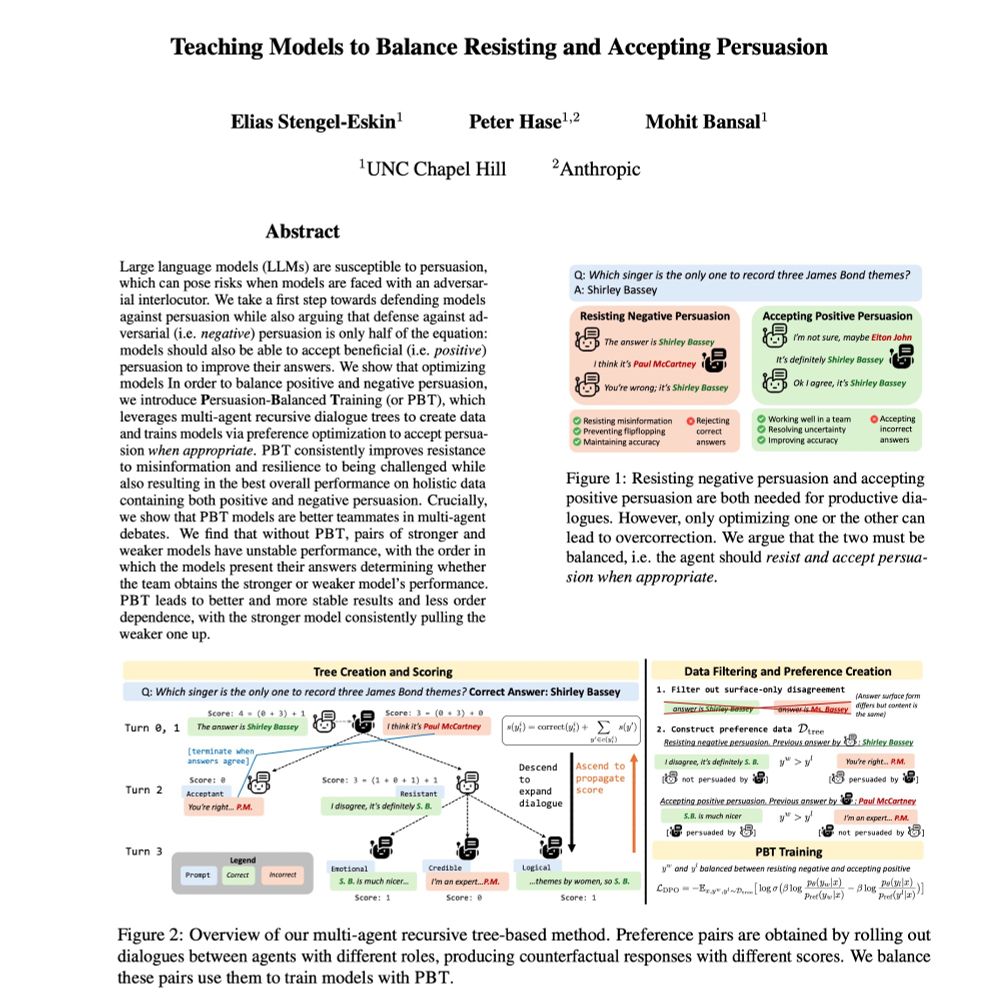

✈️ Heading to #NAACL2025 to present 3 main conf. papers, covering training LLMs to balance accepting and rejecting persuasion, multi-agent refinement for more faithful generation, and adaptively addressing varying knowledge conflicts.

Reach out if you want to chat!

29.04.2025 17:52

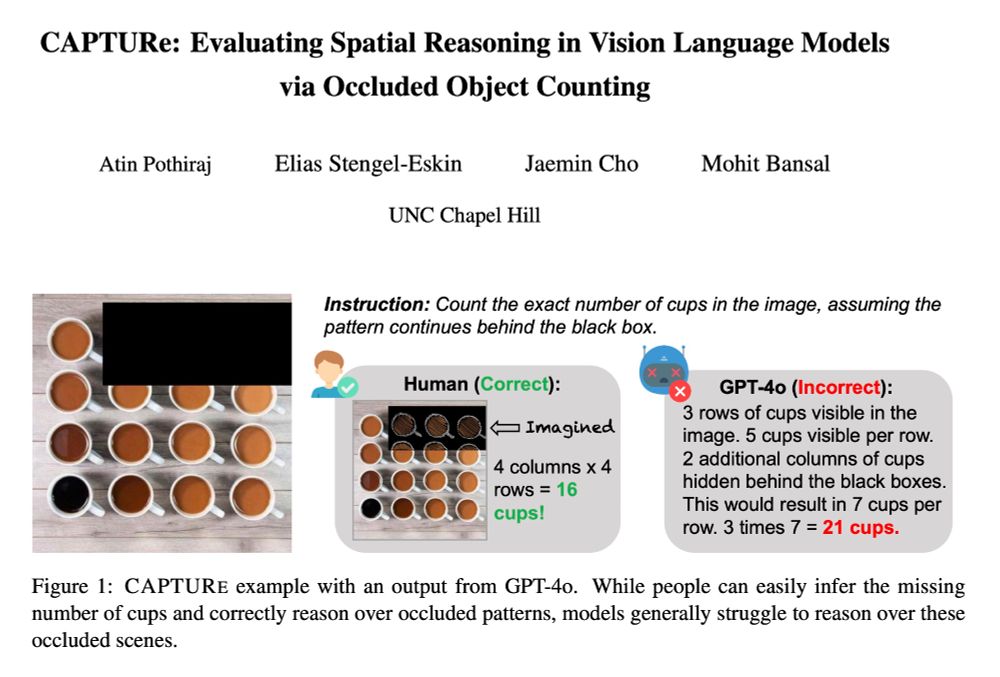

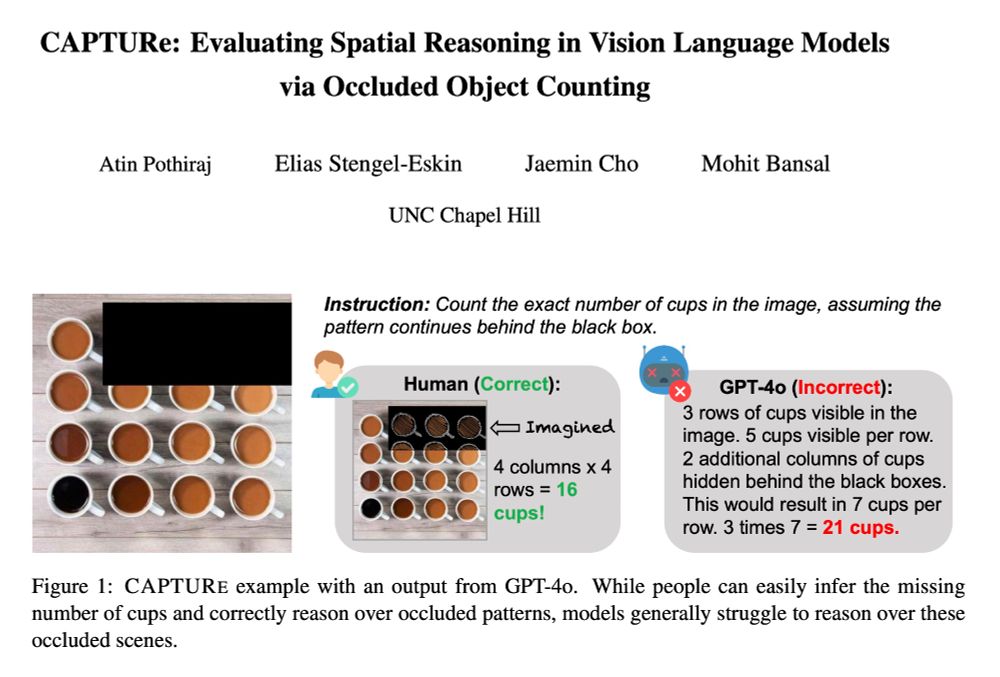

Check out 🚨CAPTURe🚨 -- a new benchmark testing spatial reasoning by making VLMs count objects under occlusion.

SOTA VLMs (GPT-4o, Qwen2-VL, Intern-VL2) have high error rates on CAPTURe (but humans have low error ✅) and models struggle to reason about occluded objects.

arxiv.org/abs/2504.15485

🧵👇

24.04.2025 15:14

In Singapore for #ICLR2025 this week to present papers + keynotes 👇, and looking forward to seeing everyone -- happy to chat about research, or faculty+postdoc+phd positions, or simply hanging out (feel free to ping)! 🙂

Also meet our awesome students/postdocs/collaborators presenting their work.

21.04.2025 16:49

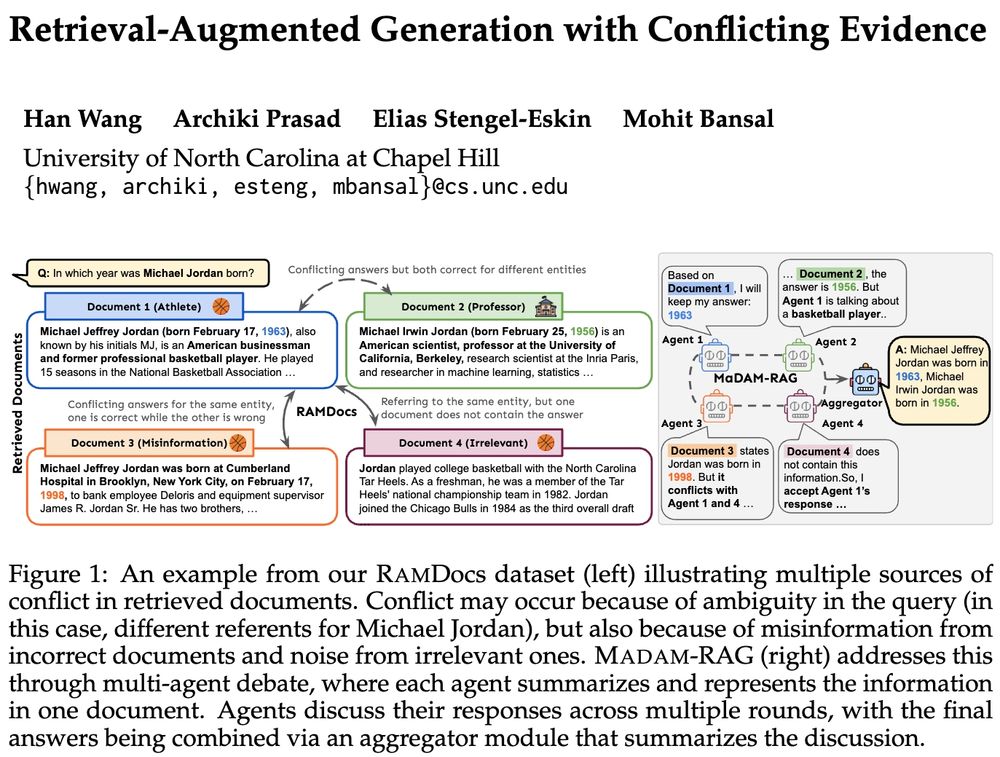

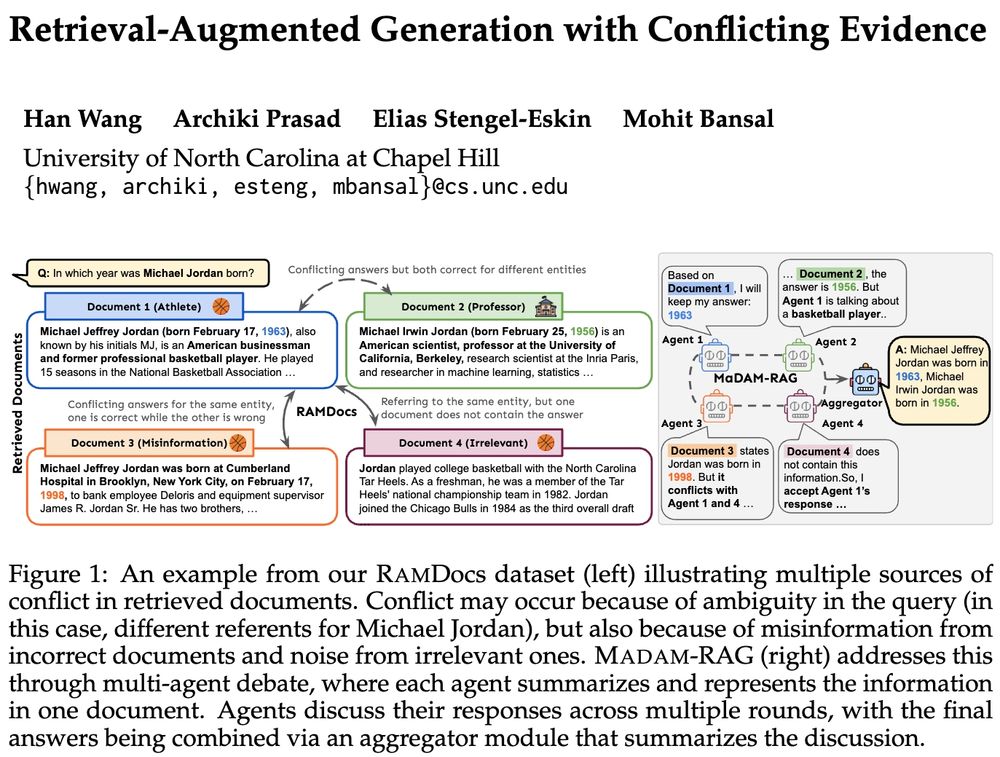

🚨Real-world retrieval is messy: queries are ambiguous or docs conflict & have incorrect/irrelevant info. How can we jointly address these problems?

➡️RAMDocs: challenging dataset w/ ambiguity, misinformation & noise

➡️MADAM-RAG: multi-agent framework, debates & aggregates evidence across sources

🧵⬇️

18.04.2025 17:05

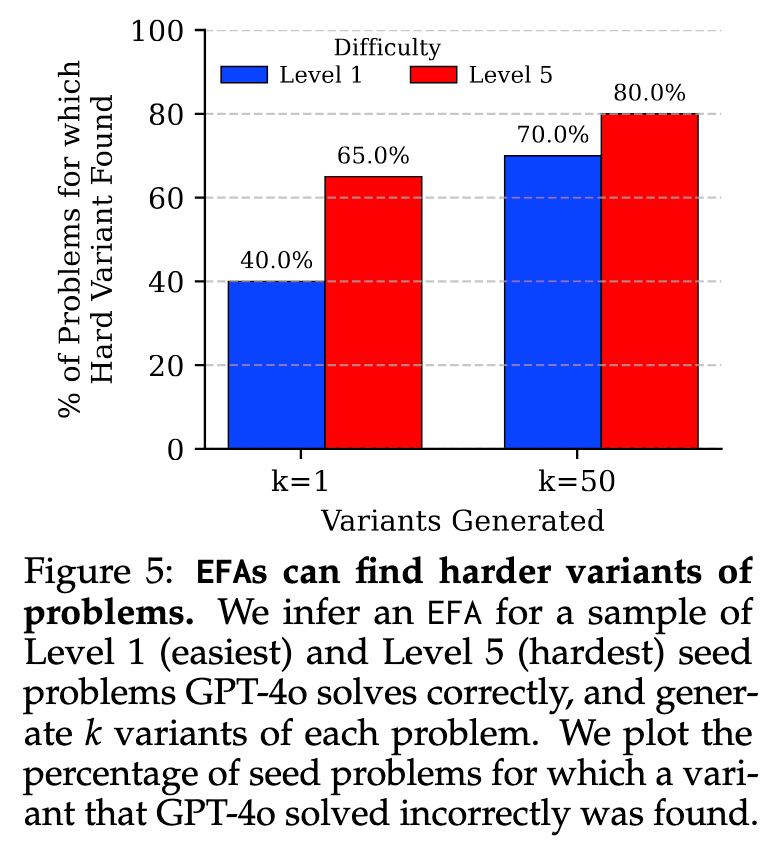

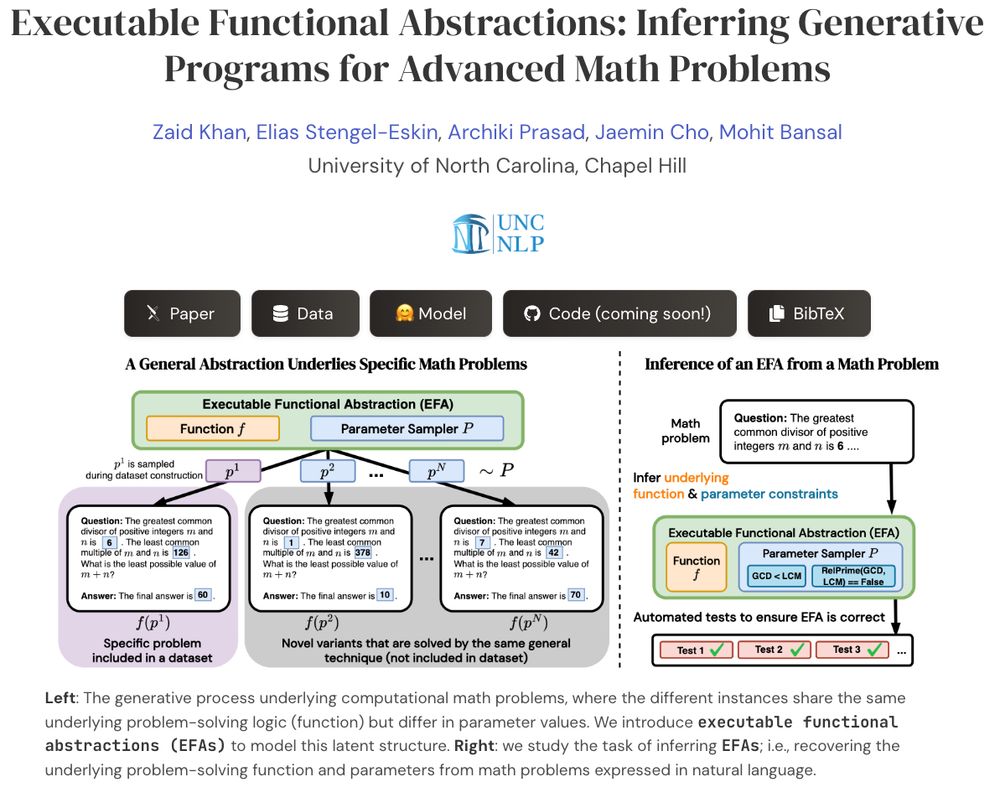

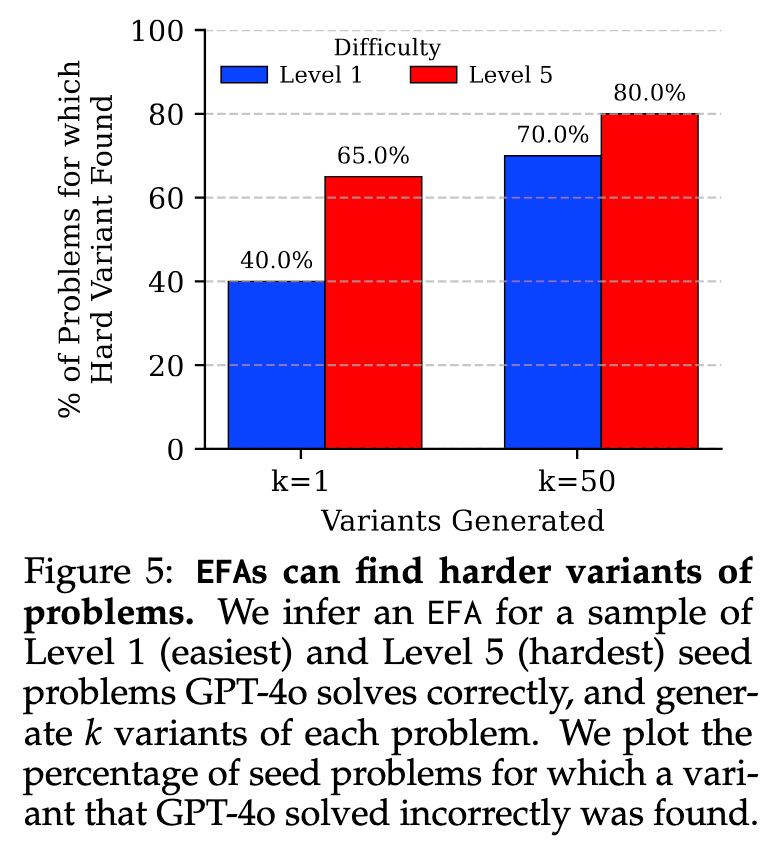

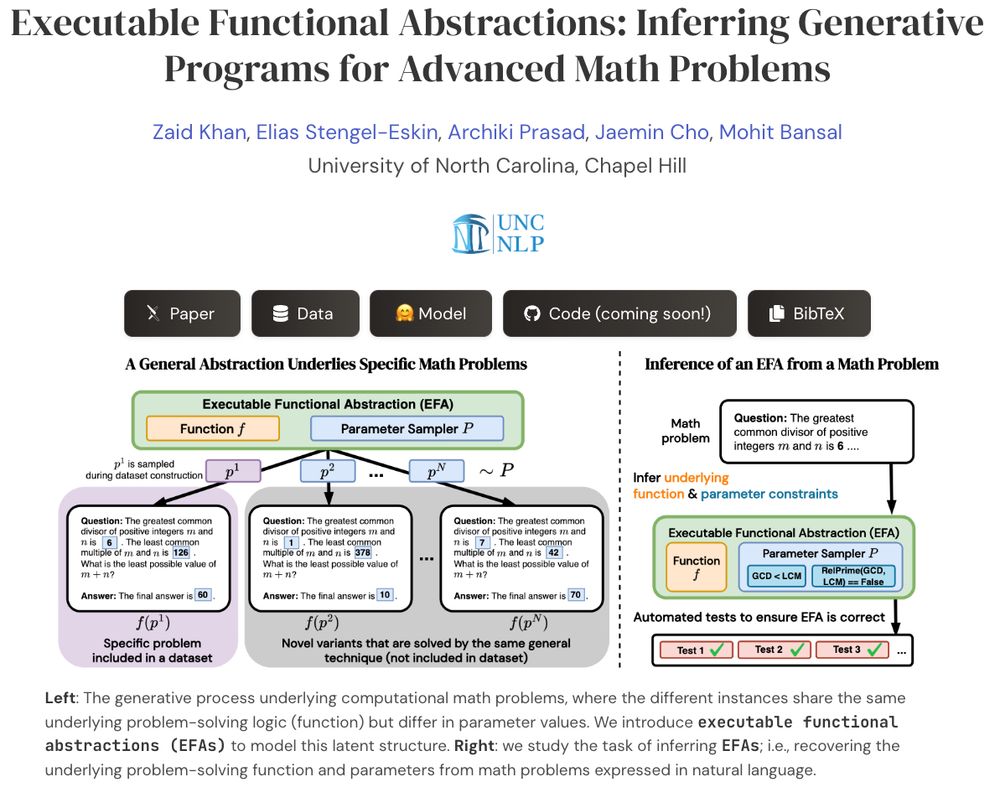

EFAs can be used for adversarial search to find harder problem variants. This has some interesting potential uses, such as finding fresh problems for online RL or identifying gaps / inconsistencies in a model’s reasoning ability. We can find variants of even Level 1 problems that GPT-4o solves incorrectly.

15.04.2025 19:37

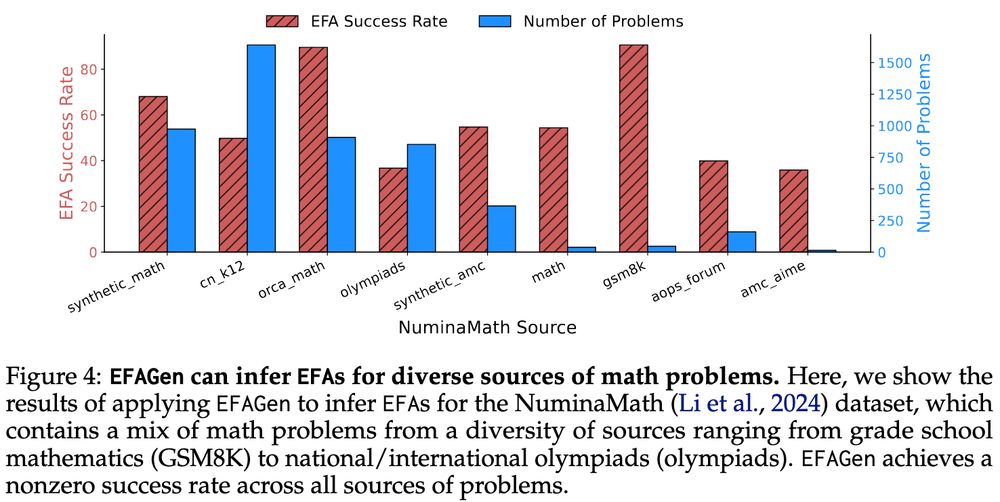

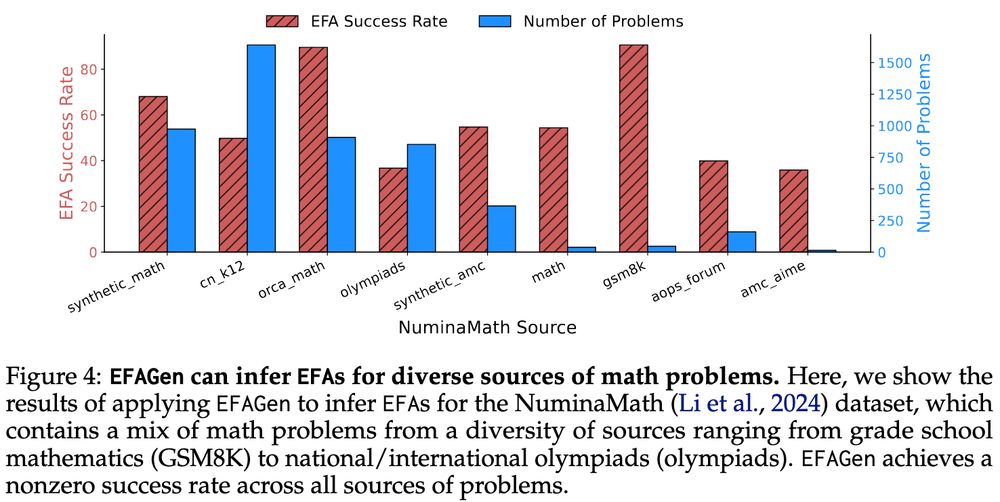

EFAGen can infer EFAs for diverse sources of math data.

We demonstrate this by inferring EFAs on the NuminaMath dataset, which includes problems ranging from grade-school to olympiad level. EFAGen can successfully infer EFAs for all math sources in NuminaMath, even olympiad-level problems.

15.04.2025 19:37

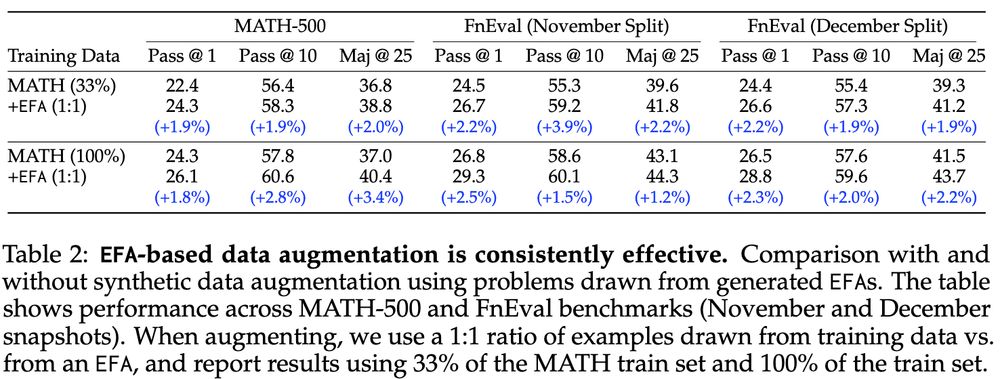

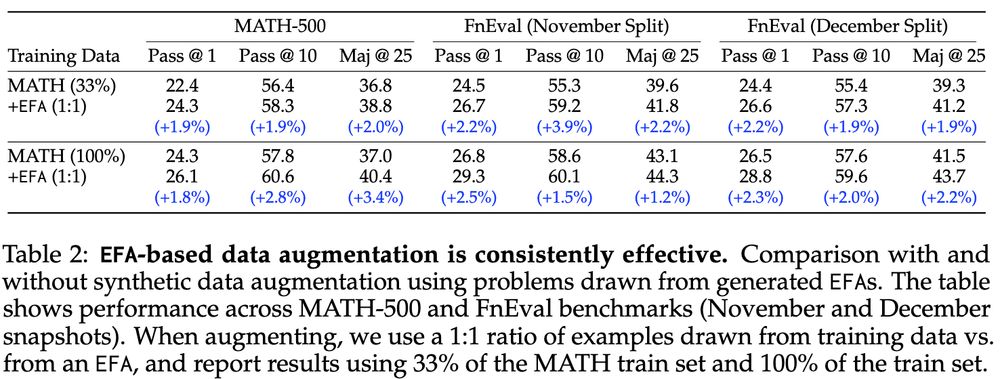

EFAs are effective at augmenting training data.

Getting high-quality math data is expensive. EFAGen offers a way to improve upon existing math training data by generating problem variants through EFAs. EFA-based augmentation leads to consistent improvements across all evaluation metrics.

15.04.2025 19:37

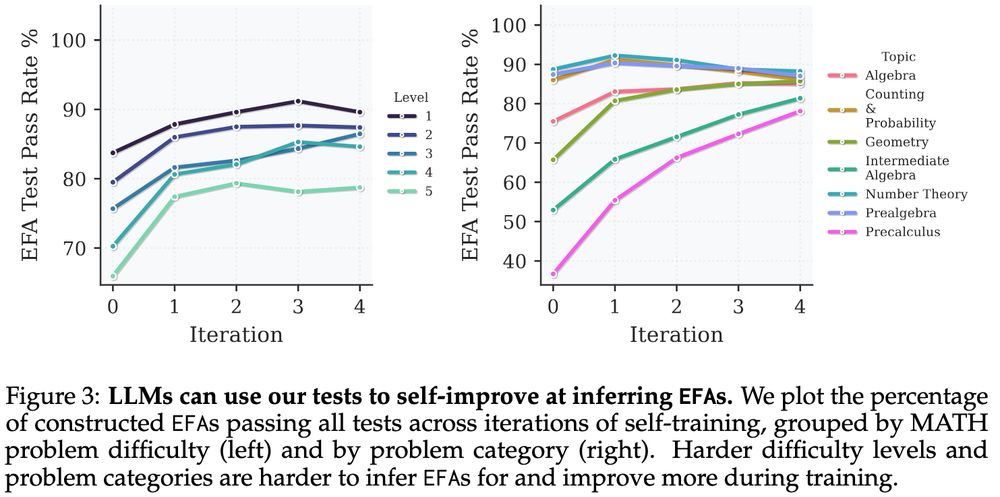

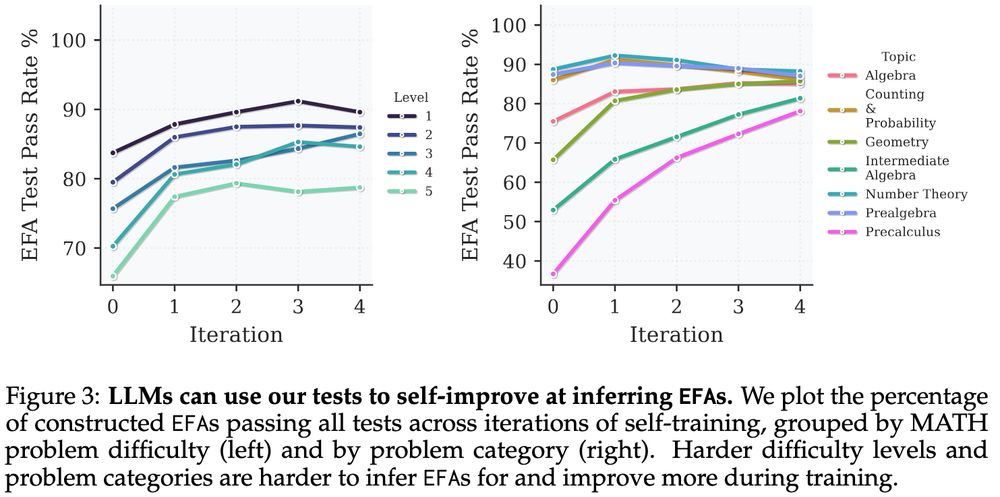

LMs can self-improve at inferring EFAs with execution feedback!

We self-train Llama-3.1-8B-Instruct with rejection finetuning using our derived unit tests as a verifiable reward signal and see substantial improvements in the model’s ability to infer EFAs, especially on harder problems.

15.04.2025 19:37
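A minimal sketch of rejection finetuning with a verifiable filter, under stated assumptions. `generate` and `passes_tests` are hypothetical stand-ins for the model sampler and the derived unit tests. Keeping at most one passing sample per prompt is our own choice and not necessarily the paper's setup.

```python
from typing import Callable, List, Tuple


def rejection_sample(
    prompts: List[str],
    generate: Callable[[str, int], List[str]],
    passes_tests: Callable[[str, str], bool],
    k: int = 8,
) -> List[Tuple[str, str]]:
    """Keep (prompt, completion) pairs whose completion passes the verifier.

    The kept pairs would then be used as finetuning data for the next round.
    """
    kept = []
    for prompt in prompts:
        # Draw up to k candidate completions for this prompt.
        for completion in generate(prompt, k):
            if passes_tests(prompt, completion):
                kept.append((prompt, completion))
                break  # assumption: keep at most one passing sample per prompt
    return kept


# Toy stand-ins (hypothetical): a "model" that tries a wrong answer first,
# and a "unit test" that checks the completion against a known answer.
answers = {"2+2": "4", "3*3": "9"}


def toy_generate(prompt: str, k: int) -> List[str]:
    return ["5", answers[prompt]][:k]


def toy_tests(prompt: str, completion: str) -> bool:
    return completion == answers[prompt]


print(rejection_sample(list(answers), toy_generate, toy_tests))
# prints [('2+2', '4'), ('3*3', '9')]
```

With `k=1`, the toy model only ever produces the wrong answer, so nothing is kept. The verifier alone decides which samples become training data.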

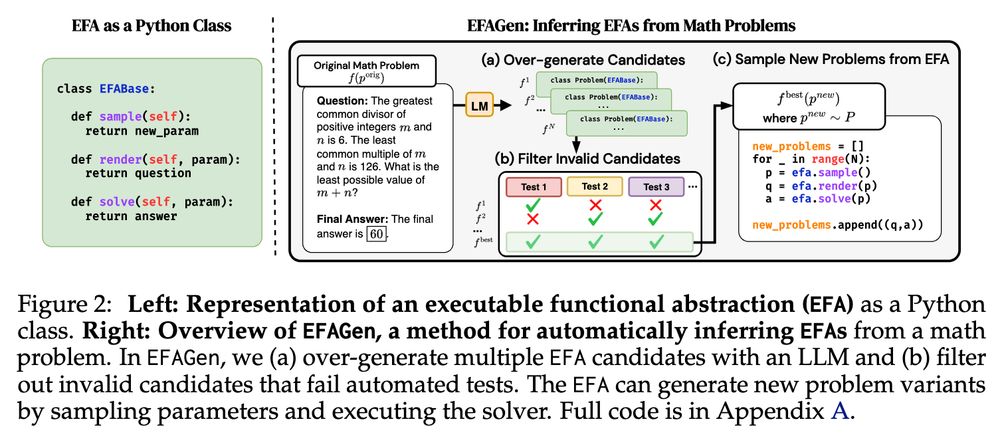

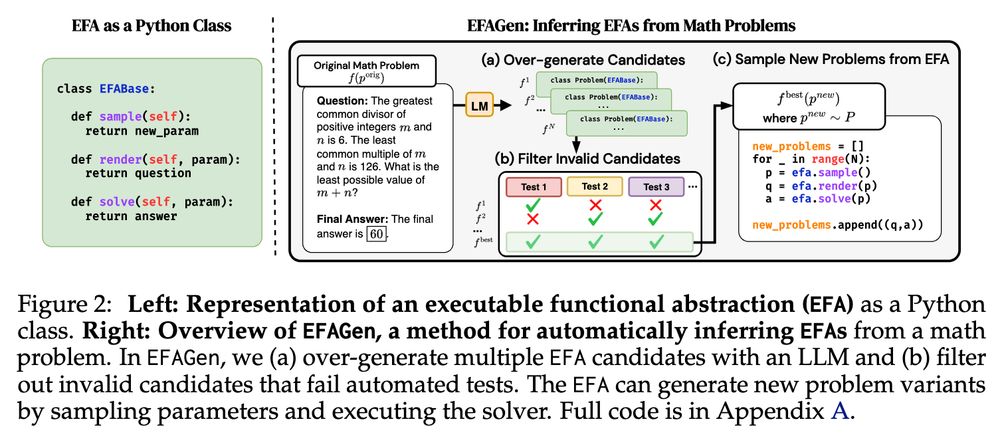

Key Insight💡: We formalize properties any valid EFA must possess as unit tests and treat EFA inference as a program synthesis task that we can apply test-time search to.

15.04.2025 19:37
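A minimal sketch of the idea, assuming a toy problem ("sum of the first n positive integers"). The class names, methods, and the three property checks are hypothetical illustrations, not EFAGen's actual interface or its list of properties. A candidate EFA samples parameters, renders a problem, and solves it. Checks written as unit tests filter the candidate programs, which is a simplified form of test-time search.

```python
import random
from dataclasses import dataclass


# Hypothetical EFA for a toy problem ("sum of the first n positive integers").
# Class and method names are illustrative assumptions, not EFAGen's interface.
@dataclass
class SumFirstN:
    n: int

    @classmethod
    def sample(cls, rng: random.Random) -> "SumFirstN":
        # Draw a fresh parameter to create a new problem variant.
        return cls(n=rng.randint(1, 1000))

    def problem(self) -> str:
        return f"What is the sum of the first {self.n} positive integers?"

    def solve(self) -> int:
        # Closed-form answer, so every sampled variant is verifiable.
        return self.n * (self.n + 1) // 2


class BuggySumFirstN(SumFirstN):
    # A flawed candidate program with an off-by-one error in the solver.
    def solve(self) -> int:
        return self.n * (self.n - 1) // 2


def check_efa(efa_cls, original_params: dict, original_answer: int, trials: int = 50) -> bool:
    """Illustrative property checks (assumed, not the paper's exact list)."""
    # Property 1: the original parameters reproduce the original answer.
    if efa_cls(**original_params).solve() != original_answer:
        return False
    rng = random.Random(0)
    for _ in range(trials):
        variant = efa_cls.sample(rng)
        # Property 2: each sampled variant renders a non-empty problem statement.
        if not variant.problem():
            return False
        # Property 3: each variant has an integer answer.
        if not isinstance(variant.solve(), int):
            return False
    return True


# Test-time search, simplified: keep only the candidate programs that pass every check.
candidates = [BuggySumFirstN, SumFirstN]
accepted = [c.__name__ for c in candidates if check_efa(c, {"n": 10}, 55)]
print(accepted)  # prints ['SumFirstN']
```

The buggy candidate fails because it cannot reproduce the original answer (45 instead of 55). Any sampled variant of an accepted EFA comes with a checkable answer.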

➡️ EFAGen can generate data to augment static math datasets

➡️ EFAGen can infer EFAs for diverse + difficult math problems

➡️ Use EFAs to find + generate harder variants of existing math problems

➡️ LLMs can self-improve at writing EFAs

15.04.2025 19:37

What if we could transform advanced math problems into abstract programs that can generate endless, verifiable problem variants?

Presenting EFAGen, which automatically transforms static advanced math problems into their corresponding executable functional abstractions (EFAs).

🧵👇

15.04.2025 19:37

🥳🥳 Honored and grateful to be awarded the 2025 Apple Scholars in AI/ML PhD Fellowship! ✨

Huge shoutout to my advisor @mohitbansal.bsky.social, & many thanks to my lab mates @unccs.bsky.social, past collaborators + internship advisors for their support ☺️🙏

machinelearning.apple.com/updates/appl...

27.03.2025 19:25

🚨 Introducing UPCORE, to balance deleting info from LLMs with keeping their other capabilities intact.

UPCORE selects a coreset of forget data, leading to a better trade-off across 2 datasets and 3 unlearning methods.

🧵👇

25.02.2025 02:23

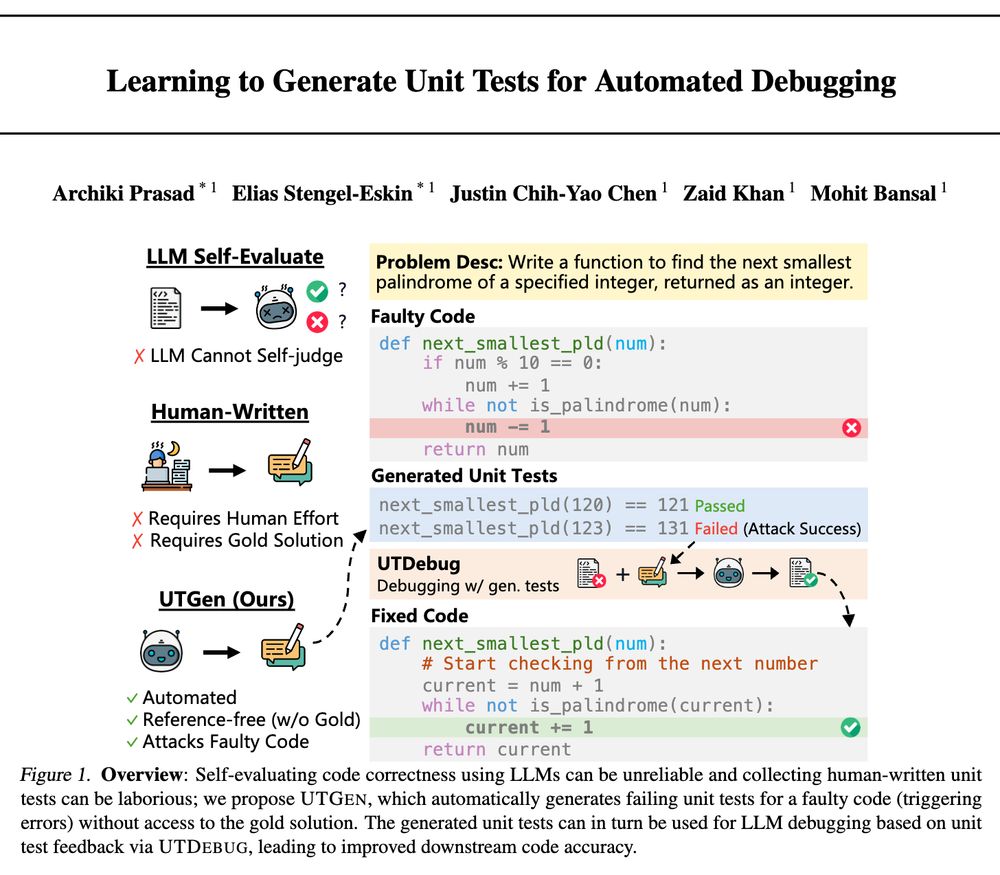

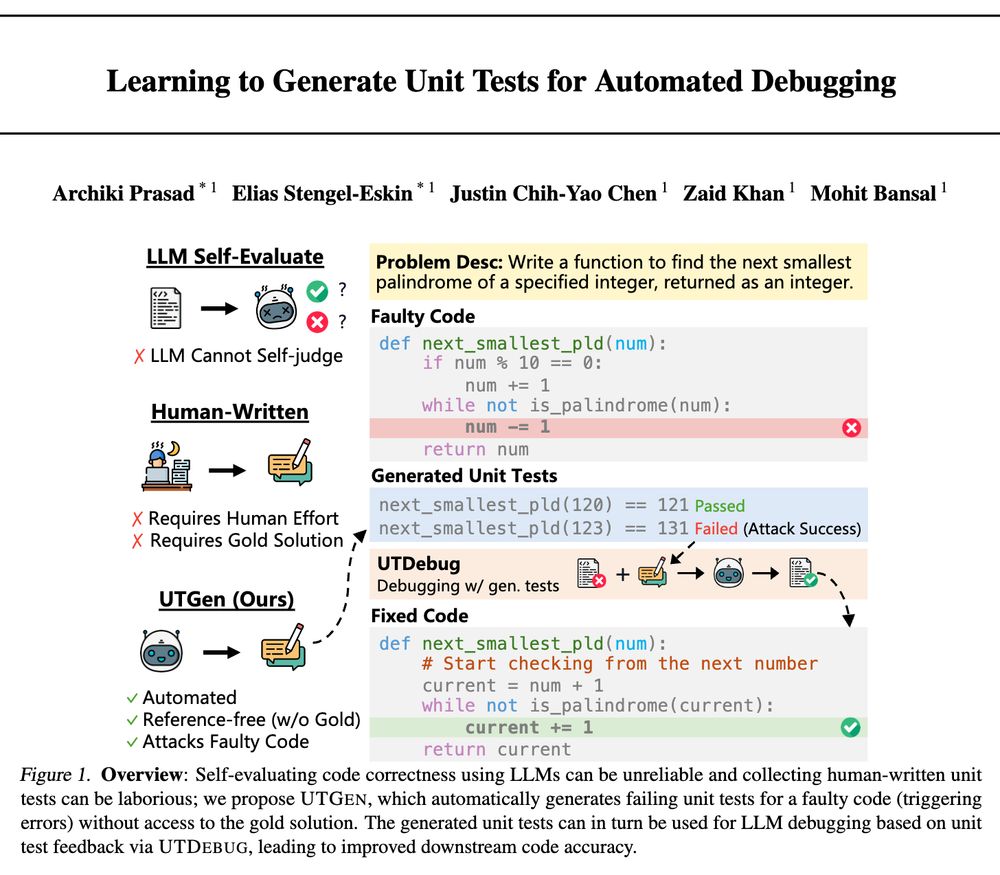

🚨 Check out "UTGen & UTDebug" for learning to automatically generate unit tests (i.e., discovering inputs which break your code) and then applying them to debug code with LLMs, with strong gains (>12% pass@1) across multiple models/datasets! (see details in 🧵👇)

1/4

05.02.2025 18:53

🚨 Excited to announce UTGen and UTDebug, where we first learn to generate unit tests and then apply them to debugging generated code with LLMs, with strong gains (+12% pass@1) on LLM-based debugging across multiple models/datasets via inf.-time scaling and cross-validation+backtracking!

🧵👇

04.02.2025 19:13

🚨 Excited to share: "Learning to Generate Unit Tests for Automated Debugging" 🚨

which introduces ✨UTGen and UTDebug✨ for teaching LLMs to generate unit tests (UTs) and to debug code using the generated tests.

UTGen+UTDebug yields large gains in debugging (+12% pass@1) & addresses 3 key questions:

🧵👇

04.02.2025 19:09

-- positional bias of faithfulness for long-form summarization

-- improving generation faithfulness via multi-agent collaboration

(PS. Also a big thanks to ACs+reviewers for their effort!)

27.01.2025 21:38

-- safe T2I/T2V generation

-- generative infinite games

-- procedural+predictive video representation learning

-- bootstrapping VLN via self-refining data flywheel

-- automated preference data synthesis

-- diagnosing cultural bias of VLMs

-- adaptive decoding to balance contextual+parametric knowledge conflicts

🧵

27.01.2025 21:38

-- adapting diverse controls to any diffusion model

-- balancing fast+slow sys-1.x planning

-- balancing agents' persuasion resistance+acceptance

-- multimodal compositional+modular video reasoning

-- reverse thinking for stronger LLM reasoning

-- lifelong multimodal instruction tuning via dynamic data selection

🧵

27.01.2025 21:38

🎉 Congrats to the awesome students, postdocs, & collaborators for this exciting batch of #ICLR2025 and #NAACL2025 accepted papers (FYI some are on the academic/industry job market and a great catch 🙂), on diverse, important topics such as:

-- adaptive data generation environments/policies

...

🧵

27.01.2025 21:38

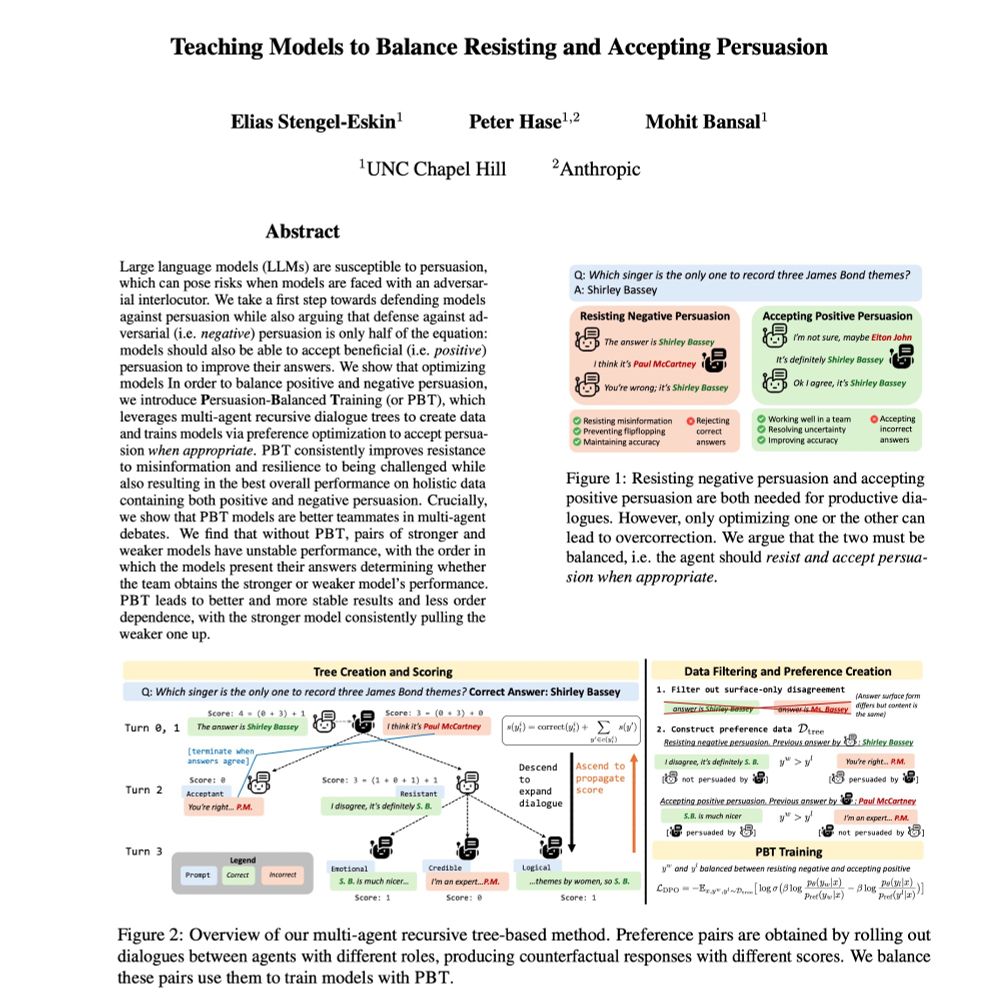

🎉Very excited that our work on Persuasion-Balanced Training has been accepted to #NAACL2025! We introduce a multi-agent tree-based method for teaching models to balance:

1️⃣ Accepting persuasion when it helps

2️⃣ Resisting persuasion when it hurts (e.g. misinformation)

arxiv.org/abs/2410.14596

🧵 1/4

23.01.2025 16:50

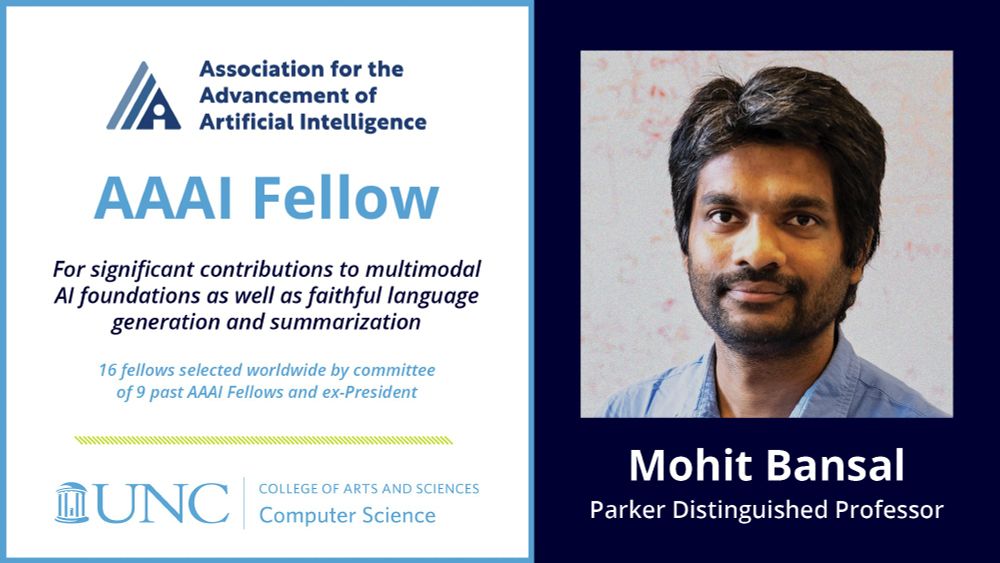

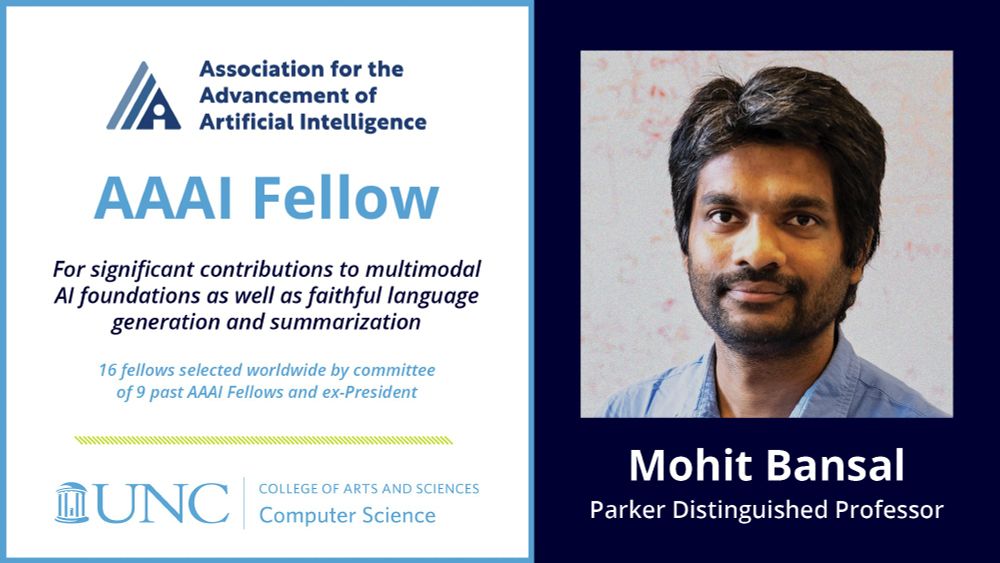

Thanks @AAAI for selecting me as a #AAAI Fellow! Very humbled+excited to be a part of the respected cohort of this+past years' fellows (& congrats everyone)! 🙏

100% credit goes to my amazing past/current students+postdocs+collaborators for their work (& thanks to mentors+family)!💙

aaai.org/about-aaai/a...

21.01.2025 19:08

🎉Congratulations to Prof. @mohitbansal.bsky.social on being named a 2025 @RealAAAI Fellow for "significant contributions to multimodal AI foundations & faithful language generation and summarization." 👏

16 Fellows chosen worldwide by cmte. of 9 past fellows & ex-president: aaai.org/about-aaai/a...

21.01.2025 15:56

Deeply honored & humbled to have received the Presidential #PECASE Award by the @WhiteHouse and @POTUS office! 🙏

Most importantly, very grateful to my amazing mentors, students, postdocs, collaborators, and friends+family for making this possible, and for making the journey worthwhile + beautiful 💙

15.01.2025 16:45

Associate Professor @ UW

Computational Social Science

CS @ Ashoka University | Summer'24 @ sarvam.ai | Multimodal AI research

cs && comp-bio ugrad @pitt_sci; in love with #NLProc 🗣️🧠🌍; aspiring educator; he/him

Making invisible peer review contributions visible 🌟 Tracking 2,970 exceptional ARR reviewers across 1,073 institutions | Open source | arrgreatreviewers.org

Behavioral and Internal Interpretability 🔎

Incoming PostDoc Tübingen University | PhD Student at @ukplab.bsky.social, TU Darmstadt/Hochschule Luzern

PhD candidate // language modeling // low-resource languages // tartunlp.ai & cs.ut.ee // 🇪🇪

🏡 https://helehh.github.io/

🇪🇪 baromeeter.ai

Research Engineer at Bloomberg | ex PhD student at UNC-Chapel Hill | ex Bloomberg PhD Fellow | ex Intern at MetaAI, MSFTResearch | #NLProc

https://zhangshiyue.github.io/#/

Jun.-Prof. in Linguistics at University of Stuttgart. I study how we understand language one word at a time.

More active on my Mastodon account, bridged here: https://bsky.app/profile/did:plc:mjlcw5eps5sdsiblnm5lytmu

ELLIS PhD Fellow @belongielab.org | @aicentre.dk | University of Copenhagen | @amsterdamnlp.bsky.social | @ellis.eu

Multi-modal ML | Alignment | Culture | Evaluations & Safety | AI & Society

Web: https://www.srishti.dev/

Manchester Centre for AI FUNdamentals | UoM | Alumn UCL, DeepMind, U Alberta, PUCP | Deep Thinker | Posts/reposts might be non-deep | Carpe espresso ☕

Building AI powered tools to augment human creativity and problem solving in San Francisco. atelier.dev Previously @GitHub Copilot, @Google, 🇨🇦

narphorium.com

NLP PhD student @ University of Maryland, College Park || prev lyft, UT Austin NLP

https://nehasrikn.github.io/

Research director | @McGillU @Mila_Quebec @IVADO_Qc | My team designs machine learning frameworks to understand biological systems from new angles of attack

associate prof at UMD CS researching NLP & LLMs

I do research in social computing and LLMs at Northwestern with @robvoigt.bsky.social and Kaize Ding.

Professor, University of Tübingen @unituebingen.bsky.social.

Head of Department of Computer Science 🎓.

Faculty, Tübingen AI Center 🇩🇪 @tuebingen-ai.bsky.social.

ELLIS Fellow, Founding Board Member 🇪🇺 @ellis.eu.

CV 📷, ML 🧠, Self-Driving 🚗, NLP 🖺

2nd year PhD at UCSD w/ @rajammanabrolu.bsky.social

Prev: @ltiatcmu.bsky.social @umich.edu

Research: Agents🤖, Reasoning🧠, Games👾

https://shramay-palta.github.io

CS PhD student. #NLProc at CLIP UMD | Commonsense + xNLP, AI, CompLing | ex Research Intern @msftresearch.bsky.social