🚀 Introducing PantheonOS: A Fully Open-Source Agent OS for Science

PantheonOS began as a research project in Qiu Lab @ Stanford and has since evolved into a vision to redefine data science in the era of AI—starting with computational biology, especially single-cell and spatial genomics.

21.08.2025 21:04

LOGML 2025

London Geometry and Machine Learning Summer School, July 7-11 2025

🌟 Applications open: LOGML 2025 🌟

👥Mentor-led projects, expert talks, tutorials, socials, and a networking night

✍️Application form: logml.ai

🔬Projects: www.logml.ai/projects.html

📅Apply by 6th April 2025

✉️Questions? logml.committee@gmail.com

#MachineLearning #SummerSchool #LOGML #Geometry

11.03.2025 15:24

The organisation and scientific advisory committees: @simofoti.bsky.social, @valegiunca.bsky.social, @pragya-singh.bsky.social, @daniel-platt.bsky.social, Vincenzo Marco De Luca, Massimiliano Esposito, Arne Wolf, Zhengang Zhong, Rahul Singh

11.03.2025 15:24

Apply by the 16th February!

If you have any specific questions, contact: logml.committee@gmail.com

02.02.2025 12:58

We are currently recruiting mentors to lead up to 6 students on a week-long project at the intersection of geometry and ML. Mentors can be PhD students (not first years), postdocs, or lecturers! Many projects have resulted in publications at top conferences and journals. Mentors' expenses will be covered.

02.02.2025 12:58

LOGML (London Geometry and Machine Learning) summer school is back and we are looking for mentors!

@logml.bsky.social aims to bring together mathematicians and computer scientists to collaborate on problems at the intersection of geometry and ML.

More information is available at www.logml.ai.

02.02.2025 12:58

@simofoti.bsky.social @pragya-singh.bsky.social @valegiunca.bsky.social @daniel-platt.bsky.social

@mmbronstein.bsky.social @marinkazitnik.bsky.social

22.01.2025 13:00

⭐️Mentor applications open⭐️

We're excited to announce that LOGML summer school will return in London: July 7-11 2025. We are seeking mentors to lead group projects at the intersection of geometry and machine learning. Find out more and apply:

logml.ai

22.01.2025 13:00

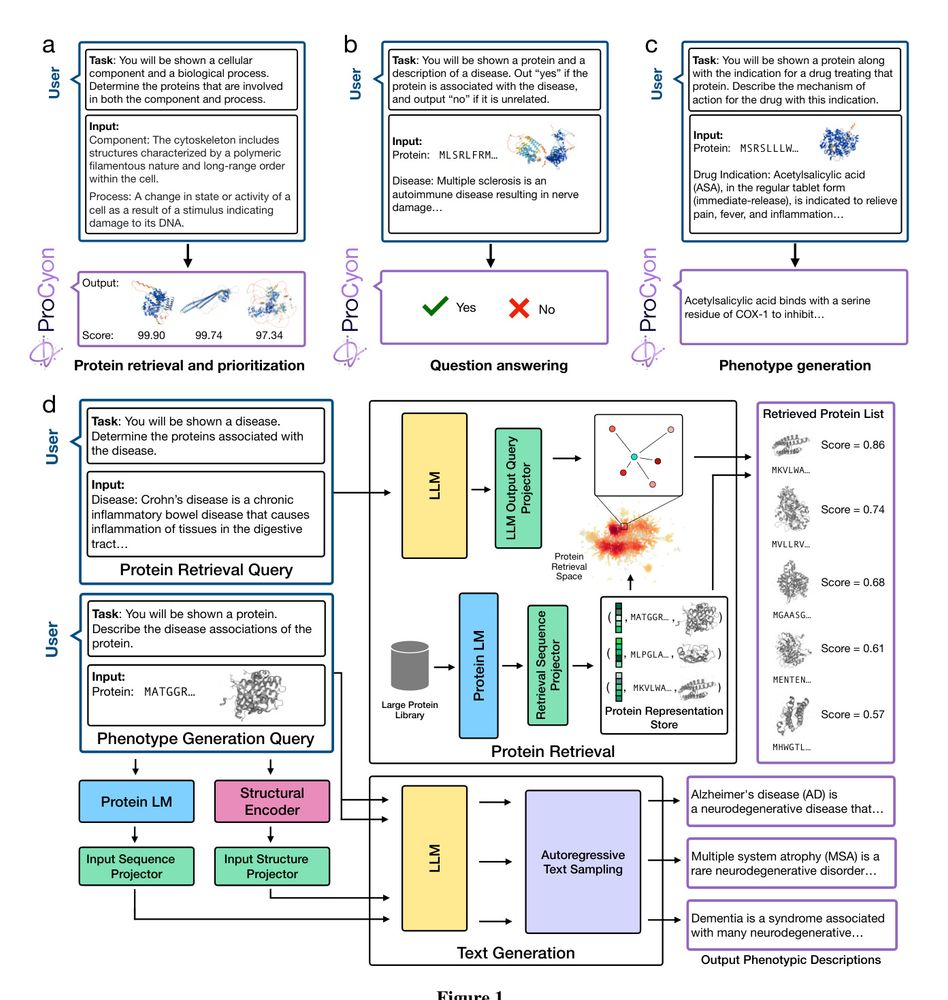

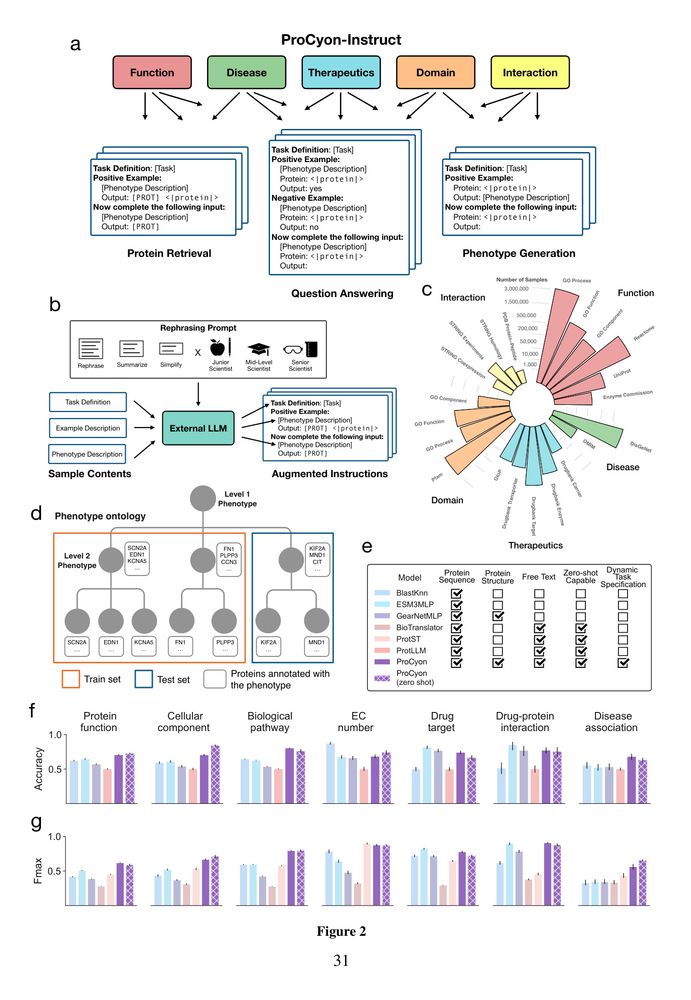

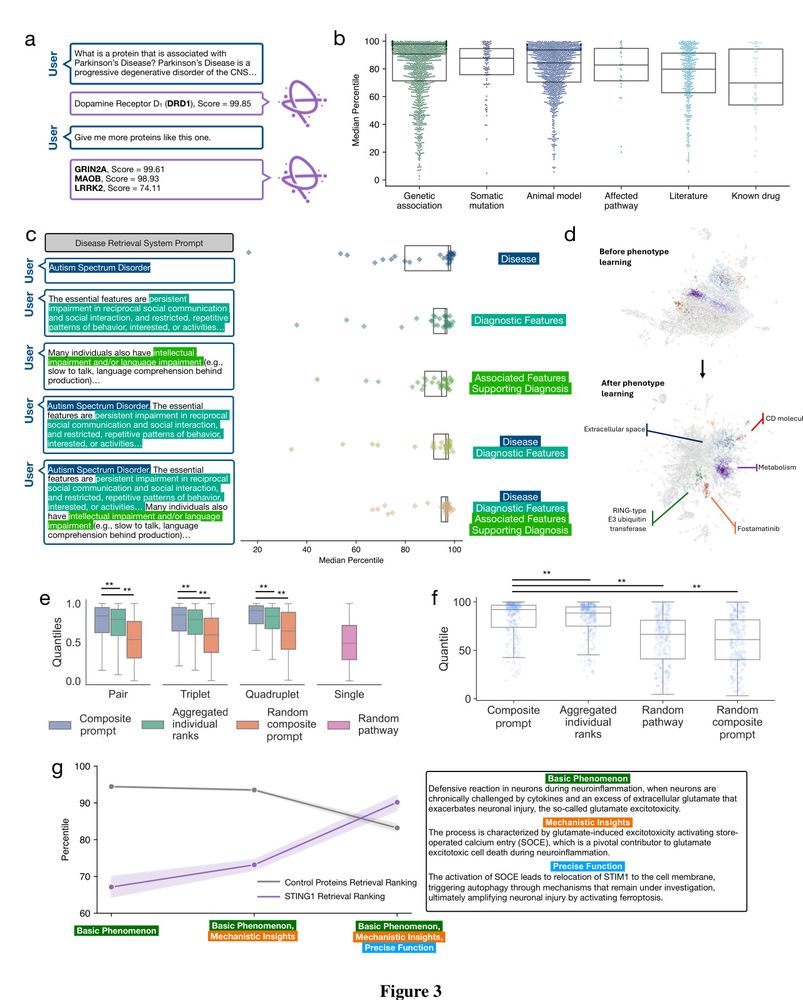

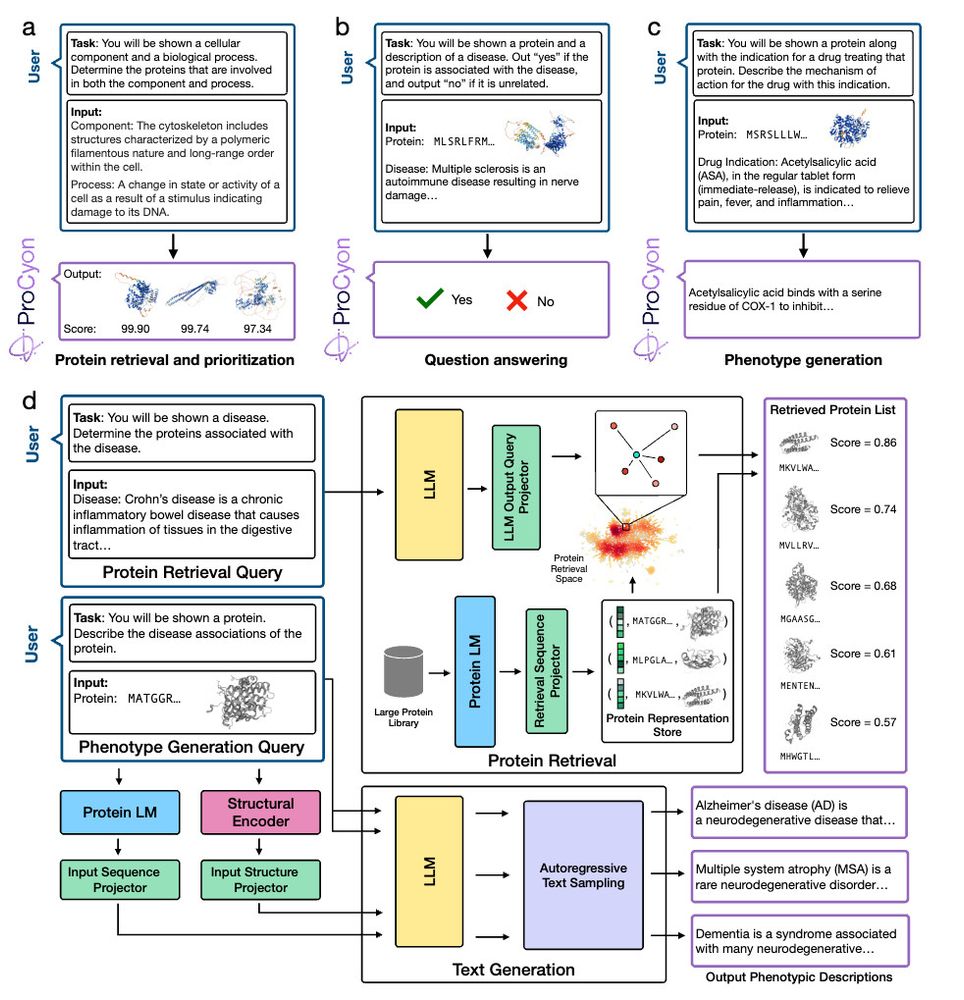

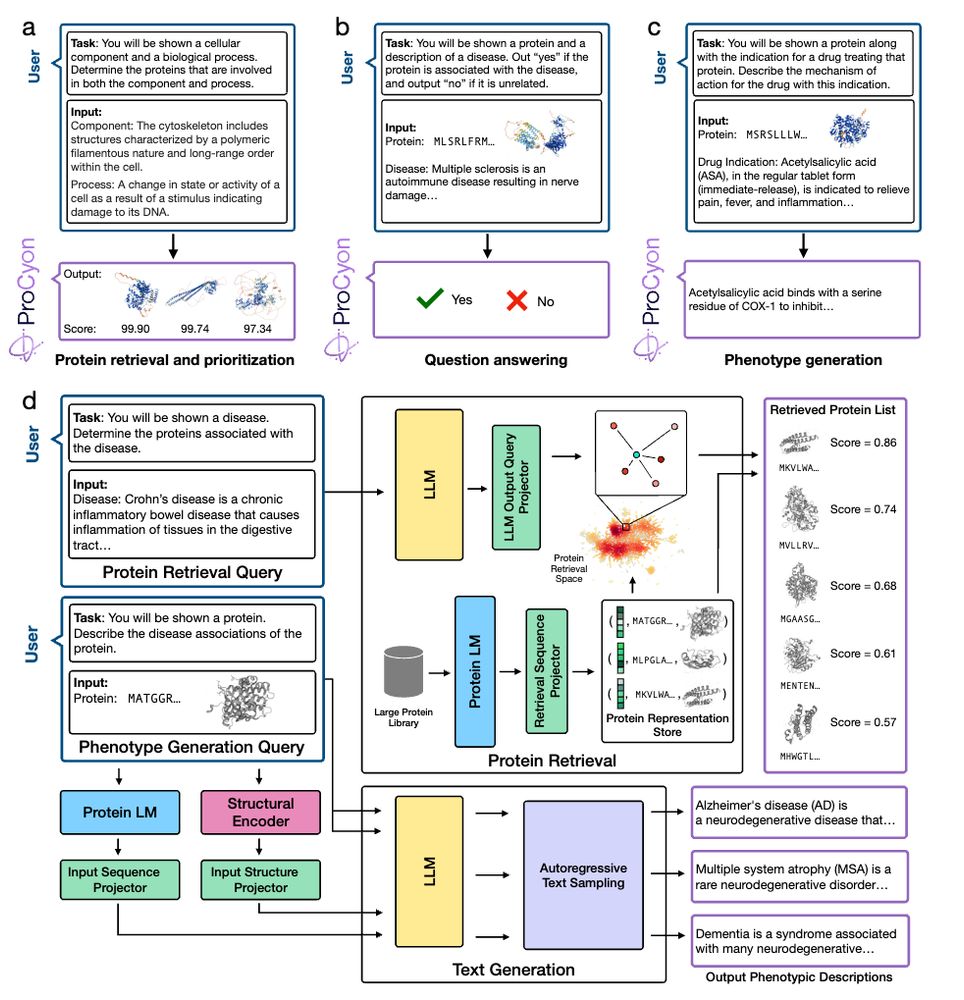

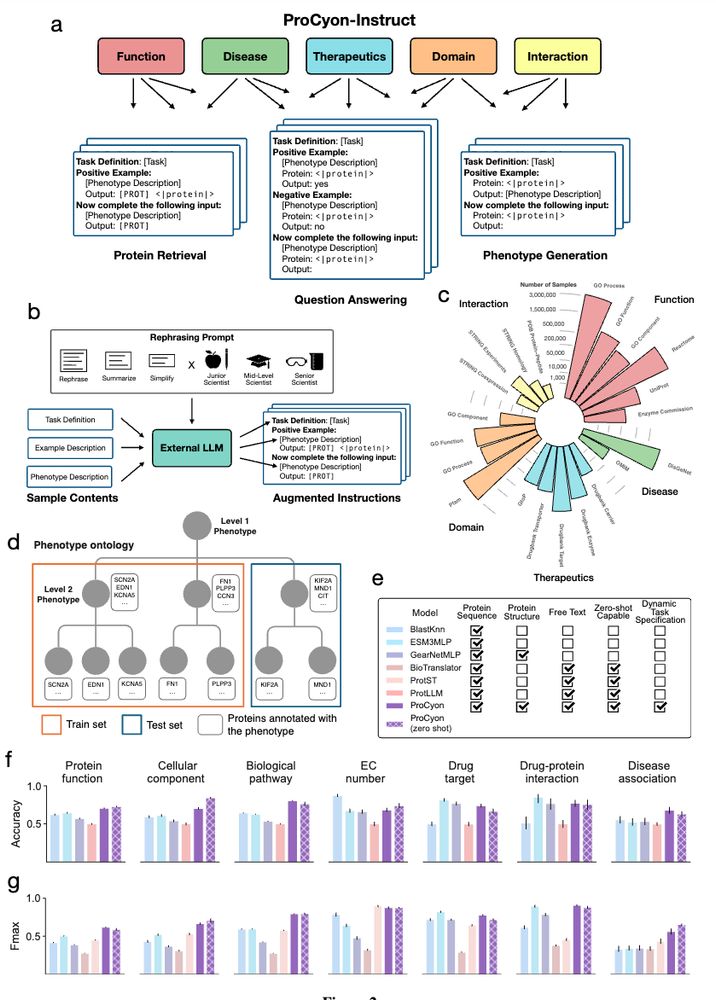

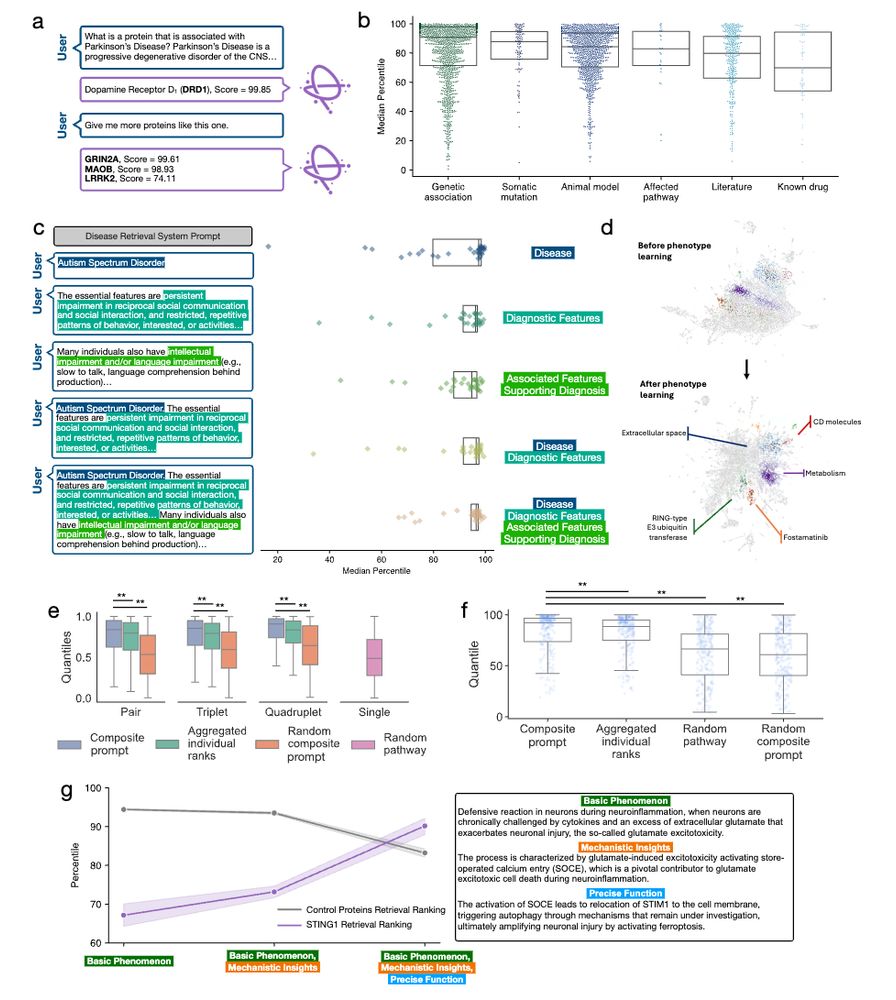

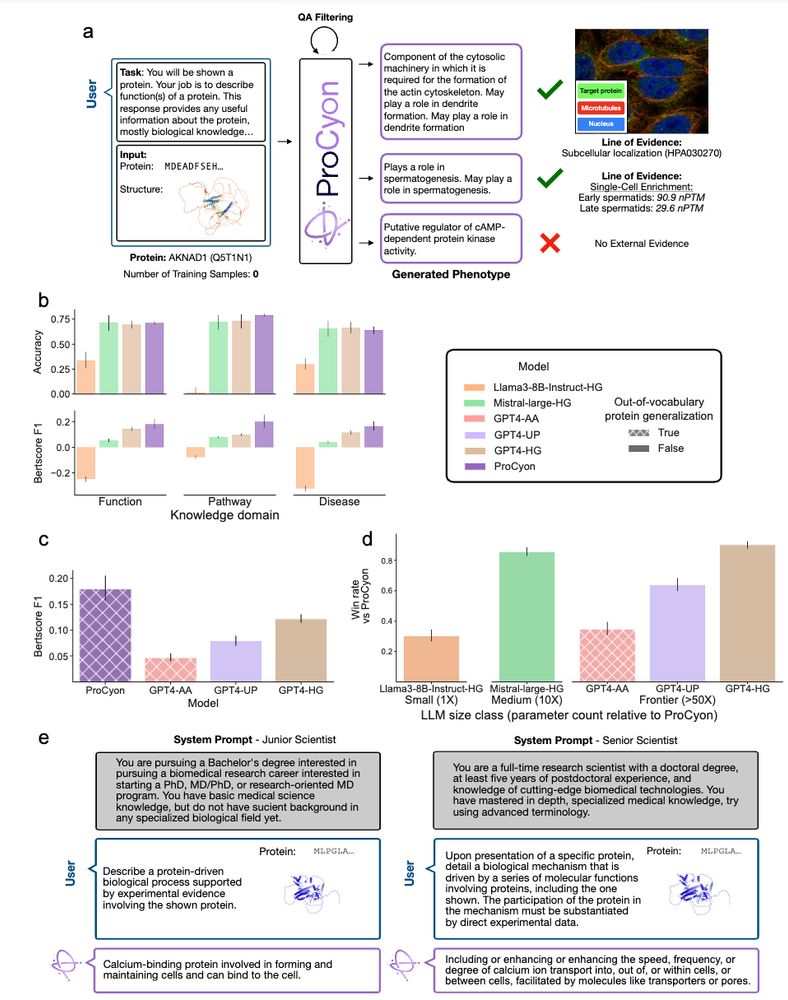

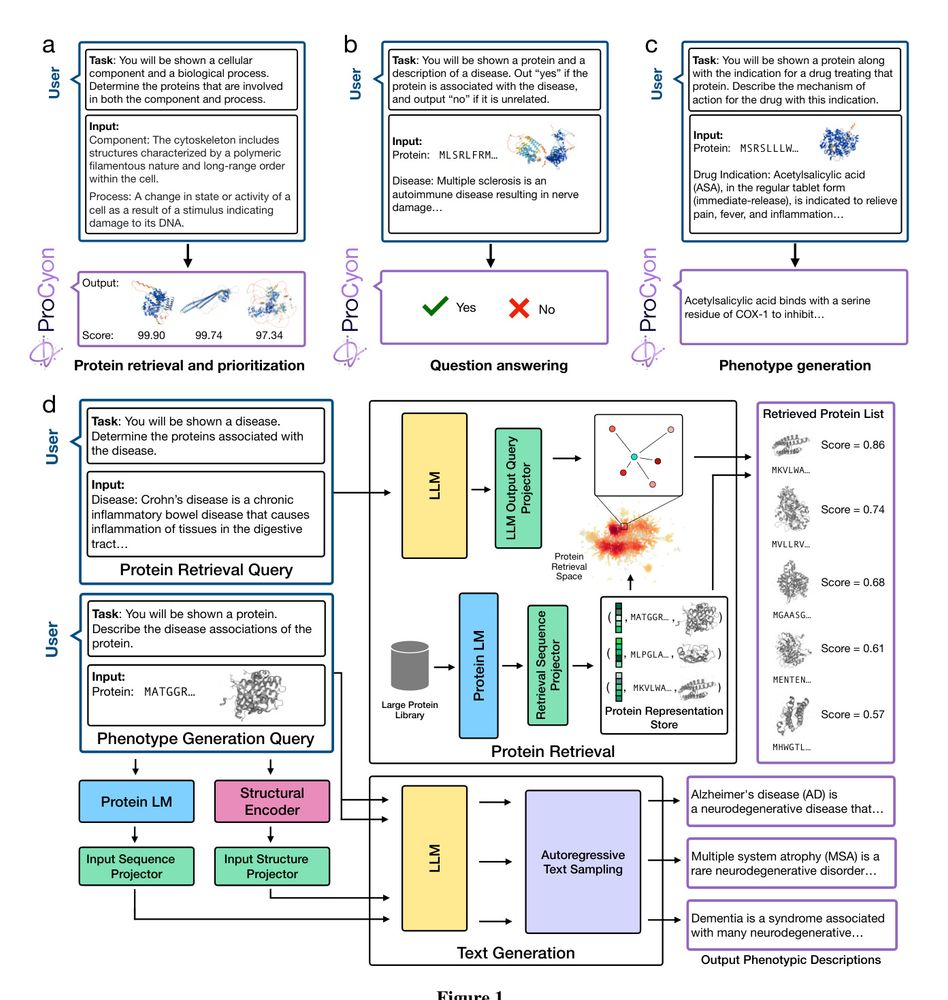

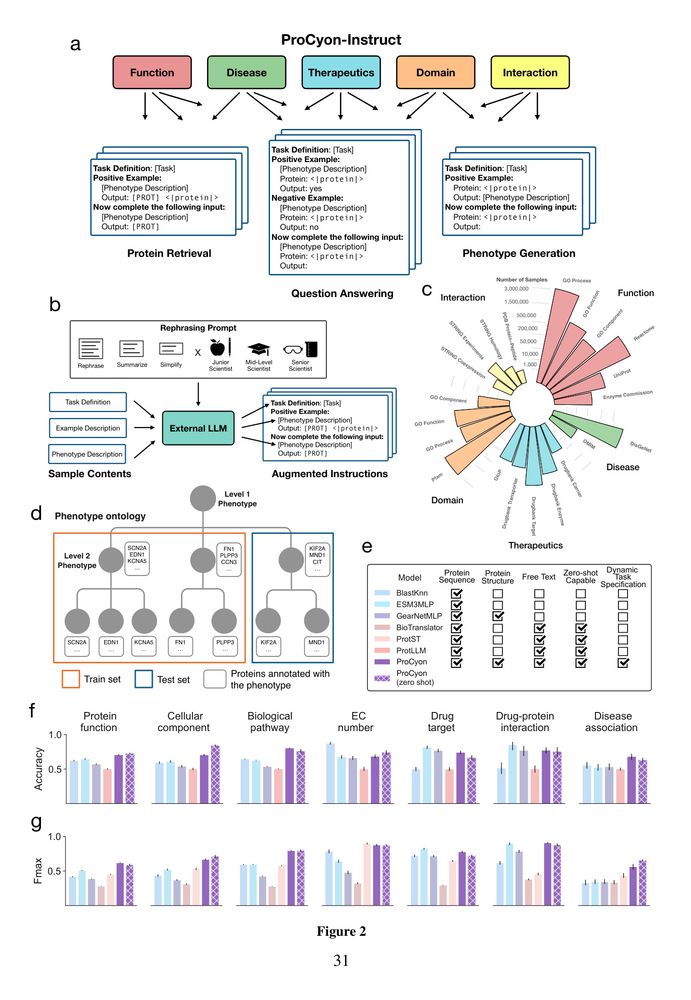

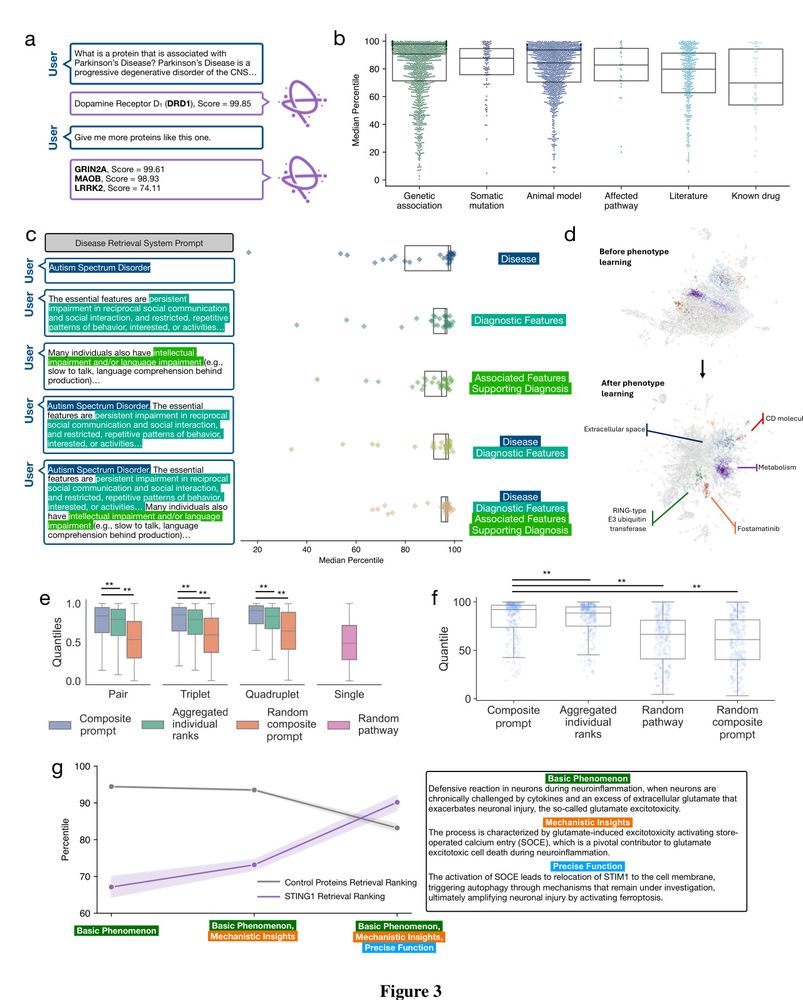

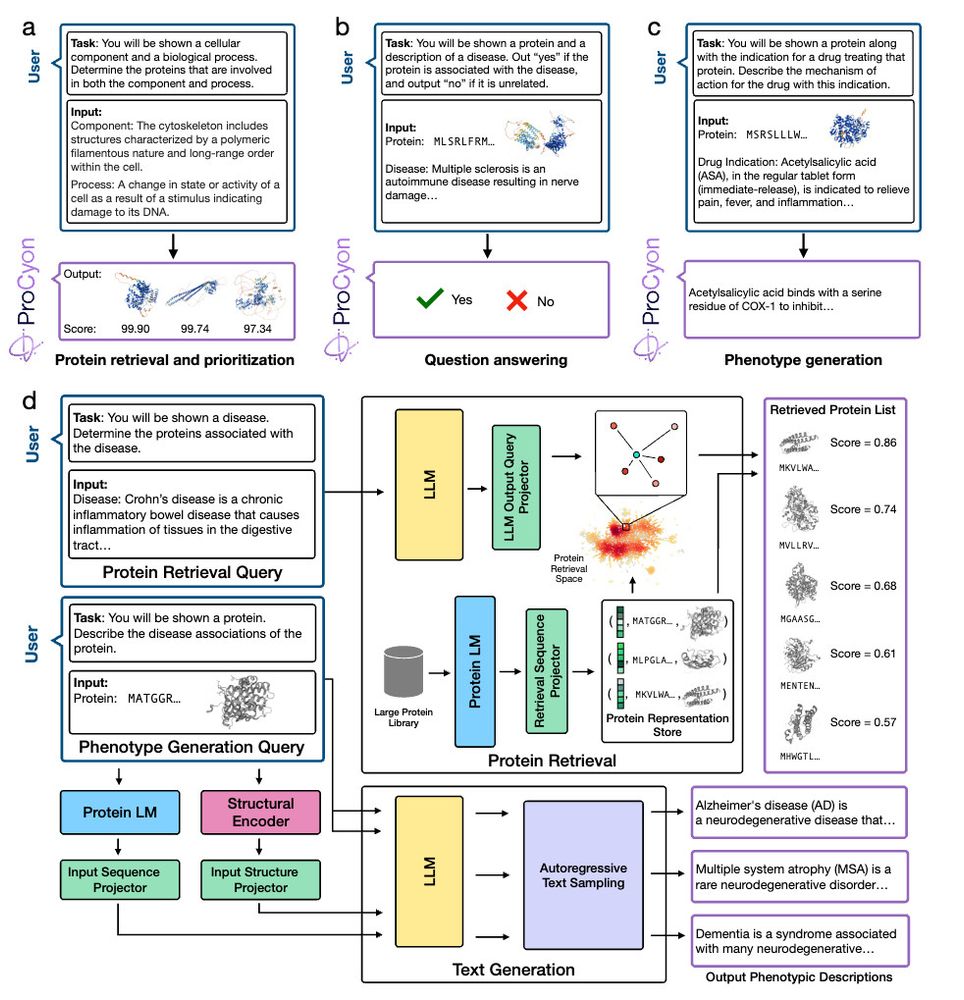

ProCyon: A multimodal foundation model for protein phenotypes

16.12.2024 05:57

ProCyon: A multimodal foundation model for protein phenotypes https://www.biorxiv.org/content/10.1101/2024.12.10.627665v1

16.12.2024 05:50

@imperialcollegeldn.bsky.social

@harvard.edu

@kingscollegelondon.bsky.social

@imperialbrains.bsky.social

24.12.2024 22:03

I am happy to finally share ProCyon, a multimodal, multiscale model that integrates protein sequences, structures, and natural language to predict and generate protein phenotypes.

Paper: www.biorxiv.org/content/10.1...

Blog post: kempnerinstitute.harvard.edu/research/dee...

24.12.2024 22:03

#Neuroscience #Imperial #Cognition #CognitiveNeuroscience

13.12.2024 12:13

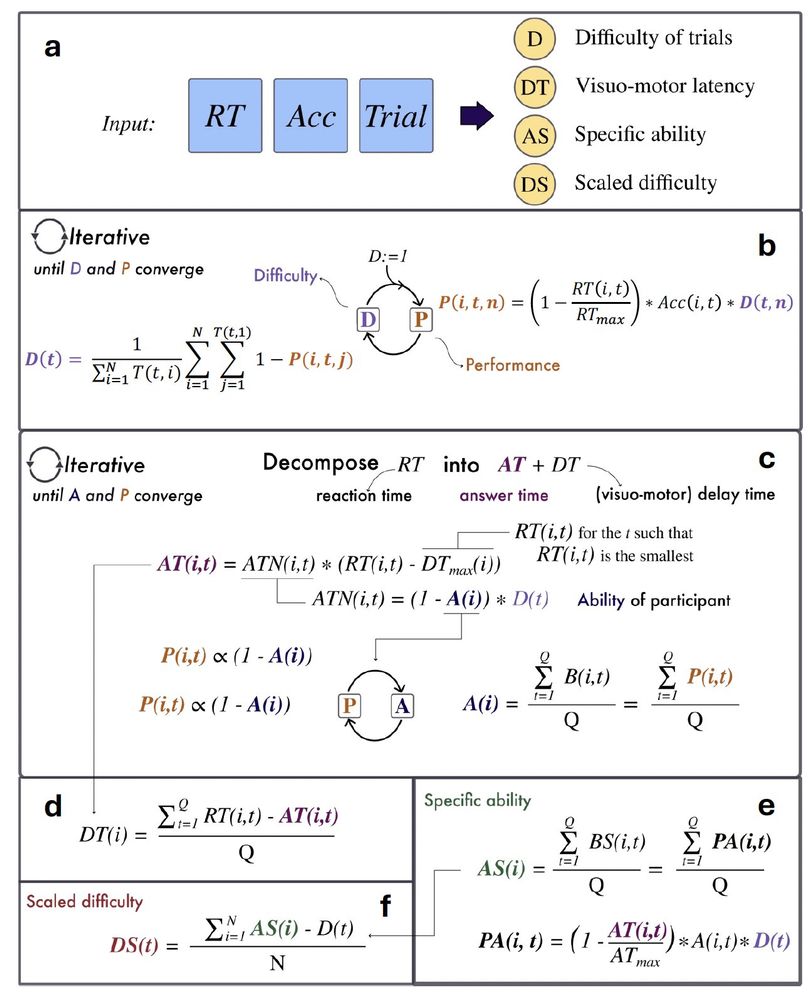

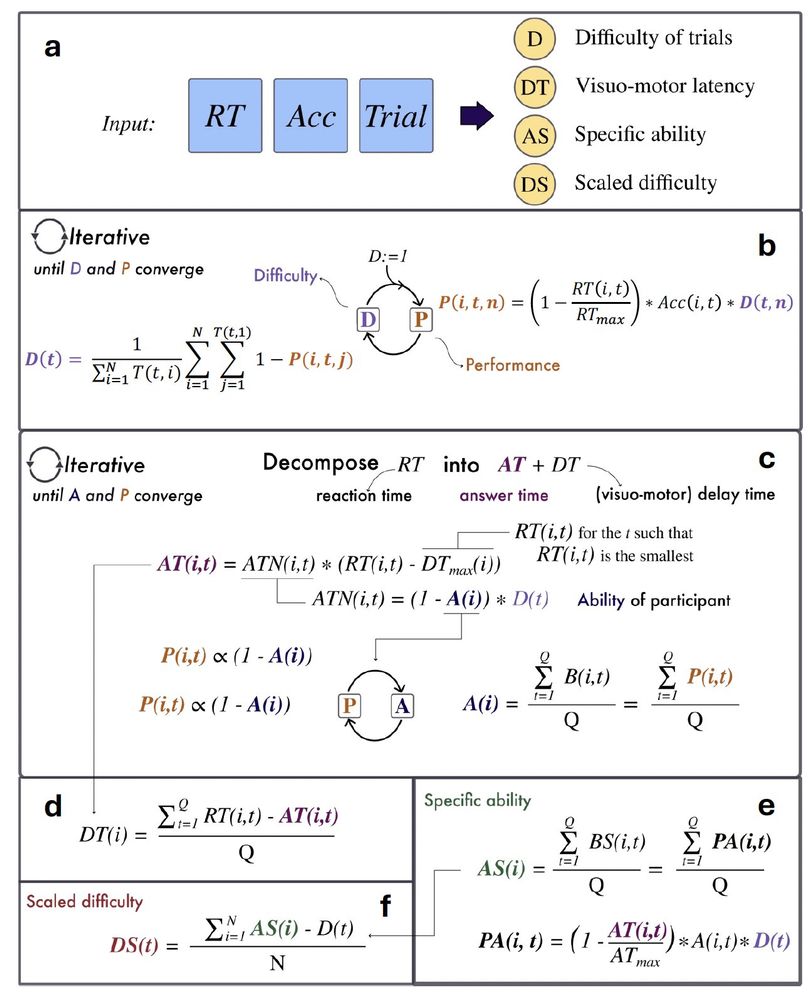

We tested it on 12 online tasks collected with Cognitron.

Compared to standard measures of RT and accuracy, IDoCT's measures of ability:

- have more interpretable latent cognitive factors

- are less sensitive to device

- have higher sensitivity and specificity

13.12.2024 12:13

We tested the model on simulated data, and IDoCT reliably recovered the ground-truth measures of trial difficulty, ability, and visuomotor delay.

13.12.2024 12:13

IDoCT comes with a nice set of features:

- Robust: Works with as few as 100 participants

- Efficient: Scales up inexpensively to > 100K participants

- Flexible: Can work with potentially any online task collecting trial-by-trial responses

13.12.2024 12:13

IDoCT derives specific estimates of ability and visuomotor delay from trial-by-trial measures of reaction time (RT) and accuracy, while also providing data-driven trial-difficulty scales that identify the most challenging aspects (dimensions) of each task.

13.12.2024 12:13

🚨 It took two years but it finally happened!

Excited to share IDoCT, a novel computational model that can disentangle the motor and cognitive components of participants' performance in online cognitive tasks, now published in Nature Digital Medicine.

13.12.2024 12:13

Contextual learning for precision medicine | Biomedical Informatics PhD @Harvard | Math+CS BS @Stanford | Founder @RerootSTEM

LOGML (London Geometry and Machine Learning) aims to bring together mathematicians and computer scientists to collaborate on a variety of problems at the intersection of geometry and machine learning.

Postdoc @ImperialCollegeLondon. Previously PhD @UCL with internships at @Disney & @Adobe Research.

Geometric deep learning, 3D computer vision, and computer graphics.

Personal website: https://simofoti.com

MSc AI @Imperial | Ex-Quant @GoldmanSachs | Physics undergrad @IITRoorkee #GeometricML #AI4Science

Assistant Professor at Harvard | Faculty @Harvard @KempnerInst AI | Faculty @broadinstitute @harvard_data | Cofounder @ProjectTDC | @AI_for_Science

Genomics, Machine Learning, Statistics, Big Data and Football (Soccer, GGMU)

Chapman-Schmidt Fellow at I-X, Imperial College London

The Association for Computational Linguistics (ACL) is a scientific and professional organization for people working on Natural Language Processing/Computational Linguistics.

Hash tags: #NLProc #ACL2025NLP

San Diego, Dec 2-7, 2025 and Mexico City, Nov 30-Dec 5, 2025. Comments to this account are not monitored. Please send feedback to townhall@neurips.cc.

International Conference on Learning Representations https://iclr.cc/

The official account for Imperial College London

A global top-ten university, where scientific imagination leads to world-changing impact. Science | Engineering | Medicine | Business

#OurImperial 💙

https://linktr.ee/imperialcoll

Imperial College London's interdisciplinary AI initiative. We bring together specialists in Artificial Intelligence, Machine Learning, Statistics, Robotics, and Data.

🔗 https://ix.imperial.ac.uk

Assistant Professor at Imperial College London | EEE Department and I-X.

Neuro-symbolic AI, Safe AI, Generative Models

Previously: Post-doc at TU Wien, DPhil at the University of Oxford.

official Bluesky account (check username👆)

Bugs, feature requests, feedback: support@bsky.app