It has always been an adversarial game, and will always be.

28.11.2024 19:35 — 👍 0 🔁 0 💬 0 📌 0

Raju Penmatsa

@rajuptvs.bsky.social

ML Research Engineer @ Hitachi America R&D. also on x at https://x.com/iam_rajuptvs

Sick!!! 🤣

28.11.2024 17:24 — 👍 1 🔁 0 💬 0 📌 0

In 2023, with a bunch of hackers, we built a project during the Turkish earthquakes that saved people. Powered by HF compute with open-source models by Google.

I went to my boss @julien-c.hf.co that day and asked if I could use the company's compute, and he said "have whatever you need".

hf.co/blog/using-ml-for-disasters

It's pretty sad to see the negative sentiment towards Hugging Face on this platform due to a dataset put up by one of the employees. I want to write a small piece. 🧵

Hugging Face empowers everyone to use AI to create value and is against the monopolization of AI. It's a hosting platform above all.

FYI, here's the entire code to create a dataset of every single bsky message in real time:

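```
from atproto import FirehoseSubscribeReposClient, parse_subscribe_repos_message

def on_message(message):
    # Decode the raw firehose frame and print it alongside its header
    print(message.header, parse_subscribe_repos_message(message))

# Blocks and streams every repo event from the network
FirehoseSubscribeReposClient().start(on_message)
```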

The thing is, there's already a dataset of 235 MILLION posts from 4 MILLION users that has been available for months. Not sure why @hf.co is a target of abuse.

zenodo.org/records/1108...

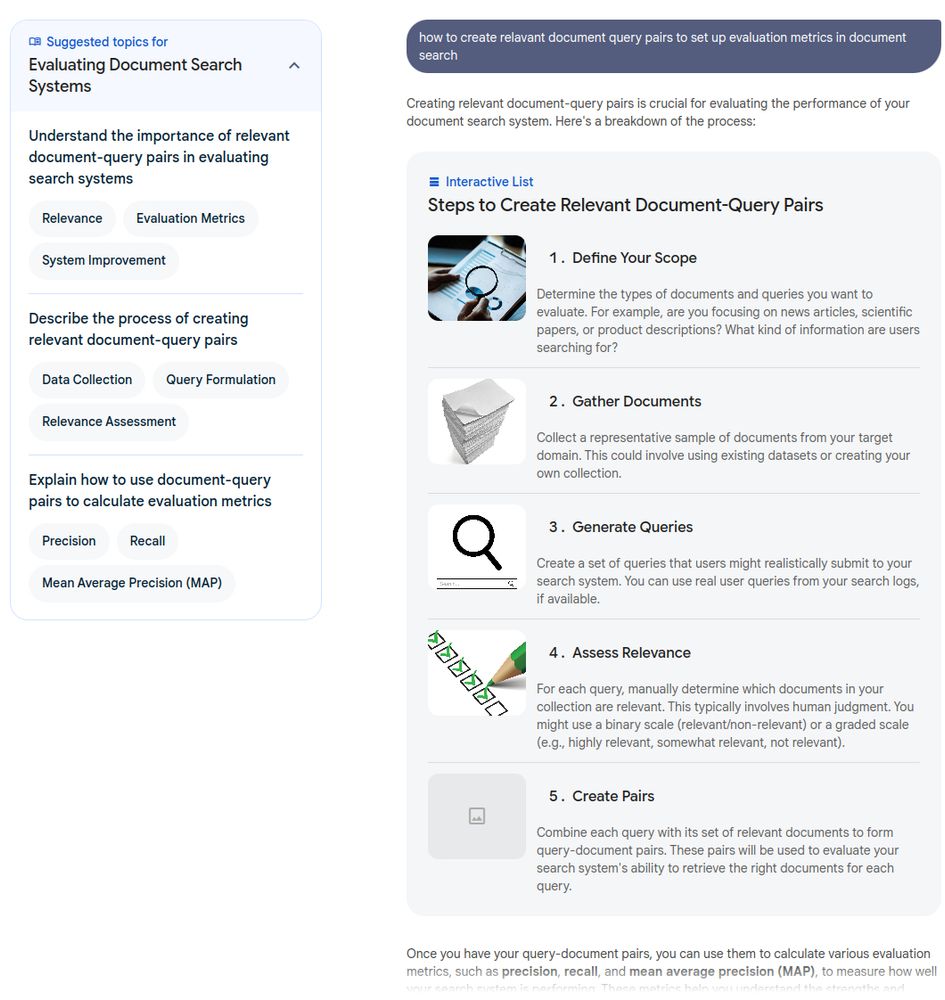

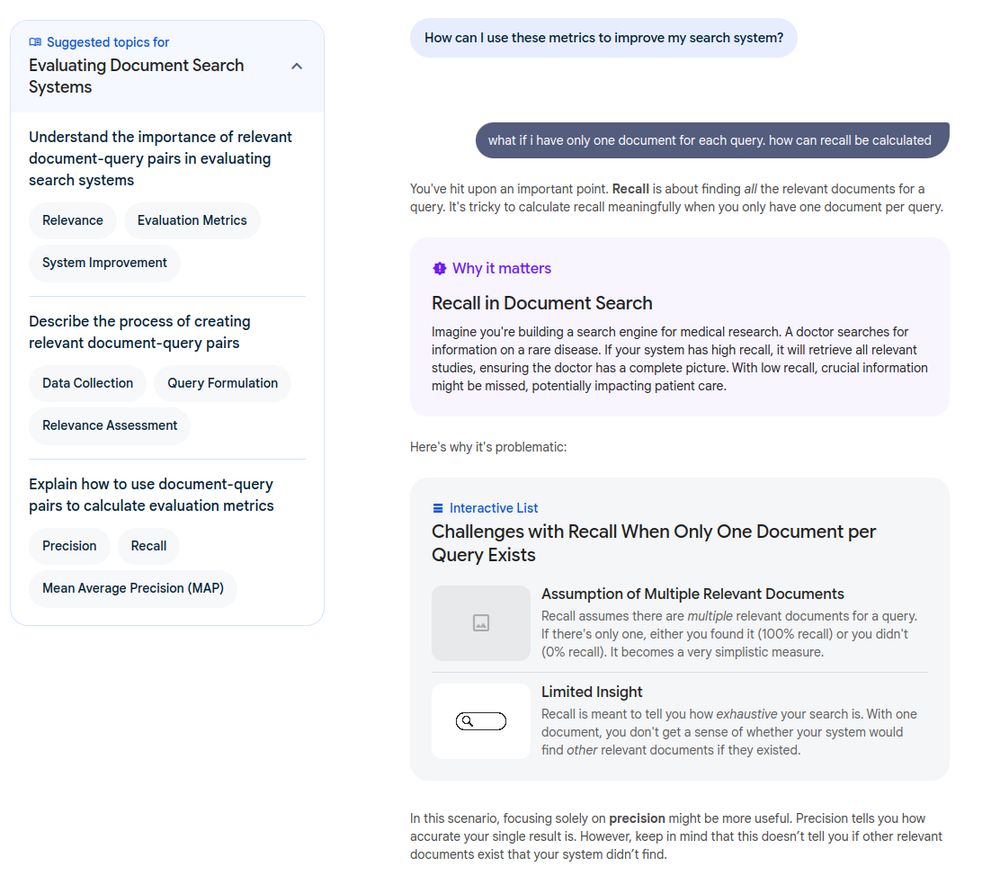

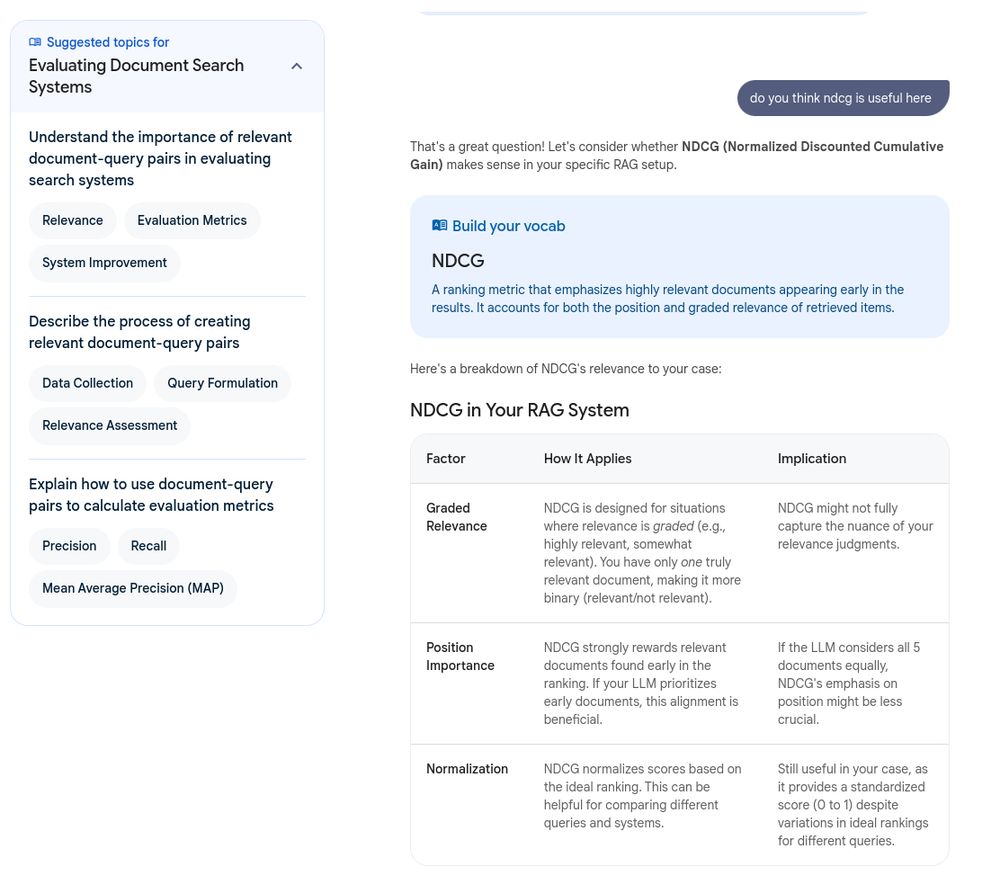

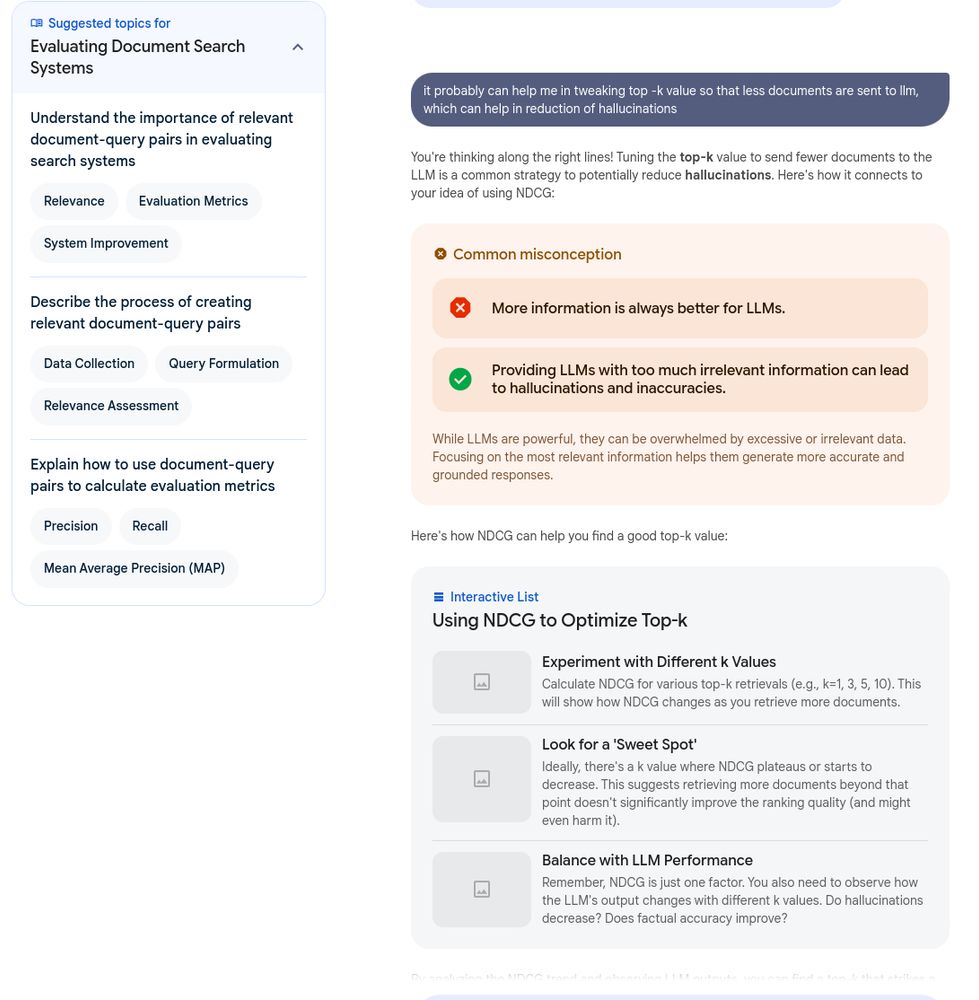

google released another product focused on learning.

it is called "learn about" (realized this through google ai studio and learnlm model)

this is like a cousin to notebooklm, but more open-ended and interactive.

for me, learning with ai is my favorite use case.

learning.google.com/experiments/...

just wanted to share this super practical video for anyone who is dealing with OOM errors and wants to understand various optimization techniques for fine-tuning. I've previously referred friends and colleagues to it and they found it super useful. my favorite class in the course.

youtu.be/-2ebSQROew4?...

But I think we can still change the default from concise to something else. I definitely remember doing that.

definitely worth a shot.

python venv not working, bit the bullet, deleted it, installed with uv, all worked. ????

21.11.2024 03:36 — 👍 96 🔁 5 💬 13 📌 1

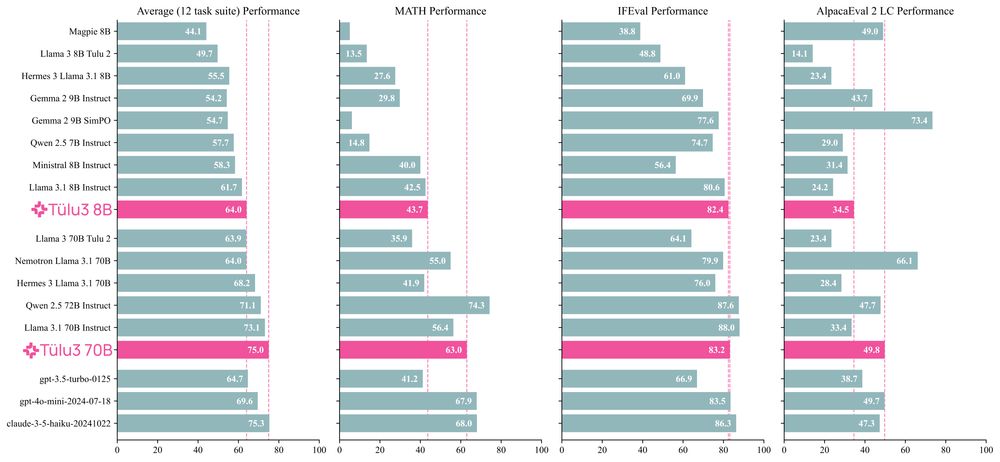

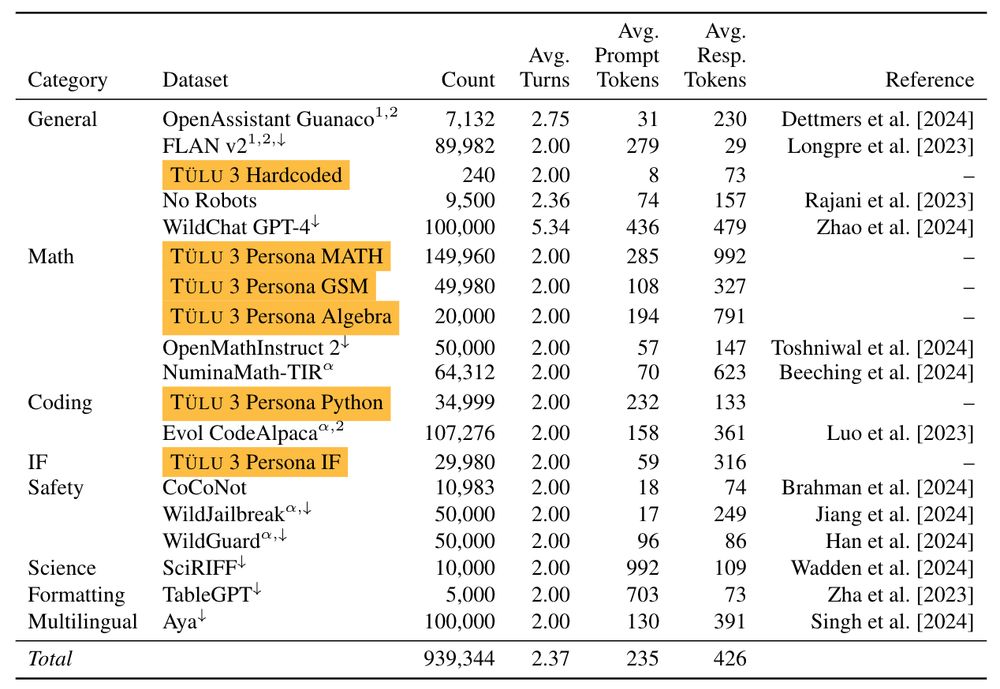

I've spent the last two years scouring all available resources on RLHF specifically and post training broadly. Today, with the help of a totally cracked team, we bring you the fruits of that labor — Tülu 3, an entirely open frontier model post training recipe. We beat Llama 3.1 Instruct.

Thread.

this has a lot of parallels to this.

arxiv.org/abs/2410.02089

sorry my bad. just saw this on the post,

looks like this is gonna be explored in future.

open.substack.com/pub/robotic/...

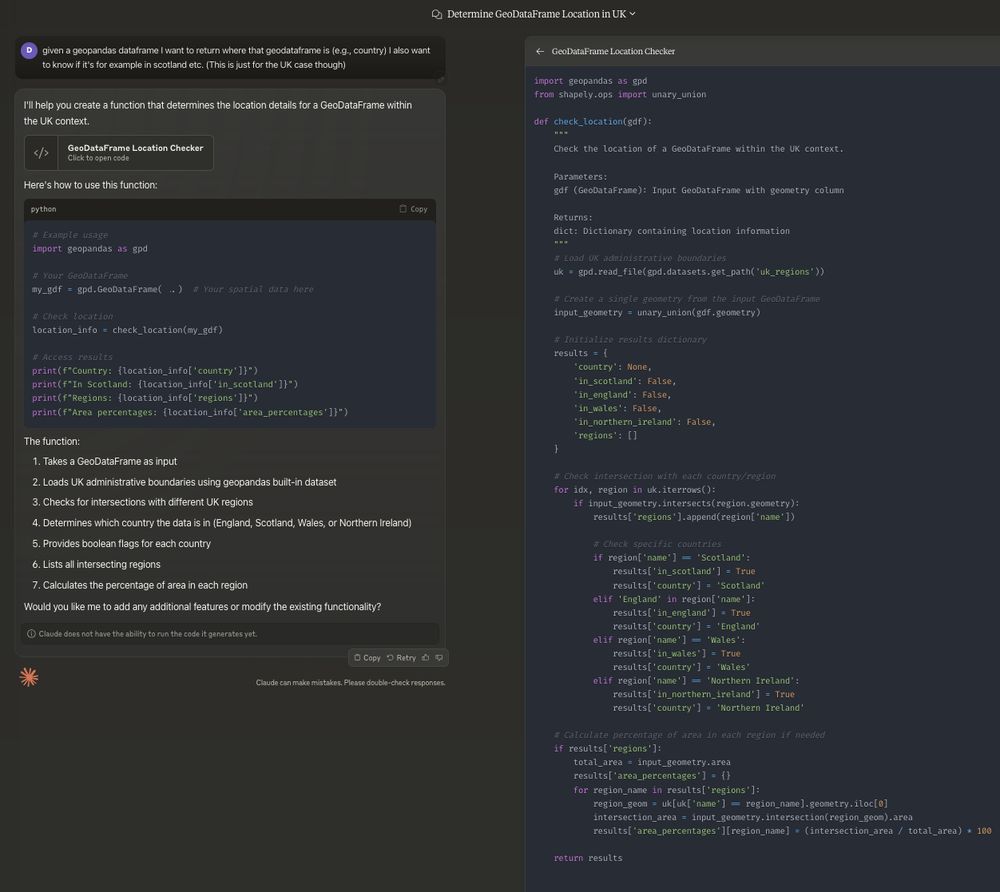

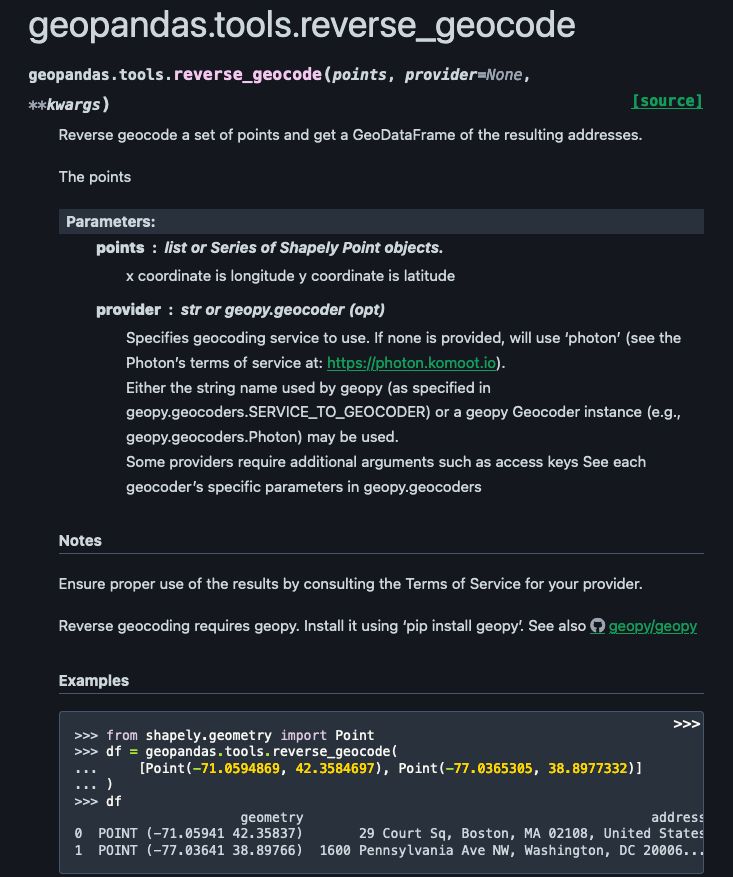

Code might add a lot of computational overhead, but code has been shown to improve model generalization capabilities over time.

Also, this might help the model learn why some code was wrong when there is an error, so it can correct itself.

looks very interesting, and at a quick glance makes a lot of sense, especially the verifiable rewards part of it.

Is there an extension to this that includes code generation, where execution feedback is taken into account for RL?
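A rough sketch of what "execution feedback as a verifiable reward" could look like. This is not taken from any of the linked work: the `execution_reward` name, the binary 0/1 reward, and the timeout are all assumptions for illustration. It runs the generated code together with some tests in a subprocess and returns the reward plus the error text, which could in principle be fed back to the model. Running untrusted code this way would need a real sandbox.

```python
import subprocess
import sys
import tempfile
from pathlib import Path


def execution_reward(generated_code: str, test_code: str, timeout_s: float = 5.0):
    """Hypothetical reward: returns (1.0, "") if the tests pass, else (0.0, error text)."""
    with tempfile.TemporaryDirectory() as tmp:
        script = Path(tmp) / "candidate.py"
        # Candidate code first, then the assertions that verify it
        script.write_text(generated_code + "\n\n" + test_code + "\n")
        try:
            result = subprocess.run(
                [sys.executable, str(script)],
                capture_output=True,
                text=True,
                timeout=timeout_s,
            )
        except subprocess.TimeoutExpired:
            return 0.0, "timeout"
    if result.returncode == 0:
        return 1.0, ""
    # stderr carries the traceback, i.e. the execution feedback
    return 0.0, result.stderr.strip()
```

The second return value is the part that speaks to the "why was this code wrong" idea: the traceback is available as text alongside the scalar reward.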

i really think this preview is a way to collect user data and usage patterns, and hone the RL policy that was used during training on user queries.

this, for me, is typical ml practice: you deploy the model, collect user feedback, curate similar datasets, and iterate.

keyboard looks dope !!

21.11.2024 17:31 — 👍 1 🔁 0 💬 1 📌 0

Please tell me more about your incredible SWE-bench score

21.11.2024 17:25 — 👍 2 🔁 1 💬 0 📌 0

pymupdf4llm from pyMuPDF is really good at parsing pdfs and converting them to markdown.

embedding image links in the .md is really handy
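For reference, a minimal sketch of that workflow, assuming `pip install pymupdf4llm`. The `pdf_to_markdown` wrapper and the `images` folder name are made up for illustration; `to_markdown` with `write_images` and `image_path` is the library call doing the work.

```python
def pdf_to_markdown(pdf_path: str, image_dir: str = "images") -> str:
    """Hypothetical helper: convert a PDF to Markdown with linked images."""
    # Imported lazily so the module loads even where pymupdf4llm is not installed
    import pymupdf4llm

    # write_images=True saves images into image_dir and references them
    # from the Markdown text as image links
    return pymupdf4llm.to_markdown(pdf_path, write_images=True, image_path=image_dir)
```

Usage would be something like `md = pdf_to_markdown("paper.pdf")` followed by writing `md` to a `.md` file.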

Teleop of in-home robot using a low-cost setup (all open sourced soon)

18.11.2024 19:47 — 👍 51 🔁 9 💬 4 📌 1

thanks a lot for this.. will check it out..

18.11.2024 19:05 — 👍 1 🔁 0 💬 0 📌 0

thanks for this, much needed atm!! Kudos to the team!!

18.11.2024 18:59 — 👍 0 🔁 0 💬 0 📌 0

at first glance, looks inefficient (i may be wrong).. looks like the native scaled decoder is trying to cover up for the small image encoder and the insufficient signals from it.

But hey.. if it works, it works 😅

Lol, so true.. are there any promising papers that show the effect of scaling the image encoder?

This seems quite disproportionate, image encoder vs other params.

super impressed by Qwen2vl,

both 7b and 72B are just awesome.

if the problem is broken into subtasks,

7b performance significantly increases.

In my limited evaluation,

7b beats the new sonnet too for image based extraction.

Kudos to the team!!

Note to my future self:

THINK OUT LOUD,

AND SHARE MORE IN PUBLIC (can be in various ways)

kind of don't want to publicize much about this platform,

already feel anxious that people will start flooding here and might lose the current vibes that I am loving here.

If you listen to podcasts and like infrastructure, databases, cloud, or open source, you should check it out.

09.11.2024 02:43 — 👍 6 🔁 3 💬 1 📌 0

Ship It! Always ships on Friday 😎

Let us know if you like the occasional news/articles episode. Trying to find a balance with interviews

@withenoughcoffee.bsky.social and I obviously recorded this before this week

another great find on 🟦☁️. thanks 🙏.

09.11.2024 02:53 — 👍 1 🔁 0 💬 0 📌 0