AI and Fraternity, Abeba Birhane, AI Accountability Lab

I envision a future where human dignity, justice, peace, kindness, care, respect, accountability, and rights and freedoms serve as the north stars that guide AI development and use. Realising these ideals can’t happen without intentional, tireless work, dialogues, and confrontations of ugly realities, even if they are uncomfortable to deal with. This starts with distinguishing hype from reality. Pervasive narratives portray AI as a magical, fully autonomous entity approaching a God-like omnipotence and omniscience. In reality, audits reveal that AI systems consistently fail to deliver on grandiose promises and suffer from all kinds of shortcomings, issues often swept under the rug. AI in general, and GenAI in particular, encodes and exacerbates historical stereotypes, entrenches harmful societal norms, and amplifies injustice. A robust body of evidence demonstrates that, from hiring and welfare allocation to medical care allocation and anything in between, deployment of AI is widening inequity, disproportionately impacting people at the margins of society and concentrating power and influence in the hands of a few. Major actors, including Google, Microsoft, Amazon, Meta, and OpenAI, have willingly aligned with authoritarian regimes and proactively abandoned their pledges to fact-check, prevent misinformation, respect diversity and equity, and refrain from using AI for weapons development, while retaliating against critique. The aforementioned vision can’t and won’t happen without confrontation of these uncomfortable facts. This is precisely why we need active resistance and refusal of unreliable and harmful AI systems; clearly laid out regulation and enforcement; and shepherding of the AI industry towards transparency and accountability of responsible bodies. "Machine agency" must be in service of human agency and empowerment, a coexistence that isn't a continuation of modern tech corporations’ inequality-widening,

so I am one of the 12 people (including the “god-fathers of AI”) who will be at the Vatican this September for a full two-day working group on the Future of AI

here is my Vatican-approved short provocation on 'AI and Fraternity' for the working group

04.08.2025 11:31 — 👍 532 🔁 157 💬 31 📌 16

(reply to review request) I won't review articles that aren't open access, as I'd be performing free labour for the publisher's profit. Providing access to journals which I already access via university is not remuneration, and besides, the community still suffers if articles are kept paywalled.

04.08.2025 19:16 — 👍 1 🔁 0 💬 0 📌 0

AI for Good [Appearance?]

Reflections on the last minute censorship of my keynote at the AI for Good Summit 2025

A short blogpost detailing my experience of censorship at the AI for Good Summit with links to both original and censored versions of slides and links to my talk

aial.ie/blog/2025-ai...

11.07.2025 14:01 — 👍 131 🔁 79 💬 3 📌 11

Open Joint Letter against the Delaying and Reopening of the AI Act

CDT Europe, alongside the European Consumer Organisation (BEUC), European Digital Rights (EDRi) and the European Centre for Not-for-Profit Law (ECNL), co-drafted a letter signed by 52 civil society or...

⏰ Today, CDT Europe, together with 51 experts, academics & civil society organisations, sent an open letter to the European Commission to express our concerns regarding the forthcoming Digital Simplification package, which could include revisiting the AI Act.

👇🏻 Read the full letter on our website:

09.07.2025 09:10 — 👍 21 🔁 12 💬 2 📌 3

OSF

Indeed! I wrote an article recently called "Simple now, Complex later: The Questionable Efficacy of Diluting GDPR Article 30(5)" which shows that this exemption is toothless and actually risks increasing issues! doi.org/10.31235/osf...

10.07.2025 09:46 — 👍 0 🔁 0 💬 0 📌 0

a couple of hours before my keynote, I went through an intense negotiation with the organisers (for over an hour) where we went through my slides and had to remove anything that mentions 'Palestine' or 'Israel' and replace 'genocide' with 'war crimes'

1/

08.07.2025 09:58 — 👍 1345 🔁 652 💬 37 📌 63

Unethical - if there are things in the work, intended or unintended, to obscure, manipulate, or influence the "reviewer", they are by definition unethical.

03.07.2025 22:15 — 👍 0 🔁 0 💬 0 📌 0

The simplest solution to "stop the clock" on the AI Act is to not use AI. You can't have it both ways, sorry. Want to drive? Get a license. Want to use AI? Get compliant.

03.07.2025 15:52 — 👍 0 🔁 0 💬 0 📌 0

Digital sovereignty can’t be bargained away

The European Commission has tools, public support and a mandate to act on Big Tech. Trading that away for short-term calm would be a costly mistake.

Do you think that Europe should bargain away its digital sovereignty to appease Trump and the broligarchy? Strong majorities in Germany, France, and Spain are against that (YouGov).

@coricrider.com and I have a better plan:

www.politico.eu/article/digi...

03.07.2025 07:00 — 👍 71 🔁 27 💬 2 📌 5

How US Firms Are Weakening the EU AI Code of Practice | TechPolicy.Press

Instead of giving in, the Commission must ensure that the Code reflects the intent of the AI Act and safeguards public interest, write Nemitz and Oueslati.

How #US Firms are weakening #EU #AI #Code of practice: By pressuring the European Commission to prioritize a few US firms over 1,000 stakeholders, the companies put the entire process at risk and lose credibility as actors of public interest. #AIAct #OpenAI #GAFAM www.techpolicy.press/how-us-firms...

30.06.2025 12:57 — 👍 10 🔁 13 💬 0 📌 0

Same. I've started using "social media" as my literature review source to get updates and know about work/stuff. So it's less personal and more professional.

02.07.2025 09:44 — 👍 1 🔁 0 💬 0 📌 0

I'll start with the obvious ones: data on device and the advertising identifier. I wish there was a penalty for such obvious lies 😪

02.07.2025 06:22 — 👍 2 🔁 0 💬 0 📌 0

Agree. Looks increasingly like the selling point is either to trigger the fear of social judgement (language), or being left behind (prestige), or to encourage not having to take responsibility (learning).

26.06.2025 12:16 — 👍 0 🔁 0 💬 0 📌 0

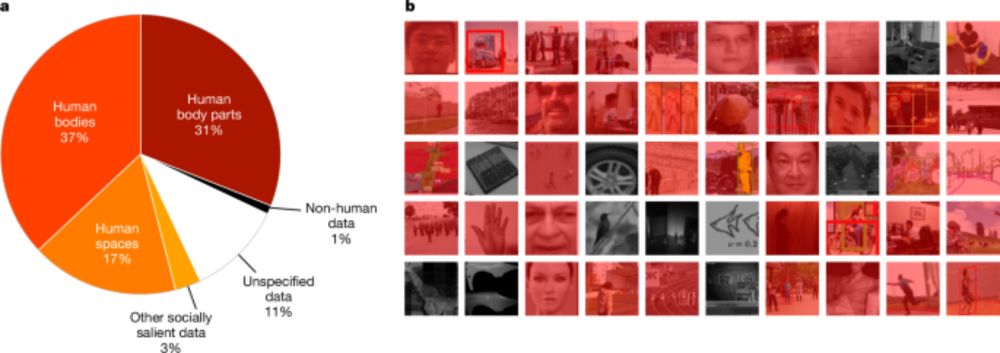

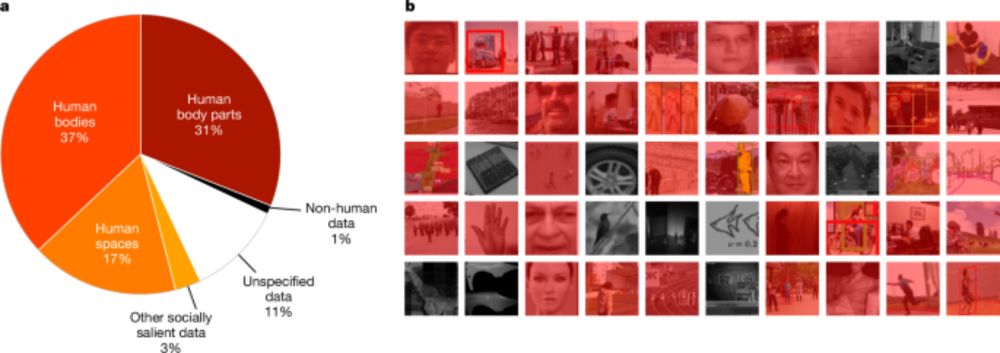

Computer-vision research powers surveillance technology - Nature

An analysis of research papers and citing patents indicates the extensive ties between computer-vision research and surveillance.

New paper hot off the press www.nature.com/articles/s41...

We analysed over 40,000 computer vision papers from CVPR (the longest standing CV conf) & associated patents tracing pathways from research to application. We found that 90% of papers & 86% of downstream patents power surveillance

1/

25.06.2025 17:29 — 👍 756 🔁 449 💬 24 📌 59

😲 this is becoming a worrying trend - there was also a hugely missing presence (through exclusion) of civil society in the AI Act GenAI code of conduct workshops

26.06.2025 09:07 — 👍 1 🔁 0 💬 0 📌 0

OSF

Draft article "Simple now, Complex later: The Questionable Efficacy of Diluting GDPR Article 30(5)" that questions the Commission's proposal and shows this approach will not yield the intended results, and will lead to more compliance issues for organisations. doi.org/10.31235/osf...

14.06.2025 12:39 — 👍 2 🔁 1 💬 0 📌 0

So it wasn't just me! I called Vista the "frosted glass" look and was laughing when Apple launched "liquid glass". If it's anything like Vista, with the UX also changing, it will feel pretty for a week and then we will realise it's full of friction and wastes time. Hopefully not.

14.06.2025 07:38 — 👍 0 🔁 0 💬 0 📌 0

Combine this with use of personal data to train models which could then contain it in pseudonymous form, and we're going to be in a pickle to fix the mess. We need to address that before it gets too complicated both legally and socially.

14.06.2025 07:32 — 👍 1 🔁 0 💬 1 📌 0

DP people also have diversity and factions ;) My personal worry is that instead of regulating processing of personal data we're now heading towards what looks more like PII, thereby losing a strong privacy foundation in US regulations.

14.06.2025 07:29 — 👍 1 🔁 0 💬 1 📌 0

Thanks for sharing. This is getting increasingly absurd. The relativity goes against the GDPR as it leads to weird cases such as processors not processing personal data and not being subject to the GDPR, not to mention the high risk of advertisers wanting to use this as a loophole.

13.06.2025 06:59 — 👍 1 🔁 0 💬 1 📌 0