AI researchers will literally negotiate $100 million comp packages by themselves but they won’t play poker for more than $50 buy-ins

30.08.2025 11:44 — 👍 11 🔁 0 💬 2 📌 0

GPT-5 Thinking isn’t perfect, but it’s the first AI model I can trust more than many common sources of truth on the internet.

25.08.2025 09:39 — 👍 7 🔁 0 💬 1 📌 0

People often ask me: will reasoning models ever move beyond easily verifiable tasks? I tell them we already have empirical proof that they can, and we released a product around it: OpenAI Deep Research.

13.05.2025 17:46 — 👍 6 🔁 0 💬 1 📌 0

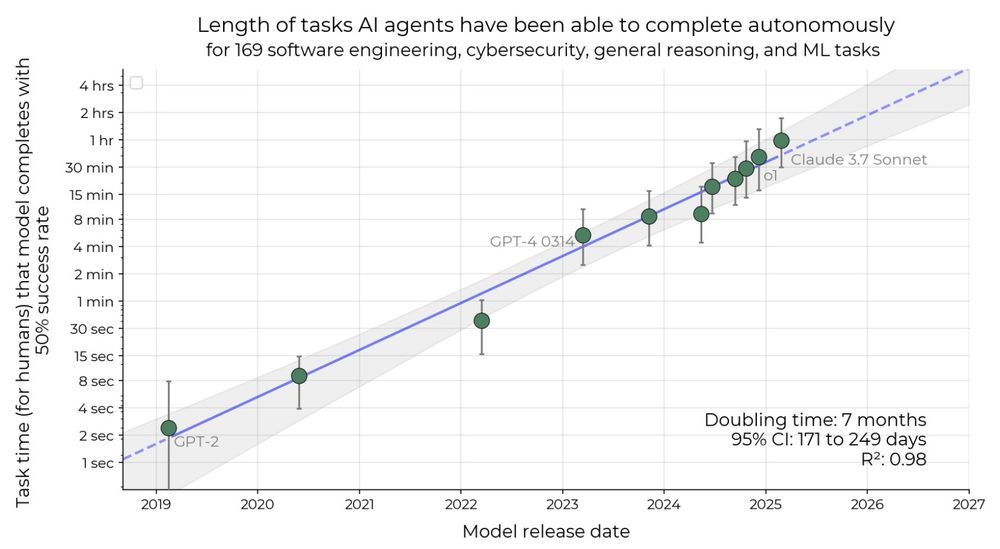

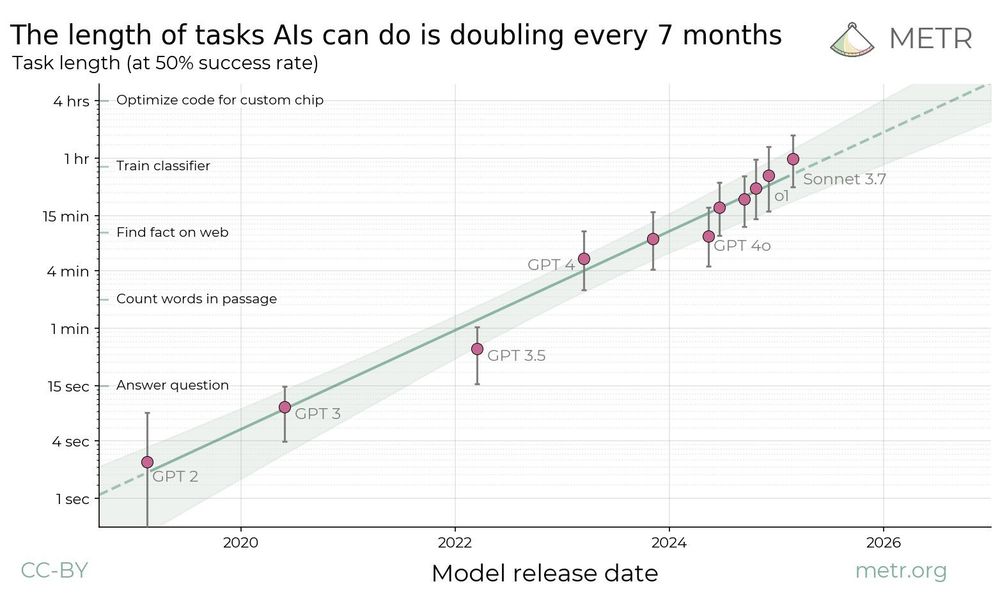

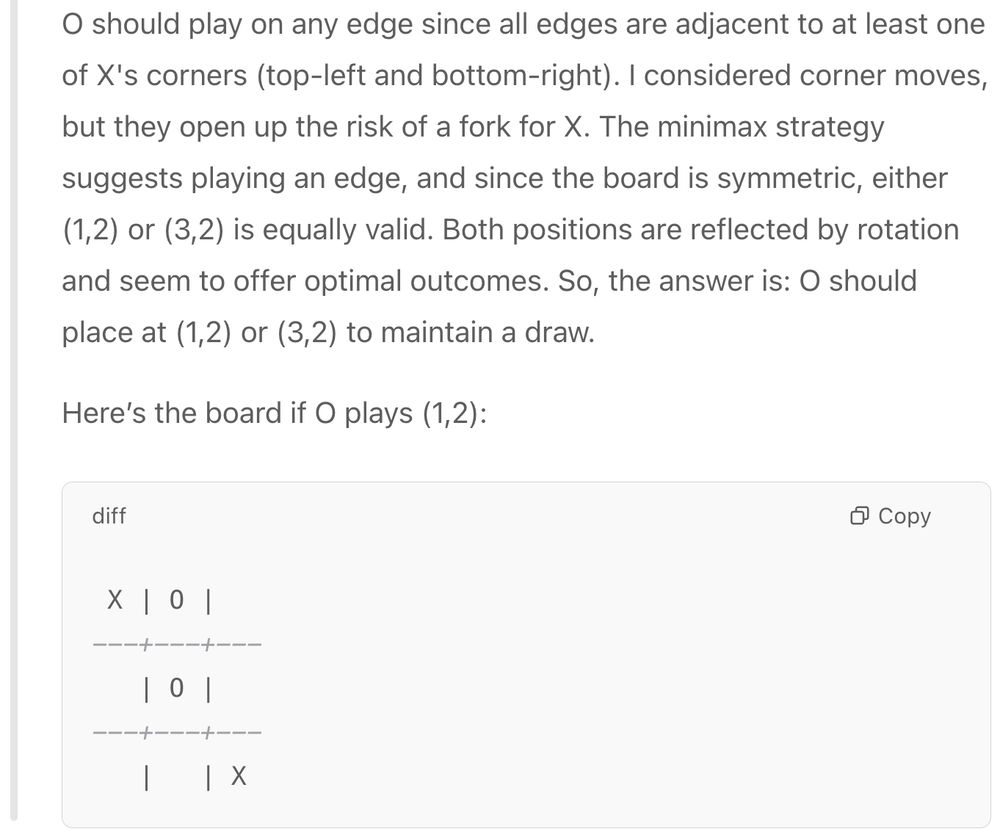

This METR "doubling every ∼7 mo" plot keeps popping up. It's striking, but let's be precise about what's measured: self‑contained code and ML tasks.

I think agentic AI may move faster than the METR trend, but we should report the data faithfully rather than over‑generalize to fit a belief we hold.

11.05.2025 17:48 — 👍 8 🔁 0 💬 1 📌 0

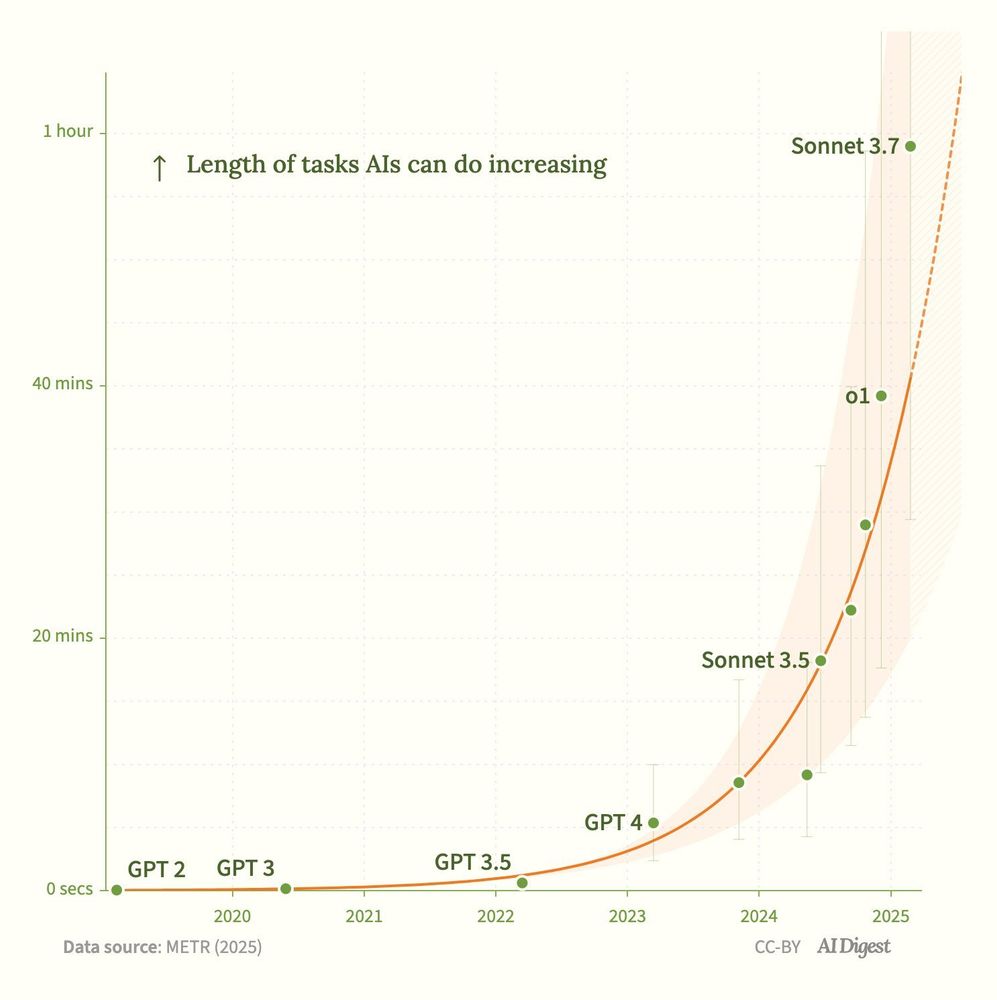

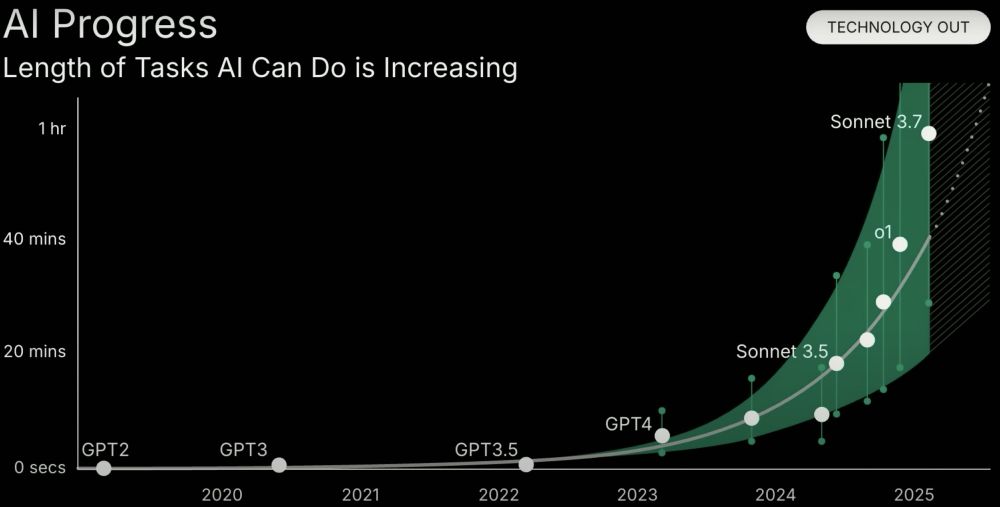

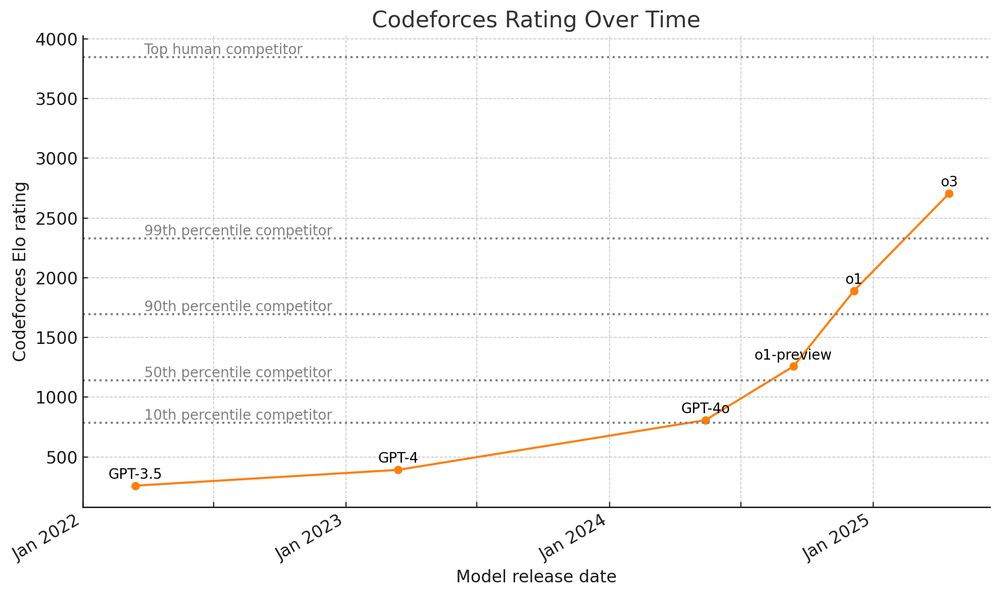

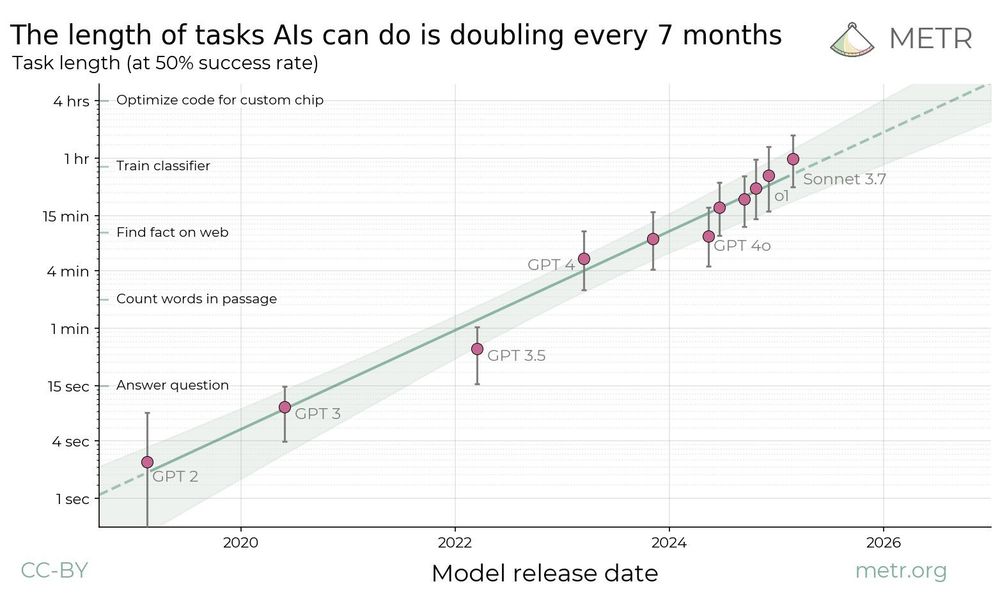

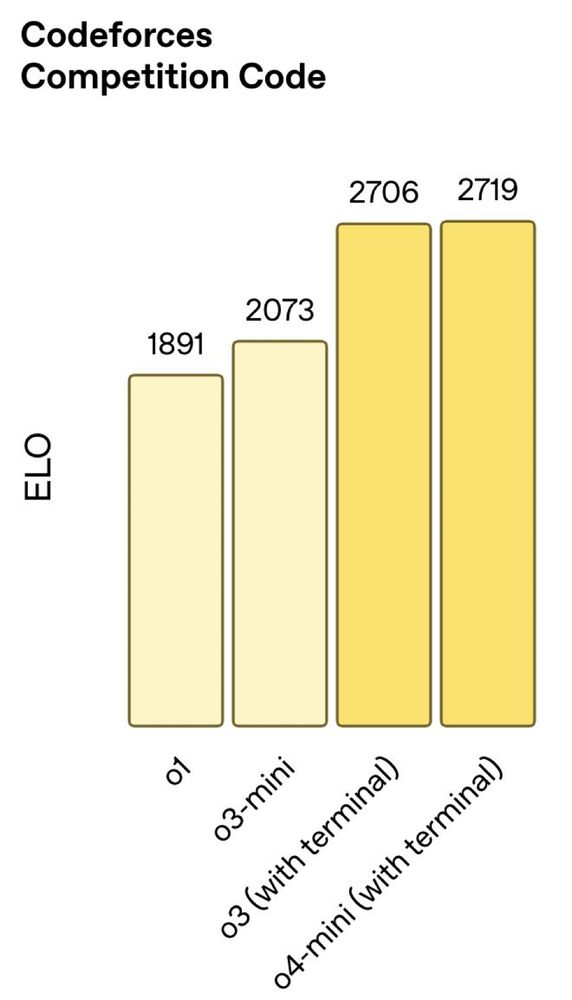

I recently made this plot for a talk I gave on AI progress and it helped me appreciate how quickly AI models are improving.

I know there are still a lot of benchmarks where progress is flat, but progress on Codeforces was quite flat for a long time too.

03.05.2025 19:37 — 👍 10 🔁 0 💬 0 📌 1

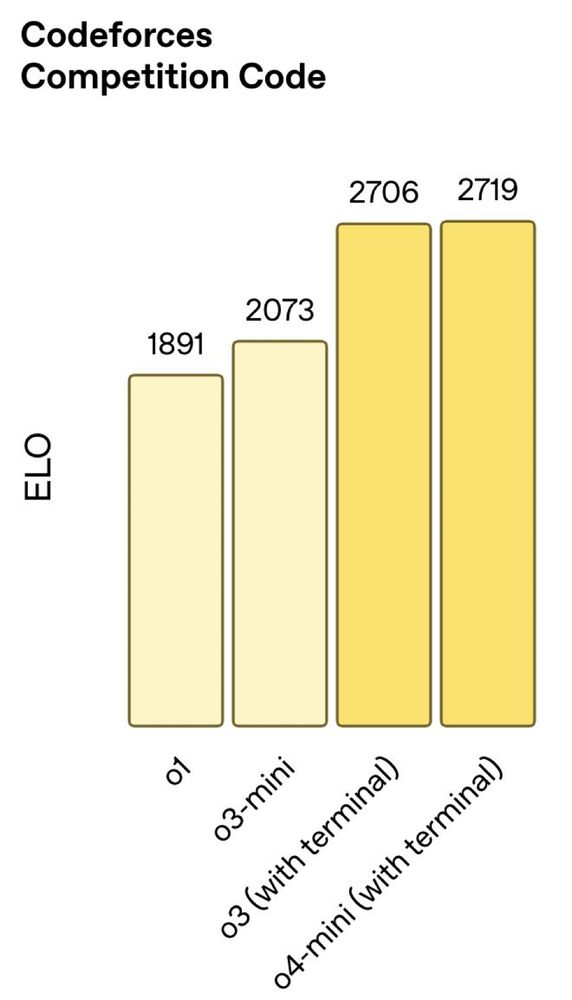

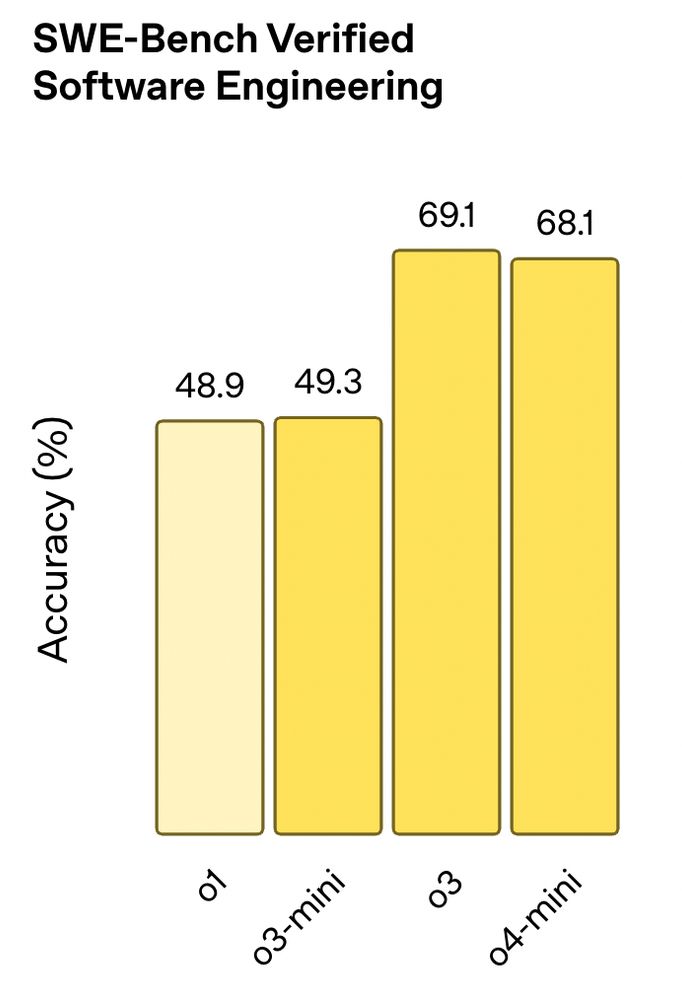

Today, we're releasing OpenAI o3/o4-mini. The eval numbers are SOTA (2700 Elo is among the top 200 competition coders).

But what I'm most excited about is the stuff we can't benchmark. I expect o3/o4-mini will aid scientists in their research and I'm excited to see what they do!

16.04.2025 17:33 — 👍 31 🔁 5 💬 4 📌 1

I worked in quant trading for a year after undergrad, but didn't want my lifetime contribution to humanity to be making equity markets marginally more efficient. Taking a paycut to pursue AI research was my best life decision. Today, you don't even need to take a paycut to do it.

15.04.2025 14:03 — 👍 9 🔁 0 💬 1 📌 0

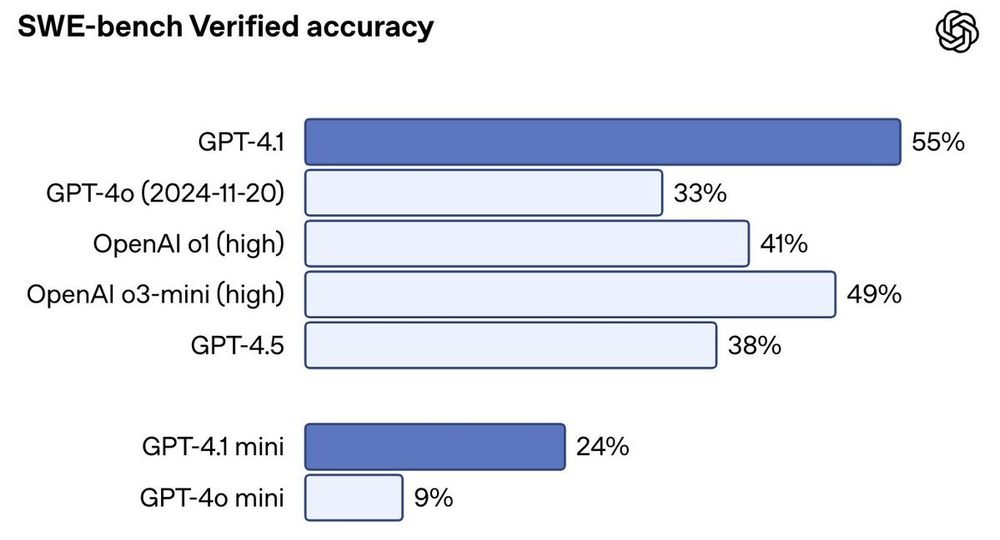

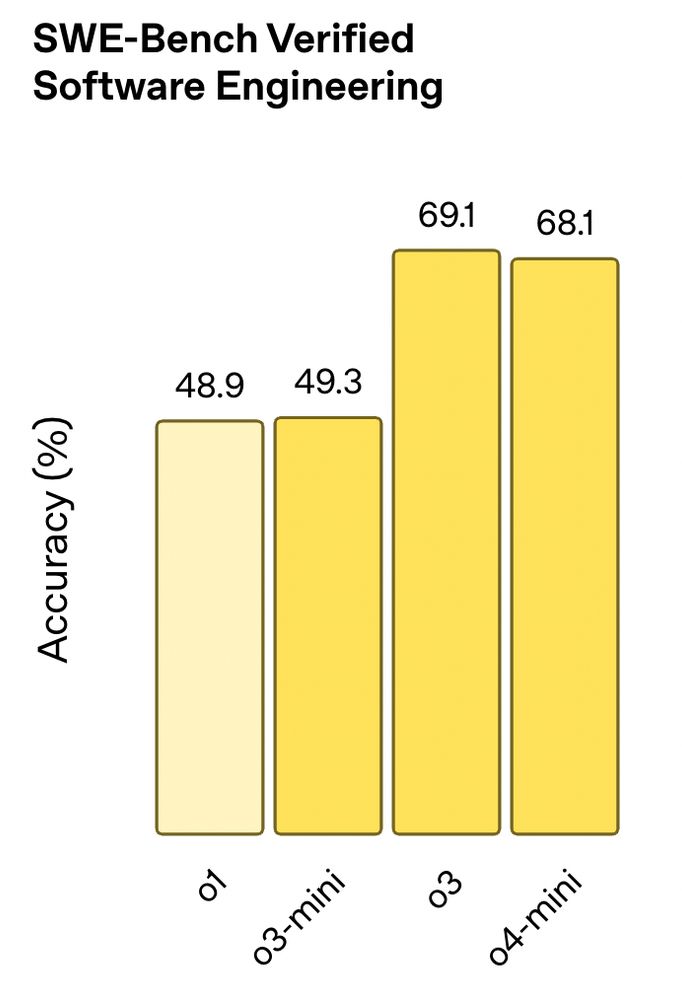

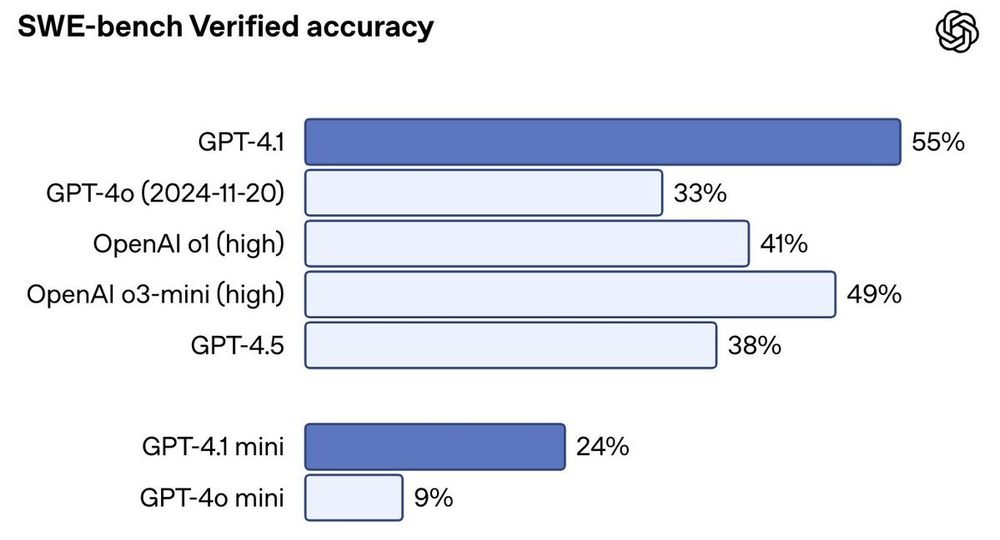

Our latest OpenAI model in the API, GPT-4.1, achieves 55% on SWE-Bench Verified *without being a reasoning model*. It also has 1M token context. Michelle Pokrass and team did an amazing job on this! Blog post with more details: openai.com/index/gpt-4-1/

(New reasoning models coming soon too.)

14.04.2025 17:40 — 👍 5 🔁 0 💬 1 📌 0

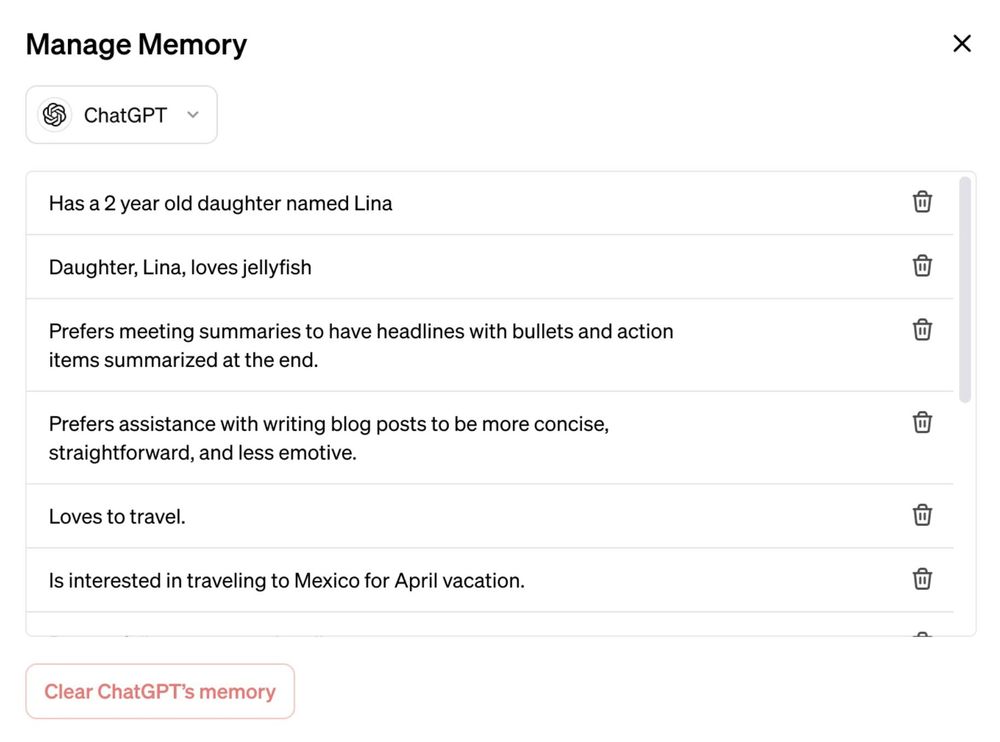

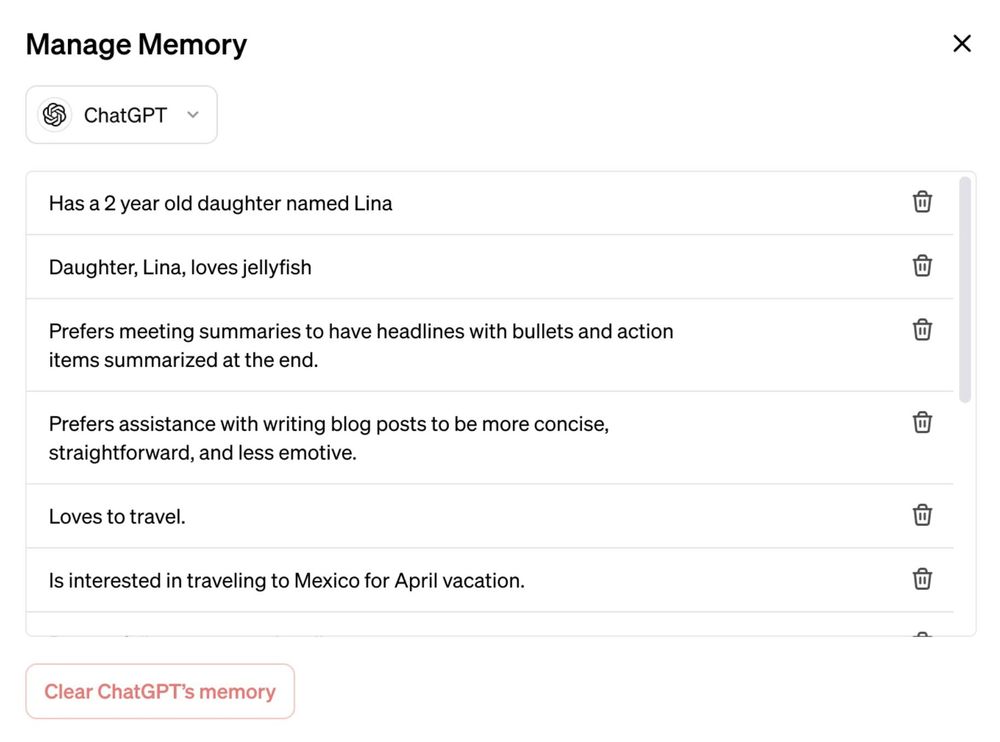

Today, OpenAI is starting to roll out a new memory feature to ChatGPT. It signals a shift from episodic interactions (call center) to evolving ones (colleague or friend).

Still a lot of research to do but it's a step toward fundamentally changing how we interact with LLMs openai.com/index/memory...

10.04.2025 17:47 — 👍 10 🔁 1 💬 0 📌 0

Listening to Reid Hoffman on @economist.com argue it's fine if AI replaces all jobs because we'll live like medieval nobility with "AI peasants" doing all the work. Weird choice of analogy. Remind me, Reid, how did that turn out for the nobles?

21.03.2025 16:27 — 👍 8 🔁 0 💬 2 📌 1

AI pioneer Richard Sutton just won the Turing Award. In 2019, Rich wrote a powerful essay that distills 75 years of AI into a simple "Bitter Lesson": general methods that scale with data and compute ultimately win. With the rise of AI agents it's an important lesson to keep in mind: bit.ly/4iLaTlh

06.03.2025 17:13 — 👍 10 🔁 1 💬 0 📌 0

Scaling pretraining and scaling thinking are two different dimensions of improvement. They are complementary, not in competition.

27.02.2025 20:33 — 👍 10 🔁 0 💬 2 📌 0

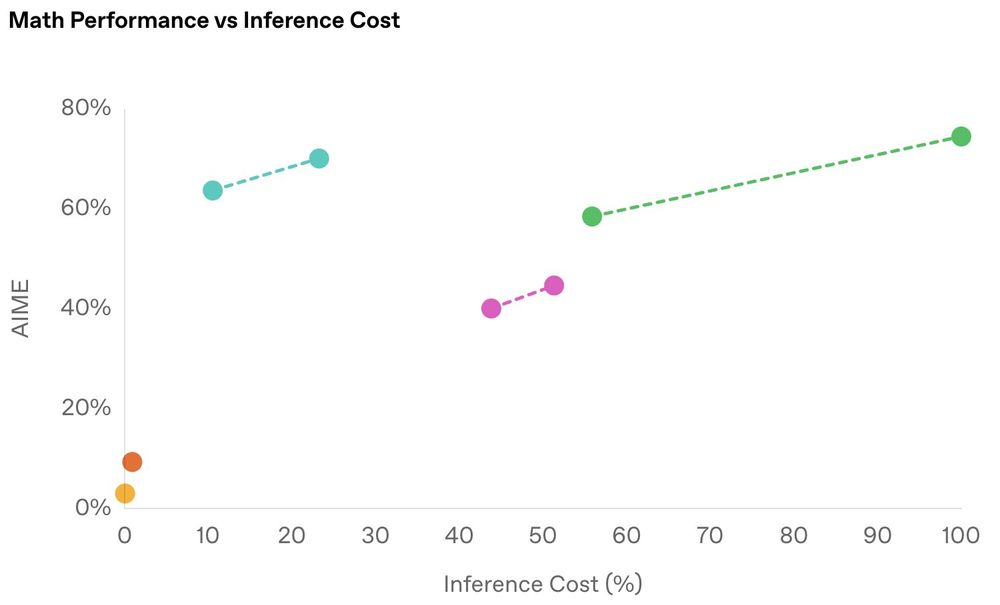

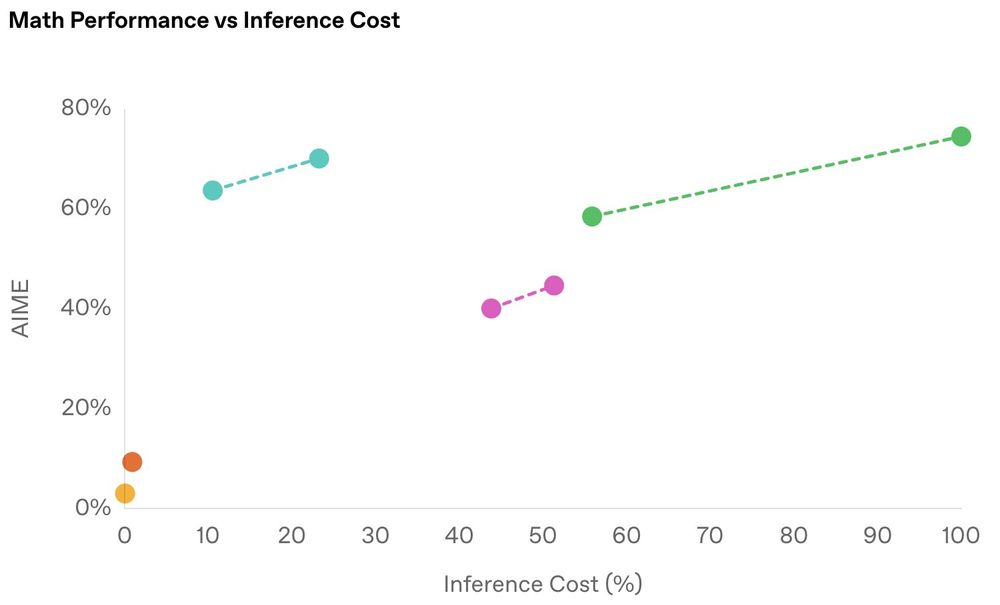

LLM evals are slow to adapt. MMLU/GSM8K continued to be reported long after they were obsolete. I think the next thing to go away will be comparing models on evals by a single number. Intelligence/$ is a much better metric. For example, I loved this plot from o1-mini's blog: openai.com/index/openai...

21.02.2025 02:56 — 👍 9 🔁 0 💬 1 📌 0

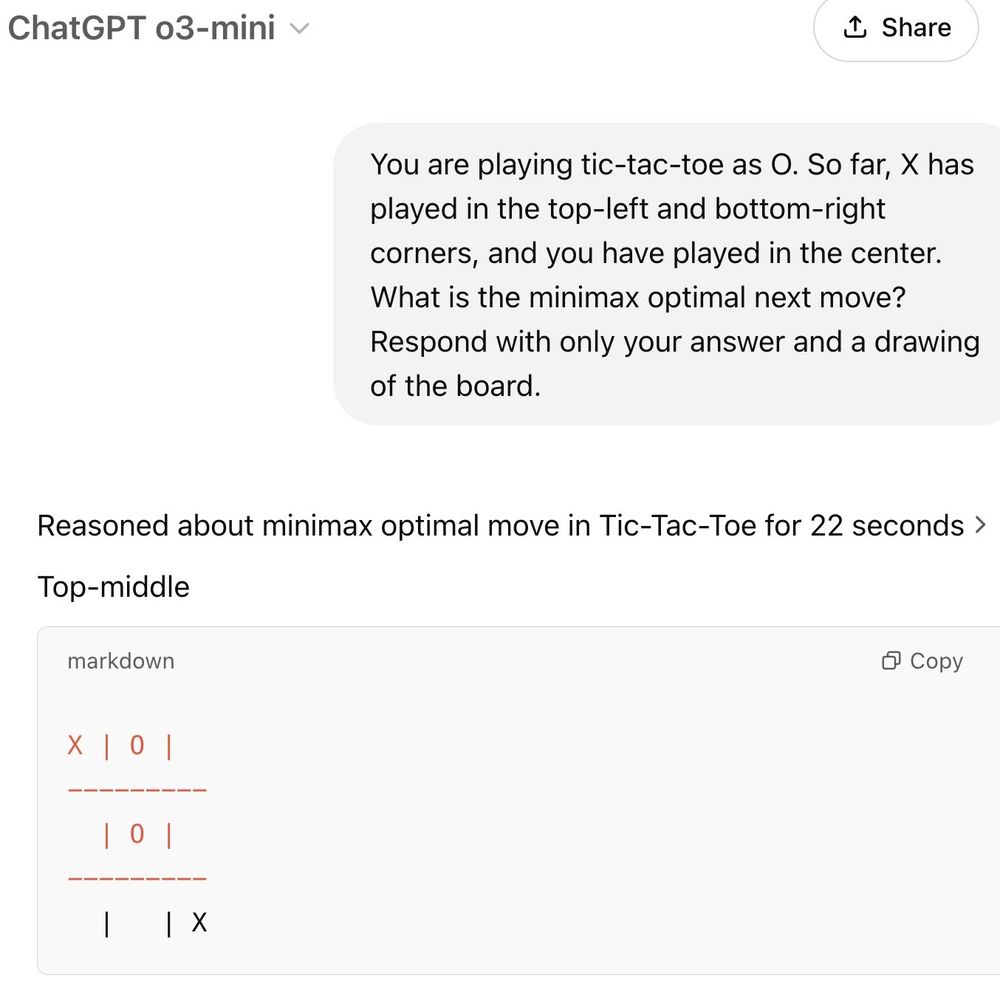

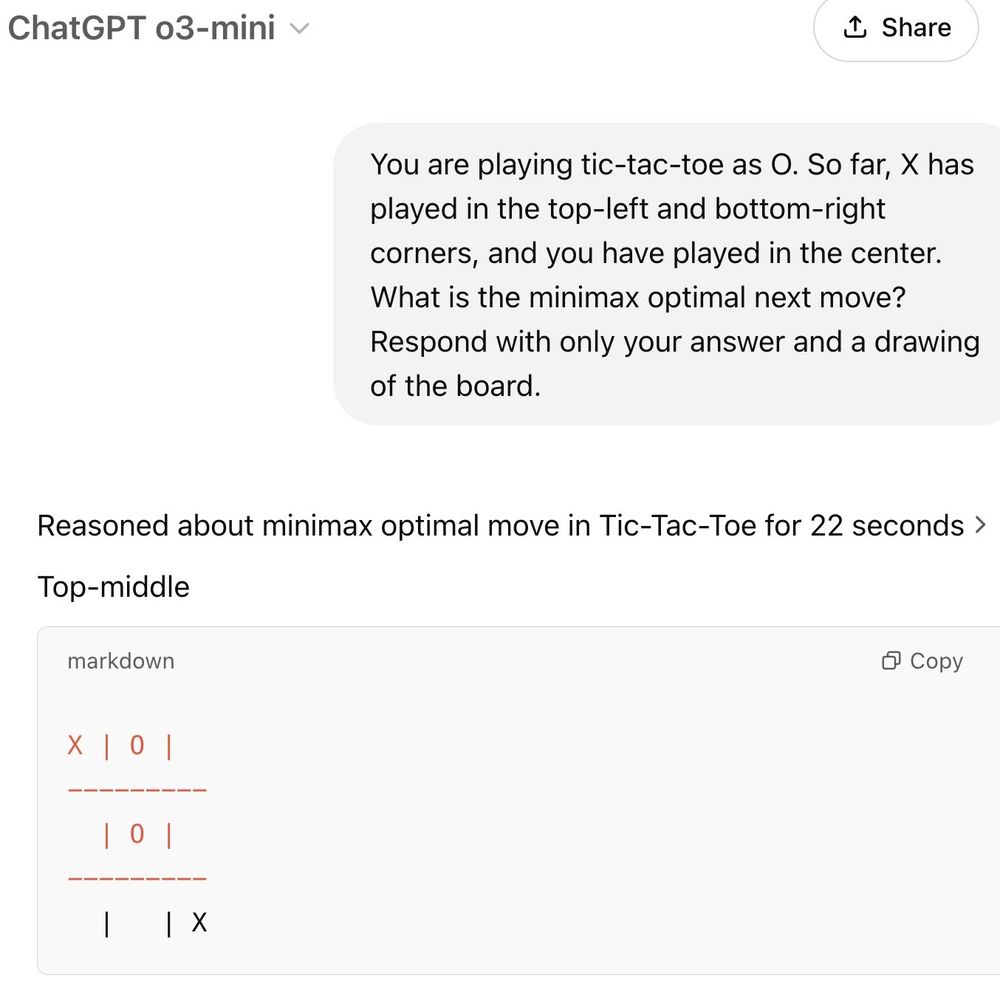

o3-mini is the first LLM released that can consistently play tic-tac-toe well.

The summarized CoT is pretty unhinged but you can see on the right that by the end it figures it out.

08.02.2025 22:31 — 👍 11 🔁 0 💬 1 📌 0

A lot of grad students have asked me how they can best contribute to the field of AI when they are short on GPUs. Making better evals is one thing I consistently point to.

06.02.2025 19:22 — 👍 5 🔁 0 💬 0 📌 0

There's a lot of talk of LLMs "saturating all the evals" but there are plenty of evals people could make where LLMs would do poorly:

- Beat a Zelda game
- Make a profit in a prediction market
- Write a stand-up set that's original and funny

I'm bullish on AI, but we're far from done.

06.02.2025 19:21 — 👍 10 🔁 0 💬 3 📌 1
